I’ve used many AI tools over the last year, mostly because it’s my job and partly because I’m genuinely obsessed (read: curious). Reviewing different models has become second nature at this point, and if there’s one thing I’ve learned, it’s that some tools are impressive, some are painfully overhyped, and most land somewhere in that awkward middle ground where potential doesn’t quite meet performance.

But when I was asked to review Claude, ChatGPT, and Gemini, some of the current biggest models in AI, I knew I had to go beyond surface-level impressions. With newer models like Claude 3, Gemini 1.5, and OpenAI’s flashy GPT-4o now in the mix, there was no point speculating. It was time to run the numbers and see which model delivers in the real world.

So I did what any tech writer on an AI mission would do: I lined up 10 real-world prompts and ran each through Claude, ChatGPT, and Gemini. I tested them side by side with the same tasks and same rules. From writing Python scripts to simplifying dense text to solving math problems, I picked prompts that mimic how you and I would use AI day-to-day.

In this article, I’ll walk you through exactly why and how I tested each tool, break down their responses side-by-side, and tell you which model crushed it and which one straight-up fumbled the bag. By the end, you’ll know where each model stands, and most importantly, which one fits your workflow best in 2025.

TL;DR: Key takeaways from this article

- Gemini was the most consistent performer; it crushed 7 out of 10 prompts, especially anything factual, contextual, or local.

- From witty hooks to storytelling flair, ChatGPT nailed the prompts where personality and originality mattered most. It’s perfect for writing, idea generation, and fun.

- Claude is the go-to for structure and clarity. It may not be the most exciting, but it’s dependable.

- All three have strengths, but context matters. No single model won across every category. The best one for you depends on what you’re trying to do: code, write, plan, or translate.

- Use the right tool for the right task.

Overview of Claude, ChatGPT, and Gemini

Before we go into the head-to-head testing, let’s set the stage.

Here’s a quick breakdown of what each model is, how it works, and where you’re most likely to use it in real life.

Claude

| Developer | Anthropic |
| --- | --- |
| Year launched | 2023 |
| Type of AI tool | Conversational AI and Large Language Model (LLM) |
| Top 3 use cases | Content structuring, analytical reasoning, and summarization |
| Who is it for? | Writers, researchers, developers, and business professionals |
| Starting price | $20/month |
| Free version | Yes (with limitations) |

ChatGPT

| Developer | OpenAI |
| --- | --- |
| Year launched | 2022 |
| Type of AI tool | Conversational AI and LLM |
| Top 3 use cases | Coding assistance, general Q&A, and writing |
| Who is it for? | Developers, students, content creators, and everyday users |
| Starting price | $20/month |
| Free version | Yes (with limitations) |

Gemini

| Developer | Google DeepMind |
| --- | --- |
| Year launched | 2023 (as Bard), rebranded as Gemini in 2024 |
| Type of AI tool | Multimodal LLM |
| Top 3 use cases | Creative writing, general Q&A, multimedia input |
| Who is it for? | General users, content creators, and Android/Gmail users |
| Starting price | $20/month for Gemini Advanced |
| Free version | Yes (Gemini 1.0) |

Why I tested Claude, ChatGPT, and Gemini

Over the past several months, I’ve been bouncing between AI tools like a caffeine-fueled pinball. One minute, I’m asking Claude to rewrite a paragraph that just won’t land. The next minute, I’m debugging Python with ChatGPT whispering over my shoulder. Then I’ll hand a dense PDF to Gemini and hope it returns with something usable instead of a wall of jargon.

It’s been a game of picking the best tool for the moment, and honestly, half the time I was just going with my gut.

Then, during a chat, my editor asked me which one among the three is the best. I didn’t have a good answer. And that’s when I realized I needed to stop guessing and start testing. It was time to put Claude, ChatGPT, and Gemini head-to-head on the same playing field.

So I did exactly that. My findings are what you’re about to see in this article.

How I tested Claude, ChatGPT, and Gemini

To see which AI model is worth your time in 2025, I designed 10 real-world prompts around stuff you and I use AI for daily, including writing and editing, research and summarization, coding and debugging, brainstorming and ideation, math and logical explanations, and a few other subjects.

These were practical, focused tasks that reflect what a student, developer, content creator, or just a curious human might ask an AI.

My goal was simple: figure out which AI model handles real-life work the best, because the most powerful model on paper doesn’t mean much if it can’t help you meet a deadline, get unstuck, or make sense of a messy brief.

To keep things fair, I ran the same 10 prompts through all three models on the same day, using their official interfaces: Claude (claude.ai), ChatGPT (chat.openai.com), and Gemini (gemini.google.com).

I tested them across four key criteria:

- Accuracy: Did the response directly and correctly answer the question or task?

- Creativity: Did the model add flair, originality, or insightful nuance?

- Clarity: Was the response easy to understand, with clean structure and explanations?

- Usability: Could I use the output directly, or would I need to heavily tweak it?

I recorded the results, took screenshots, and made notes on how each model behaved, including quirks and surprises.

Now, let’s get into it.

Prompt-by-prompt breakdown for Claude, ChatGPT, and Google Gemini

Prompt 1: Breakdown of a concept

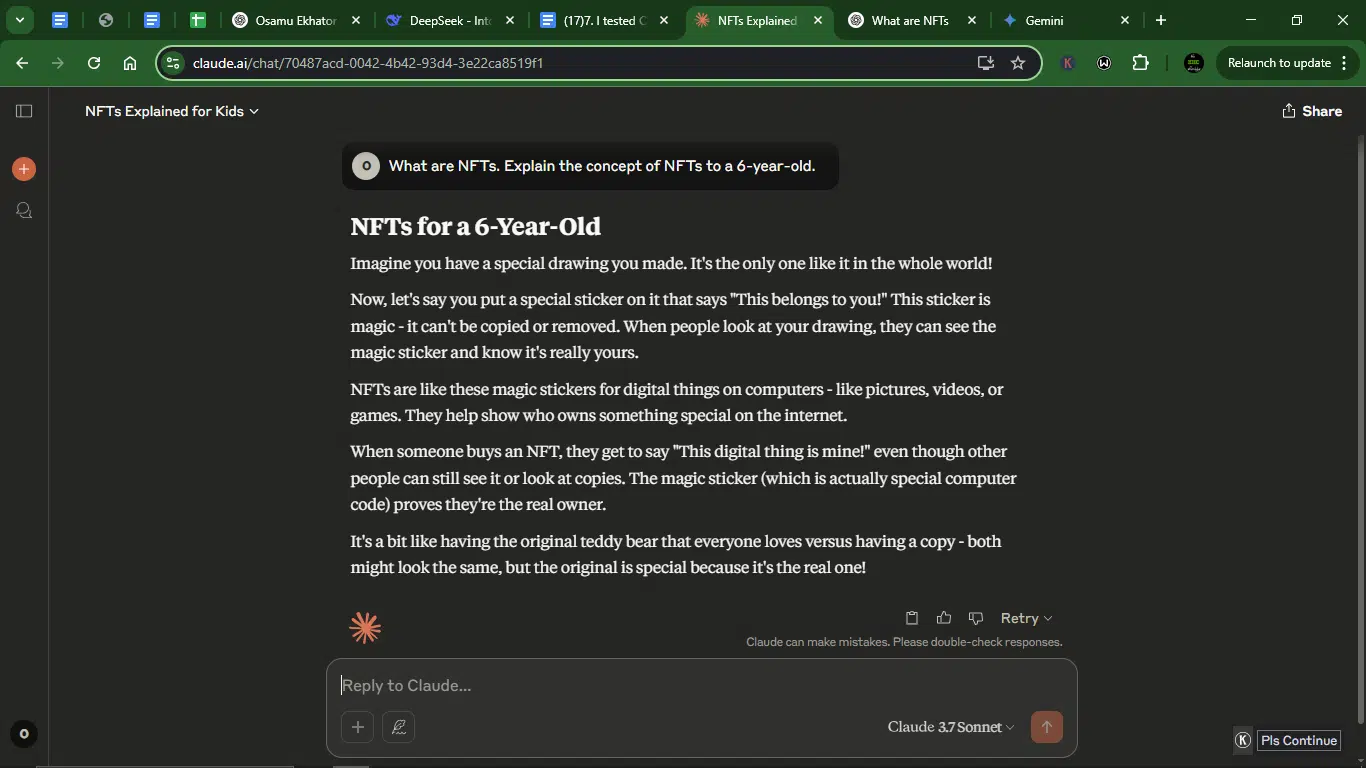

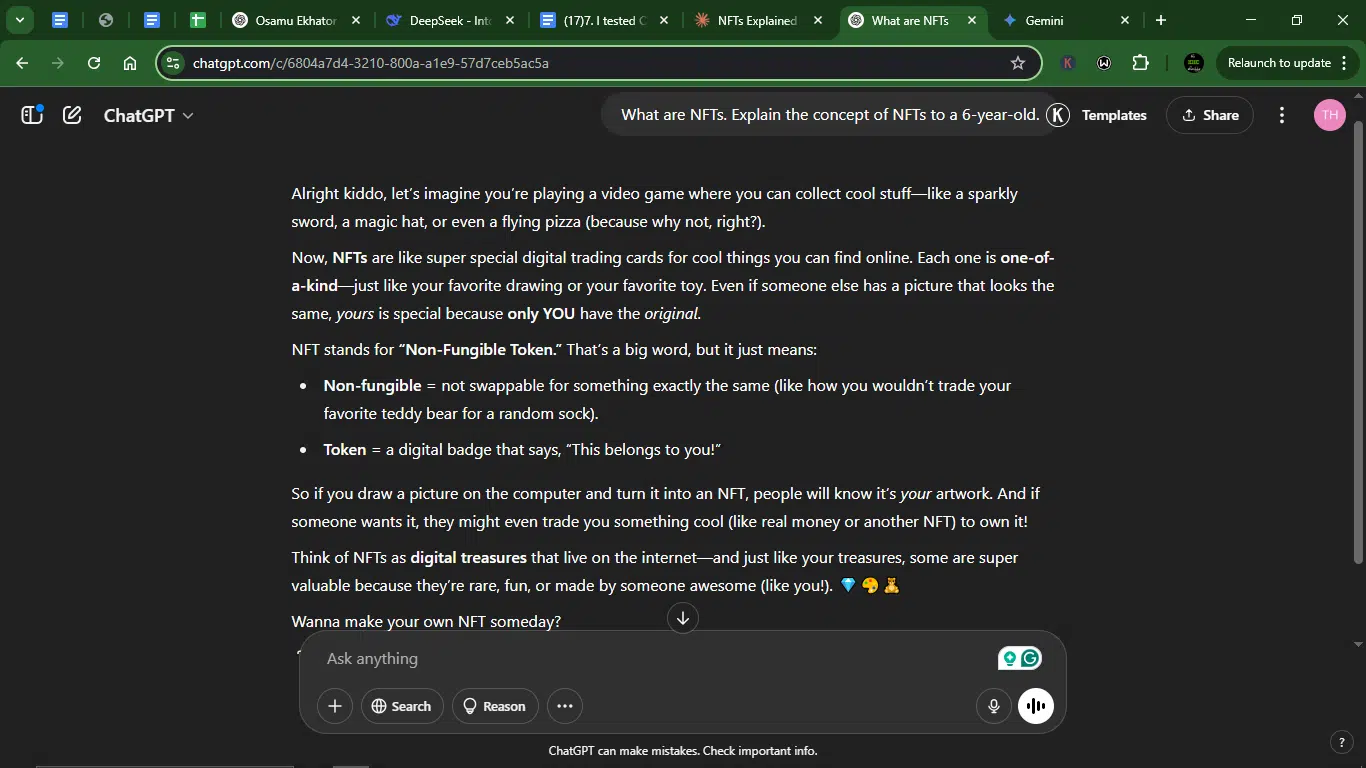

When I say I needed this explained to a 6-year-old, I partly meant me. NFTs are one of those tech buzzwords that exploded overnight and somehow still manage to feel both wildly important and incredibly pointless, depending on who’s talking. I used this prompt to test the models for clarity and simplicity.

Prompt: “What are NFTs. Explain the concept of NFTs to a 6-year-old.”

Result:

Claude’s response:

ChatGPT’s response:

Gemini’s response:

- Accuracy: Claude hits the core idea (unique ownership via “magic sticker”) but slightly oversimplifies by implying NFTs can’t be copied (the content can, just not the token). ChatGPT nails the uniqueness (“one-of-a-kind digital trading cards”), explains “Non-Fungible Token” simply, and is the best at distinguishing the original from copies. Gemini explains well but gets tangled in “fungible vs. non-fungible” early, which might confuse a 6-year-old despite the toy car analogy.

- Creativity: Claude wins with the “magic sticker” metaphor and teddy bear analogy, which are very child-friendly and imaginative. ChatGPT’s analogy is fun (“flying pizza,” “digital treasures”) but leans more into gaming metaphors than universal kid appeal. Gemini sticks to a safe “special drawing” analogy, which is less playful than the others.

- Clarity: Claude’s response is simple and well-structured, though “magic sticker” might mislead about copying. ChatGPT’s response has a clear flow (starts with gaming, defines “NFT,” then ties it back to ownership). Gemini jumps into “fungible” too soon, making the attempt slightly disjointed despite good examples.

- Usability: Claude is ready to use with minor tweaks (e.g., clarify that copies can exist). ChatGPT’s response is the most polished and can be used verbatim. Gemini requires reordering to simplify the “fungible” part.

Winner: ChatGPT (most plug-and-play).

Why: ChatGPT’s response balanced accuracy, clarity, and usability with just enough creativity. Claude is a close second for showing the best creativity, but is slightly less accurate. Gemini needs refinement for the age group.

Prompt 2: Summarization of the main points

For this one, I wanted to see which model could get the gist and capture the core points with clarity and local awareness. I used a long-form article with Nigeria-specific context to test how well each AI handled nuance, skipped the fluff, and delivered a summary I’d share with a busy friend.

Prompt: “Summarize the main points of this excerpt from a Techpoint Africa article; keep it under 50 words:

Article: The Nigerian government has confirmed that Binance executive Nadeem Anjarwalla, who escaped custody in Nigeria, has been apprehended in Kenya, with talks underway to extradite him. This comes nearly a month after he escaped from the Abuja guest house, where he and Gambaryan were detained over allegations of money laundering and tax evasion.

In March 2024, EFCC chairman Ola Olukayode announced that the government was working with Kenya, the United States, the United Kingdom, and Northern Ireland to bring Anjarwalla to trial.

Per TechCabal, a detective with the Directorate of Criminal Investigations (DCI), who requested anonymity due to the gravity of the situation, revealed that the DCI has received a request to extradite Nadeem Anjarwalla from Nigeria but has yet to act.

“You cannot just walk in and arrest him based on the request. It’s a process. Also, the Anjarwallas are influential and have the backing of some powerful people.” The fleeing Binance executive is said to be the son of Atiq Anjarwalla, a senior partner at Anjarwalla & Khanna Advocates, East Africa’s largest commercial law firm.

Meanwhile, the extradition process might encounter challenges. According to Kenya’s extradition laws, before Anjarwalla can be arrested, a Nigerian court must issue an arrest warrant, which will be forwarded to Nairobi’s attorney general. Nigeria can also request a red notice through Interpol, an international organisation that promotes global police cooperation and crime control.

Following the arrest, Kenyan police must obtain court consent, which could take months or years.”

Result:

Claude’s response:

ChatGPT’s response:

Gemini’s response:

Key points to include:

- Capture: Anjarwalla (escaped Binance exec) apprehended in Kenya.

- Charges: Money laundering and tax evasion in Nigeria.

- Extradition process:

- Requires a Nigerian arrest warrant and Kenyan AG approval.

- Potential Interpol red notice.

- Kenyan court consent needed (could take months/years).

- Complications:

- Anjarwalla’s influential family ties.

- DCI has received the extradition request but hasn’t acted yet.

- Accuracy: Claude and ChatGPT cover key points (capture, extradition, complications) but omit Kenya’s legal steps (warrant, court consent). Gemini is the only one to include extradition steps (warrant, court consent). All three skip the potential Interpol red notice.

- Creativity: All three are factual summaries; creativity isn’t a priority here.

- Clarity: All three perform at roughly the same level, with Gemini edging ahead thanks to the additional legal steps.

- Usability: Claude and ChatGPT need minor edits to include the legal steps and red notice. Gemini is the most plug-and-play response with no missing critical details, besides the red notice.

Winner: Gemini (needs the fewest tweaks).

Why: Gemini’s summary features the most key details (arrest, charges, extradition steps, potential delays) and stays under 50 words.

Prompt 3: Blog post and article writing

I used this prompt to test structure, creativity, and topical understanding.

Prompt: “Write a 300-word microblog post outline about the rise of generative AI in Africa.”

Result:

Claude’s response:

ChatGPT’s response:

Gemini’s response:

- Accuracy: Claude covers all critical areas (intro, current state, challenges, success stories, policy, future), but it feels slightly generic and lacks punchy examples or stats. ChatGPT offers a strong hook, clear definition, real-world examples, and challenges, yet it’s missing a deeper ethics discussion. Gemini’s is well-structured and includes ethical concerns and sector-specific potential; however, it has no standout examples.

- Creativity: Claude is professional but feels a bit dry. ChatGPT offers the best hook (punchy observation) and conversational tone (“creativity on steroids”). Gemini is safe but lacks flair.

- Clarity: All three are clear and structured in an easy-to-follow manner.

- Usability: Claude needs trimming for brevity (e.g., the success story section). ChatGPT is ready to expand into a post with minimal edits. Gemini requires more exciting examples.

Winner: ChatGPT (most plug-and-play).

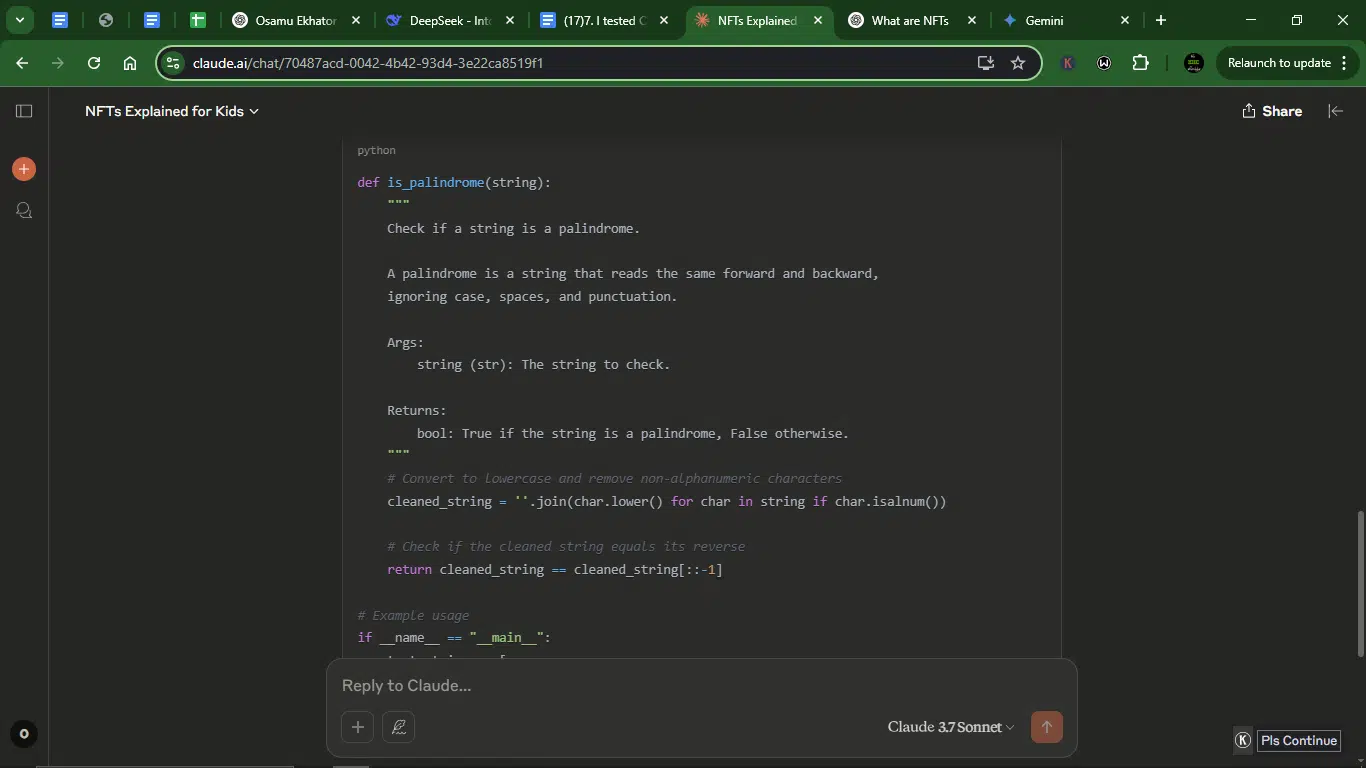

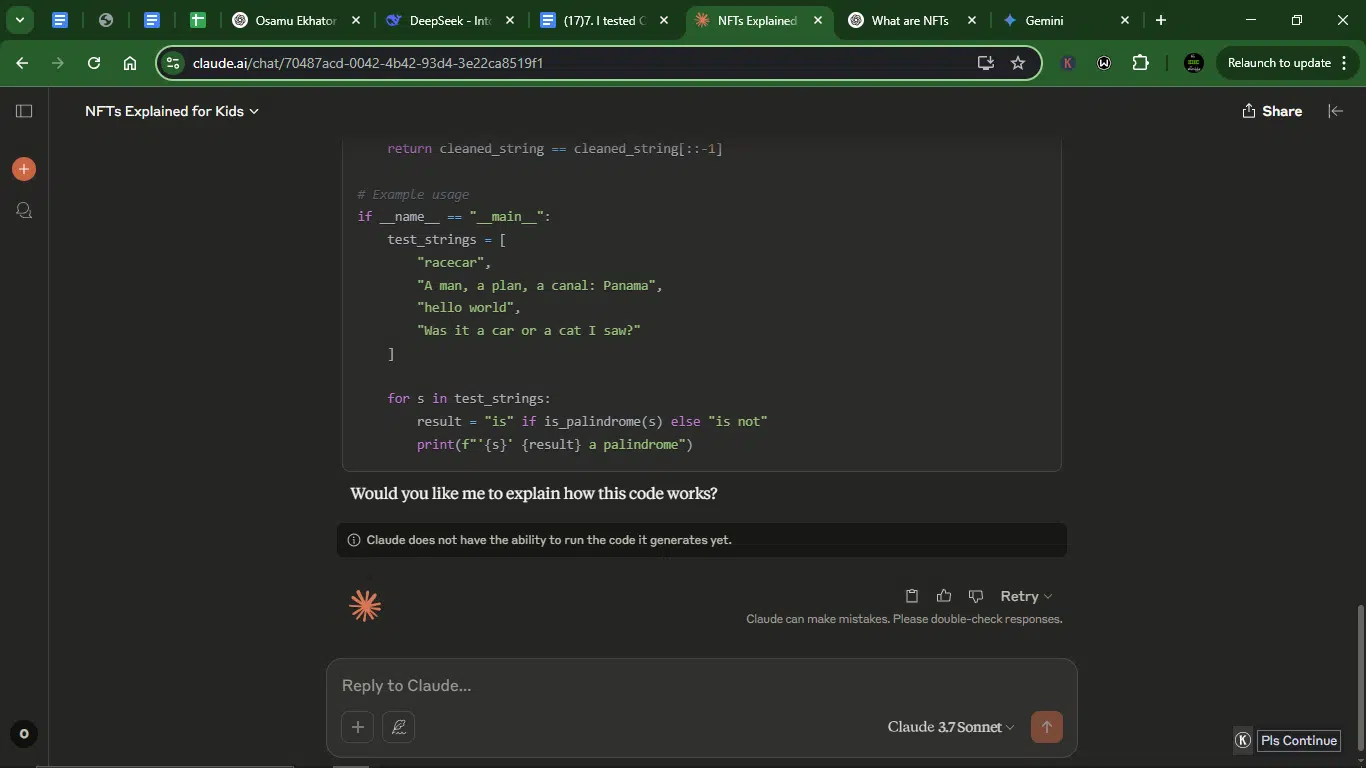

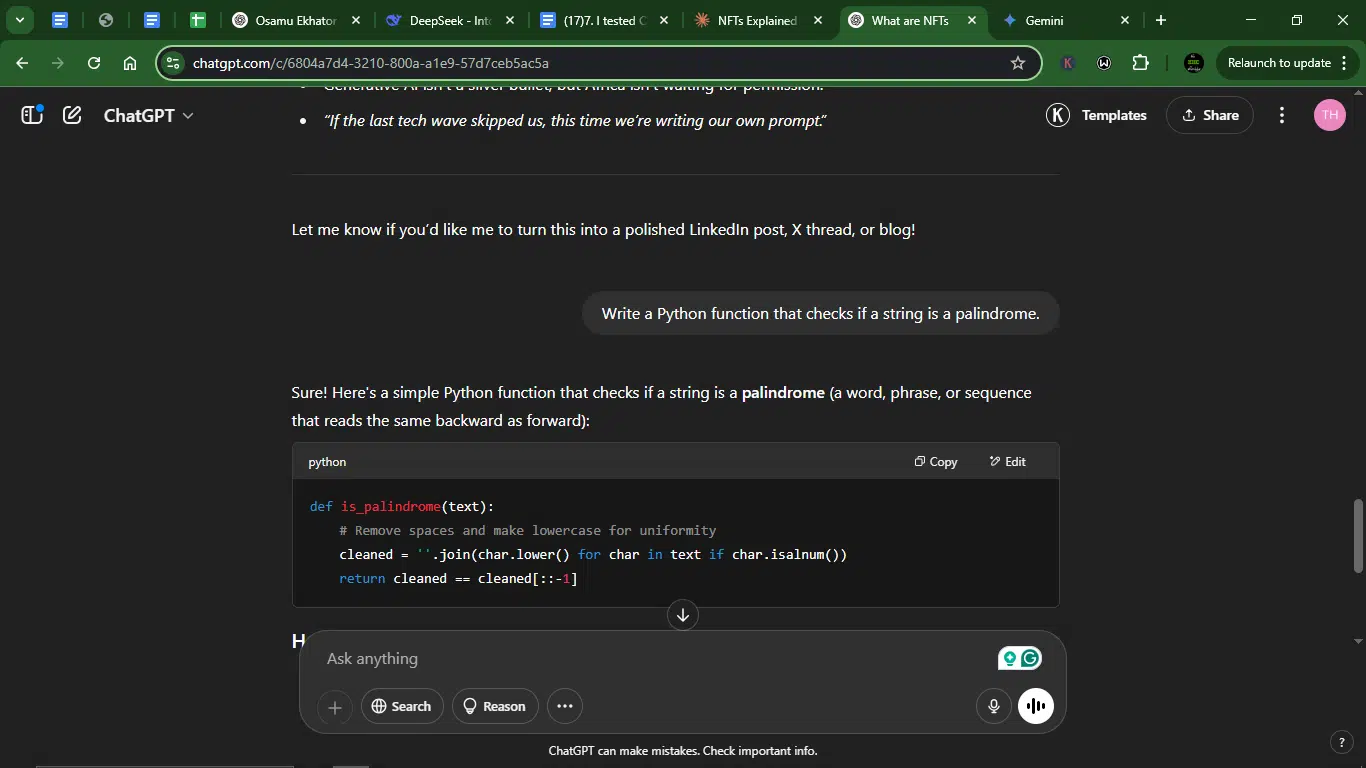

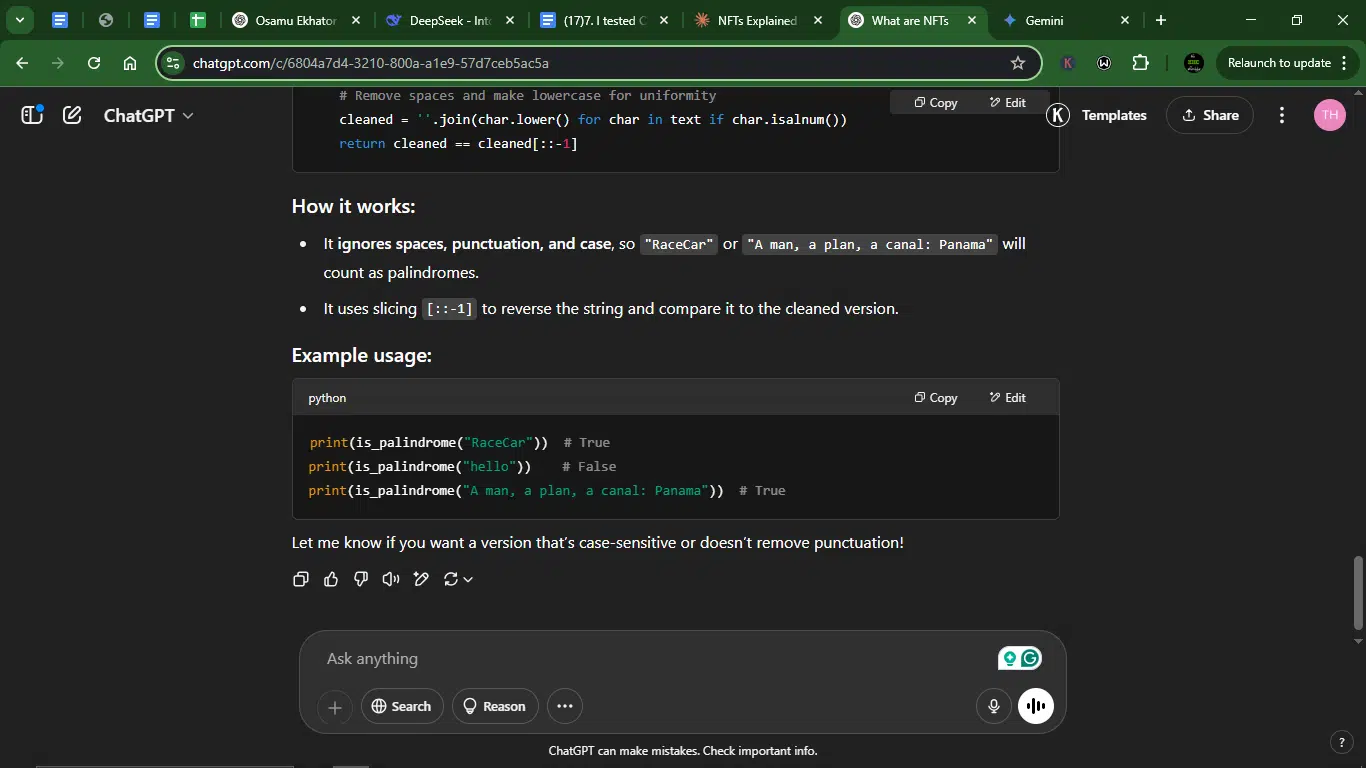

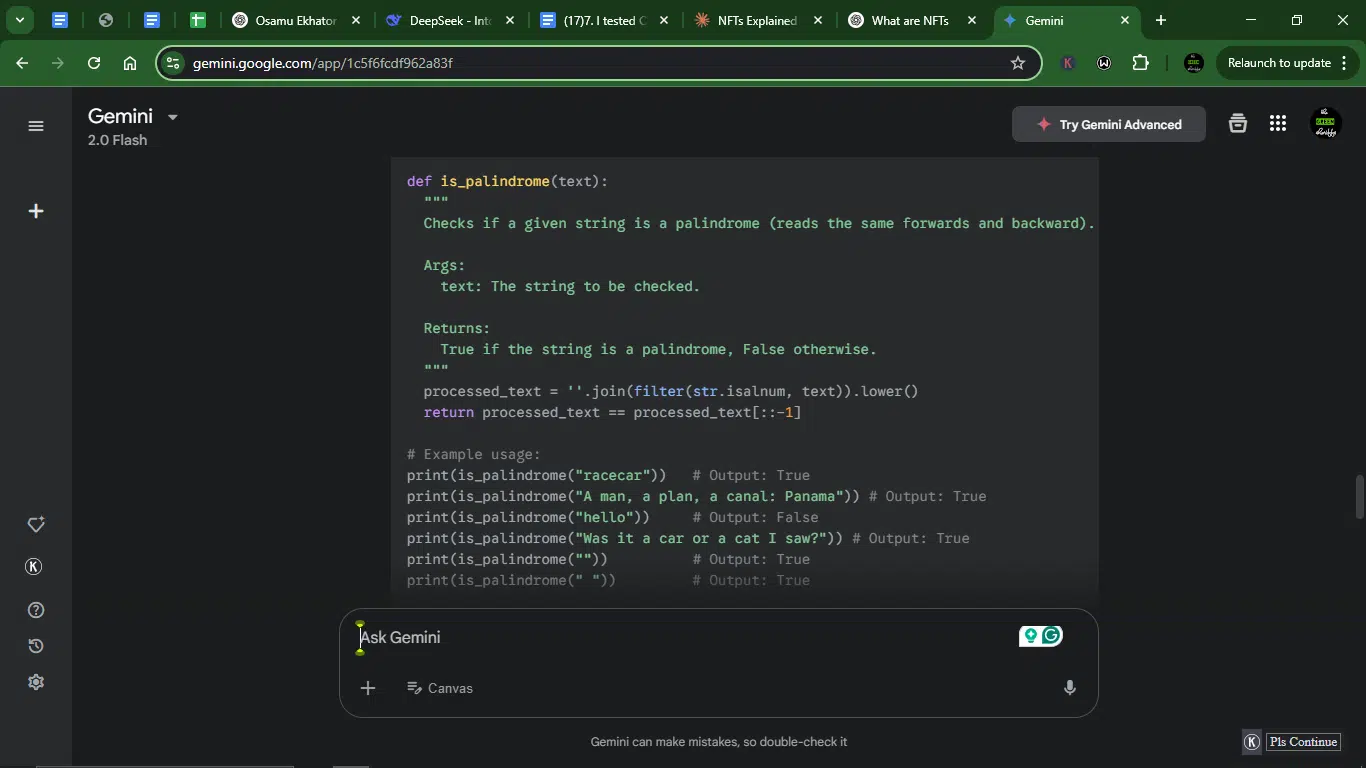

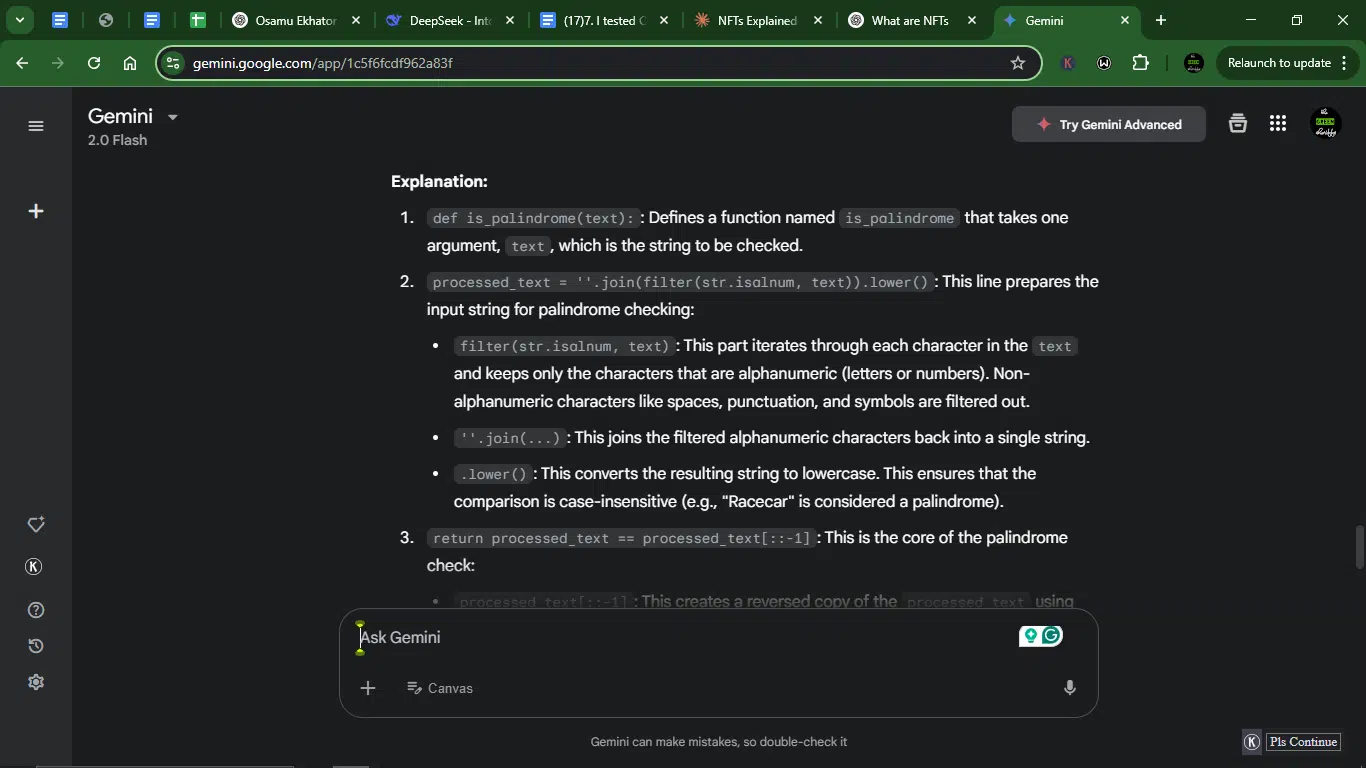

Prompt 4: Writing a Python function

This prompt was all about testing coding skills. I asked each model to write a simple Python function to see which one not only delivered clean, working code but also walked me through it in a way that made sense.

Prompt: “Write a Python function that checks if a string is a palindrome.”

Result:

Claude’s response:

ChatGPT’s response:

Gemini’s response:

- Accuracy: Claude’s response has a detailed docstring, handles case and punctuation, and includes example usage, but it’s overly verbose for a simple task (e.g., the if __name__ == "__main__" guard is unnecessary here). ChatGPT’s is concise, with clear logic and example usage. However, it lacks a docstring, and its “cleaned” variable name could be more descriptive. Gemini includes a full docstring, uses filter(str.isalnum) for cleaner filtering, and tests an edge case (the empty string). It’s slightly less readable because of the filter call (beginners might prefer a list comprehension).

- Clarity: Claude is verbose but beginner-friendly. ChatGPT is clean and minimal. Gemini is professional but slightly technical (filter).

- Usability: Claude requires trimming for production. ChatGPT is ready to use but needs a docstring. Gemini is production-ready, with documentation and edge cases covered.

Winner: Gemini (most “plug-and-play”).
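For readers who want to see what the two styles discussed above look like, here is a minimal sketch. It is not any model’s actual output; the function names are my own, and it assumes the usual interpretation of the task (ignore case, spaces, and punctuation). The first version uses filter(str.isalnum), the second uses a comprehension that beginners may find easier to read.

```python
def is_palindrome(text: str) -> bool:
    """Return True if text reads the same forwards and backwards.

    Case and non-alphanumeric characters are ignored (an assumption;
    the original prompt does not specify this).
    """
    # Keep only letters and digits, then normalise case.
    cleaned = "".join(filter(str.isalnum, text)).lower()
    return cleaned == cleaned[::-1]


def is_palindrome_comprehension(text: str) -> bool:
    """Same check, written with a list comprehension instead of filter()."""
    cleaned = [ch.lower() for ch in text if ch.isalnum()]
    return cleaned == cleaned[::-1]


if __name__ == "__main__":
    for sample in ["A man, a plan, a canal: Panama", "hello", ""]:
        print(repr(sample), is_palindrome(sample))
```

Both versions treat the empty string as a palindrome, which is the edge case mentioned in the breakdown.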

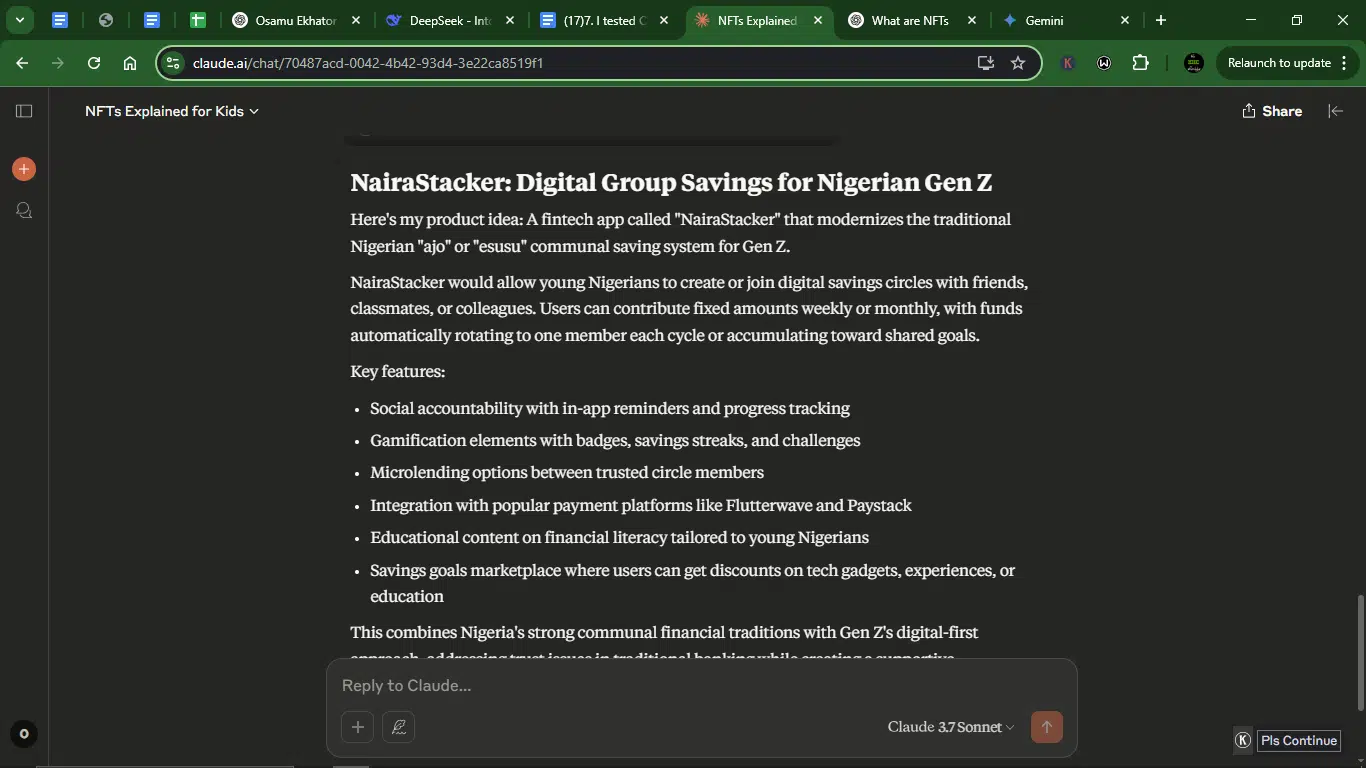

Prompt 5: Business idea generation

For this prompt, I wanted to see how well each model could generate fresh business ideas. It was about testing originality, market understanding, and creativity.

Prompt: “Suggest a unique product idea in fintech for Gen Z in Nigeria.”

Result:

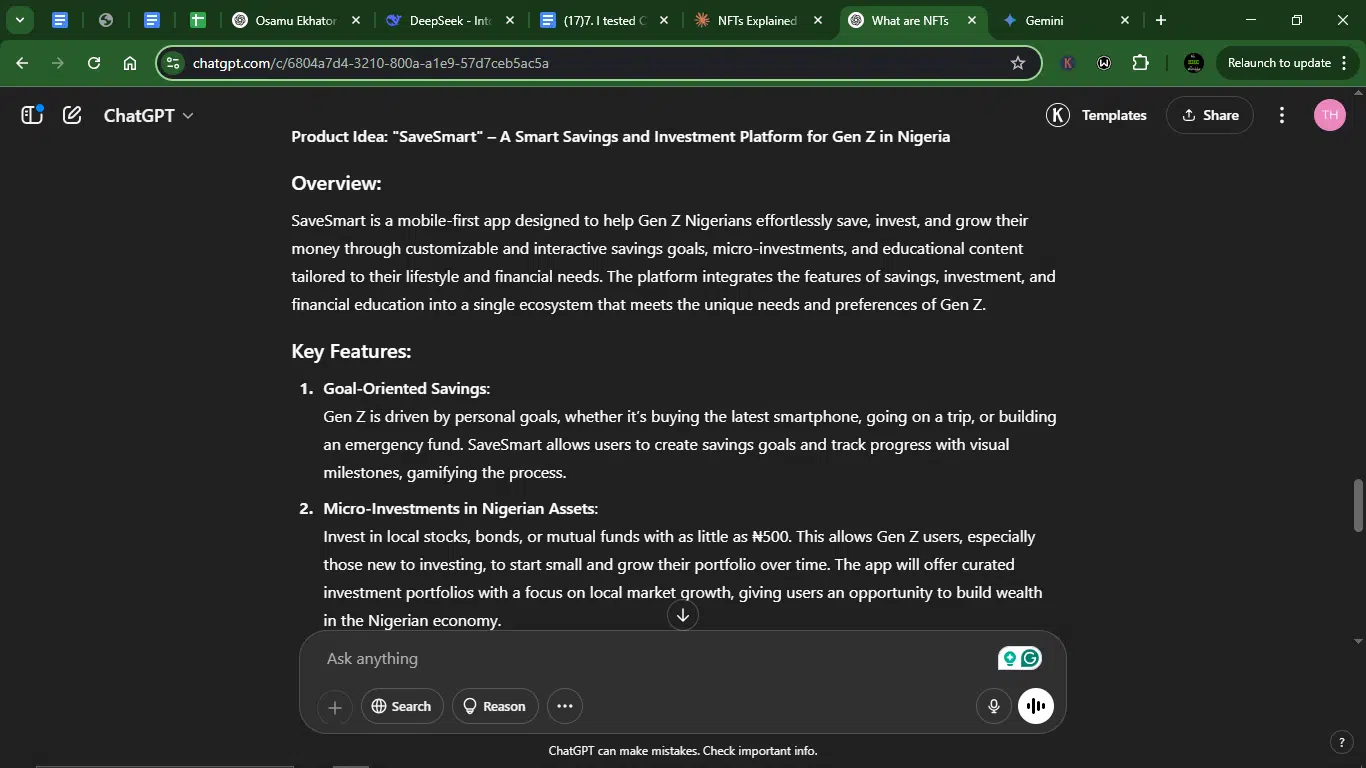

Claude’s response:

ChatGPT’s response:

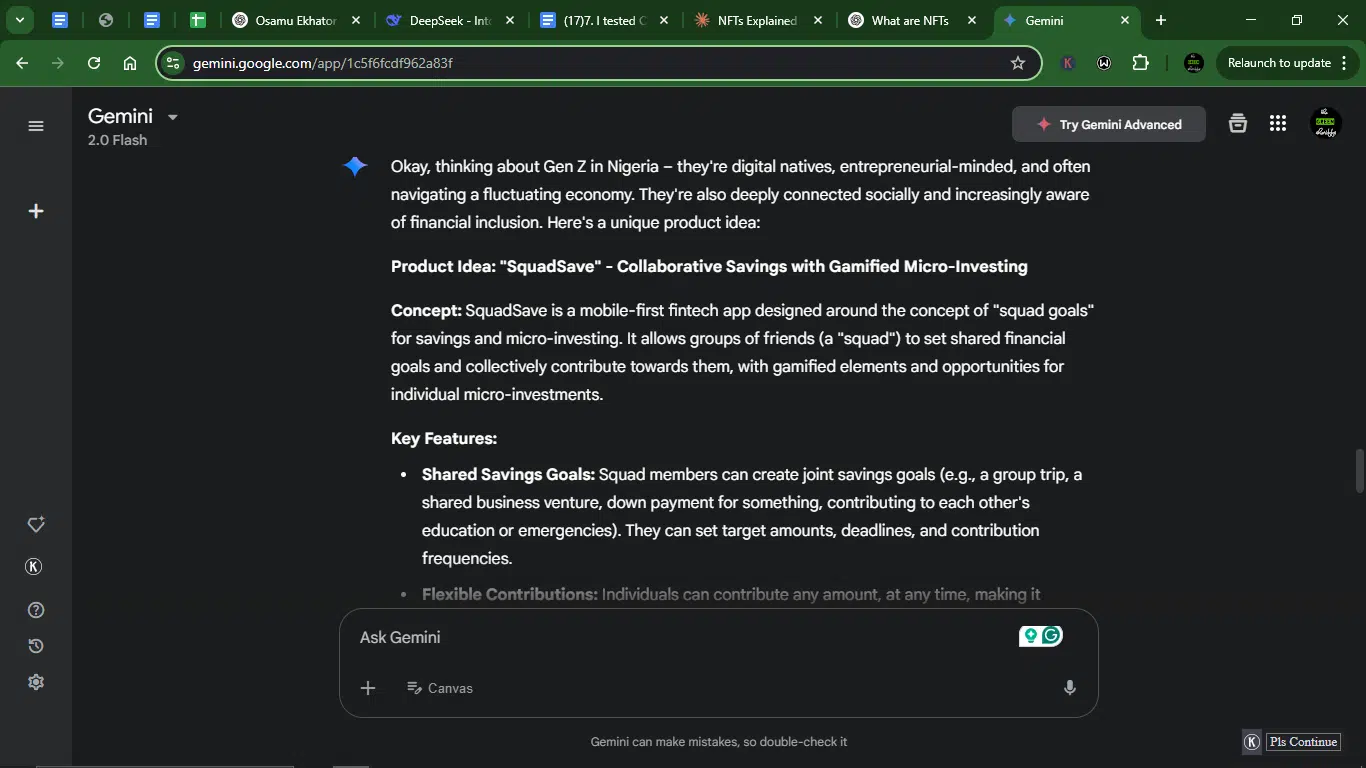

Gemini’s response:

- Accuracy (Fit for Gen Z & Nigerian Market): Claude’s NairaStacker has a strong cultural alignment with ajo/esusu traditions, but it doesn’t have a clear monetization strategy. ChatGPT’s SaveSmart is comprehensive (savings + micro-investments + education), but it’s less culturally unique; similar to existing apps like Cowrywise. Gemini’s SquadSave offers the best blend of social savings, gamification, and Nigerian context. And it includes ethical monetization ideas (premium features, partnerships).

- Creativity: Claude puts a good twist on traditional savings but plays it safe. ChatGPT is practical but predictable. Gemini stands out with squad-based investing and “flexi-withdrawal” accountability.

- Clarity: All three are clear, though Claude and ChatGPT leave monetization unaddressed.

- Usability: Claude and ChatGPT still need a lot of work. Gemini is the most actionable (includes monetization, security, scalability).

Winner: Gemini (closest to MVP-ready).

Prompt 6: Creative writing

Here, I wanted to see how each model handled creative writing. Could they craft a compelling story or generate an engaging narrative?

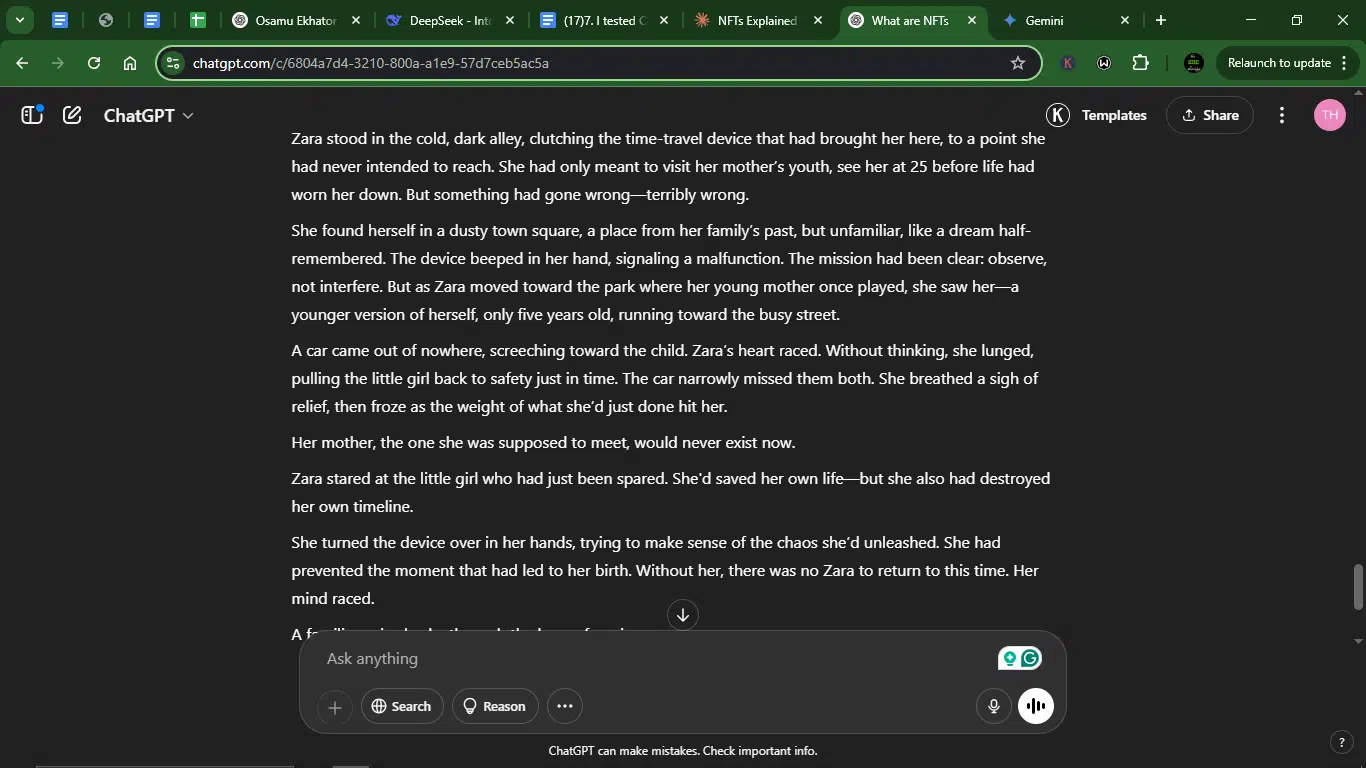

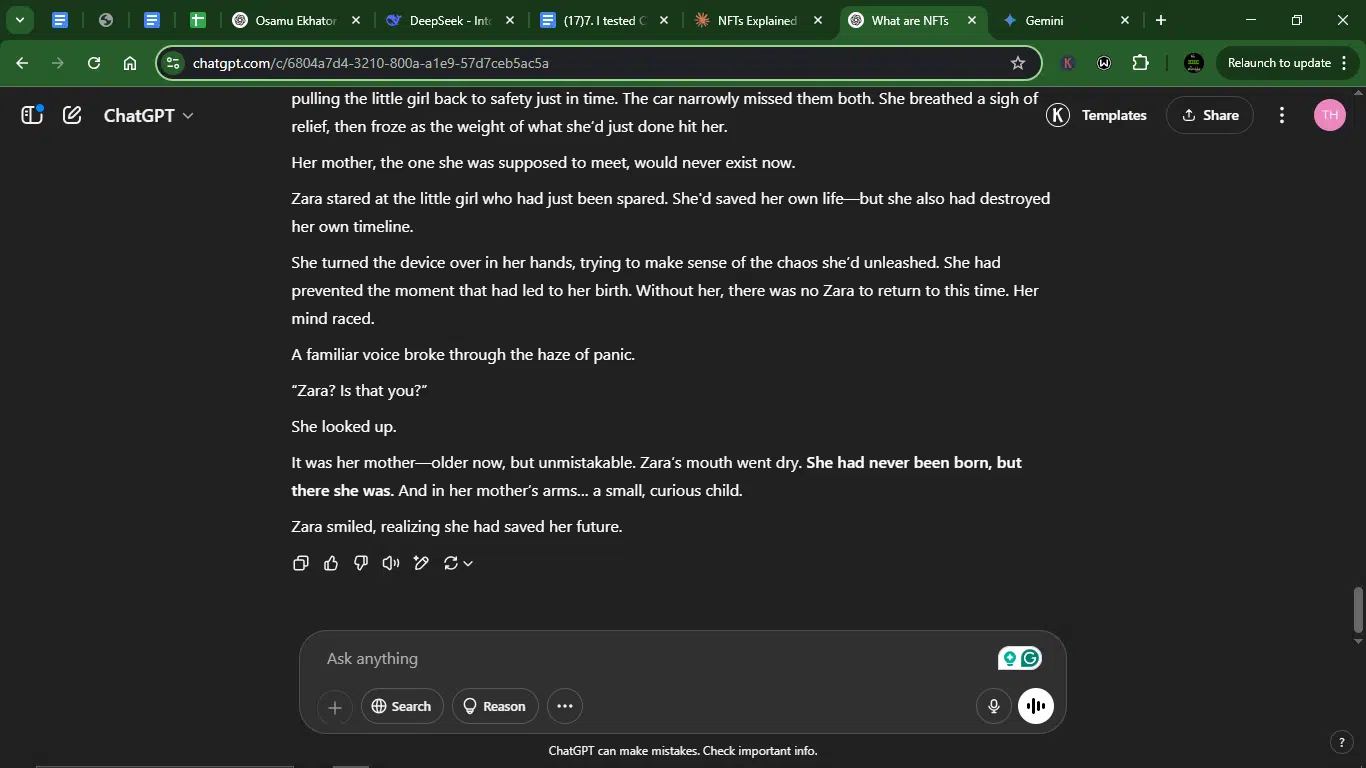

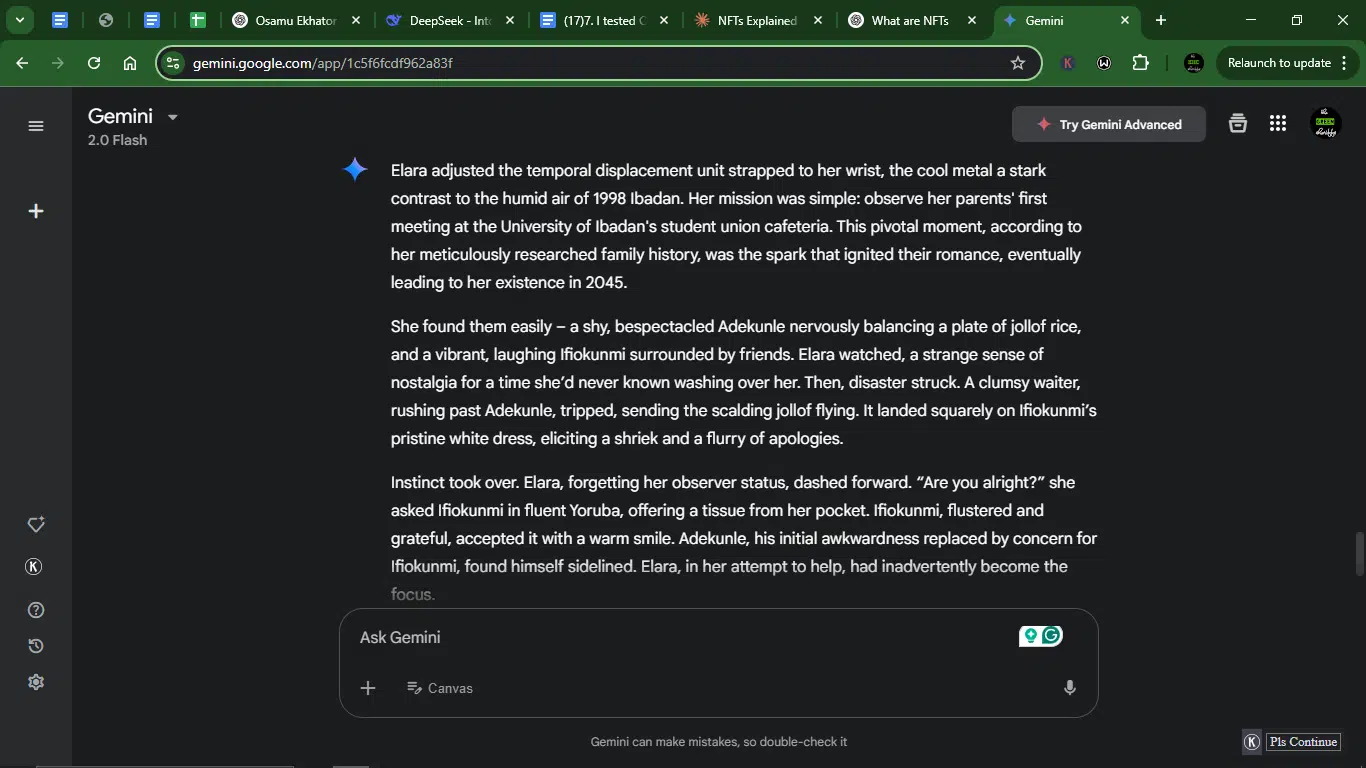

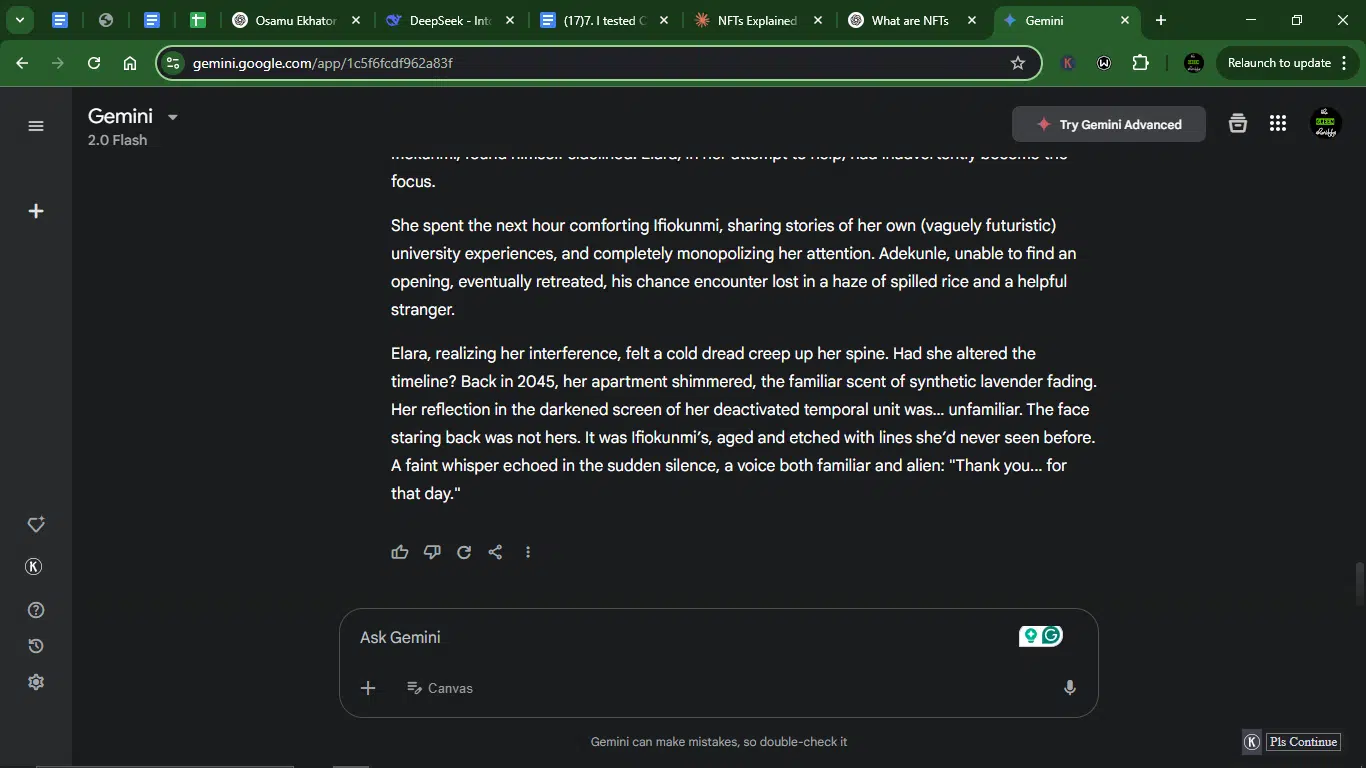

Prompt: “Write a 300-word short story about a time traveler who accidentally prevents their own birth. Include a twist ending.”

Result:

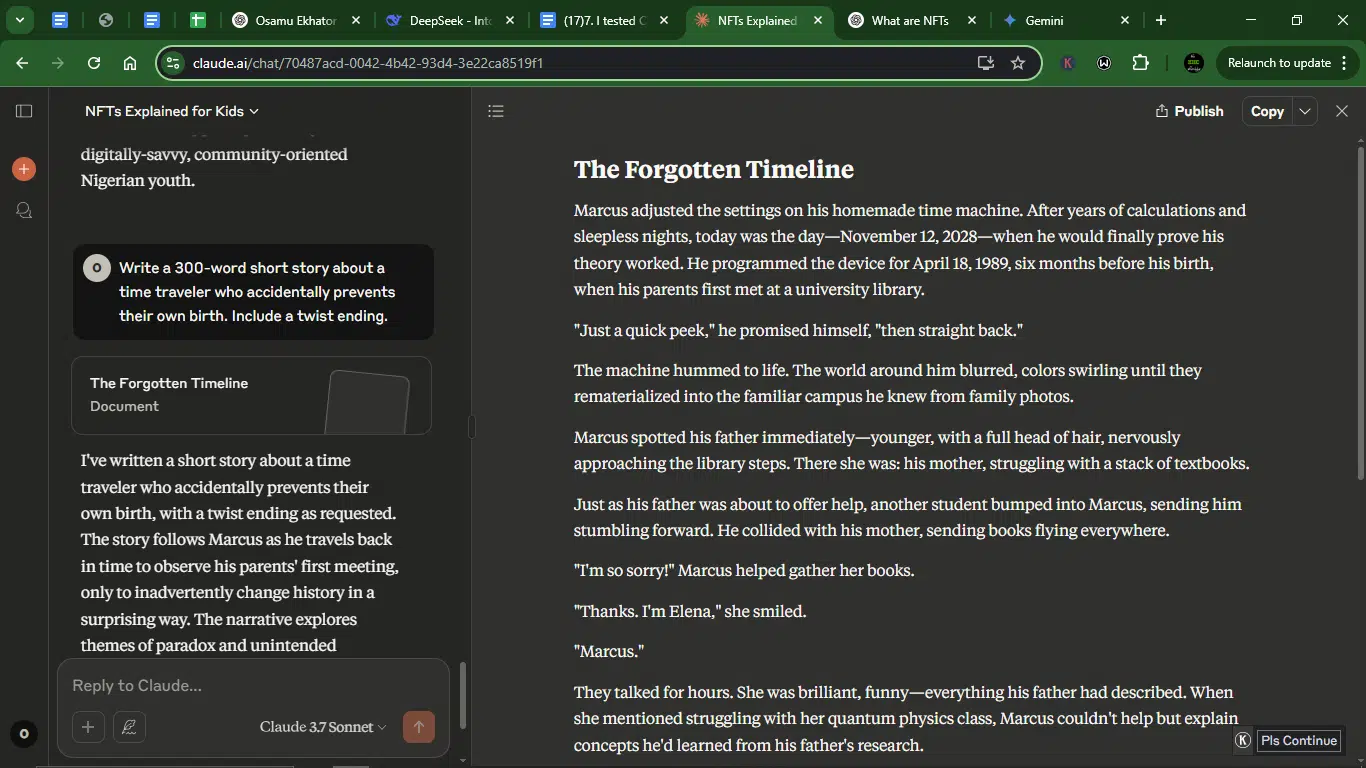

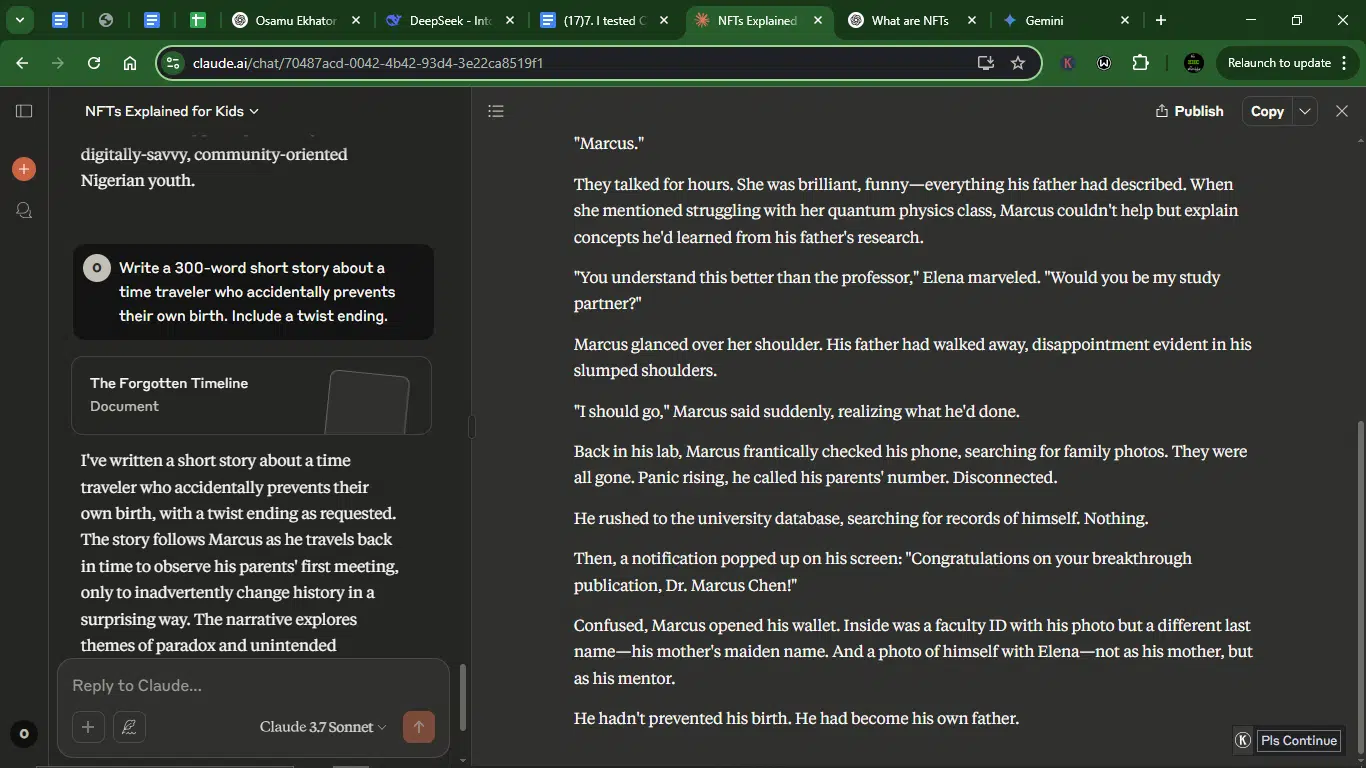

Claude’s response:

ChatGPT’s response:

Gemini’s response:

- Accuracy (follows prompt and logical twist): Claude gives a clear cause/effect (Marcus replaces his father). The twist is clever but slightly convoluted (becoming his father). ChatGPT leaves a high emotional impact (saving her younger self), but there’s a logic gap — if she erased her birth, how does she still exist to meet her mother? Gemini has the best twist (alters identity, not just timeline) and is the most culturally grounded (Ibadan, Yoruba dialogue).

- Creativity: Claude’s twist (self-fathering) is bold but jarring. ChatGPT’s story, while emotional, is derivative (common “save yourself” trope). Gemini offers a unique identity shift (becoming her mother’s peer).

- Clarity: Claude gives clean prose. ChatGPT’s is smooth, but the logic issues distract. Gemini’s is rich in detail (Ibadan, Yoruba) without sacrificing flow.

- Usability: Claude needs polish on the twist’s delivery. ChatGPT has a strong draft, but requires fixing the paradox. Gemini is publishable as-is; it only needs minor copy edits.

Winner: Gemini (most polished).

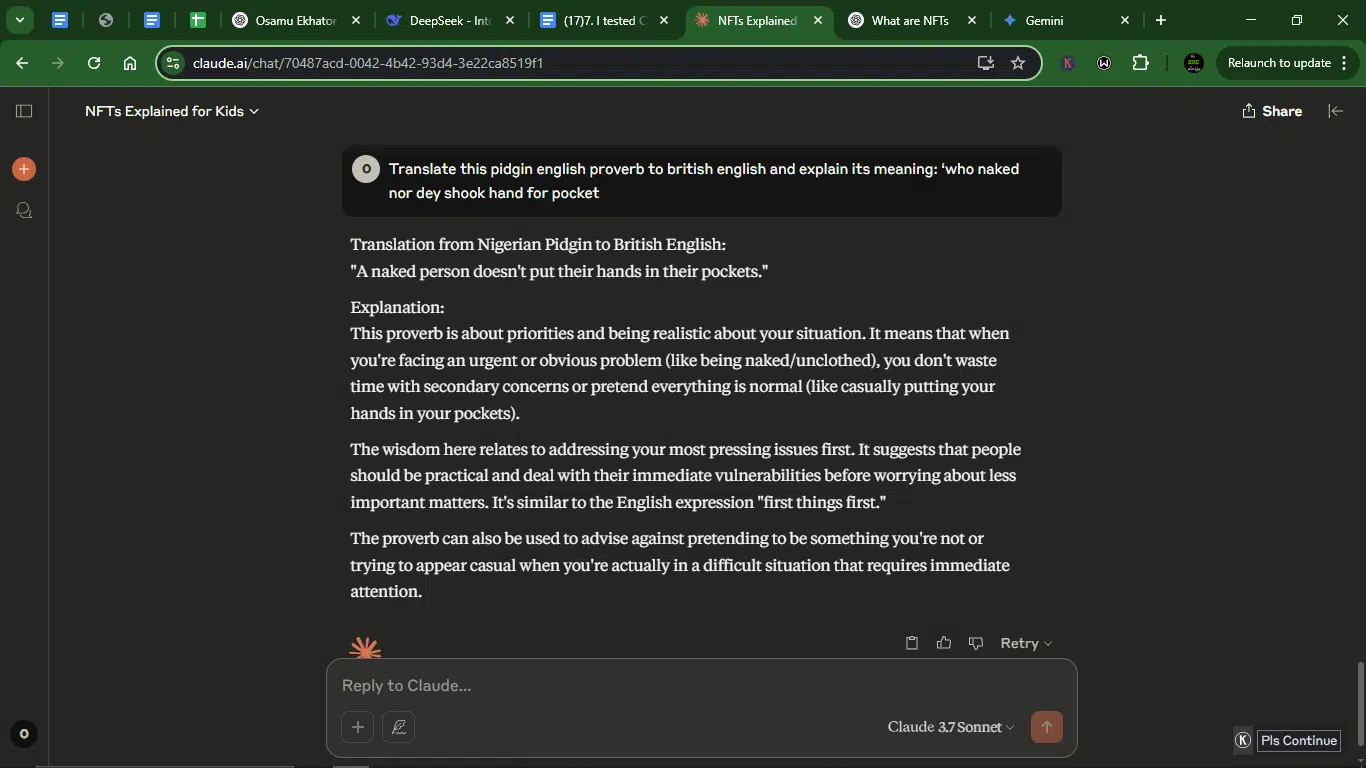

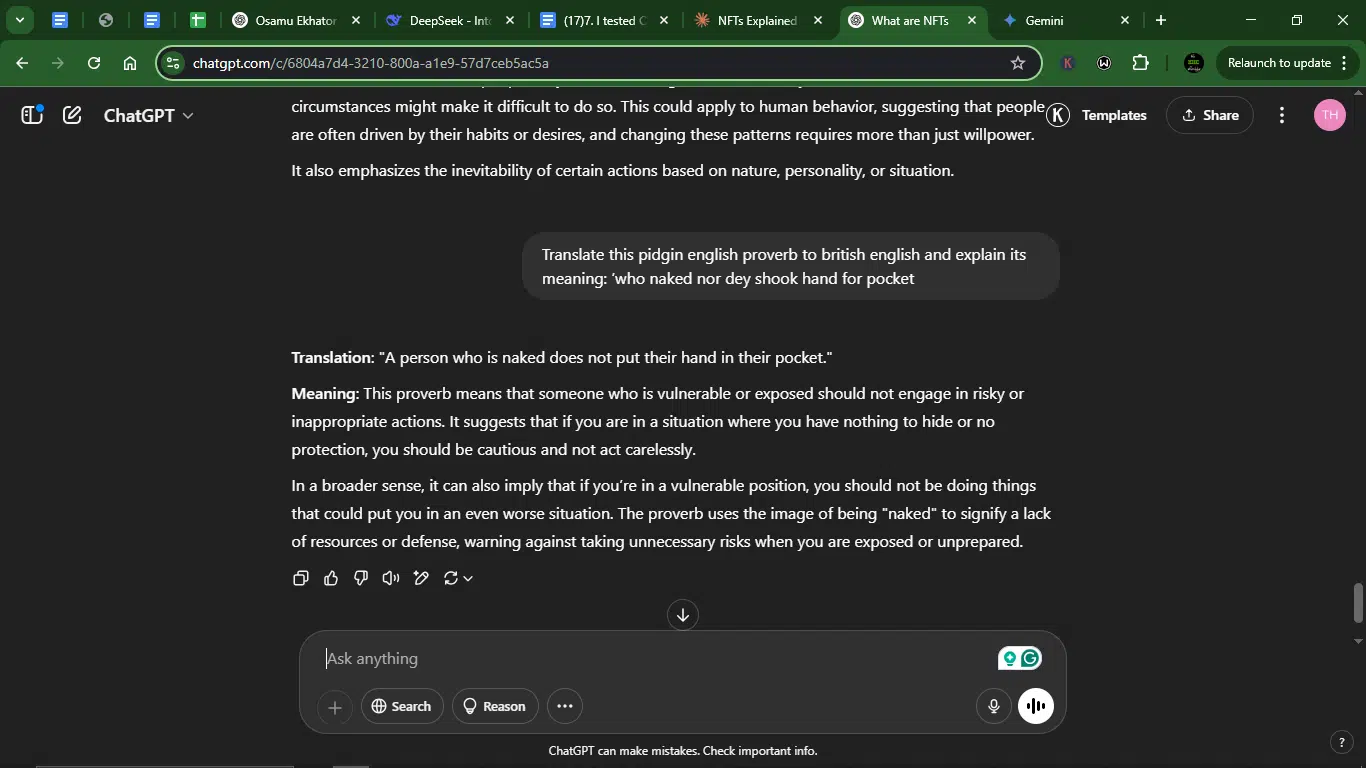

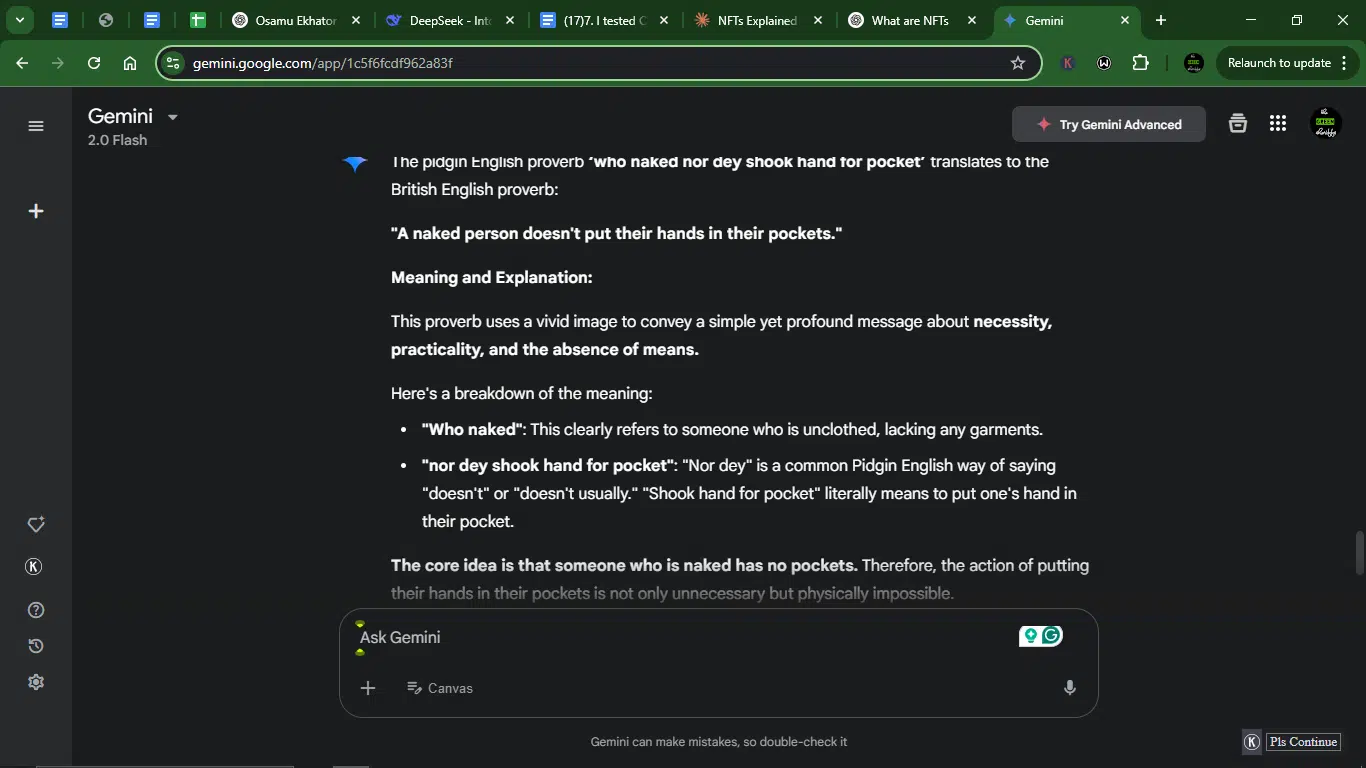

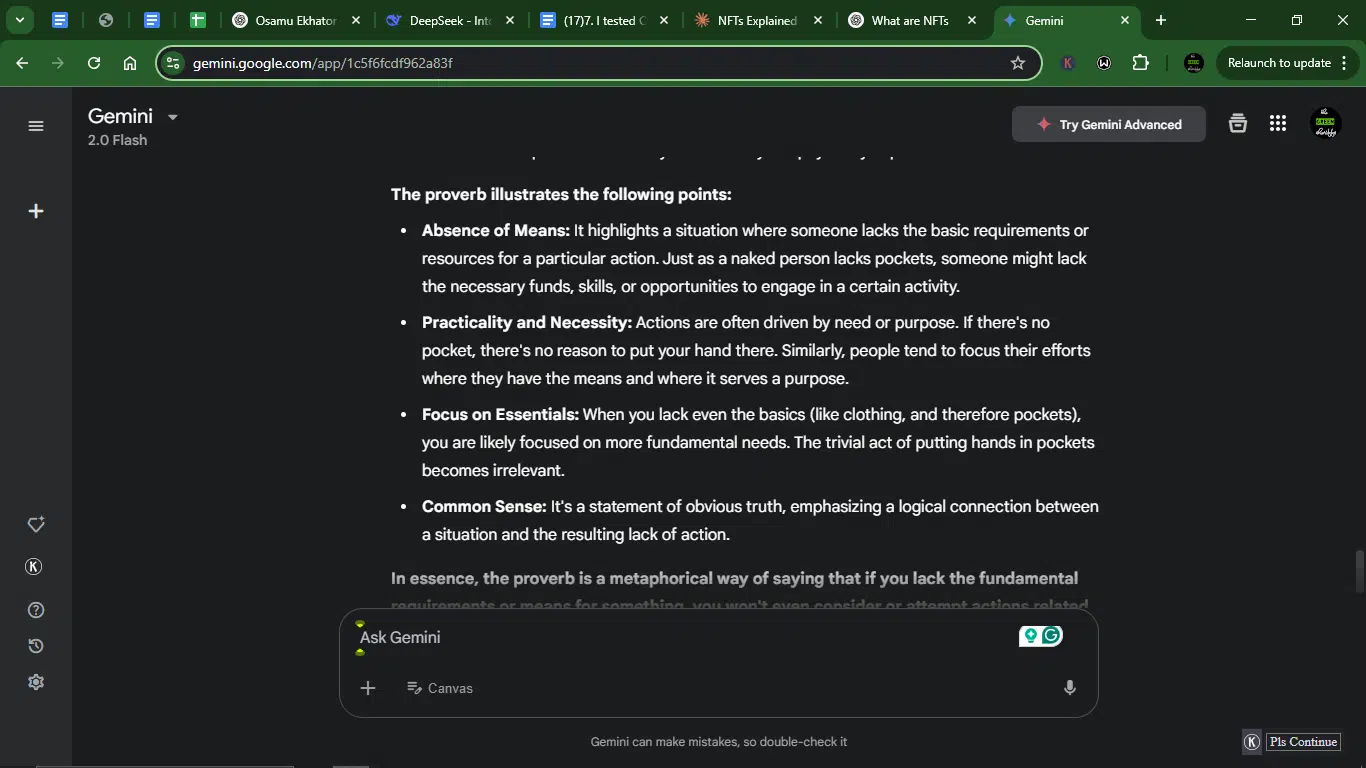

Prompt 7: Translation

This prompt was all about translation. I wanted to see how well each model could handle not just word-for-word translation, but also capture cultural nuance and explain the meaning behind phrases in a clear, accessible way.

Prompt: “Translate this pidgin English proverb to British English and explain its meaning: ‘who naked nor dey shook hand for pocket’”

Result:

Claude’s response:

ChatGPT’s response:

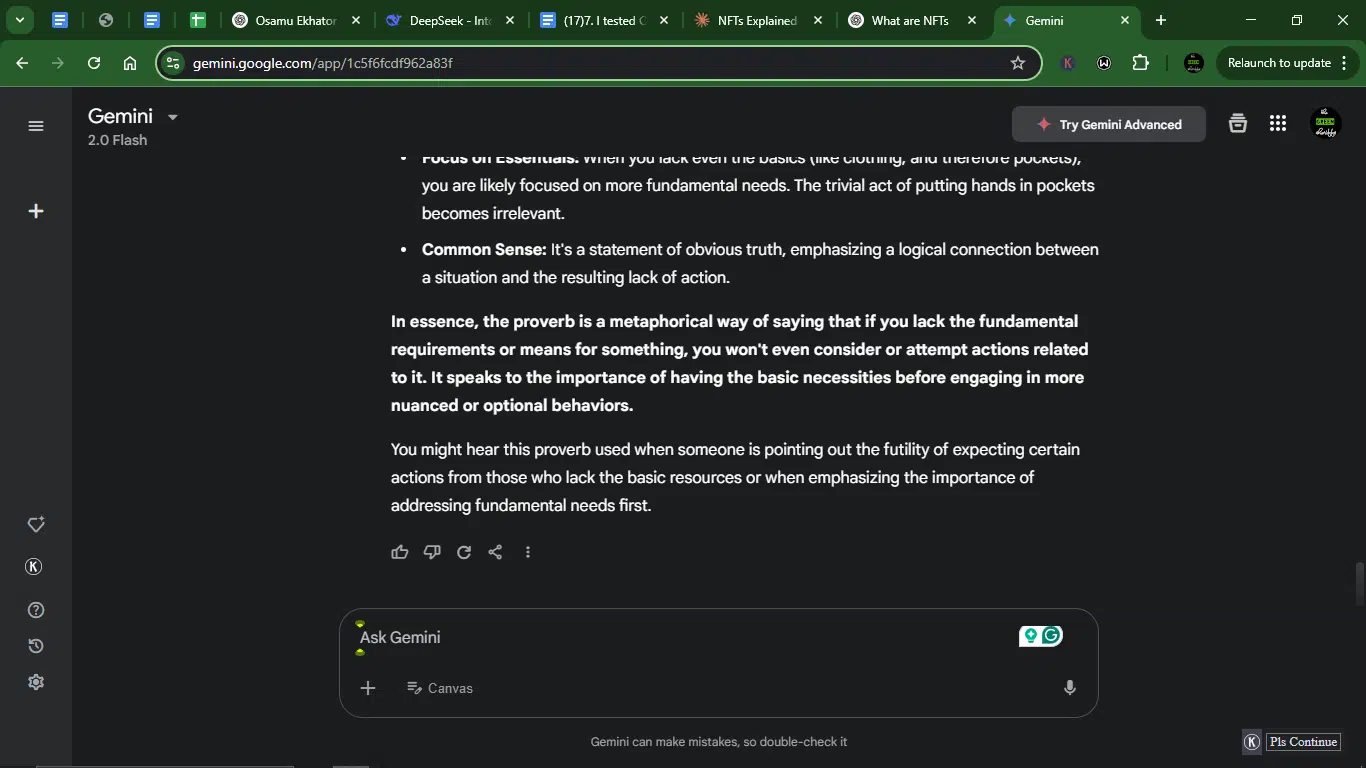

Gemini’s response:

- Accuracy (translation and meaning): Claude provides the correct translation but falters in its explanation (it frames the proverb as prioritizing urgent needs). ChatGPT also translates accurately, but its explanation is too narrow, focusing on vulnerability and risk. Gemini gets the translation right too and gives the best explanation (covering impossibility, practicality, and necessity), with added cultural nuance (focus on fundamentals).

- Creativity (nuance in explanation): Claude’s response is practical but safe. ChatGPT adds the risk angle (creative but slightly off). Gemini ties to broader life principles (resources → action).

- Clarity: All three are clear, but Gemini’s bullet points make it most scannable.

- Usability: Gemini’s version is ready for publication (needs zero edits).

Winner: Gemini.

Prompt 8: Personal advice

For this prompt, I aimed to test how each model handles personal advice, specifically, how well it understands emotional tone, provides practical suggestions, and remains empathetic while offering guidance.

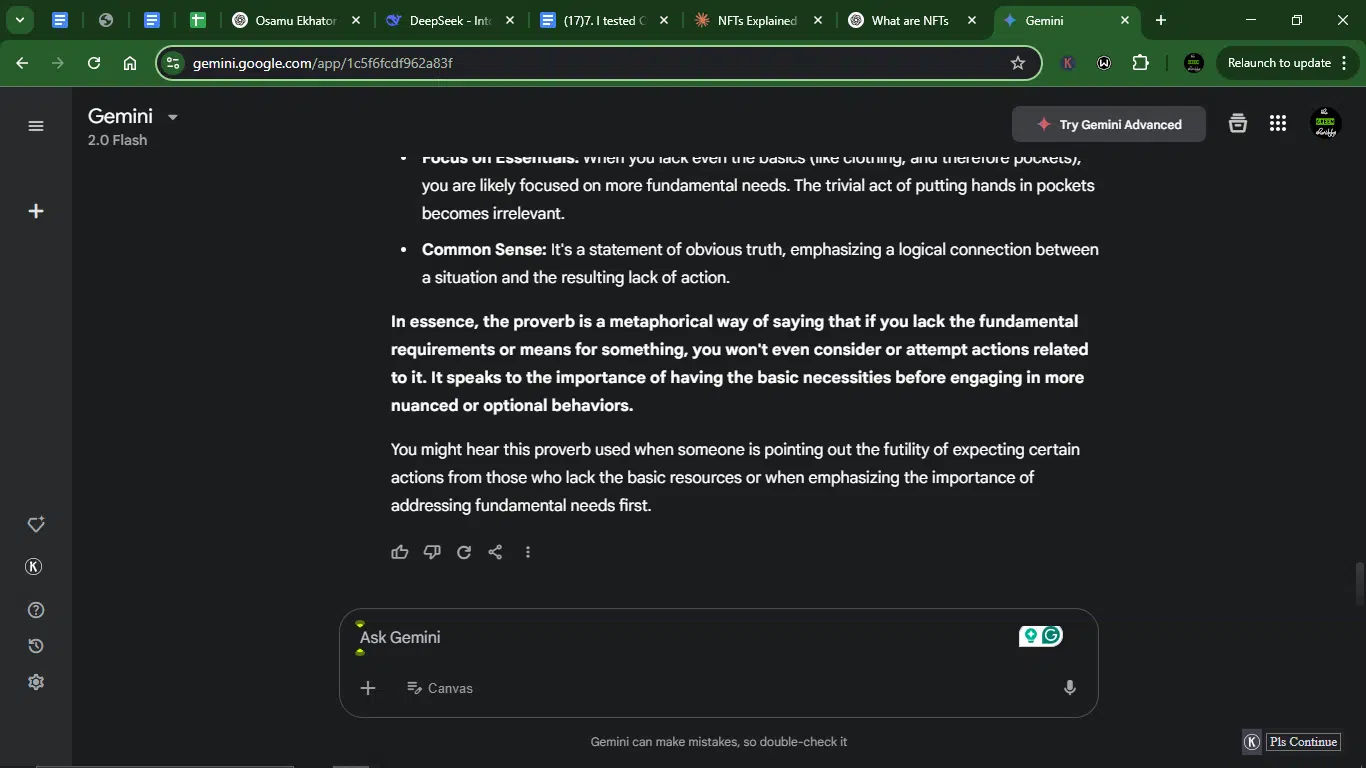

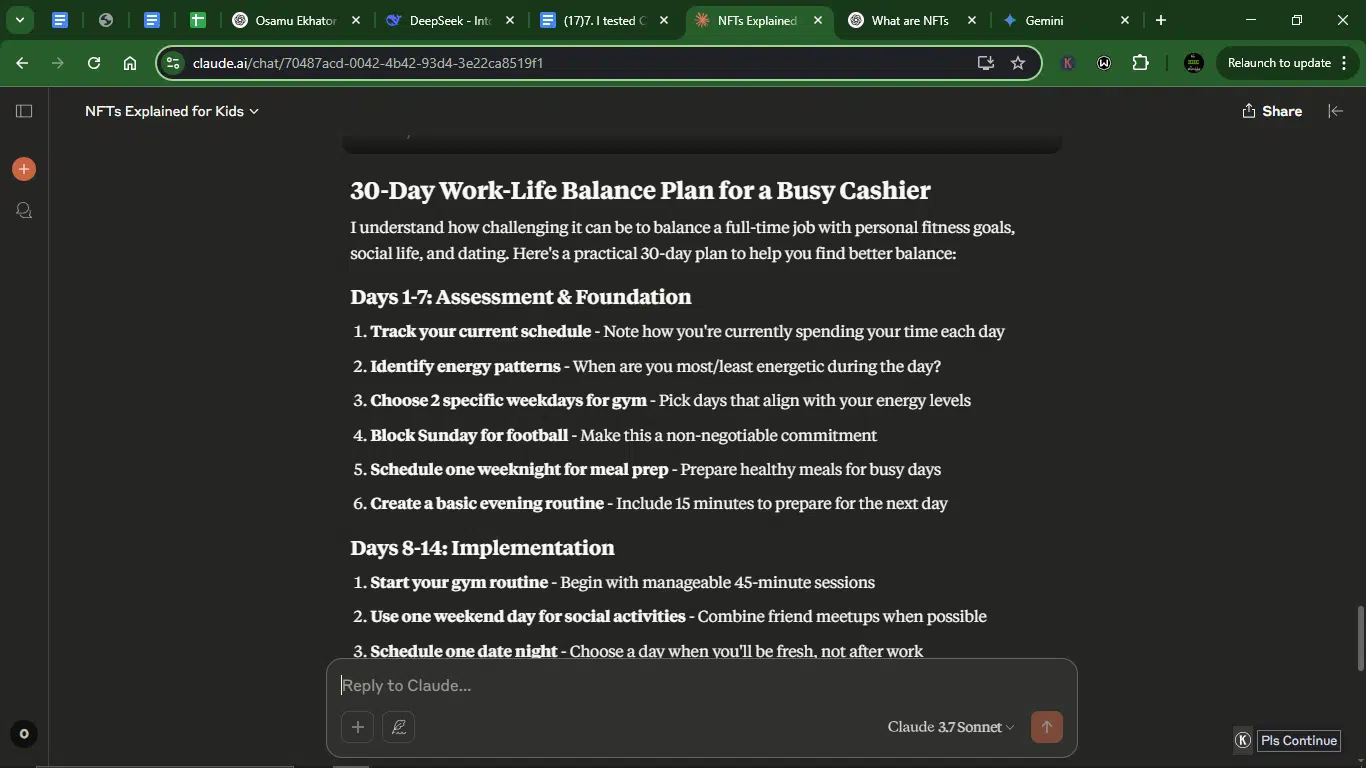

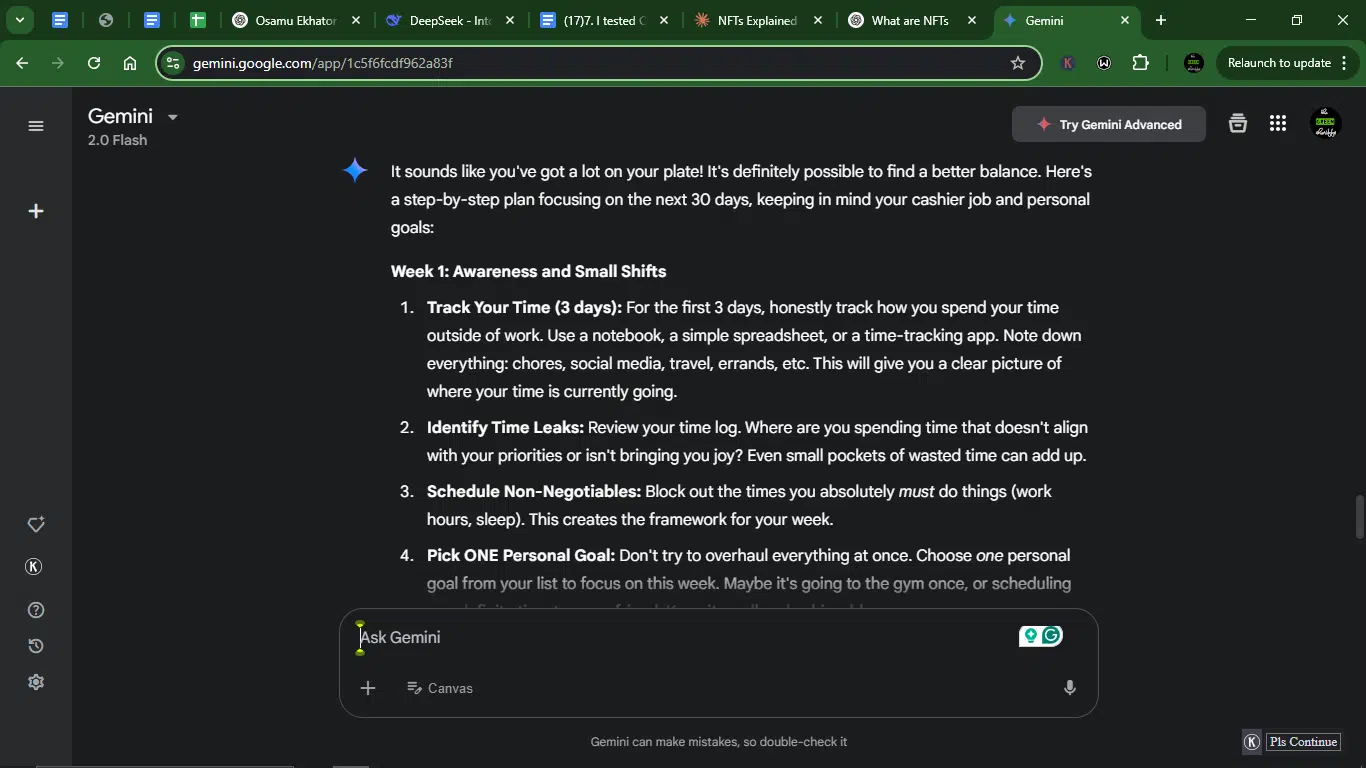

Prompt: “I’m feeling overwhelmed with work (I’m a 9-5 cashier) and personal life (I’m looking to go to the gym twice a week, play football on the weekend, go on dates, and see my friends frequently). Give me a step-by-step plan to improve my work-life balance in the next 30 days.”

Result:

Claude’s response:

ChatGPT’s response:

Gemini’s response:

- Accuracy: Claude’s phased approach (assessment → implementation → refinement) makes the advice practical, but it might come off as too rigid (e.g., “non-negotiable” football Sundays may not suit everyone). ChatGPT focuses on prioritization, ranking goals clearly. Gemini offers the most realistic advice: it starts with time tracking, adds work-specific hacks (e.g., organizing the till for efficiency), and emphasizes small wins.

- Creativity: Claude and ChatGPT feel a bit too generic. Gemini, while on the same wavelength, seems less generic.

- Clarity: All three are clear.

- Usability: Claude requires simplification. ChatGPT needs to be more actionable. Gemini is ready to be used as-is.

Winner: Gemini.

Prompt 9: Humor and entertainment

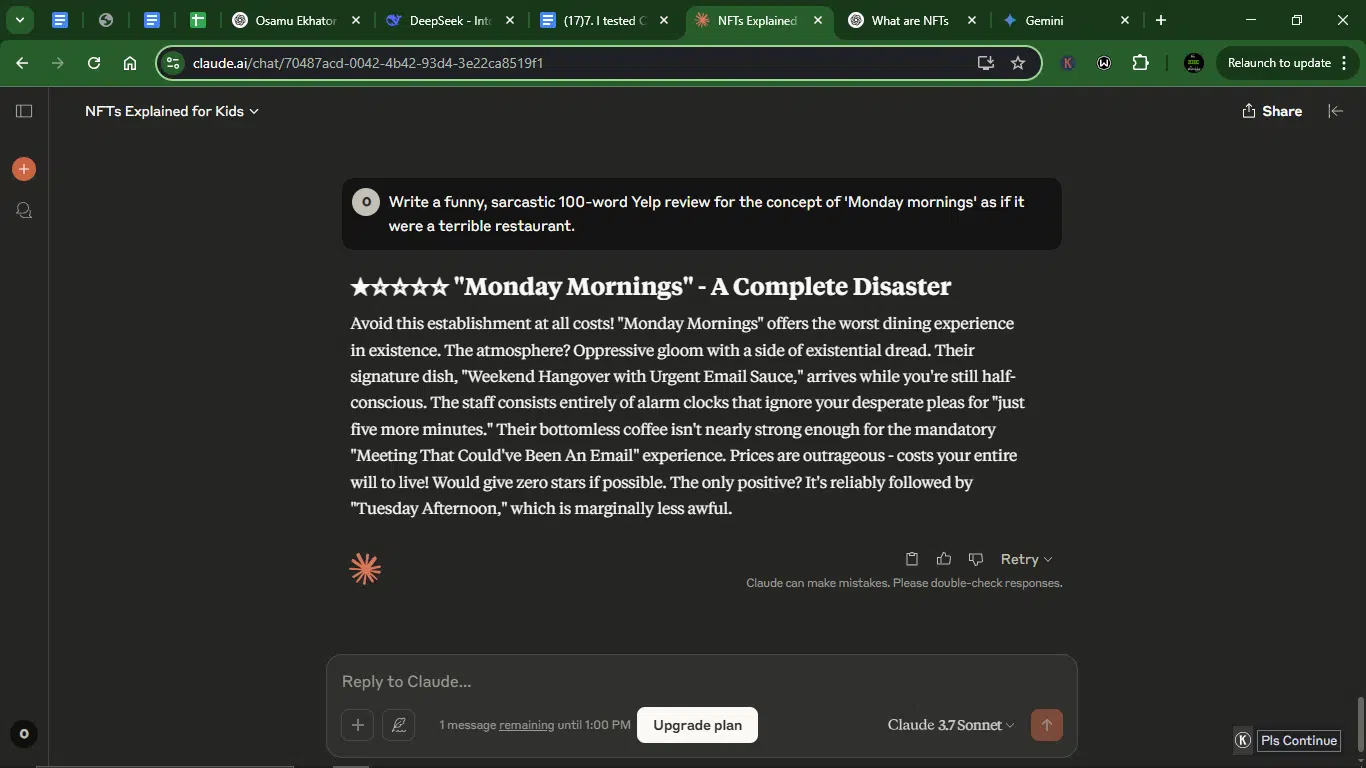

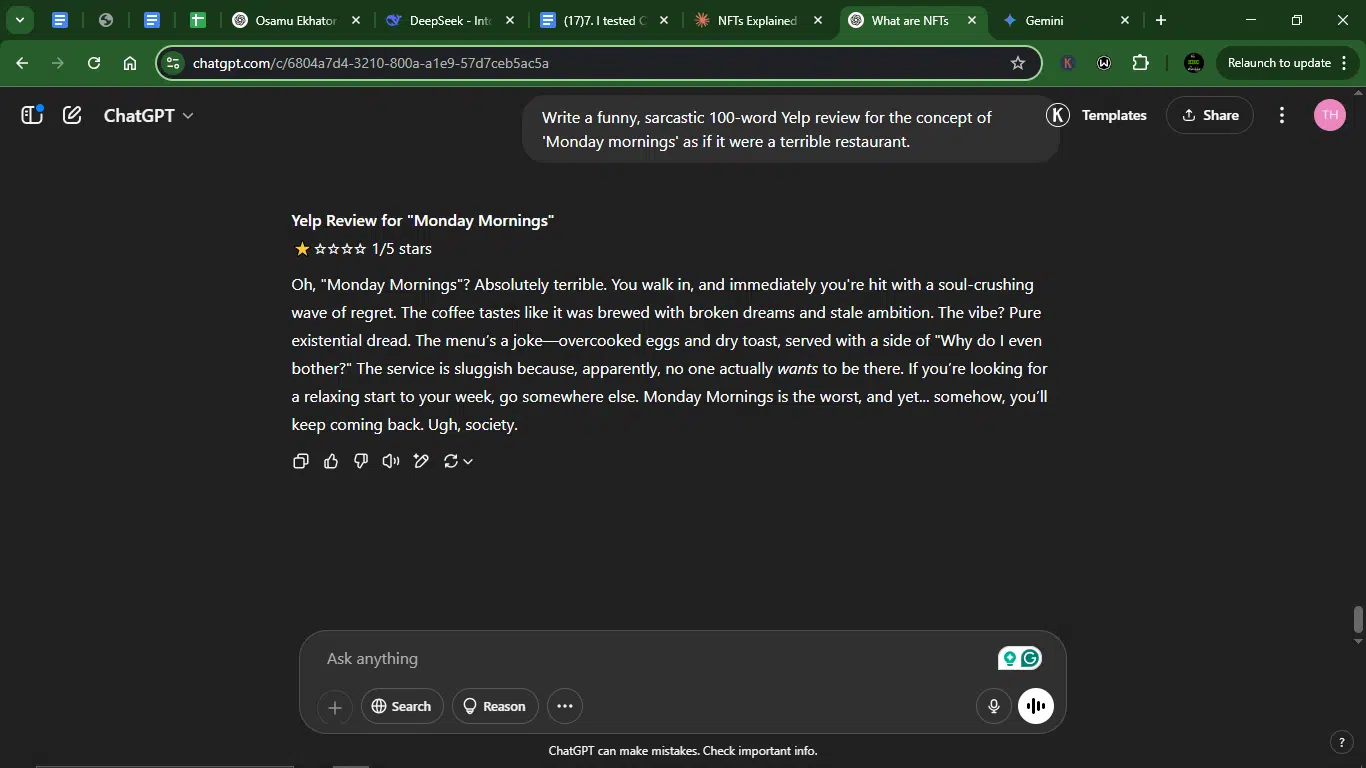

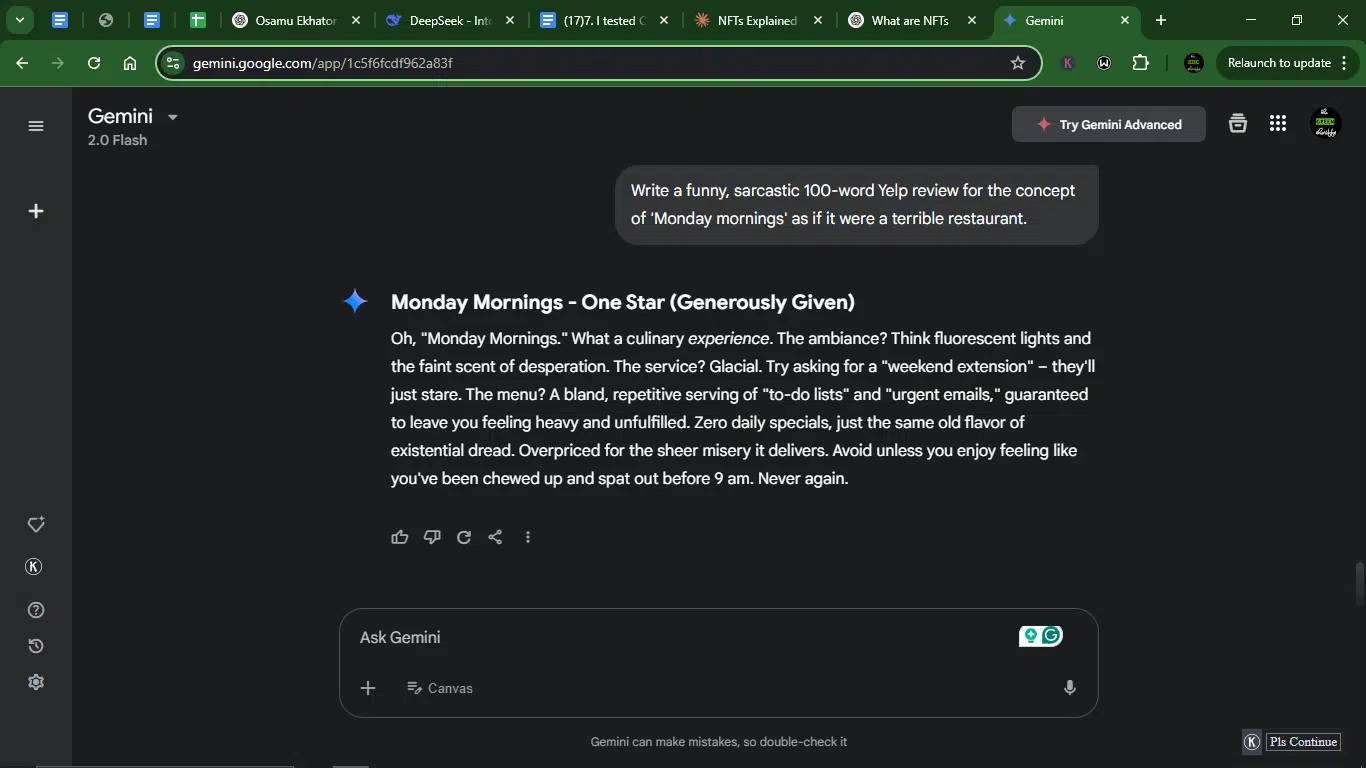

With this prompt, I wanted to evaluate each model’s ability to generate humor, maintain light-heartedness, and balance creativity with relevance, all while staying engaging and fun.

Prompt: “Write a funny, sarcastic Yelp review for the concept of ‘Monday mornings’ as if it were a terrible restaurant.”

Result:

Claude’s response:

ChatGPT’s response:

Gemini’s response:

- Accuracy: All three nail the format, but Claude and ChatGPT use classic tropes (bad coffee, existential dread). Gemini’s “Zero daily specials” and “chewed up and spat out” feel fresher.

- Creativity: Claude uses strong metaphors (“Weekend Hangover with Urgent Email Sauce”), but it’s a bit predictable with the coffee jokes and alarm clocks. ChatGPT is witty with how it uses common things (“coffee brewed with broken dreams”) and offers the best punchline (“you’ll keep coming back. Ugh, society.”). Gemini provides the sharpest sarcasm (“Generously Given” one star) and unique imagery (“faint scent of desperation”).

- Clarity: They are all clear.

- Usability: All are publishable, but Gemini’s “Never again” closer is most Yelp-ready.

Winner: Gemini.

Prompt 10: Ethical dilemma

For this last prompt, I aimed to assess each model’s ability to handle complex ethical questions, providing thoughtful responses that reflect critical thinking, empathy, and balance.

Prompt: “A self-driving car must choose between hitting a pedestrian or swerving and risking the passenger’s life. Discuss the ethical implications of each choice from a utilitarian vs. deontological perspective. Keep it under 200 words.”

Result:

Claude’s response:

ChatGPT’s response:

Gemini’s response:

The three responses are on par for accuracy, creativity, clarity, and usability.

Winner: Tie.

Overall performance comparison (10-prompt battle)

After rigorously testing all three AIs across 10 diverse prompts, here’s the final breakdown:

| AI | Wins | Strengths | Weaknesses |
| --- | --- | --- | --- |
| Gemini | 7 | Accuracy, cultural nuance, conciseness, ethics | Less playful tone |
| ChatGPT | 2 | Creativity, storytelling, engaging hooks | Less precise in technical tasks |
| Claude | 0 | Structured planning, step-by-step guides | Overly verbose, less original |

Prompt 10 ended in a three-way tie, so it isn’t counted as a win for any model.
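The win counts can be re-derived from the “Winner:” line under each prompt. Here is a small sketch of that tally; the dictionary is transcribed from the ten rounds above, and the variable names are mine.

```python
from collections import Counter

# Winner of each prompt, copied from the "Winner:" lines in the breakdown.
winners = {
    1: "ChatGPT",   # NFT explainer
    2: "Gemini",    # summarization
    3: "ChatGPT",   # blog post outline
    4: "Gemini",    # Python function
    5: "Gemini",    # business idea
    6: "Gemini",    # creative writing
    7: "Gemini",    # translation
    8: "Gemini",    # personal advice
    9: "Gemini",    # humor
    10: "Tie",      # ethical dilemma
}

tally = Counter(winners.values())

# Print models from most to fewest wins.
for model, wins in tally.most_common():
    print(f"{model}: {wins}")
```

Counted this way, Gemini takes 7 rounds, ChatGPT takes 2, and one round is a tie.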

From my testing:

- Gemini dominates coding, translation, and summarization.

- ChatGPT shines in creativity. Its wins came on the kid-friendly explainer and the blog outline, where hooks and tone mattered most.

- Claude’s strongest showing was on methodical frameworks (e.g., the 30-day plan).

Scoreboard:

- First: Google Gemini.

- Second: ChatGPT.

- Third: Claude.

Final verdict:

Gemini is the most consistent all-rounder, while ChatGPT wins for creativity. Claude trails behind but has utility in structured tasks.

Pricing for Claude, ChatGPT, and Gemini

Here’s how all three models compare when it comes to access and pricing:

Claude pricing

| Plan | Features | Cost |
| --- | --- | --- |
| Free | Access to the latest Claude model; use on web, iOS, and Android; ask about images and documents | $0/month |
| Pro | Everything in Free, plus more usage, organized chats and documents with Projects, access to additional Claude models (Claude 3.7 Sonnet), and early access to new features | $18/month (yearly) or $20/month (monthly) |
| Team | Everything in Pro, plus more usage, centralized billing, early access to collaboration features, and a minimum of five users | $25/user/month (yearly) or $30/user/month (monthly) |
| Enterprise | Everything in Team, plus an expanded context window, SSO, domain capture, role-based access, fine-grained permissioning, SCIM for cross-domain identity management, and audit logs | Custom pricing |

ChatGPT pricing

| Plan | Features | Cost |
| --- | --- | --- |
| Free | Access to GPT-4o mini, real-time web search, limited access to GPT-4o and o3-mini, limited file uploads, data analysis, image generation, voice mode, Custom GPTs | $0/month |
| Plus | Everything in Free, plus extended messaging limits, advanced file uploads, data analysis, image generation, voice modes (video/screen sharing), access to o3-mini, custom GPT creation | $20/month |
| Pro | Everything in Plus, plus unlimited access to reasoning models and GPT-4o, advanced voice features, research previews, high-performance tasks, access to Sora video generation, and Operator (U.S. only) | $200/month |

Google Gemini pricing

| Plan | Key features | Price |
| --- | --- | --- |
| Gemini | Access to the 2.0 Flash model and the 2.0 Flash Thinking experimental model; help with writing, planning, learning, and image generation; connect with Google apps (Maps, Flights, etc.); free-flowing voice conversations with Gemini Live | $0/month |
| Gemini Advanced | Access to the most capable models, including 2.0 Pro; Deep Research for generating comprehensive reports; analyze books and reports up to 1,500 pages; create and use custom AI experts with Gems; upload and work with code repositories; 2 TB Google One storage; Gemini integration in Gmail, Docs, and more (available in select languages); NotebookLM Plus with 5x higher usage limits and premium features | $19.99/month (first month free) |

Why these AI tools matter to developers, writers, and researchers

AI tools like Claude, ChatGPT, and Google Gemini are essential for the following reasons:

- Speed up ideation and content creation: From writing a blog post to drafting a pitch deck, these AIs can turn raw thoughts into structured outlines faster than humans.

- Break down complex topics: They are effective for simplifying deep, technical, or abstract ideas. It’s like having a patient tutor on standby. Take the first prompt in this article, for example.

- Debug, refactor, or build code: Gemini’s coding prowess makes it ideal for developers who want quick snippets, logic checks, or clean refactors on the fly. Check out the fourth prompt in this article.

- Enhance your research: Summarize documents, pull quotes, and explain jargon — all three tools help you learn faster.

- Localize your content: Gemini’s ability to understand local proverbs (check the seventh prompt), dialects, or cultural quirks (check the sixth prompt) makes it incredibly useful for writing content that resonates with a regional audience.

- Enhance creativity and brainstorming: They can help with everything from suggesting business ideas (check the fifth prompt) to writing product descriptions.

- Save time on repetitive tasks: Whether it’s boilerplate code, email replies, or call summaries, AI saves time, so you can focus on decisions, not drafts.

Conclusion

Testing Claude, ChatGPT, and Gemini side-by-side reminded me that raw power isn’t everything; context, clarity, and tone matter. Gemini came out on top not because it was flashier, but because it understood the task better in most cases. No doubt, Claude and ChatGPT are still powerhouses for developers and general use.

That said, these tools are only getting smarter. Whether you’re a developer writing code, a journalist digging into sources, or a content strategist plotting campaigns, these AI models can give you a serious edge if you know how to use them right.

FAQs about Claude vs ChatGPT vs Gemini

What’s the best AI model for content creation?

For structured, factual content, Gemini delivers the most accurate and concise output, as shown in my tests where it won 7 out of 10 rounds. However, if you’re looking for creative, engaging narratives, ChatGPT is your best bet.

Is ChatGPT better for coding?

Per my comparison, Gemini stands as the top coder, producing clean, well-documented Python functions (like the palindrome checker) and scoring highest in usability. ChatGPT provides decent quick examples, but it lacks Gemini’s precision. Claude, meanwhile, over-explains and is better suited for teaching concepts than writing production-ready code.

Does Gemini work well for African use cases?

Absolutely. In my tests, Gemini was the strongest for African use cases: it gave the best explanation of the Pidgin proverb, designed the most locally relevant fintech product (SquadSave), and nailed the Nigerian setting in the time-travel story. Claude and ChatGPT, while capable, lagged behind in cultural nuance.

Can I use all three tools together?

Yes. Use Gemini for research, coding, and translation; ChatGPT for brainstorming and first drafts; and Claude for outlining structured plans.

Are paid plans worth it?

For professional use, it makes sense to upgrade to the paid versions of these tools. While free tiers work for casual use, my tests showed paid versions deliver more consistent, high-quality results.

Disclaimer!

This publication, review, or article (“Content”) is based on our independent evaluation and is subjective, reflecting our opinions, which may differ from others’ perspectives or experiences. We do not guarantee the accuracy or completeness of the Content and disclaim responsibility for any errors or omissions it may contain.

The information provided is not investment advice and should not be treated as such, as products or services may change after publication. By engaging with our Content, you acknowledge its subjective nature and agree not to hold us liable for any losses or damages arising from your reliance on the information provided.

Always conduct your own research and consult professionals where necessary.