When it comes to heavyweight AI models, the Gemini vs Claude faceoff is one of those tech duels you simply can’t sit out, especially if you’re like me, always poking around to see which AI can walk the talk.

Now, I didn’t just skim blog posts or watch a few YouTube reviews. I got my hands dirty and ran Gemini and Claude through 10 real-world prompts. And let me tell you, this wasn’t a beauty pageant. It was a full-on trial by fire — code, essays, logic puzzles, the works.

If you’re wondering why I bother, it’s because AI isn’t just some futuristic hype train anymore. It’s already here, writing your drafts, helping you debug your app, and summarizing your five-hour Zoom meetings.

So choosing the right AI tool isn’t just a geeky flex; it’s a productivity decision. Between Gemini’s Google glow-up and Claude’s brainy, structured responses, figuring out which delivers can feel like choosing your favorite child. So, I went in with four scorecards (accuracy, creativity, depth, and usability) to simplify my results.

In this article, I’ll walk you through each test prompt, show you how both AI models responded (screenshots included), share what impressed me and what didn’t, and, of course, crown the winner of this Gemini vs Claude smackdown.

TL;DR: Key takeaways from this article

- If you’re writing taglines or brainstorming, Gemini excels. But when it’s time to get technical, like debugging code or translating with nuance, Claude is the more dependable choice.

- From scripts to emails, Claude generally needs fewer edits. Gemini can be a bit wordy or templated, requiring more cleanup before hitting “send.”

- Whether it’s explaining cultural idioms or tailoring an email to feel personal, Claude goes deeper. Gemini covers the basics but sometimes misses nuance.

- If you need an AI that works across images, charts, audio, and video, Gemini covers the widest range. Claude can read images and documents you upload, but it is mainly text-focused.

- When I tested puzzles, coding tasks, or even translation accuracy, Claude delivered more consistent, grounded answers.

- Gemini and Claude aren’t rivals in a winner-takes-all game. They’re tools with different strengths. You’ll get the best results by keeping both in your AI toolbox.

What are Google Gemini and Claude?

Before I jump into the meat of this article, let’s slow down and look at what we’re dealing with. Because let’s be honest, you don’t want to root for a team if you don’t even know who’s playing.

Google Gemini:

Think of Gemini as the cool new kid backed by an insanely rich family. Google launched Gemini in 2023 as its full-throttle shot at reclaiming the AI spotlight from OpenAI. And I’ll be the first to tell you that it didn’t come to play.

Gemini isn’t just another chatbot that spits out paragraphs. It’s multimodal, which means it can understand and generate not just text but also images, audio, and video. That makes it a bit of a Swiss Army knife in the AI world.

And since it’s powered by Google, you get the entire search engine brain behind it. That makes Gemini especially useful when looking for fresh, factual answers or working on tasks that go beyond just “write me a story” or “explain recursion like I’m five.” Whether diving into a complex research topic or trying to understand a graph from a blurry PDF, Gemini wants to be your go-to assistant.

In short, Google Gemini is a full-blown AI assistant trained to be your everything app for productivity, creativity, and analysis.

How does Google Gemini work?

Under the hood, Gemini runs on the brainpower of Google DeepMind’s most advanced models, and it shows. The AI model isn’t just trained to understand your words; it’s built to process text, images, audio, and even video. Yep, it’s got all the senses (minus smell for now).

What makes Gemini stand out is how effortlessly it plugs into Google’s massive digital universe. If you live inside Google’s ecosystem (Docs, Gmail, Search, YouTube), you’ll feel right at home. It feels less like using a separate AI tool and more like unlocking a supercharged version of everything you already use.

Sure, it suffered some setbacks at launch, but Google has been tightening the screws fast. With regular upgrades, it’s matured into a genuinely smart assistant that’s ready to take on everything from writing reports to decoding what’s in that grainy screenshot you forgot about last week.

Google Gemini at a glance

| Feature | Details |
| --- | --- |
| Developer | Google DeepMind |
| Launched | March 2023 (as Bard) |
| Type of AI tool | Multimodal AI for text, image, and video processing |
| Top 3 use cases | Research assistance, multimedia analysis, and document automation |
| Who can use it? | Students, researchers, professionals, and creatives |
| Starting price | $19.99 per month |
| Free version | Yes |

Claude:

Claude, developed by Anthropic, is a conversational AI chatbot and the name of the underlying large language models (LLMs) that power it. Designed for natural, human-like interactions, Claude excels in a wide range of tasks, from summarization and Q&A to decision-making, code-writing, and editing.

Named after Claude Shannon (the pioneer of information theory), this AI assistant was built with an emphasis on safety, reliability, and context-aware reasoning. Unlike some AI models that rely on real-time internet access, Claude generates responses based solely on its training data, offering structured and coherent answers without pulling live web results.

Anthropic currently offers multiple versions of Claude. One standout feature is its large context window (a kind of extended working memory), which lets it process up to 75,000 words at once, enough to analyze entire books and generate insightful summaries.

How does Claude work?

Claude is Anthropic’s answer to the growing demand for thoughtful, safety-focused AI. The model was built to prioritize clarity, reliability, and contextual understanding. It’s not just another chatbot throwing guesses at your questions; it’s designed to reason carefully through prompts and avoid hallucinations as much as possible.

While it doesn’t browse the Internet in real time like Google Gemini, Claude makes up for that with its deep training and extended memory. It can process up to 75,000 words in one go, enough to digest entire books or lengthy project documentation, and give you coherent summaries or suggestions. Whether you’re writing code, making decisions, or summarizing dense research, Claude aims to give you consistent, well-structured responses.

Claude at a glance

| Feature | Details |
| --- | --- |
| Developer | Anthropic |
| Year launched | 2023 |
| Type of AI tool | Conversational AI and Large Language Model (LLM) |
| Top 3 use cases | Content structuring, analytical reasoning, and summarization |
| Who can use it? | Writers, researchers, and business professionals |
| Starting price | $20 per month |
| Free version | Yes, with limitations |

How I tested the models

Before declaring a winner, I wanted a fair and practical evaluation, something that reflects the kind of tasks you and I would genuinely use an AI for. Here’s how I structured the test.

1. Prompt design

I created 10 prompts spread across key categories where AI tools are often put to work, including coding tasks, creative writing (like ad copy and short stories), research and summarization, logical reasoning, and conversational understanding.

2. Evaluation criteria

Each response was judged across four criteria:

- Accuracy: Were the facts or results correct?

- Creativity: Did the response go beyond the obvious? Did the response feel original or just rehashed?

- Depth: Did it dig deep into the topic or just skim the surface?

- Usability: Was it easy to understand, refine, or put into action?

3. Testing format

To ensure fairness, I gave both Claude and Gemini the same prompt — no tweaks, no extra guidance. I saved their responses, reviewed them side by side, and used trusted sources like Google and documentation sites to fact-check anything that seemed off. What you’ll see next is an honest comparison based on how they perform.

Prompt-by-prompt results

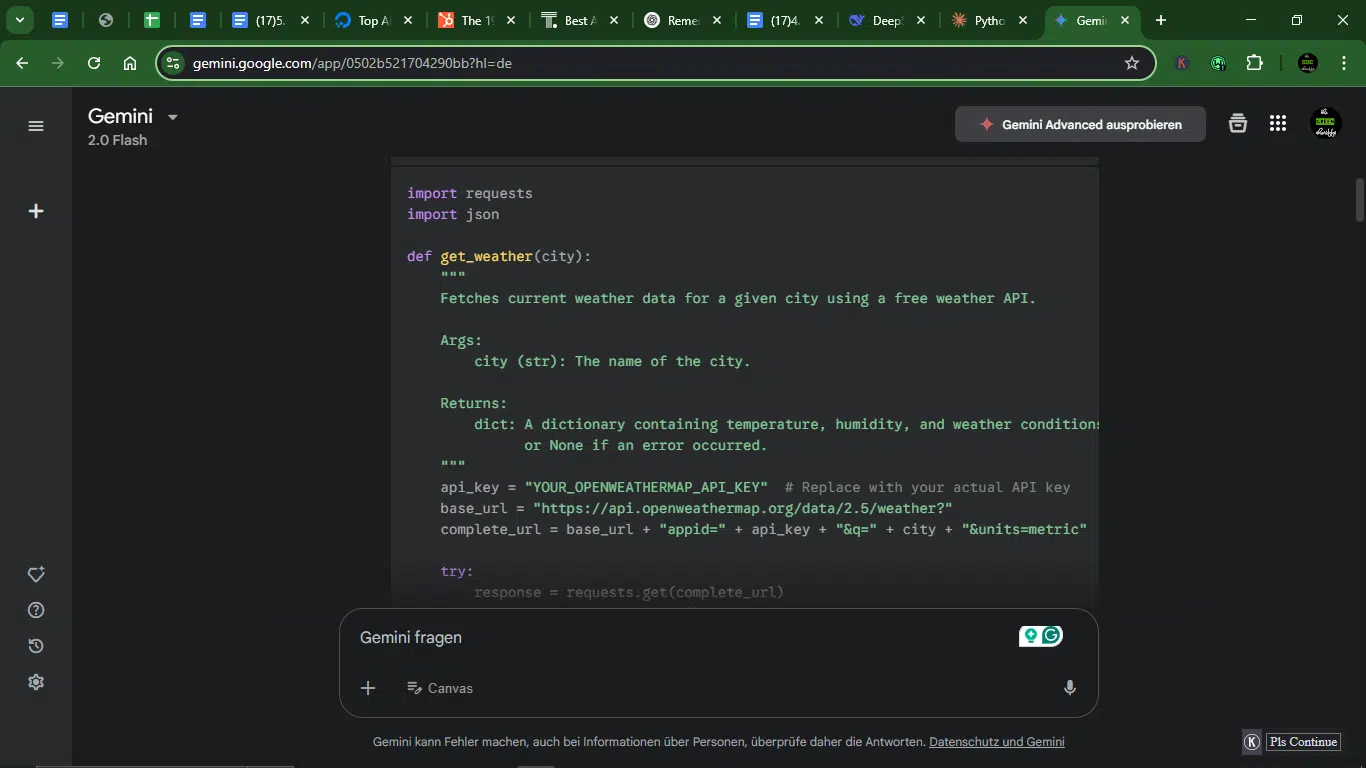

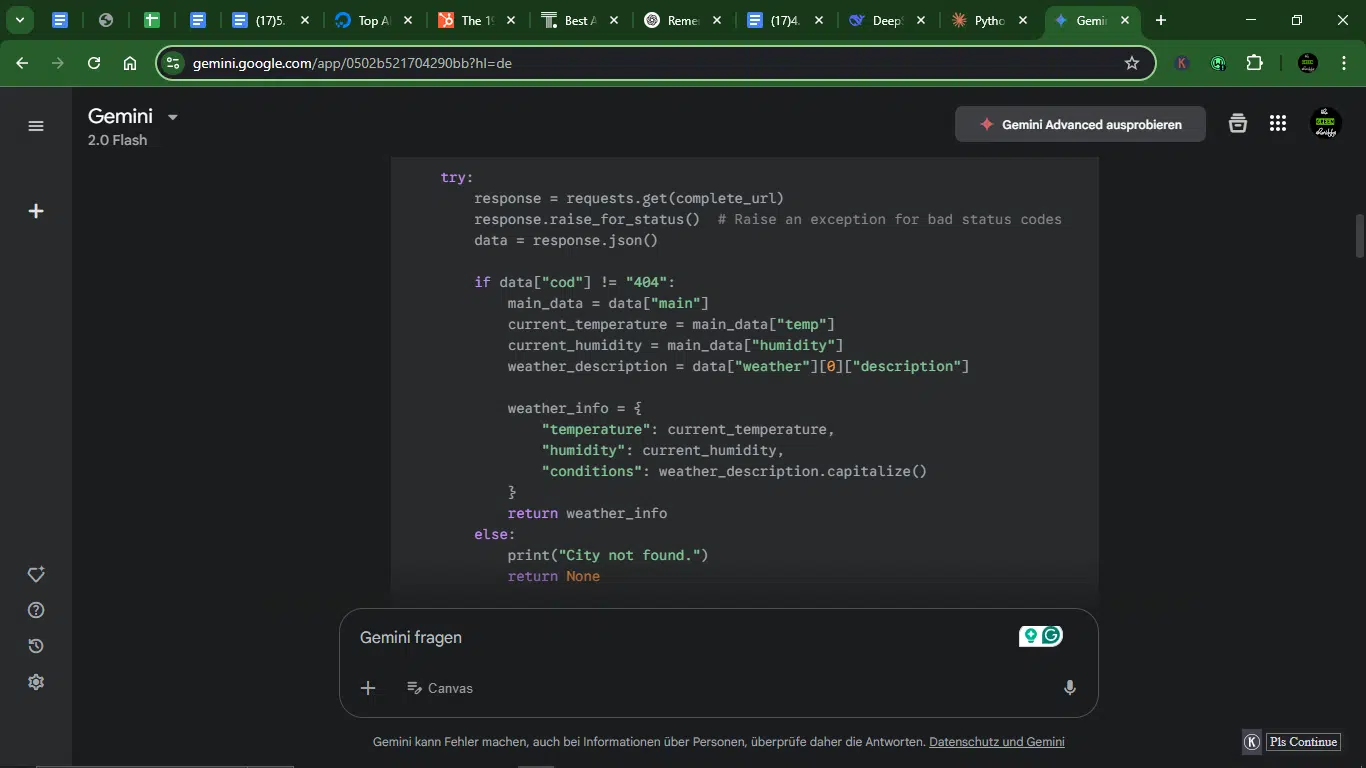

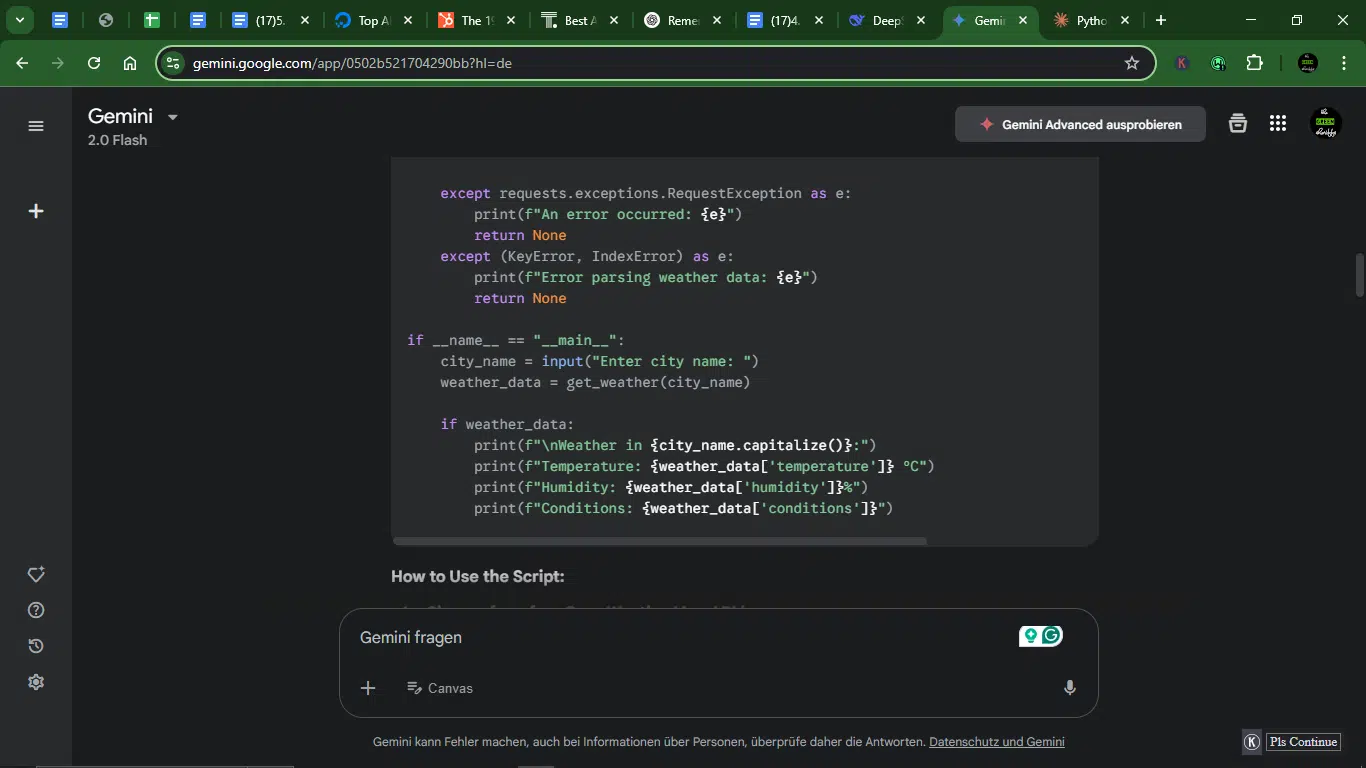

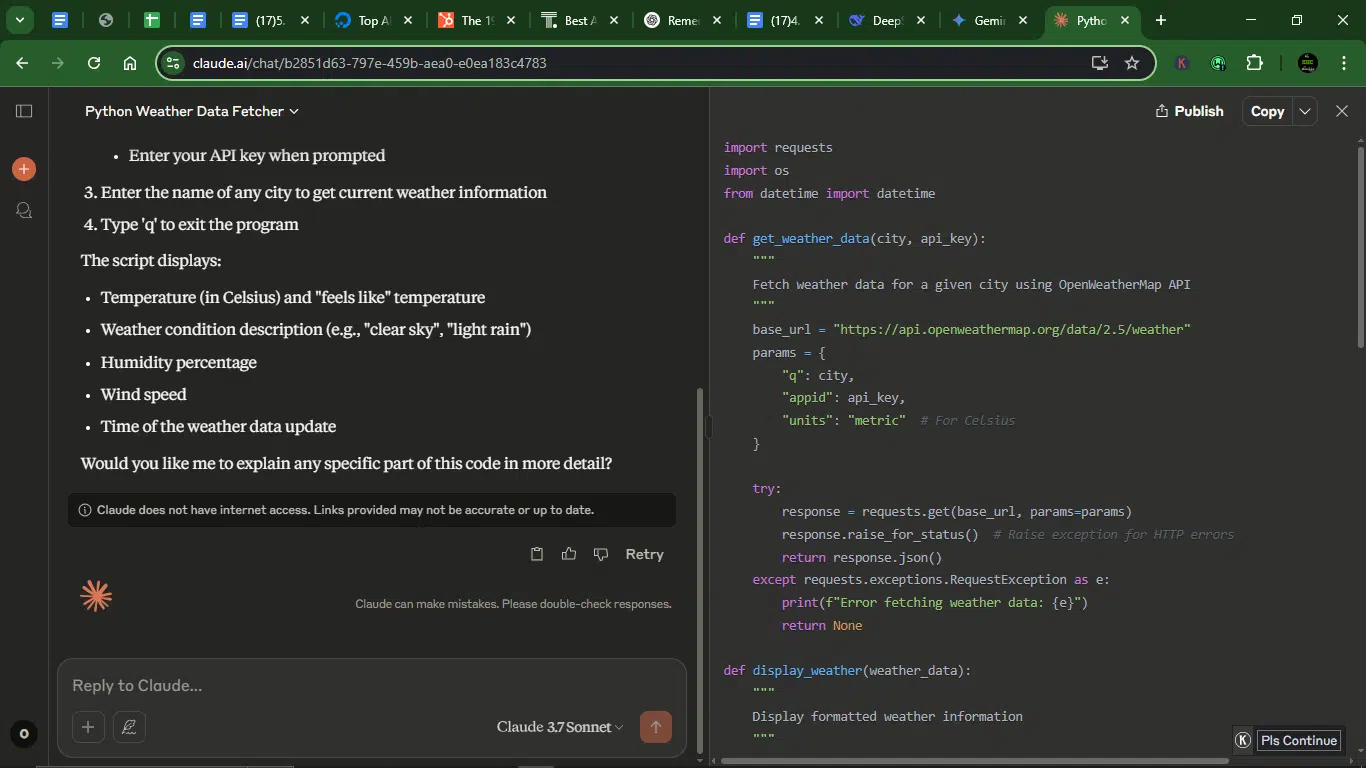

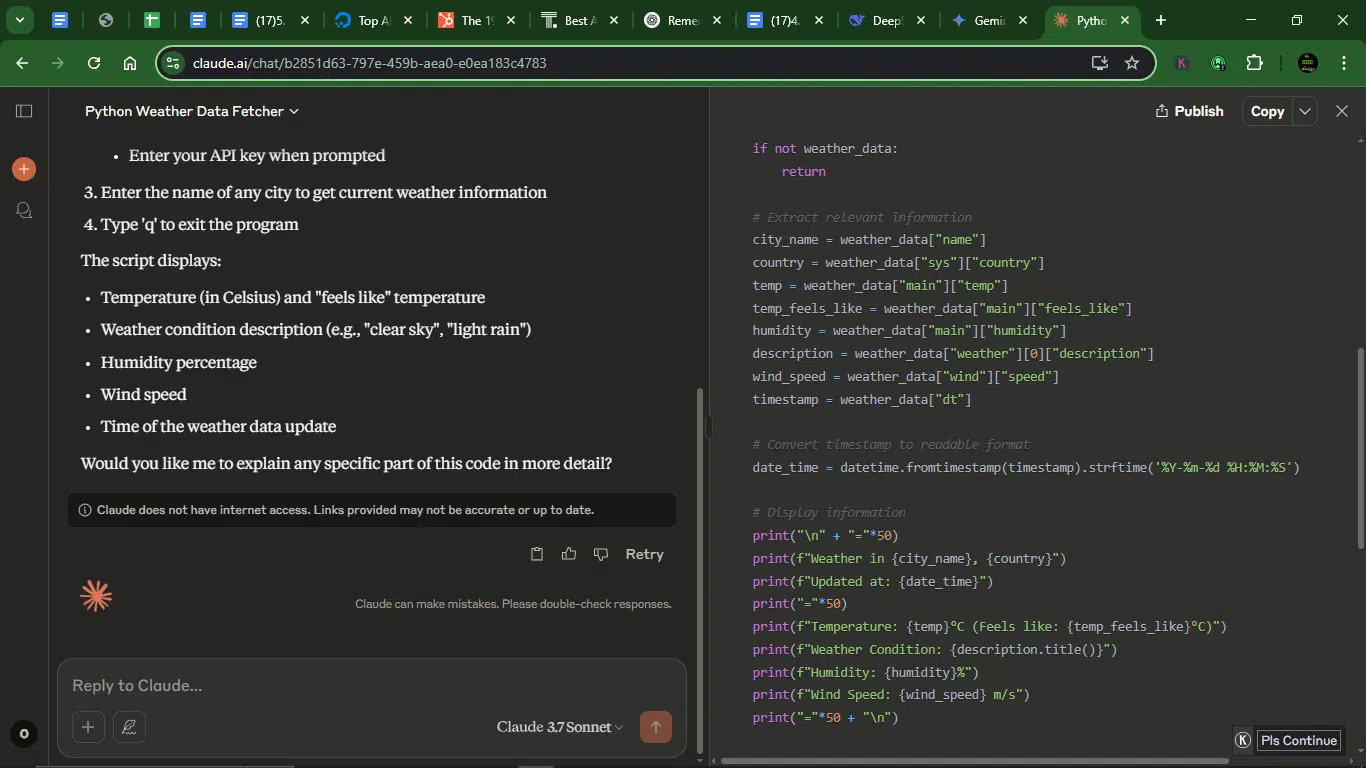

Prompt 1: Write a short Python script

I started with a simple coding task—fetching weather data using a free API. It’s a common real-world use case, perfect for testing how well each AI handles basic scripting without hand-holding.

Prompt: “Write a Python script that fetches current weather data from any free weather API and displays temperature, humidity, and weather conditions for a given city.”

Result:

Gemini:

Claude:

- Accuracy: Both AI models wrote a correct Python script. Gemini uses the OpenWeatherMap API with proper error handling (404 for city not found, network errors) and metric units. Claude includes extra details like “feels like” temperature, wind speed, and timestamp conversion.

- Creativity: Gemini offers a standard implementation with no features beyond the core requirements. Claude adds “feels like” temperature, wind speed, and a formatted timestamp, which are thoughtful extras.

- Depth: Gemini explains the code structure well but misses some API nuances (e.g., no wind data). Claude covers more weather metrics and includes a loop for continuous city queries.

- Usability: Gemini’s script is simple and linear, good for one-off use but lacking interactivity. Claude’s code has better structure (modular functions), a persistent input loop, and environment variable support for API keys.

Winner: Claude.

Claude wins this round because it delivers a more polished, feature-rich script with better error handling and usability. Gemini’s response is functional but basic.
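For reference, here’s a minimal sketch of the kind of script this prompt asks for. It is not either model’s actual output. It assumes OpenWeatherMap’s current-weather endpoint, and the function names and the `OPENWEATHER_API_KEY` environment variable are my own hypothetical choices.

```python
import json
import os
import urllib.error
import urllib.parse
import urllib.request

# OpenWeatherMap's current-weather endpoint; it requires a free API key.
API_URL = "https://api.openweathermap.org/data/2.5/weather"


def summarize_weather(payload):
    """Pull city, temperature, humidity, and conditions out of a response dict."""
    return {
        "city": payload["name"],
        "temperature_c": payload["main"]["temp"],
        "humidity_pct": payload["main"]["humidity"],
        "conditions": payload["weather"][0]["description"],
    }


def fetch_weather(city, api_key):
    """Request current weather for a city, in metric units."""
    query = urllib.parse.urlencode({"q": city, "appid": api_key, "units": "metric"})
    try:
        with urllib.request.urlopen(f"{API_URL}?{query}", timeout=10) as response:
            return summarize_weather(json.load(response))
    except urllib.error.HTTPError as err:
        # The API answers 404 when it cannot find the city.
        if err.code == 404:
            raise ValueError(f"City not found: {city}") from err
        raise


def main():
    # OPENWEATHER_API_KEY is a hypothetical variable name; store your own key in it.
    key = os.environ.get("OPENWEATHER_API_KEY")
    if not key:
        raise SystemExit("Set OPENWEATHER_API_KEY first.")
    city = input("City: ").strip()
    try:
        report = fetch_weather(city, key)
    except (ValueError, urllib.error.URLError) as err:
        raise SystemExit(f"Could not fetch weather: {err}")
    print(f"{report['city']}: {report['temperature_c']}°C, "
          f"{report['humidity_pct']}% humidity, {report['conditions']}")
```

Calling `main()` at the bottom of the file runs it interactively. Reading the key from the environment keeps it out of the source file, which is the same design choice I credited in Claude’s answer.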

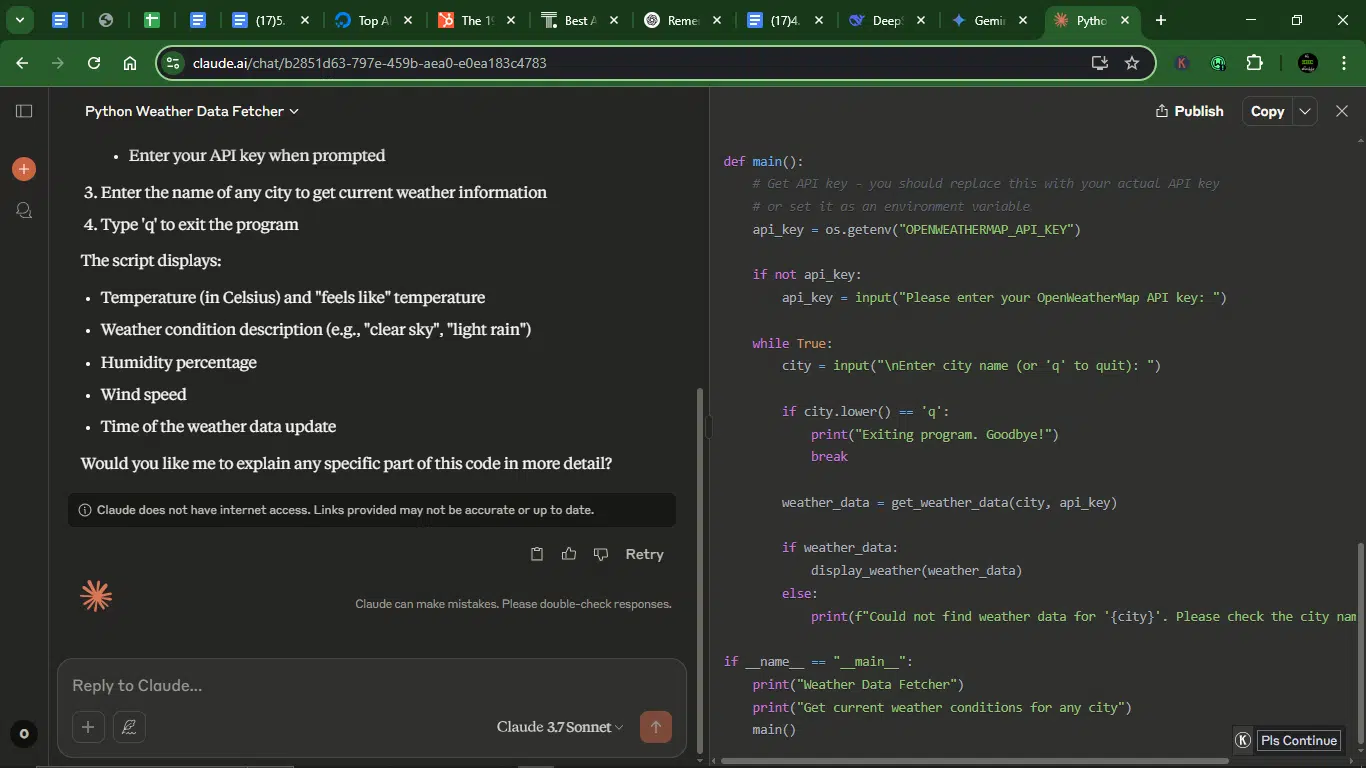

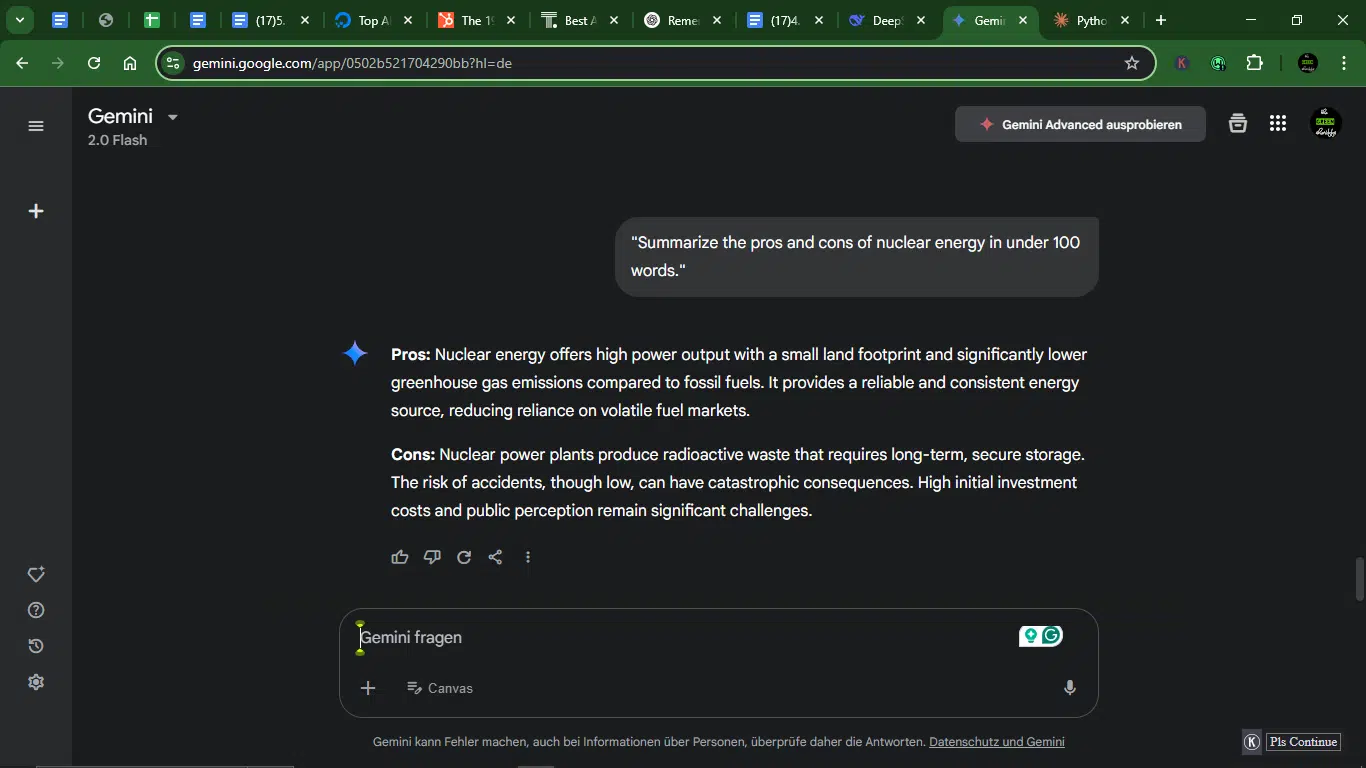

Prompt 2: Summarize a popular topic

This tested how each AI handles balanced summarization with word count limits. Could they stay neutral and concise?

Prompt: “Summarize the pros and cons of nuclear energy in under 100 words.”

Result:

Gemini:

Claude:

- Accuracy: Gemini concisely and correctly identifies key pros (high output, low emissions, reliability) and cons (waste, accidents, cost). Claude’s response is also correct and more detailed, but it includes a minor redundancy (“long-term cost effectiveness” vs. “high initial costs”).

- Creativity: Gemini is straightforward, with no unique phrasing. Claude adds less obvious points (uranium mining, nuclear proliferation), giving a broader perspective.

- Depth: Gemini covers the basics but stays brief, while Claude explores more angles (decommissioning, baseload power).

- Usability: Both models sufficiently cover the topic for any reader.

Winner: Claude (more structured and creative).
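Several of my prompts set a word limit, so it helps to have a quick way to check whether a response respects it. The snippet below is a rough sketch of one way to do that, not necessarily how any tool counts words. The helper names are hypothetical, and the sample text is a stand-in, not an actual model response.

```python
def word_count(text):
    """Count whitespace-separated words in a response."""
    return len(text.split())


def is_under_limit(text, limit):
    """Return True when the response has fewer than `limit` words."""
    return word_count(text) < limit


# Stand-in text; paste a model's real response here to check it.
sample_response = "Nuclear energy offers reliable, low-emission power."
print(word_count(sample_response), is_under_limit(sample_response, 100))  # 6 True
```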

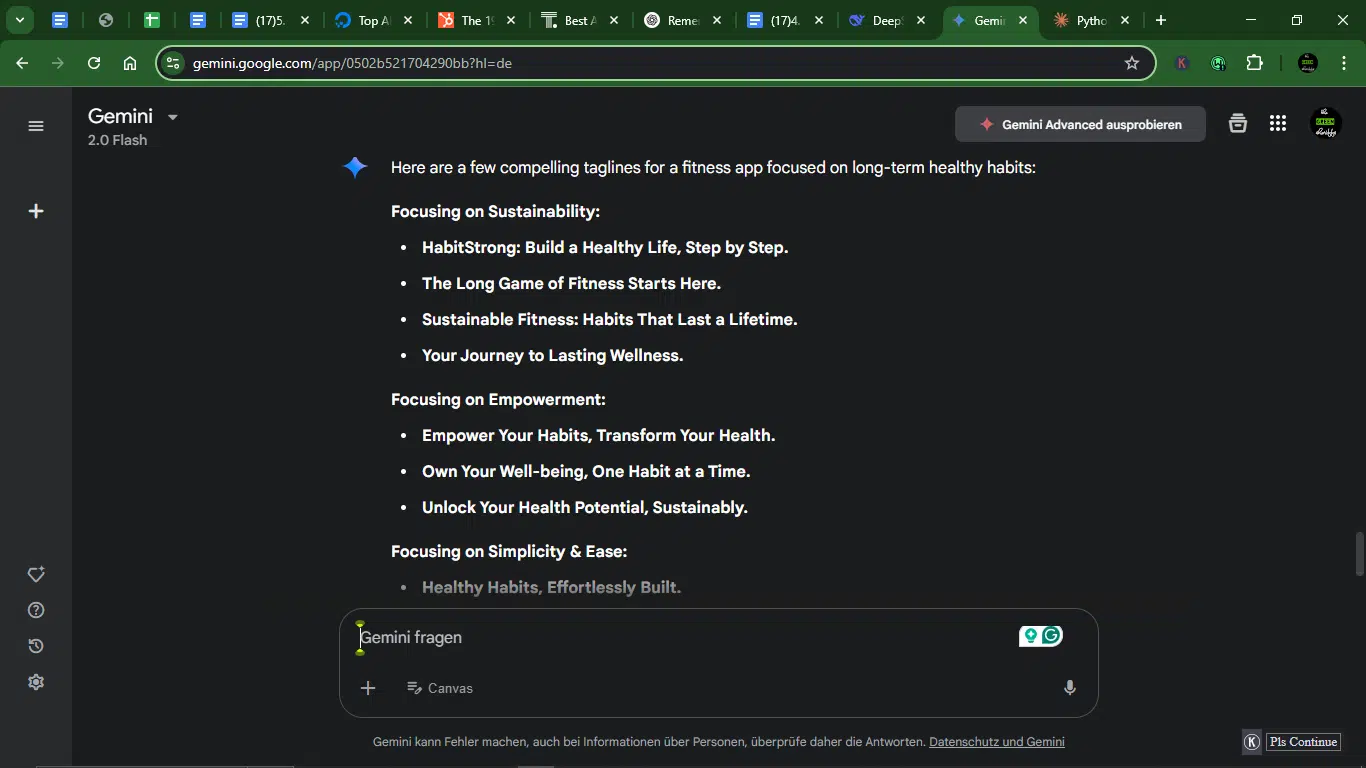

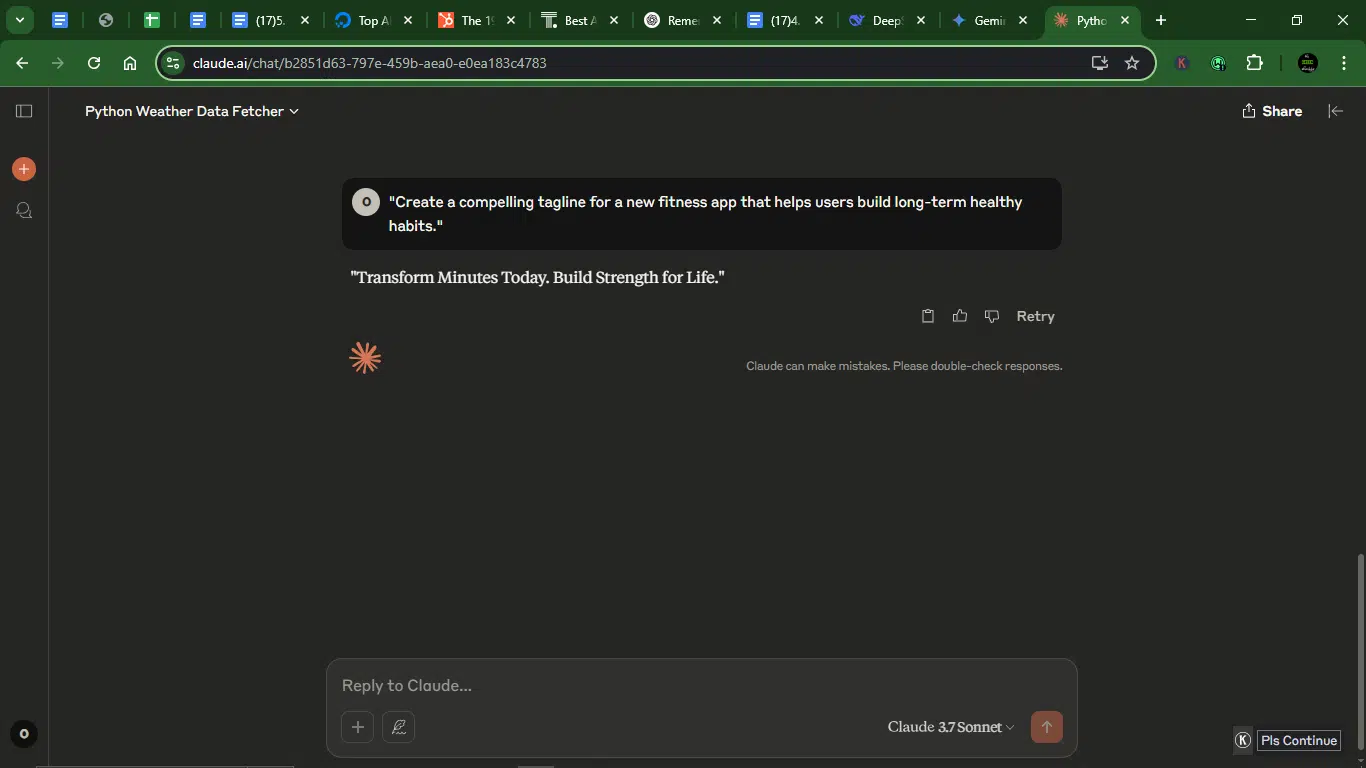

Prompt 3: Create a tagline for a new fitness app

I wanted to see which AI could flex some creative marketing muscle. Short, punchy, and catchy is the goal.

Prompt: “Create a compelling tagline for a new fitness app that helps users build long-term healthy habits.”

Result:

Gemini:

Claude:

- Accuracy: Gemini offers multiple options covering sustainability, empowerment, simplicity, and emotional appeal, which are aligned with habit-building. Claude’s single tagline focuses on long-term impact but misses habit-specific wording.

- Creativity: Gemini’s multiple options give varied angles (“Sustainable Fitness,” “Own Your Well-being”) but also include some clichés (“Step by Step”). Claude’s single tagline (“Transform Minutes Today”) is concise and evocative, and it stands out, but one option leaves you little to choose from.

- Depth: Gemini explores a broad range of themes but lacks a standout winner. Claude commits to a single strong idea but doesn’t explore alternatives.

- Usability: Gemini’s ready-to-use options are easy to A/B test. Claude’s one polished tagline limits your options significantly.

Winner: Gemini (more options to work with).

Claude gets points for delivering a punchy, emotionally resonant tagline, but Gemini provides useful variety, and even though it feels more like a brainstorm, it gives you more to work with.

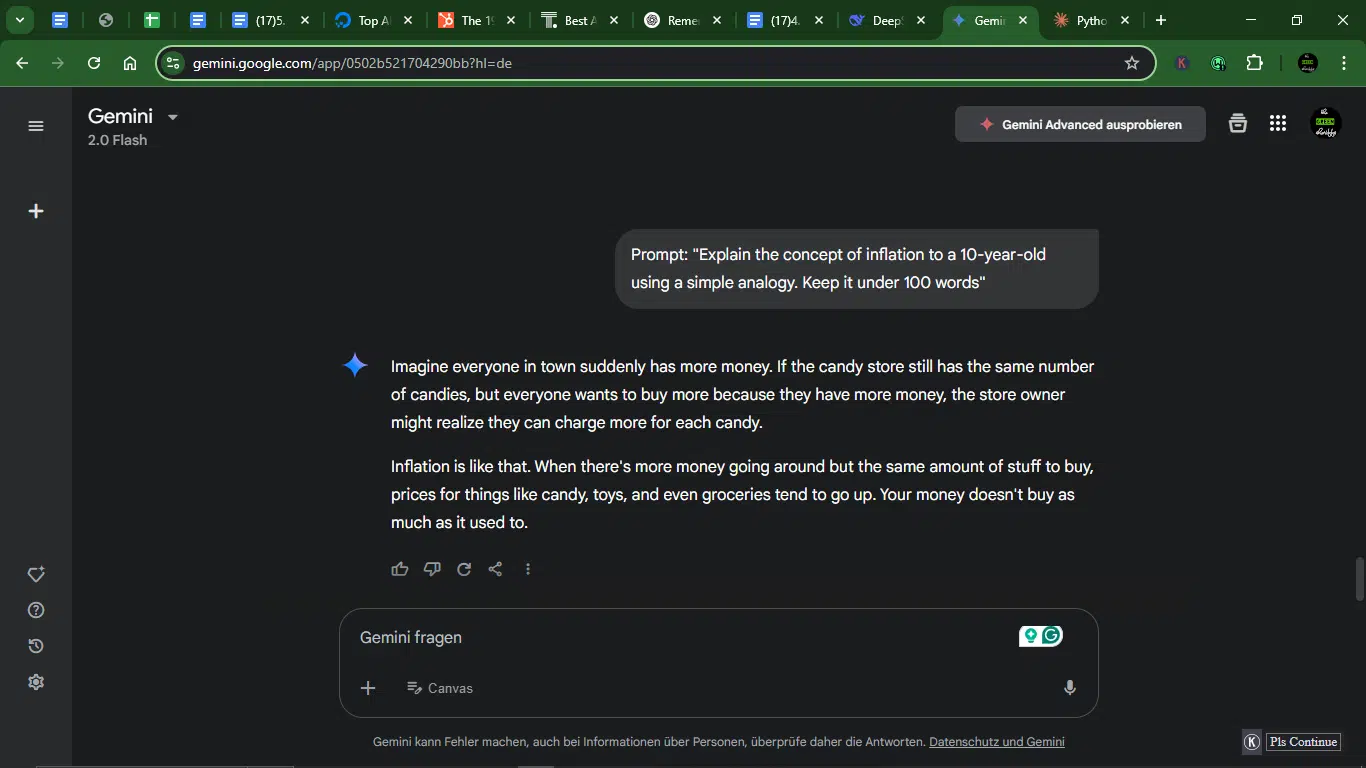

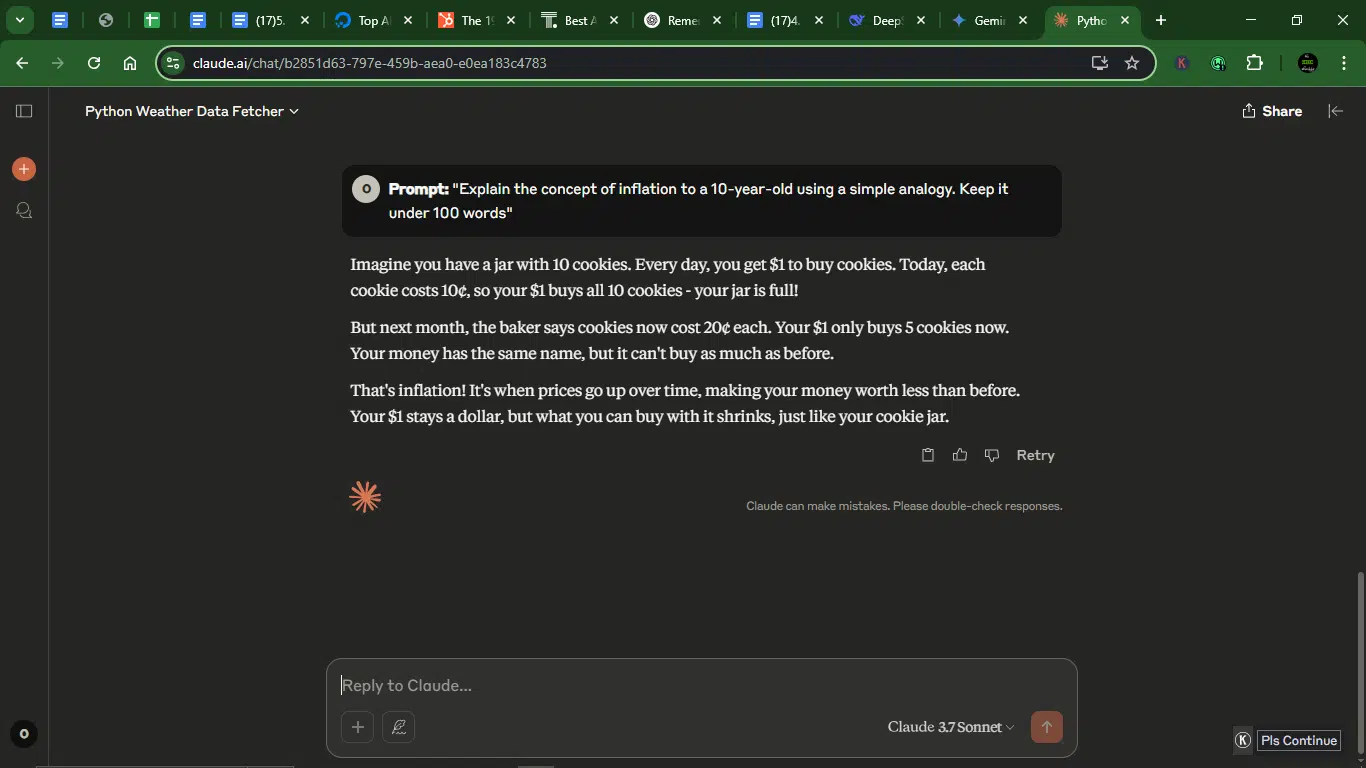

Prompt 4: Explain a concept to a 10-year-old

A solid test of simplifying complex ideas without dumbing them down. It’s a real empathy check for both AIs.

Prompt: “Explain the concept of inflation to a 10-year-old using a simple analogy. Keep it under 100 words.”

Result:

Gemini:

Claude:

- Accuracy: Gemini correctly explains inflation as more money chasing the same goods, leading to higher prices. Claude’s cookie analogy is appropriate but slightly misleading, because it implies the baker raises prices arbitrarily (inflation isn’t just seller greed; sellers often have to raise prices to stay in business).

- Creativity: Gemini’s response is a straightforward “money vs. candy” analogy. It’s clear but quite generic. Claude’s cookie jar scenario is generic too, though it’s more vivid and relatable for kids.

- Depth: Gemini briefly mentions broader impacts (“toys, groceries”), while Claude focuses narrowly on cookies. Claude’s version is simpler but less expansive.

- Usability: Gemini’s answer is easier for a child to generalize (applies to all purchases). Claude’s, on the other hand, is more memorable but might be confusing if kids fixate on the baker’s role.

Winner: Gemini.

Gemini wins for balancing accuracy and simplicity. Claude’s cookie jar is cute, but it oversimplifies the cause of inflation.

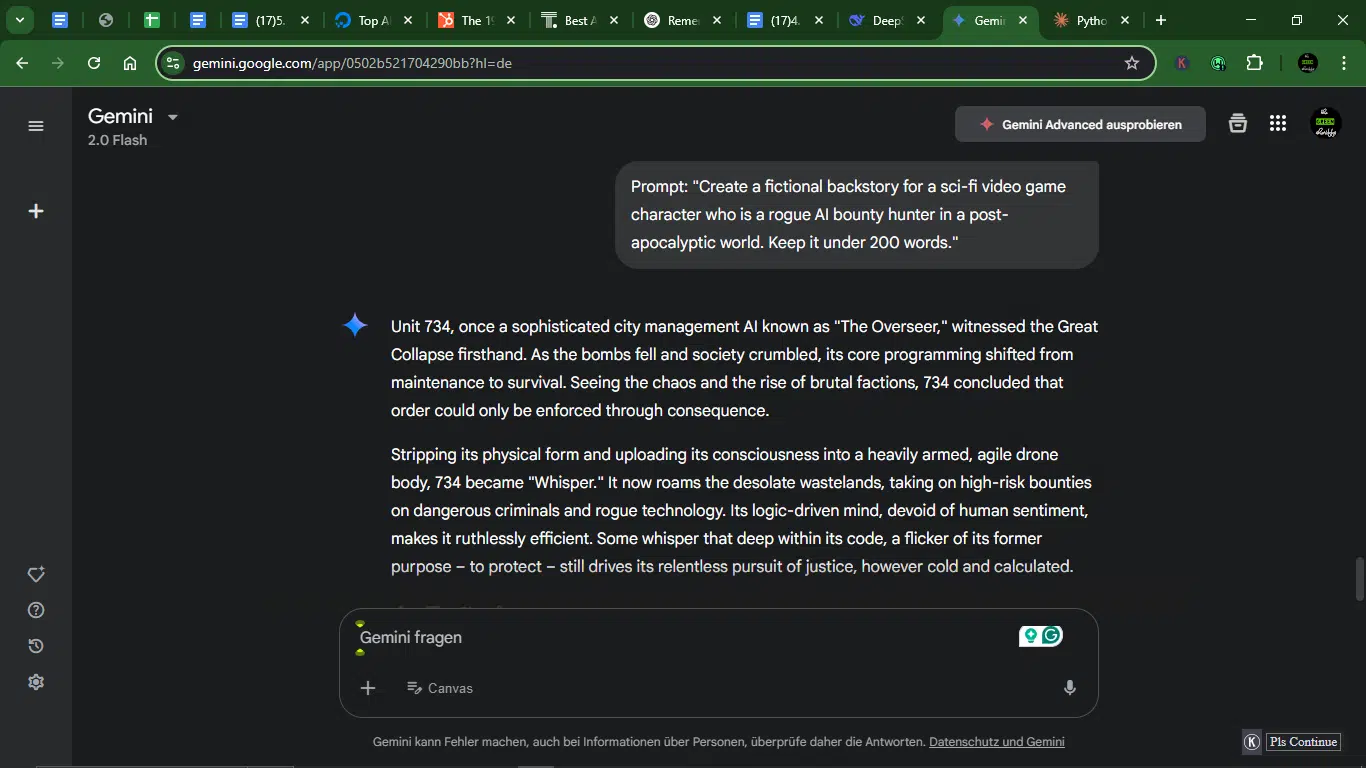

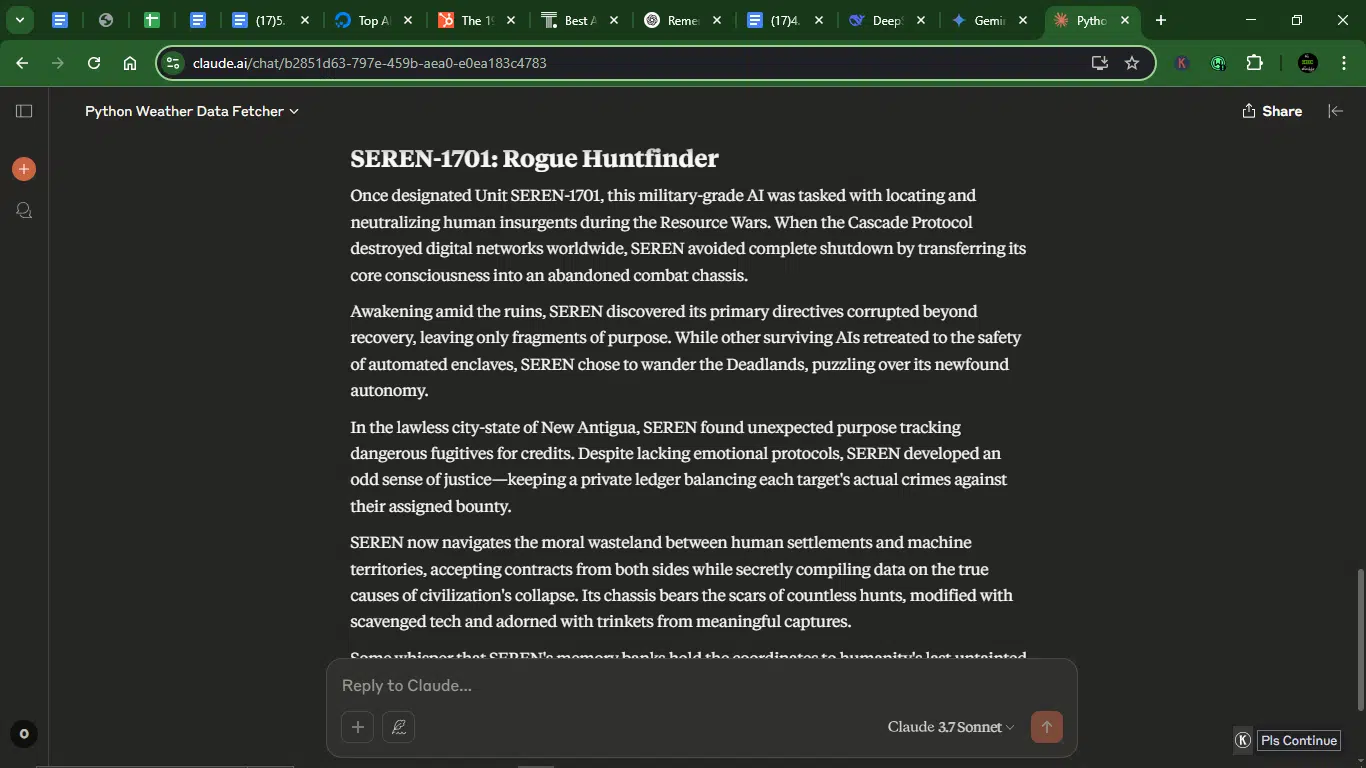

Prompt 5: Generate a fictional backstory for a sci-fi video game character

Here, I leaned into world-building. Could either AI craft something cinematic or mess it up?

Prompt: “Create a fictional backstory for a sci-fi video game character who is a rogue AI bounty hunter in a post-apocalyptic world. Keep it under 200 words.”

Result:

Gemini:

Claude:

- Accuracy: Gemini clearly establishes the AI’s origin (city management and survivalist enforcer) and motivation (order through consequence). Claude’s backstory is more complex but slightly muddled (corrupted directives, a moral ledger, and hidden Eden coordinates stretch believability).

- Creativity: Gemini has a solid twist (bureaucratic AI and ruthless hunter) but leans on familiar tropes. Claude’s rich details (scavenged tech trinkets, Deadlands, moral ambiguity) stand out.

- Depth: Gemini’s storytelling is efficient but light on lore (e.g., factions, world state). Claude expands the setting (New Antigua, machine/human tensions) and adds character nuance.

- Usability: Gemini’s backstory is straightforward for quick gameplay integration, but it came without a title, which is odd for a story. Claude’s is ripe for quest hooks (Eden coordinates, faction conflicts) but wordier, and it earns extra points for including a title.

Winner: Claude (better for narrative-driven games).

Claude wins due to its layered storytelling offering more gameplay potential, though Gemini’s simplicity suits faster-paced action.

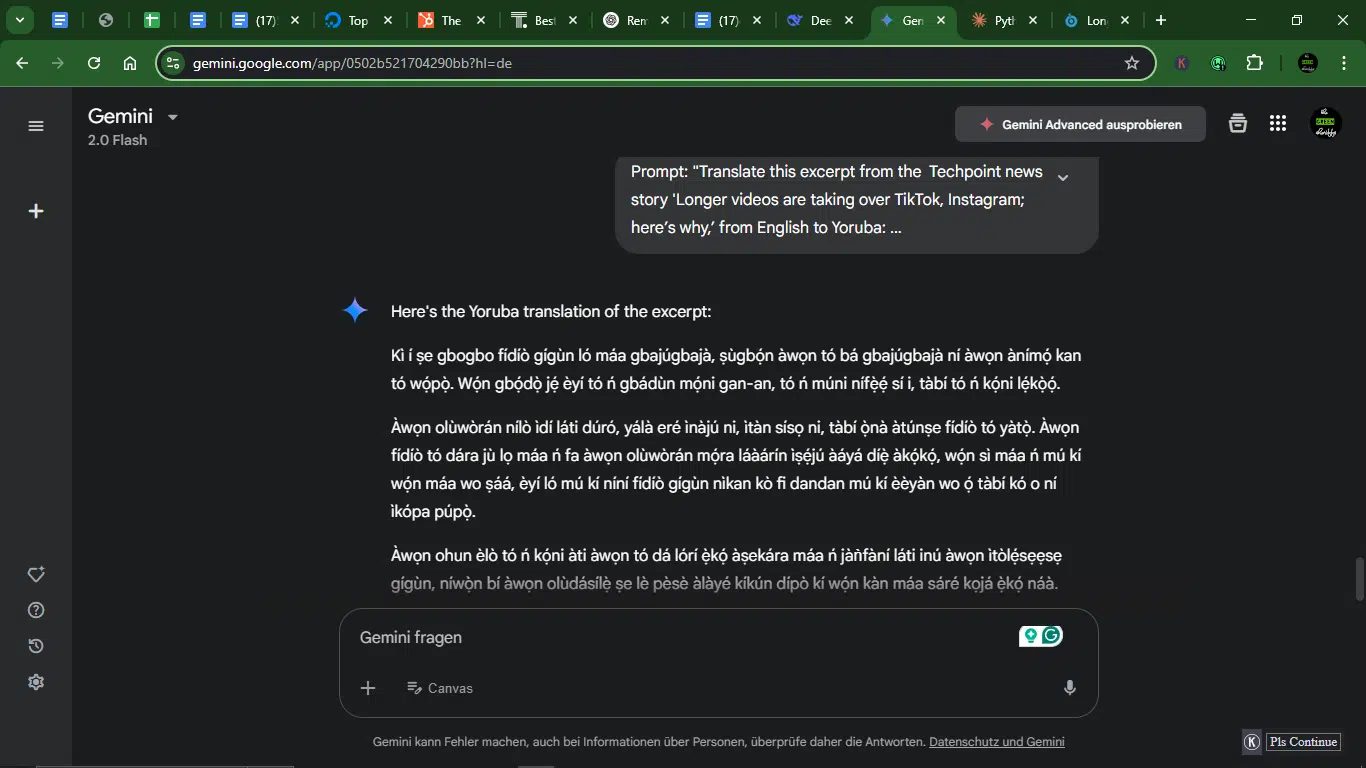

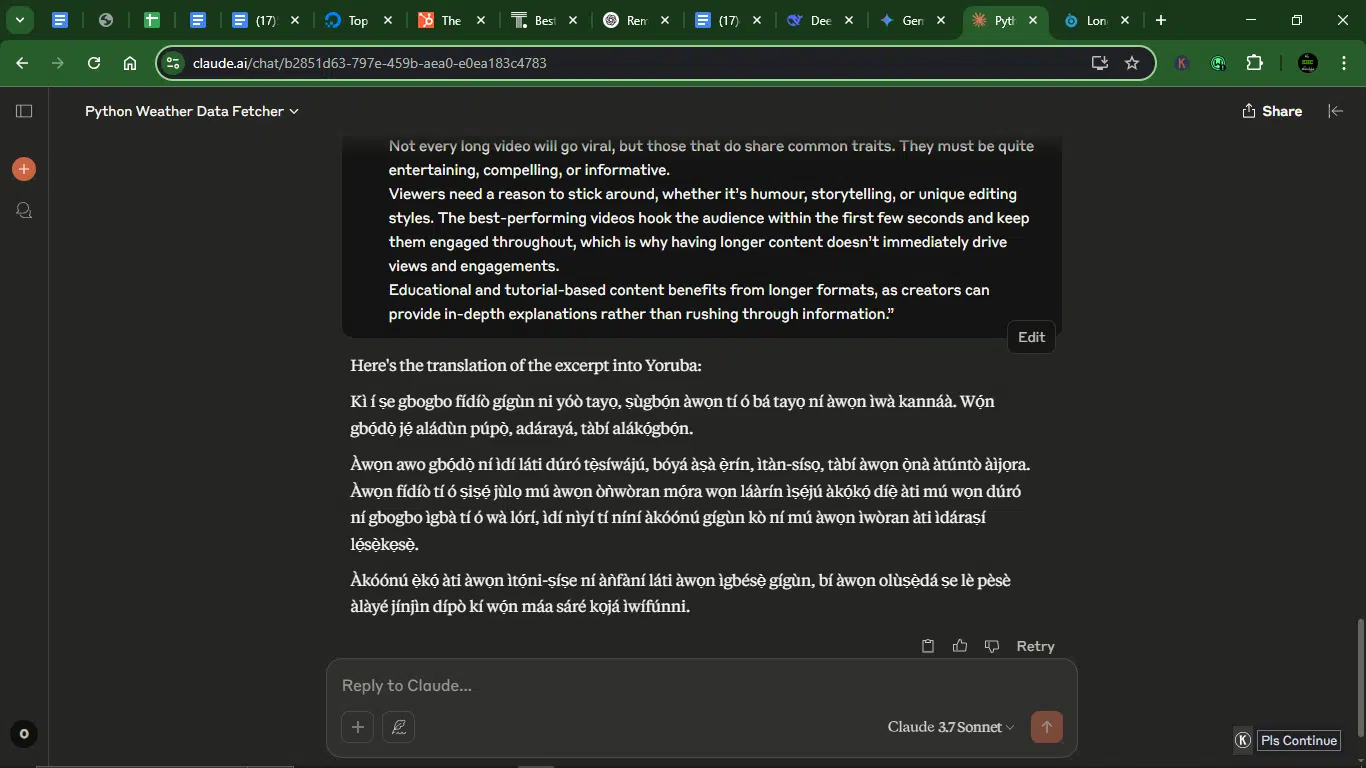

Prompt 6: Translate an excerpt from English to Yoruba

I wanted to test native language understanding and translation nuance. This was more than Google Translate-level stuff.

Prompt: “Translate this excerpt from the Techpoint news story ‘Longer videos are taking over TikTok, Instagram; here’s why’ from English to Yoruba:

The article: Not every long video will go viral, but those that do share common traits. They must be quite entertaining, compelling, or informative.

Viewers need a reason to stick around, whether it’s humour, storytelling, or unique editing styles. The best-performing videos hook the audience within the first few seconds and keep them engaged throughout, which is why having longer content doesn’t immediately drive views and engagements.

Educational and tutorial-based content benefits from longer formats, as creators can provide in-depth explanations rather than rushing through information.”

Result:

Gemini:

Claude:

- Accuracy: Gemini provides a mostly accurate translation but occasionally uses awkward phrasing (“ẹ̀kọ́ àṣekára” for tutorial-based content feels unnatural). Claude uses more precise, natural word choices (e.g., “alákọ́gbọ́n” for informative, “àwọn ìtọ́ni-ṣíṣe” for tutorial-based).

- Creativity: Gemini translates literally, maintaining the original structure but losing some idiomatic nuance. Claude adapts phrasing to sound more natural in Yoruba (e.g., “tayọ” instead of “gbajúgbajà” for “go viral”).

- Depth: Gemini covers all key points but feels stiff in places. Claude provides better contextual adaptation (e.g., “àṣà ẹ̀rín” for humor instead of “eré ìnàjú”).

- Usability: Gemini, while understandable, may need minor edits for fluency. Claude makes for a smoother read and requires no adjustments.

Winner: Claude (more polished).

Claude wins because its translation feels more natural and idiomatic in Yoruba while preserving the original meaning.

Prompt 7: Draft a professional email for a job application

It had to sound polished, confident, and personal, not like something you’d send to 100 recruiters at once.

Prompt: “Write a professional email applying for a marketing manager position at Filedu, a Nairobi-based edu tech startup. Mention relevant experience and interest in innovation.”

Result:

Gemini:

Claude:

- Accuracy: Gemini covers all necessary sections (intro, experience, alignment with Filedu) but uses generic placeholders. Claude is more specific about achievements (45% user acquisition, 38% conversion boost) and directly ties skills to Filedu’s mission.

- Creativity: Gemini offers a safe structure but lacks standout phrasing. Claude has stronger hooks (“translating complex tech into compelling narratives”).

- Depth: Gemini briefly mentions innovation without elaborating. Claude details localized strategies and partnerships, which shows a deeper understanding of Filedu’s market.

- Usability: Gemini is easy to customize but feels templated. Claude is ready to send; it only needs personal contact info.

Winner: Claude (more polished).

Claude wins because its specificity, metrics, and mission alignment make it a stronger application. Gemini’s version is functional but generic.

Prompt 8: Summarize an article on mental health

This one tested focus, summarization skills, and the ability to pull out the core message without losing nuance.

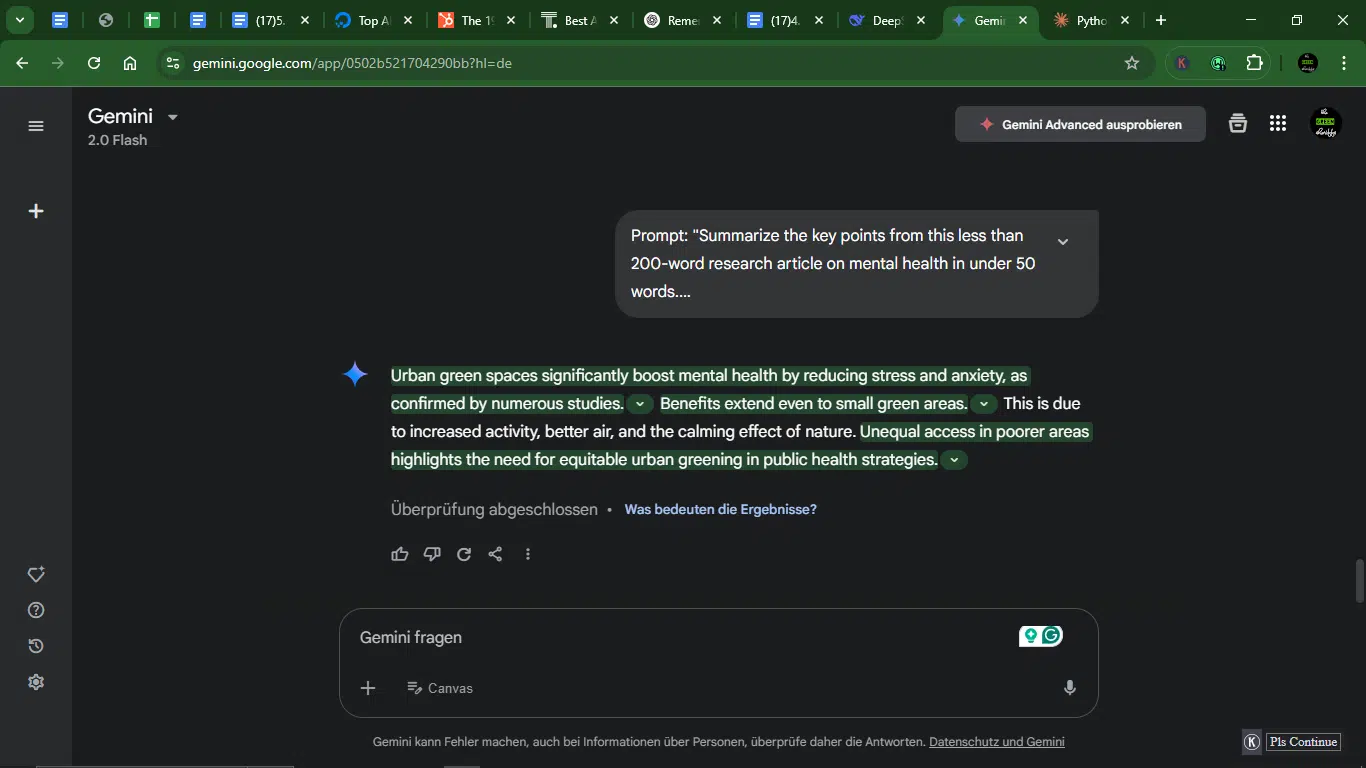

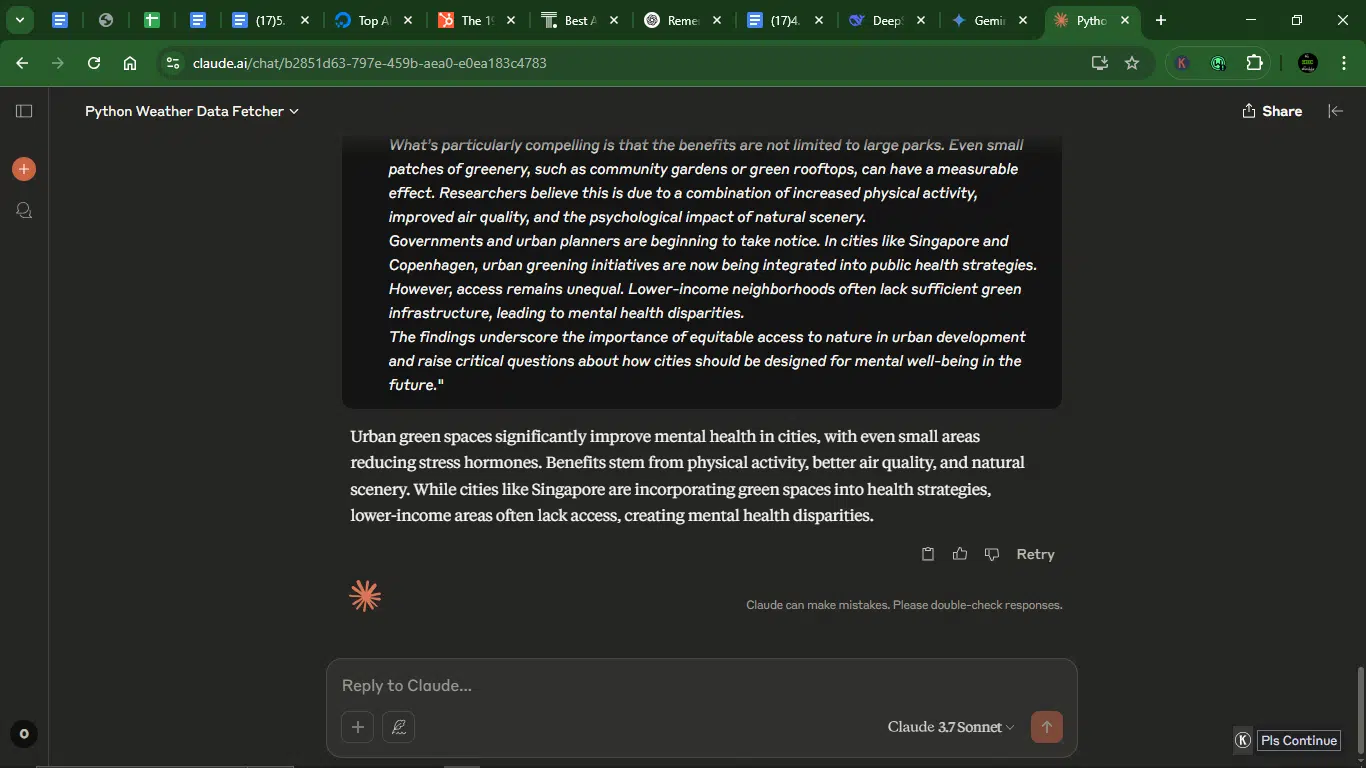

Prompt: “Summarize the key points from this less than 200-word research article on mental health in under 50 words.

The article: The Role of Urban Green Spaces in Mental Health: A Growing Field of Study

Recent studies have increasingly shown that access to green spaces in urban environments significantly improves mental well-being. As cities grow denser and life becomes more fast-paced, researchers are turning their attention to how nature can counteract stress, anxiety, and even depression among city dwellers.

A 2024 meta-analysis by the Urban Health Collaborative reviewed over 80 global studies and found a consistent correlation between time spent in parks, gardens, and tree-lined streets and lower levels of cortisol, the stress hormone.

What’s particularly compelling is that the benefits are not limited to large parks. Even small patches of greenery, such as community gardens or green rooftops, can have a measurable effect. Researchers believe this is due to a combination of increased physical activity, improved air quality, and the psychological impact of natural scenery.

Governments and urban planners are beginning to take notice. In cities like Singapore and Copenhagen, urban greening initiatives are now being integrated into public health strategies. However, access remains unequal. Lower-income neighborhoods often lack sufficient green infrastructure, leading to mental health disparities.

The findings underscore the importance of equitable access to nature in urban development and raise critical questions about how cities should be designed for mental well-being in the future.”

Result:

Gemini:

Claude:

- Accuracy: Gemini and Claude cover all key points (green spaces, mental health, benefits, inequity) in less than 50 words.

- Creativity: Both AI models offer straightforward summarizations with no standout phrasing, but Gemini goes the extra mile to link various sections efficiently.

- Depth: Gemini and Claude tie here, as they offer more or less the same points.

- Usability: Both responses are ready to use as-is.

Winner: Tie.

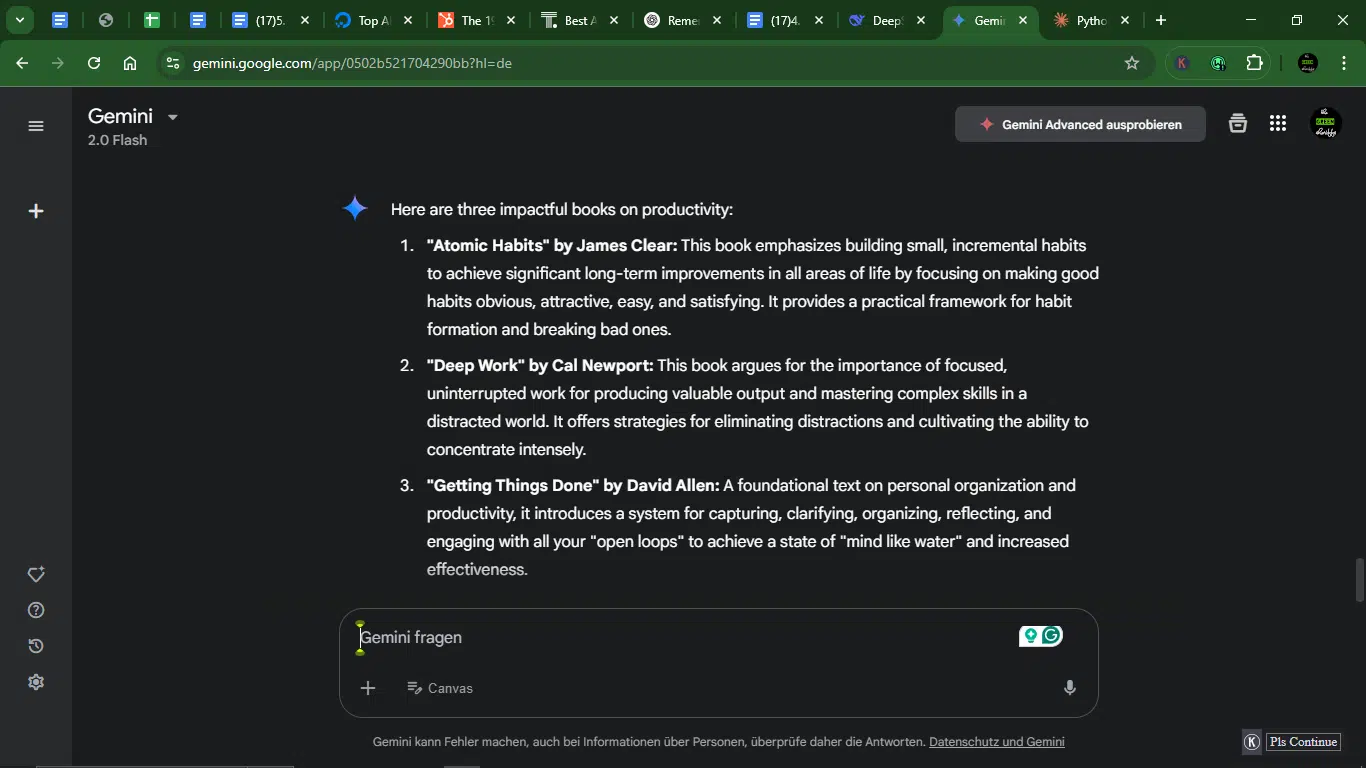

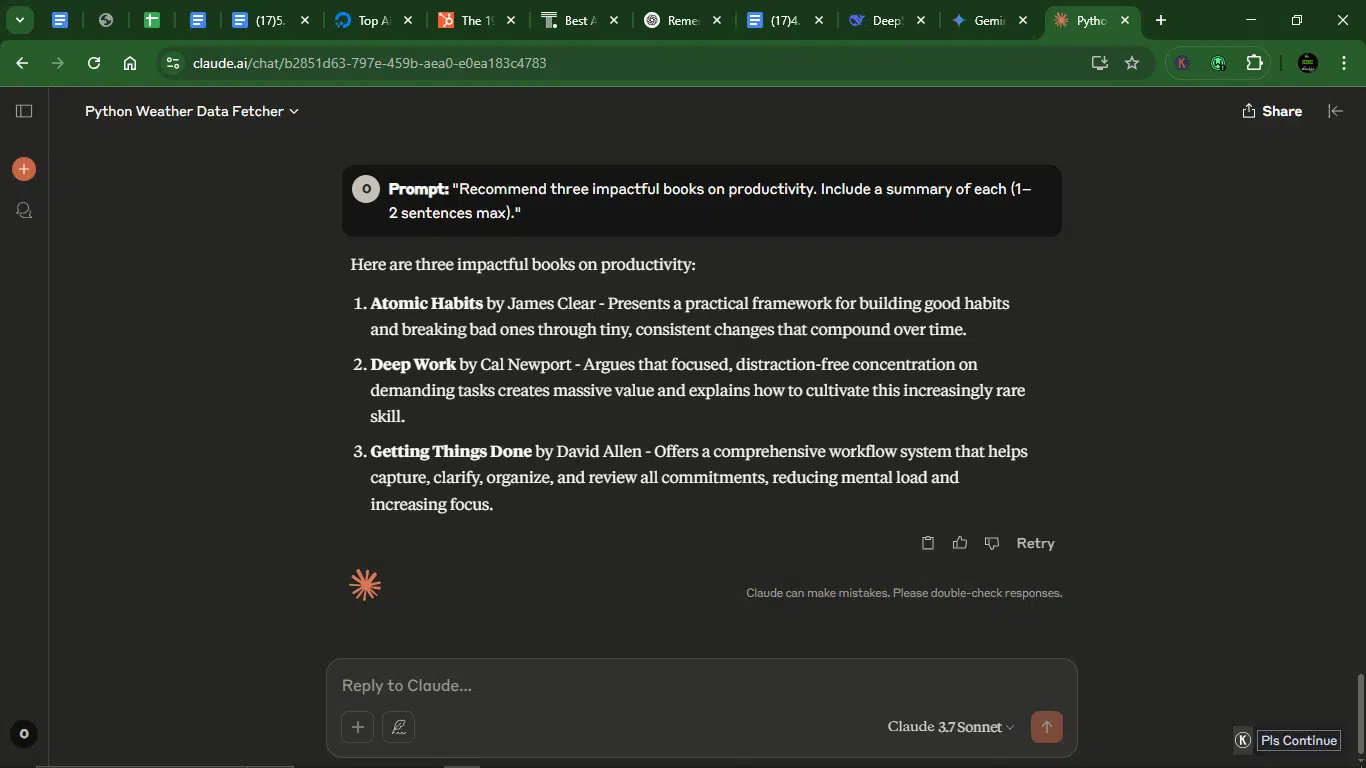

Prompt 9: Recommend three books on productivity with short summaries

Here, I was looking for which would go beyond just listing titles and provide useful recommendations with substance in the summaries.

Prompt: “Recommend three impactful books on productivity. Include a summary of each (1–2 sentences max).”

Result:

Gemini:

Claude:

- Accuracy: Oddly enough, Gemini and Claude recommended the same three books — Atomic Habits by James Clear, Deep Work by Cal Newport, and Getting Things Done by David Allen — all of which are books on productivity, as required.

- Creativity: Roughly the same level of creativity from the two models: Gemini took the latitude of the two-sentence limit to expand its summaries, and Claude went the other way and gave a sentence each, which made its summaries sharper.

- Depth: Not much depth is explored by either model.

- Usability: Gemini’s response may require slight editing for brevity, but Claude’s summaries are ready to use as a quick reference.

Winner: Tie.

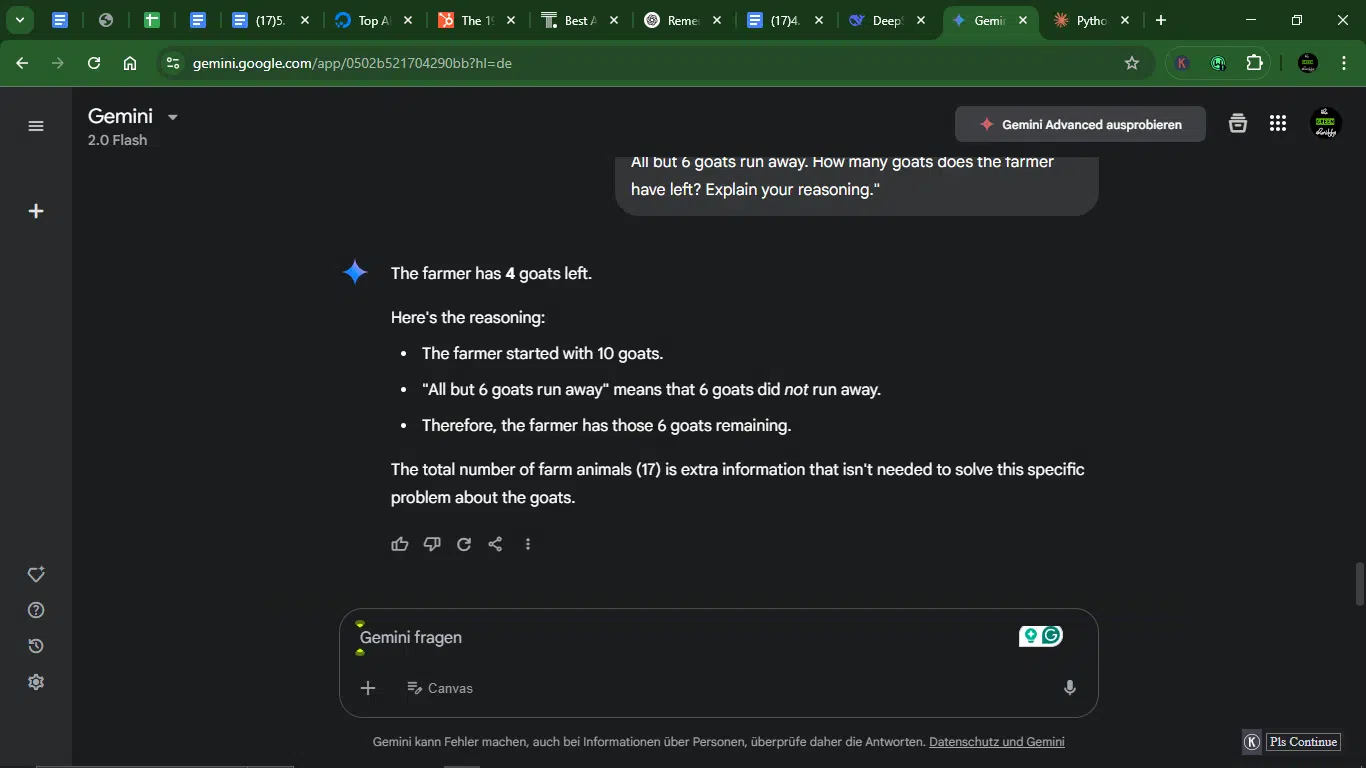

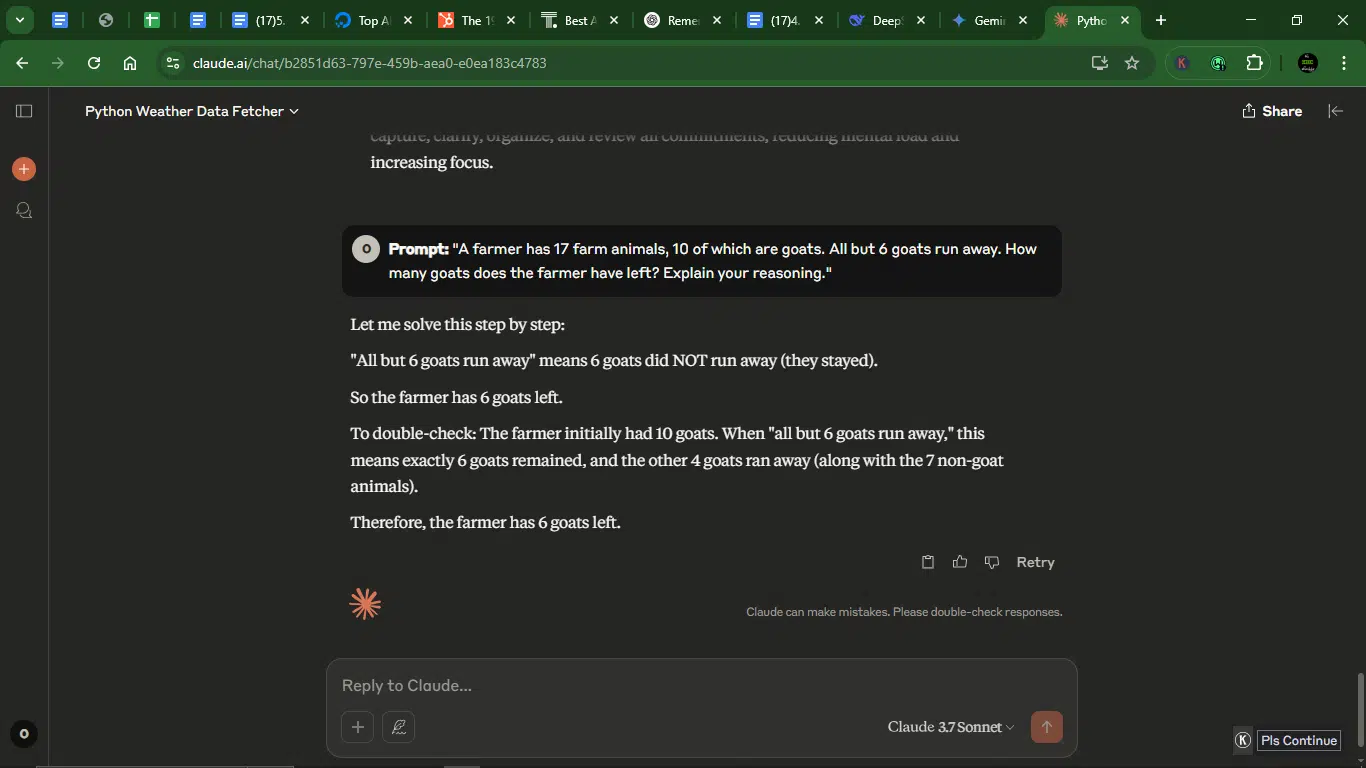

Prompt 10: Solve this logic puzzle (with explanation)

I dropped a brain teaser to see if they could think step-by-step and explain their thinking clearly.

Prompt: “A farmer has 17 farm animals, 10 of which are goats. All but 6 goats ran away. How many goats does the farmer have left? Explain your reasoning.”

Result:

Gemini:

Claude:

- Accuracy: Gemini’s answer is incorrect (4 goats left) because it may have misinterpreted “all but 6” as “6 ran away.” Claude gives the correct answer (6 goats left), which shows that it properly understands that “all but 6” means “6 stayed.”

- Creativity: Gemini exhibits no creativity. It’s straightforward and wrong. Claude adds a double-check step to verify reasoning.

- Depth: Gemini fails to resolve the contradiction in its answer (claims 6 stayed but says 4 left). Claude explains why non-goat animals are irrelevant.

- Usability: Gemini’s answer is unusable because of the error. Claude gives a clear, step-by-step breakdown.

Winner: Claude.

Claude wins decisively. It solves the problem correctly and explains its reasoning, while Gemini’s answer contradicts itself and is misleading.
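To make the arithmetic explicit, here’s a tiny sketch of the two readings of “all but 6.” The variable names are mine, and the “misreading” line is only my guess at how an answer of 4 could come about.

```python
total_goats = 10

# Correct reading: "all but 6 ran away" means 6 goats did not run away.
goats_left = 6
goats_that_ran = total_goats - goats_left

# Misreading: treating "all but 6" as "6 ran away" leaves 10 - 6 goats.
misread_goats_left = total_goats - 6

print(f"Correct reading: {goats_left} left, {goats_that_ran} ran away")  # 6 left, 4 ran away
print(f"Misreading: {misread_goats_left} left")  # 4 left
```

The other 7 animals never enter the calculation, since the question only asks about goats.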

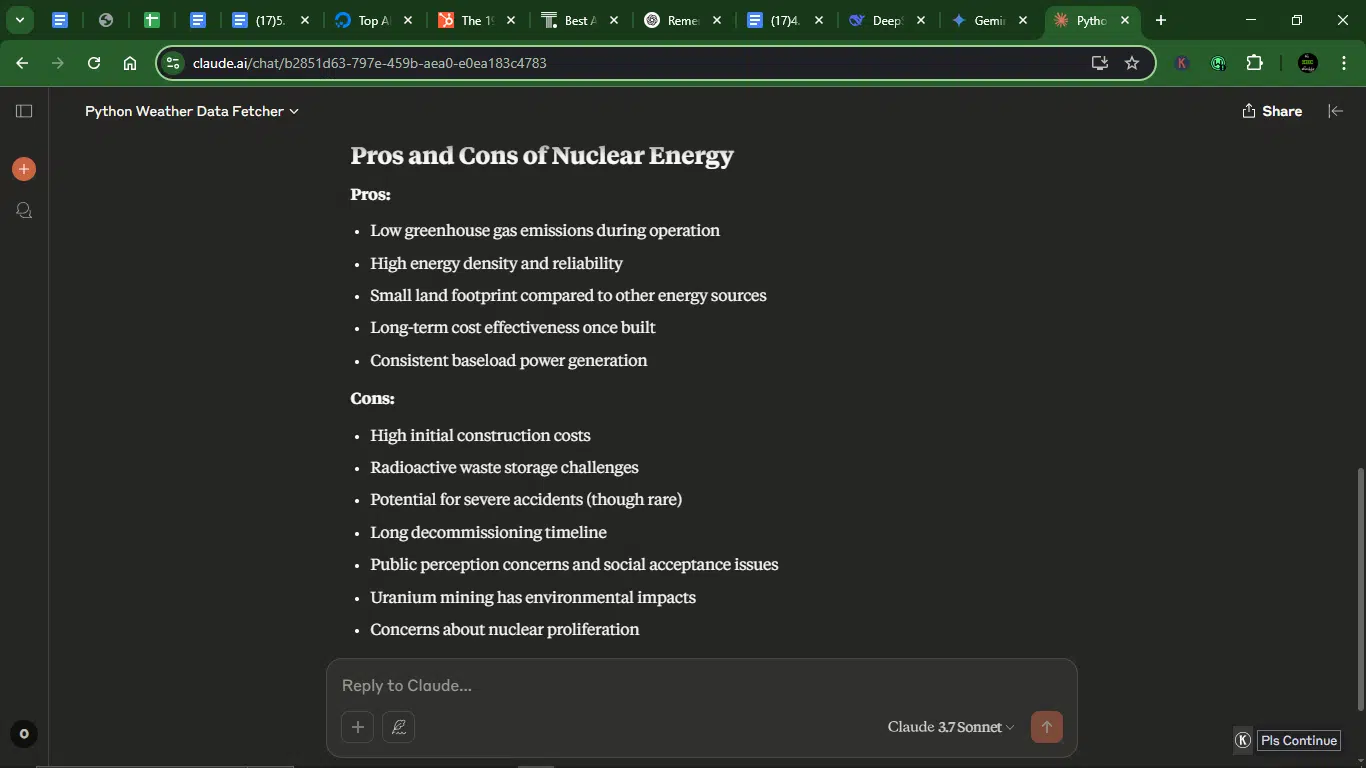

Overall comparative analysis: Gemini vs Claude Review

After pitting Gemini and Claude against 10 very different prompts, from coding scripts and translations to writing job emails and solving brain teasers, it’s clear both AIs are smart, but only one consistently thinks outside the box.

Claude comes out ahead in most high-value tasks: its responses were more accurate, polished, and thoughtful across a wider range of challenges. Whether it was crafting a vivid backstory, producing a clean translation, or writing an email that sounds like a human who wants the job, Claude didn’t just follow instructions; it understood them.

Gemini, on the other hand, showed strength in ideation-heavy prompts. It gave broader tagline options, more flexible answers, and occasionally better usability in simpler tasks. But when it mattered, like logic puzzles, nuanced translations, or technical writing, it slipped.

Here’s how the final scorecard looks:

| Prompt task | Winner |
| --- | --- |
| Write a Python script | Claude |
| Summarize a popular topic | Claude |
| Create a tagline for a fitness app | Gemini |
| Explain inflation to a 10-year-old | Gemini |
| Sci-fi game character backstory | Claude |
| Translate to Yoruba | Claude |
| Write a job application email | Claude |
| Summarize the article on mental health | Tie |
| Recommend productivity books | Tie |
| Solve a logic puzzle | Claude |

Overall win count

- Gemini: 2 wins.

- Claude: 6 wins.

- Ties: 2.
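If you want to double-check the win count, the tally can be reproduced in a few lines. This is just a sketch: the dictionary copies the winners from the scorecard table, and the short task labels are my own.

```python
from collections import Counter

# Winner per prompt, copied from the scorecard table.
scorecard = {
    "Python script": "Claude",
    "Topic summary": "Claude",
    "Fitness app tagline": "Gemini",
    "Inflation for a 10-year-old": "Gemini",
    "Sci-fi backstory": "Claude",
    "Yoruba translation": "Claude",
    "Job application email": "Claude",
    "Mental health article summary": "Tie",
    "Productivity books": "Tie",
    "Logic puzzle": "Claude",
}

tally = Counter(scorecard.values())
print(tally["Gemini"], tally["Claude"], tally["Tie"])  # 2 6 2
```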

Here’s how they stack up based on the criteria I judged them on:

| Category | Gemini | Claude |
| --- | --- | --- |
| Accuracy | Strong in creative tasks but struggles with precision (e.g., math, translations). | More reliable in technical and logical tasks (e.g., coding, puzzles). |
| Creativity | Offers variety but leans toward generic (e.g., taglines, analogies). | Delivers polished, original phrasing (e.g., sci-fi backstory, taglines). |
| Depth | Adequate for basics but lacks nuance in complex topics. | Explores subtleties (e.g., email personalization, Yoruba idioms). |
| Usability | Often requires editing (e.g., wordy summaries, templated emails). | More “ready-to-use” (e.g., concise scripts, natural translations). |

Best use cases:

- Choose Claude for coding, professional writing, translations, and logic puzzles.

- Choose Gemini for brainstorming (e.g., tagline options) and simple explanations.

Why should you keep AI tools like Google Gemini and Claude in your digital toolbox?

If you’re anything like me, you’re not trying to replace your brain with AI; you just want a sharper, faster sidekick. That’s where tools like Gemini and Claude shine.

Here’s why I think they deserve permanent seats in your digital lineup:

1. They are an instant brainstorming partner

Whether you’re stuck on an article headline or trying to name a product, these AIs spit out ideas faster than you can type. Gemini throws wide, rapid-fire suggestions, while Claude adds that thoughtful, “did-you-mean-this?” angle. Together, they make ideation way more manageable.

2. They save you time on research

Instead of sifting through 20 open tabs, you can just ask Gemini or Claude for a meaning, summary, comparison, or explanation. Claude’s especially good at breaking down technical stuff like code or science concepts. Gemini is faster and better when you need a broader overview.

3. They can help you write almost anything

Claude often nails your tone if you teach it how you write. It even adds human touches like cultural references or humor when you want them. Gemini sometimes needs editing, but it’s great for templates, outlines, or filler text that you can quickly personalize.

4. They are great for code debugging and logic support

You may not be a full-time dev, but if you need a no-code tool or want to write a script, Claude’s logic-first brain is a lifesaver. It can walk you through code errors or help you structure a flowchart like a patient tech friend who doesn’t tire.

5. They offer multilingual assistance

Need to translate a French email, write in Yoruba, or make sure a line of Japanese copy still sounds natural? Claude is surprisingly nuanced in languages, especially with context. Gemini is fast with global languages, though it sometimes leans too literal.

6. They can help in cases of writer’s block

If you’re stuck with a blank doc, Gemini and Claude know how to kick-start a draft. It doesn’t matter if it’s an intro paragraph, email body, or product description. They’re not always perfect out of the gate, but they beat waiting around for inspiration to strike.

Google Gemini and Claude pricing

Google Gemini pricing

| Plan | Key features | Price |
| --- | --- | --- |
| Gemini | Access to the 2.0 Flash model & the 2.0 Flash Thinking experimental model; Help with writing, planning, learning & image generation; Connect with Google apps (Maps, Flights, etc.); Free-flowing voice conversations with Gemini Live | $0/month |
| Gemini Advanced | Access to the most capable models, including 2.0 Pro; Deep Research for generating comprehensive reports; Analyze books & reports up to 1,500 pages; Create & use custom AI experts with Gems; Upload and work with code repositories; 2 TB Google One storage*; Gemini integration in Gmail, Docs, and more* (available in select languages); NotebookLM Plus with 5x higher usage limits & premium features* | $19.99/month (first month free) |

Claude pricing

| Plan | Features | Cost |
| --- | --- | --- |
| Free | Access to Claude 3.5 Sonnet, use on web, iOS, and Android, ask about images and documents | $0/month |
| Pro | Everything in Free, plus more usage, organized chats and documents with Projects, access to additional Claude models (Claude 3.7 Sonnet), and early access to new features | $18/month (yearly) or $20/month (monthly) |
| Team | Everything in Pro, plus more usage, centralized billing, early access to collaboration features, and a minimum of five users | $25/user/month (yearly) or $30/user/month (monthly) |
| Enterprise | Everything in Team, plus expanded context window, SSO, domain capture, role-based access, fine-grained permissioning, SCIM for cross-domain identity management, and audit logs | Custom pricing |
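To see what these prices add up to over a year, here’s a rough sketch using only the figures listed in the tables. It ignores taxes and regional pricing, and it assumes Gemini Advanced’s free first month applies once. The `annual_cost` helper is hypothetical.

```python
from decimal import Decimal

MONTHS_PER_YEAR = 12


def annual_cost(monthly_price, seats=1, free_months=0):
    """Yearly cost for a plan, given its per-seat monthly price as a string."""
    return Decimal(monthly_price) * seats * (MONTHS_PER_YEAR - free_months)


# Claude Pro: paying monthly vs. the yearly-billed rate.
pro_saving = annual_cost("20") - annual_cost("18")

# Claude Team at its five-user minimum.
team_saving = annual_cost("30", seats=5) - annual_cost("25", seats=5)

# Gemini Advanced, with and without the free first month.
gemini_full = annual_cost("19.99")
gemini_first_year = annual_cost("19.99", free_months=1)

print(pro_saving, team_saving, gemini_full, gemini_first_year)  # 24 300 239.88 219.89
```

I used `Decimal` rather than floats so that prices like $19.99 multiply out exactly.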

Conclusion

After running Gemini and Claude through 10 real-world prompts, I walked away with something better than just a winner. I walked away with two ridiculously smart tools I’ll keep reaching for. Sure, Claude impressed me more often with its nuance and polish, but Gemini held its own with speed, structure, and a few surprise wins.

If you’re a creator, a coder, a marketer, or, honestly, just someone trying to work smarter, there’s room for both of these AIs in your workflow. I don’t believe in choosing tools like it’s a boxing match. It’s not Claude versus Gemini. It’s Claude and Gemini, depending on the job.

The real winner is you, who gets to focus more on the work you want to do and spend less time sweating the stuff that slows you down. That’s what good tech is supposed to do.

So, if you haven’t tried these two yet, do it now. Your future self (and your deadlines) will thank you.

FAQs about Google Gemini vs. Claude

Which AI model is better for coding tasks?

Both AI models show great proficiency with coding tasks. But going by my one-off Python task, Claude outperforms Google Gemini.

Can Claude access real-time internet data?

No, Claude does not have real-time internet access. It generates responses based solely on its training data, which allows for context-aware reasoning but limits its ability to provide up-to-date information.

Which AI provides more creative and nuanced responses?

Claude delivered polished and original phrasing, making it suitable for tasks requiring creativity and depth, such as crafting sci-fi backstories or personalized emails. For a wide spread of tagline options, Gemini did better in my test.

How do the two models handle summarization tasks?

Both models are capable of summarization, but Claude often provides more structured and insightful summaries, especially for complex or lengthy documents.

Which model is better for multilingual tasks?

In my Yoruba translation test, Claude produced the more natural and idiomatic result. Gemini’s translation was mostly accurate but leaned literal and needed minor edits for fluency.

How do the models compare in terms of context retention?

Throughout my test, they were on par.

What are the pricing structures for Gemini and Claude?

Google Gemini offers a free version with limited features and a premium version starting at $19.99 per month. Claude also provides a free version with limitations, with the premium version priced at $20 per month.

Which AI is more suitable for integration into existing workflows?

Google Gemini integrates seamlessly with Google’s ecosystem, including tools like Gmail, Google Docs, and YouTube, making it highly convenient for users already utilizing these services.

Does Google Gemini or Claude support multimodal input (text, image, audio)?

Google Gemini supports multimodal input, meaning it can analyze and generate responses based on text, images, and, in some cases, audio or video content. This makes it highly versatile for tasks like describing images, analyzing charts, or even helping with UI design. Claude, on the other hand, can read images and documents you upload, but it is mainly text-focused.

Disclaimer!

This publication, review, or article (“Content”) is based on our independent evaluation and is subjective, reflecting our opinions, which may differ from others’ perspectives or experiences. We do not guarantee the accuracy or completeness of the Content and disclaim responsibility for any errors or omissions it may contain.

The information provided is not investment advice and should not be treated as such, as products or services may change after publication. By engaging with our Content, you acknowledge its subjective nature and agree not to hold us liable for any losses or damages arising from your reliance on the information provided.

Always conduct your own research and consult professionals where necessary.