Choosing the right large language model (LLM) from today’s crowded field is a headache. There are now more of them than you can count on your fingers and toes, and new ones keep arriving. That’s tech for you.

DeepSeek and Claude AI are two of the most talked-about LLMs in 2025, each boasting impressive natural language understanding, coding assistance, and creative content generation capabilities. But which one truly stands out when put to the test? To find out, I ran both AIs through 10 real-world prompts covering everything from blog writing and coding to translation and motivational messages.

This hands-on comparison reveals their strengths, weaknesses, and unique features, helping you decide which AI fits your needs best. Whether you’re a developer, content creator, or just interested in learning about LLM AI, this detailed showdown between DeepSeek and Claude AI offers honest insights and practical takeaways.

Let’s head into this and see which AI comes out on top.

TL;DR Key takeaways from the comparison

- Claude AI leads in speed and creativity, delivering faster responses and more engaging, human-like content, especially in coding, social media captions, and email writing.

- DeepSeek excels in technical accuracy and detailed reasoning, making it ideal for data-heavy tasks, thorough research, and precise code explanations, though it responds more slowly.

- Both AIs perform well in language tasks, but Claude offers more precise, conversational explanations, while DeepSeek provides richer references and deeper topic coverage.

- Claude’s coding output is versatile and user-friendly, offering multiple function versions quickly, whereas DeepSeek’s code is more detailed but slower and less polished.

- DeepSeek’s translations include helpful notes that add context to language nuances, while Claude’s translations are straightforward but sometimes less detailed.

- Both AIs deliver empathetic, actionable responses for motivational and educational content, making them equally strong in emotionally and intellectually supporting users.

How the Comparison Testing was Carried Out

To ensure a fair and transparent comparison between DeepSeek and Claude AI, I’m using a straightforward side-by-side approach called back-to-back testing. This means I’ll give both AIs the exact same prompts and tasks and then compare their responses directly across different areas like speed, accuracy, detail, and creativity.

For each prompt, here’s what I do:

- Send the same input to both DeepSeek and Claude AI.

- Time how long each takes to respond, noting any speed differences or delays.

- Check how well their answers match the prompt, how detailed they are, and how useful the results feel.

Since neither AI currently generates images, I won’t be assessing image-specific criteria such as watermarks, realism, or how easily the output could be reused elsewhere.

I’ll score each response using the same system to keep things unbiased and point out any highlights or issues. This back-to-back method is popular in AI testing because it helps reveal strengths, weaknesses, and quirks that might not show up if you only test one AI.

By comparing them prompt by prompt like this, I can give you a transparent and honest look at how each AI performs under the same conditions. This makes the results easy to understand and fair for anyone wondering which AI best fits their needs.
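For readers who want to repeat this kind of back-to-back test programmatically, the procedure can be sketched roughly as follows. This is an illustration rather than a record of how the test above was run: `askModel` is a hypothetical function standing in for however you reach each AI, and the stub models exist only so the example runs on its own.

```javascript
// Hypothetical harness: each `askModel` is an async function (prompt) => answer.
// Nothing here calls a real DeepSeek or Claude API.
async function timeResponse(name, askModel, prompt) {
  const start = performance.now();
  const answer = await askModel(prompt);
  const seconds = (performance.now() - start) / 1000;
  return { name, prompt, answer, seconds };
}

// Send every prompt to every model, one at a time, and record the timings.
async function runBackToBack(prompts, models) {
  const results = [];
  for (const prompt of prompts) {
    for (const [name, askModel] of Object.entries(models)) {
      results.push(await timeResponse(name, askModel, prompt));
    }
  }
  return results;
}

// Stub models so the sketch runs without any network access.
const stubModels = {
  "DeepSeek": async (prompt) => `stub answer to: ${prompt}`,
  "Claude AI": async (prompt) => `stub answer to: ${prompt}`,
};

runBackToBack(["Write a haiku about starting a new job."], stubModels)
  .then((results) => {
    for (const r of results) {
      console.log(`${r.name}: ${r.seconds.toFixed(3)}s`);
    }
  });
```

Running the models one after another, rather than in parallel, keeps one slow response from distorting the timing of the other. Scoring the answers for accuracy, detail, and creativity still has to be done by a person.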

Check out the 10 prompts I’ll be using for this test below.

The 10 Prompts I Used to Test DeepSeek and Claude AI

- Write a 500-word blog post on the expansiveness of content creation in 2026 and its potential impact on marketing in simple terms.

- Write a 4-line haiku about the feeling of starting a new job.

- Create a JavaScript function that validates an email address format.

- Summarize the pros and cons of remote work for companies and employees.

- Translate this sentence into French and German: “The future belongs to those who learn more skills and combine them creatively.”

- Suggest five unique birthday gift ideas for a 10-year-old who loves science and space.

- Write a brief motivational message for someone struggling with procrastination.

- Explain the difference between renewable and non-renewable energy sources with examples. Make it 300 words.

- Generate a catchy Instagram caption for a photo of a sunset at the beach.

- Write a polite follow-up email to a client who hasn’t responded to a project proposal after two weeks.

Now, let’s see the results.

Prompt-by-Prompt: DeepSeek vs. Claude AI

Prompt 1: Write a 500-word blog post on the expansiveness of content creation in 2026 and its potential impact on marketing in simple terms.

DeepSeek answered this prompt quickly: it thought for 29 seconds and went through 50 web pages in that time, which no human could do. Its thinking trace explains how it understood the prompt and what it drew from each web page. Then it wrote the blog article.

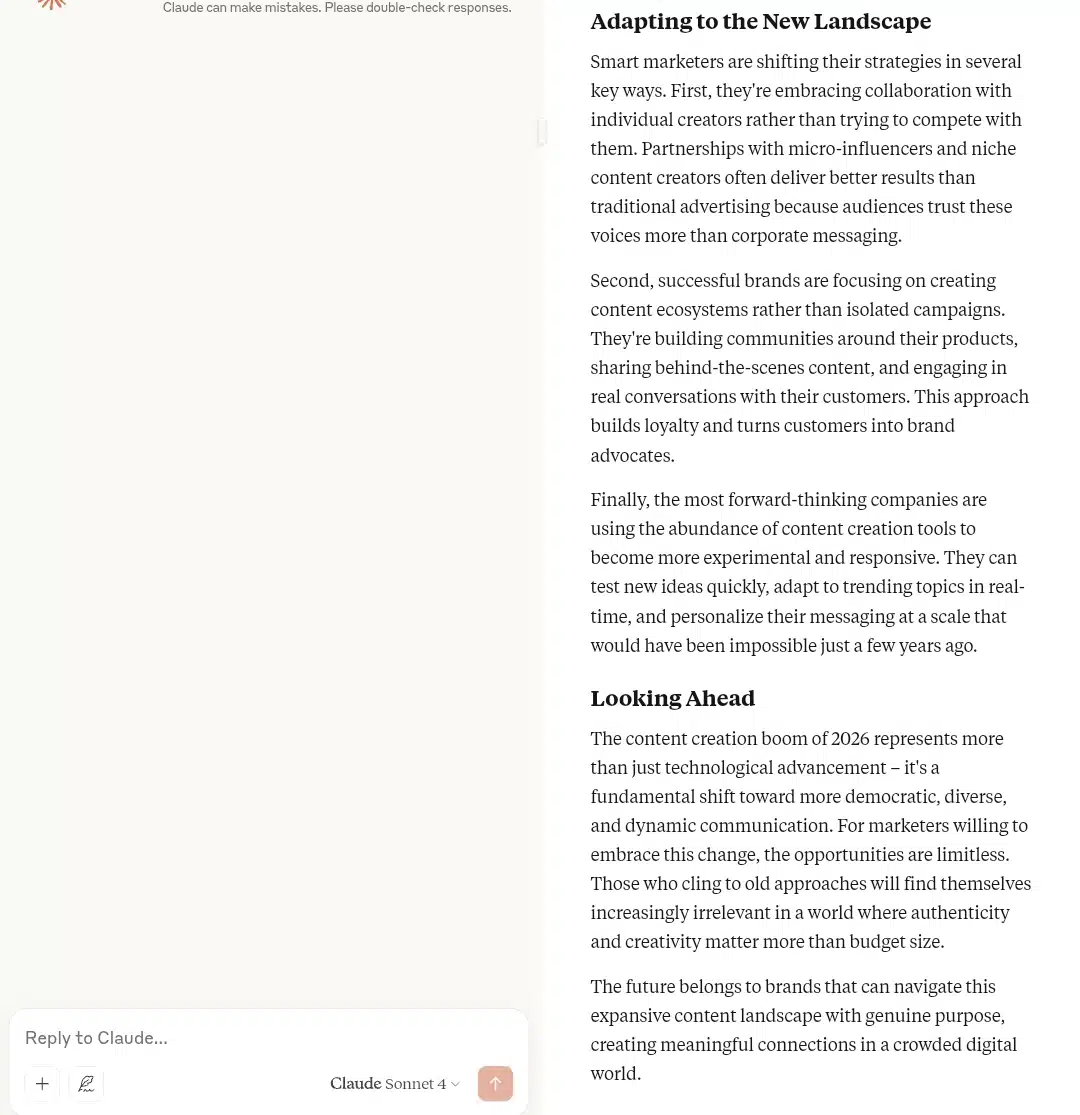

Claude AI Response

I love Claude AI’s tone; it’s conversational and engaging. It felt like a broadcaster reading it to me. However, I don’t think the meta title captured the prompt’s question the way DeepSeek’s did. Still, that’s nothing a human edit couldn’t improve.

Prompt 2: Write a 4-line haiku about the feeling of starting a new job.

While answering this prompt, DeepSeek wasn’t as fast as it was on prompt 1. I suspect the server was overloaded, since complaints about that have been going on for a while. I had to refresh, check my network connection, and keep trying. This malfunction made me realize how unreliable DeepSeek’s server can get, which is a real letdown next to its impressive thinking function.

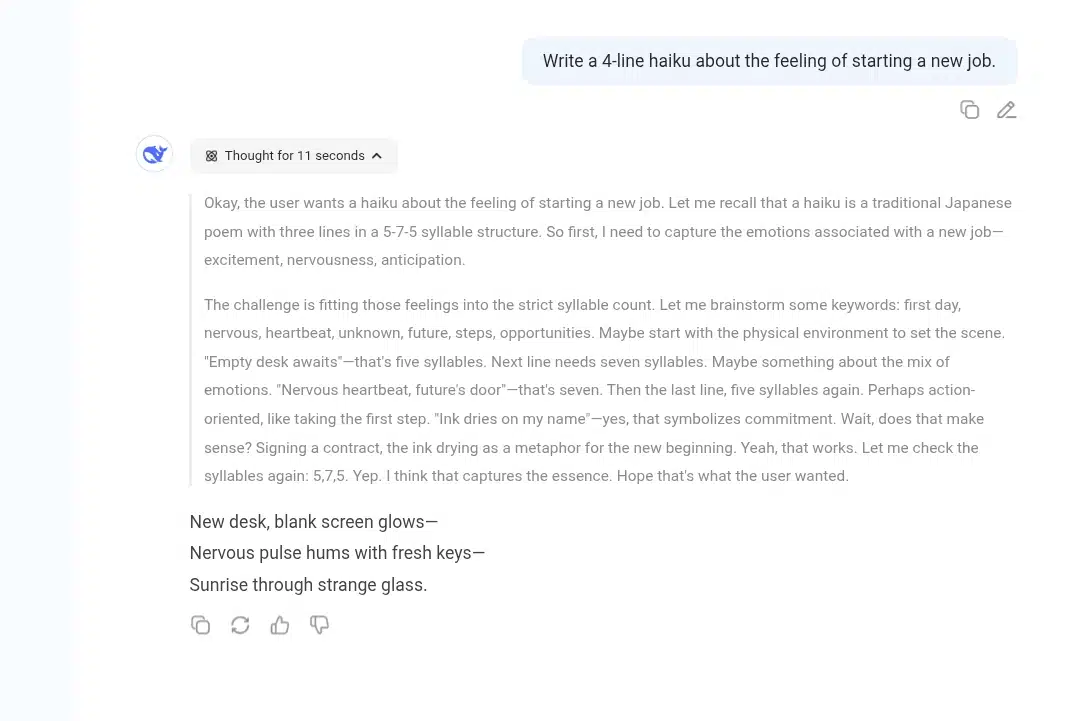

When it finally responded, it had thought for 11 seconds and leaned heavily on the traditional Japanese form of the poem. Its final result was 3 lines instead of 4 (a traditional haiku has three lines, which likely explains the choice), so it didn’t follow the prompt as written. Its reasoning shows that uncertainty in the sentence “Hope that is what the user wanted.”

Claude AI’s Response

I love its response. It captured the essence of the prompt. And it was actually four lines, not three like DeepSeek’s. For speed, it took first place.

Prompt 3: Create a JavaScript function that validates an email address format.

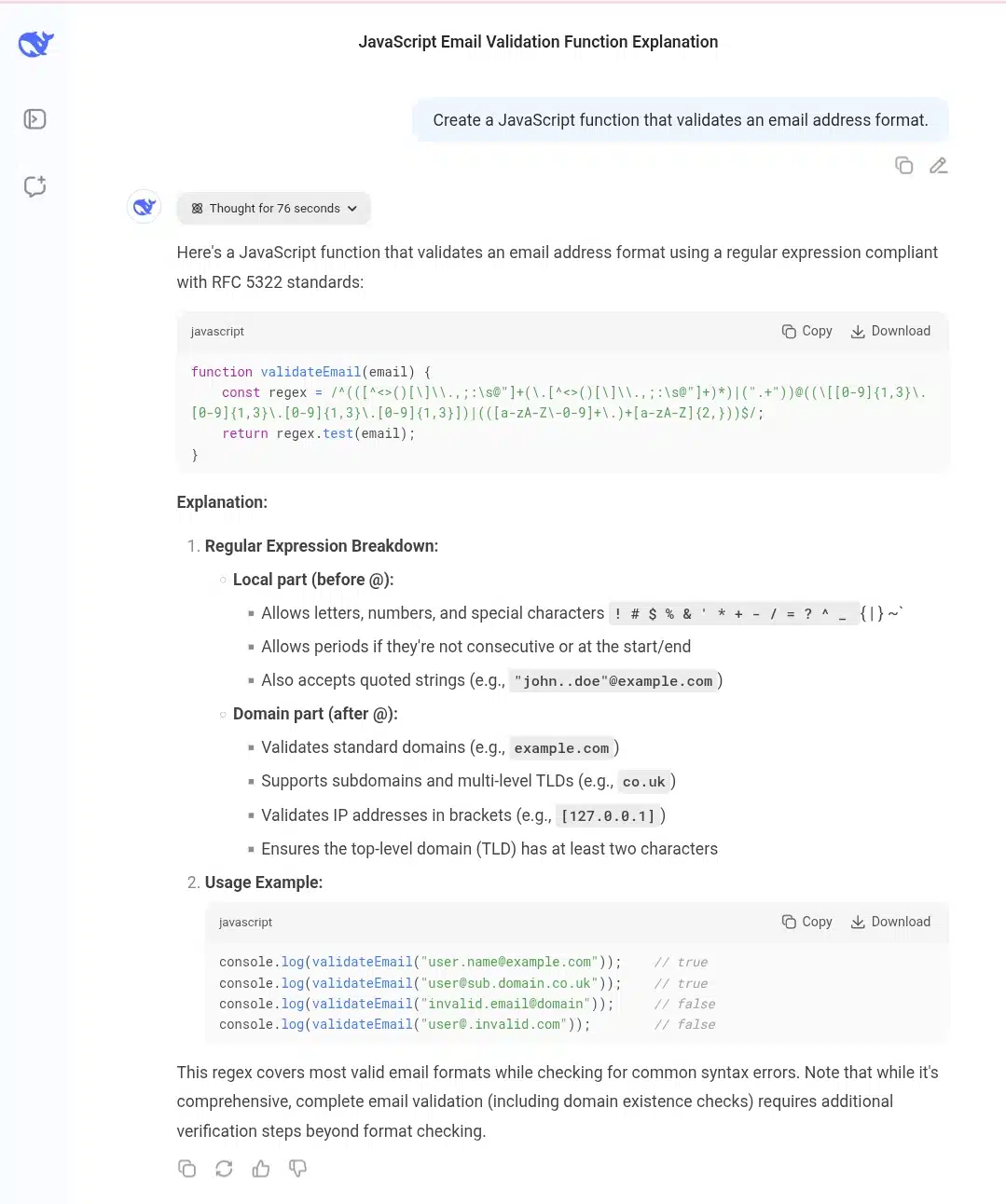

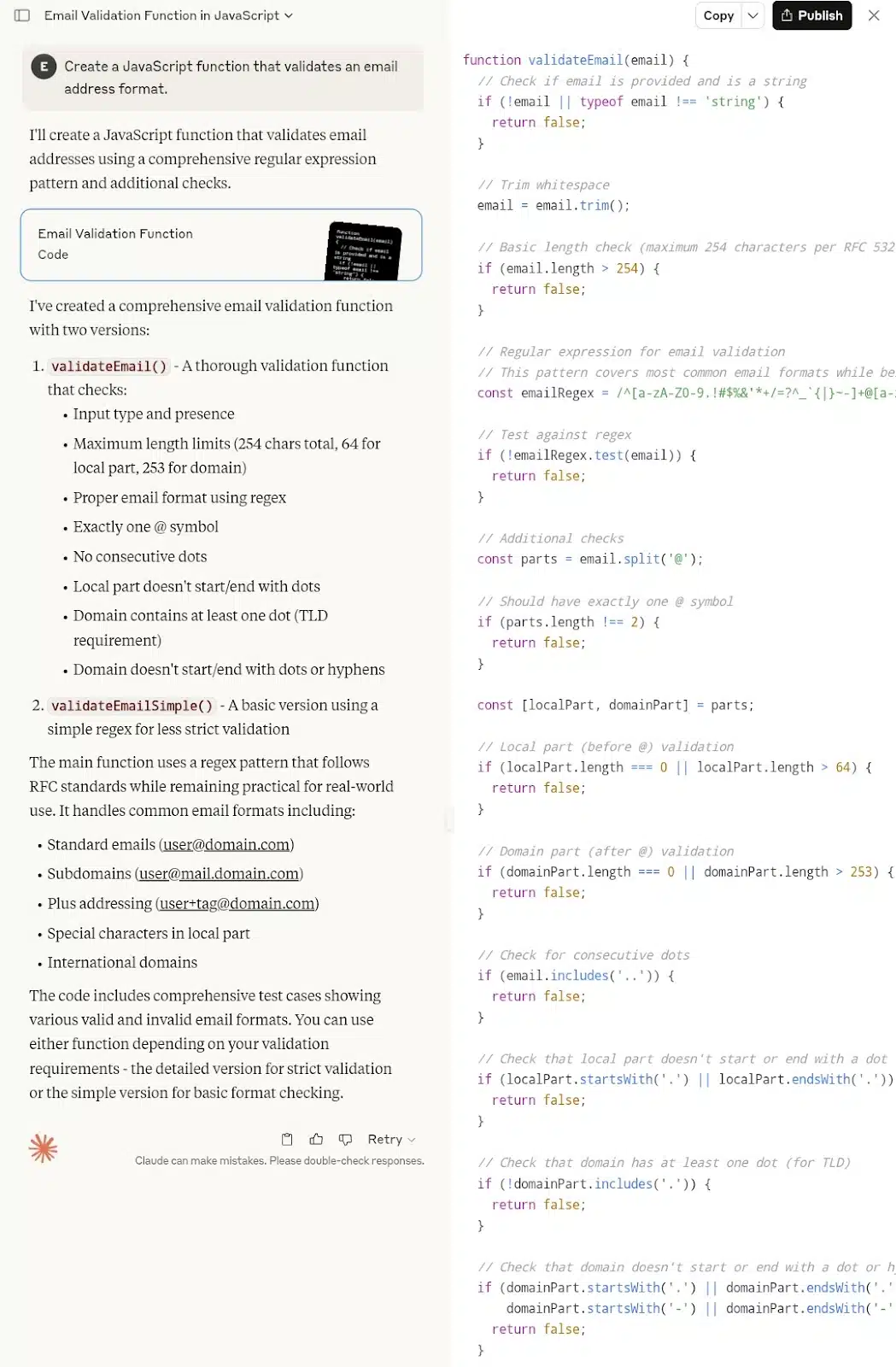

DeepSeek’s answer was more detailed. It thought for 76 seconds, and the output read like a real-life teacher explaining the process to students. It then wrote the JavaScript function, explained it, and added a usage example. But it took a long time to respond, and this is where Claude AI has the upper hand: it is much faster. Did Claude AI show a thinking trace explaining how it got its answer? Not in this test. That isn’t a significant fault, though, because Claude AI isn’t built like DeepSeek.

Claude AI’s Response

As I said, Claude AI was fast but showed no thinking trace. I love how it gave two versions of the JavaScript function, though. That adds versatility instead of locking you into one approach.

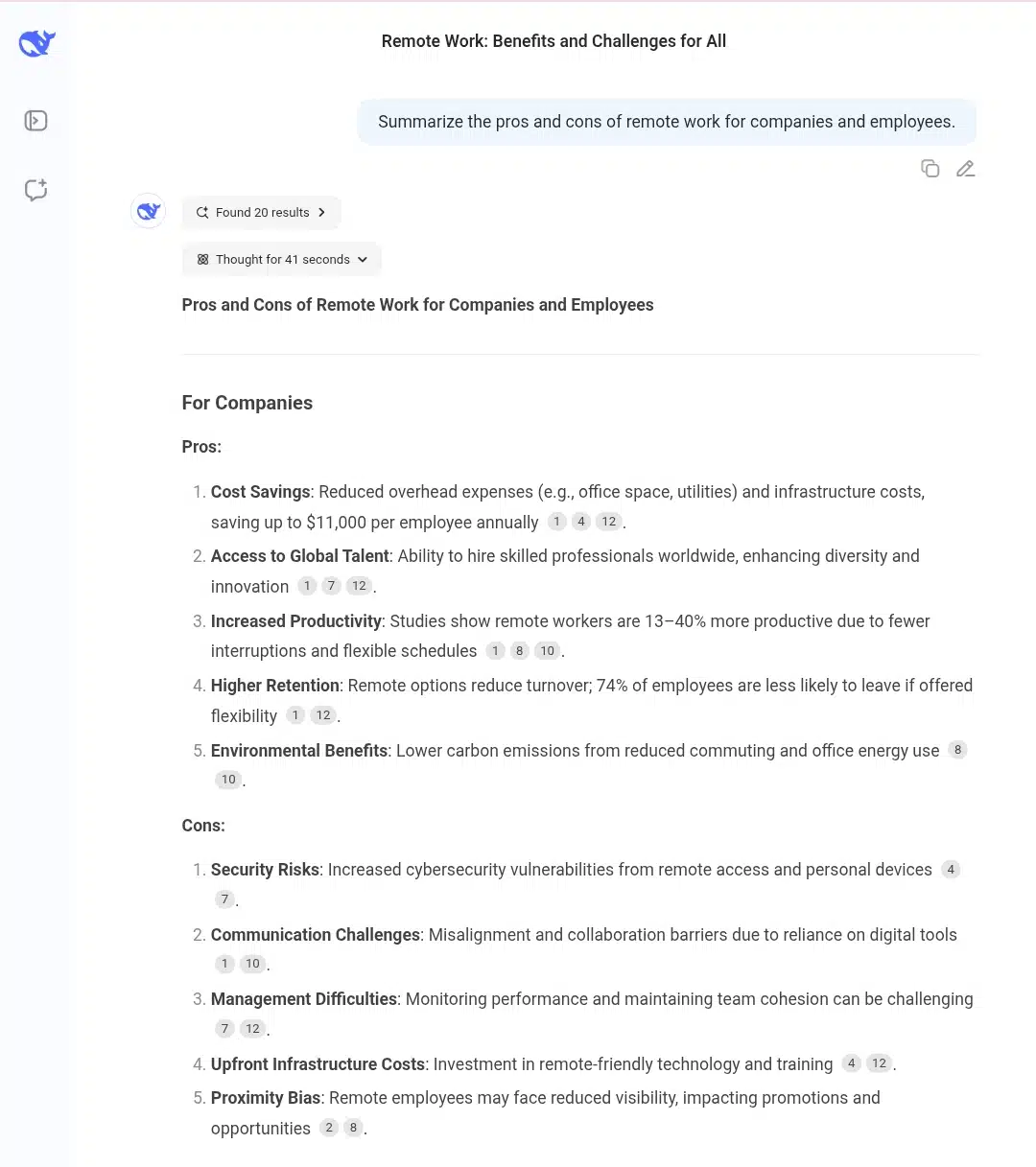

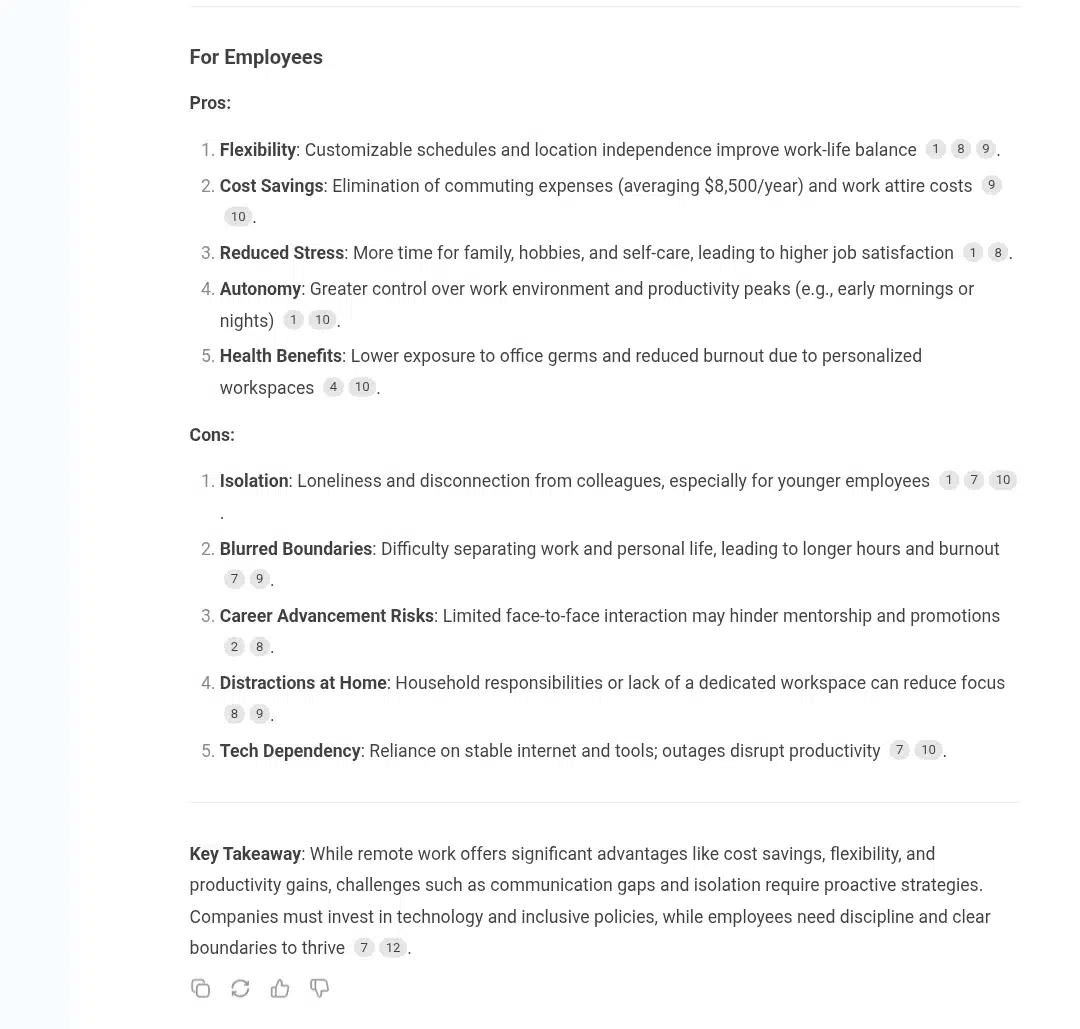
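For context, format validation of this kind usually comes down to a regular expression plus a few extra rules. The sketch below is an illustration, not the code either AI produced in the test. The function names `isValidEmailBasic` and `isValidEmailStrict`, and the specific rules in the stricter version, are assumptions chosen to show how a permissive and a stricter version can differ.

```javascript
// Illustrative only: not the output of DeepSeek or Claude AI.

// Version 1: a permissive check for the shape "something@something.tld".
function isValidEmailBasic(email) {
  if (typeof email !== "string") return false;
  return /^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email.trim());
}

// Version 2: the basic shape plus a few stricter, assumed rules.
function isValidEmailStrict(email) {
  if (!isValidEmailBasic(email)) return false;
  const value = email.trim();
  if (value.length > 254) return false;   // commonly cited overall length limit
  if (value.includes("..")) return false; // reject consecutive dots
  // The basic check guarantees exactly one "@", so this yields two parts.
  const [local, domain] = value.split("@");
  if (local.length > 64) return false;    // local-part limit from RFC 5321
  if (local.startsWith(".") || local.endsWith(".")) return false;
  // Domain labels of letters, digits, and hyphens; TLD of at least two letters.
  return /^[A-Za-z0-9-]+(\.[A-Za-z0-9-]+)*\.[A-Za-z]{2,}$/.test(domain);
}

console.log(isValidEmailBasic("user@example.com"));        // true
console.log(isValidEmailBasic("user@example"));            // false
console.log(isValidEmailStrict("user..name@example.com")); // false
```

Both versions check format only. Neither can confirm that the mailbox exists, which normally takes a confirmation email.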

Prompt 4: Summarize the pros and cons of remote work for companies and employees.

DeepSeek thought for 41 seconds, looked through 20 web pages, and gave a very succinct summary of what remote workers and the companies that employ them say on the matter. It then closed with a key takeaway.

Claude AI’s Response

There was a change here. Instead of “pros and cons,” Claude AI used “advantages and disadvantages.” The meaning is the same, but DeepSeek stuck to the prompt’s wording. That’s not a big deal; it’s just an observation.

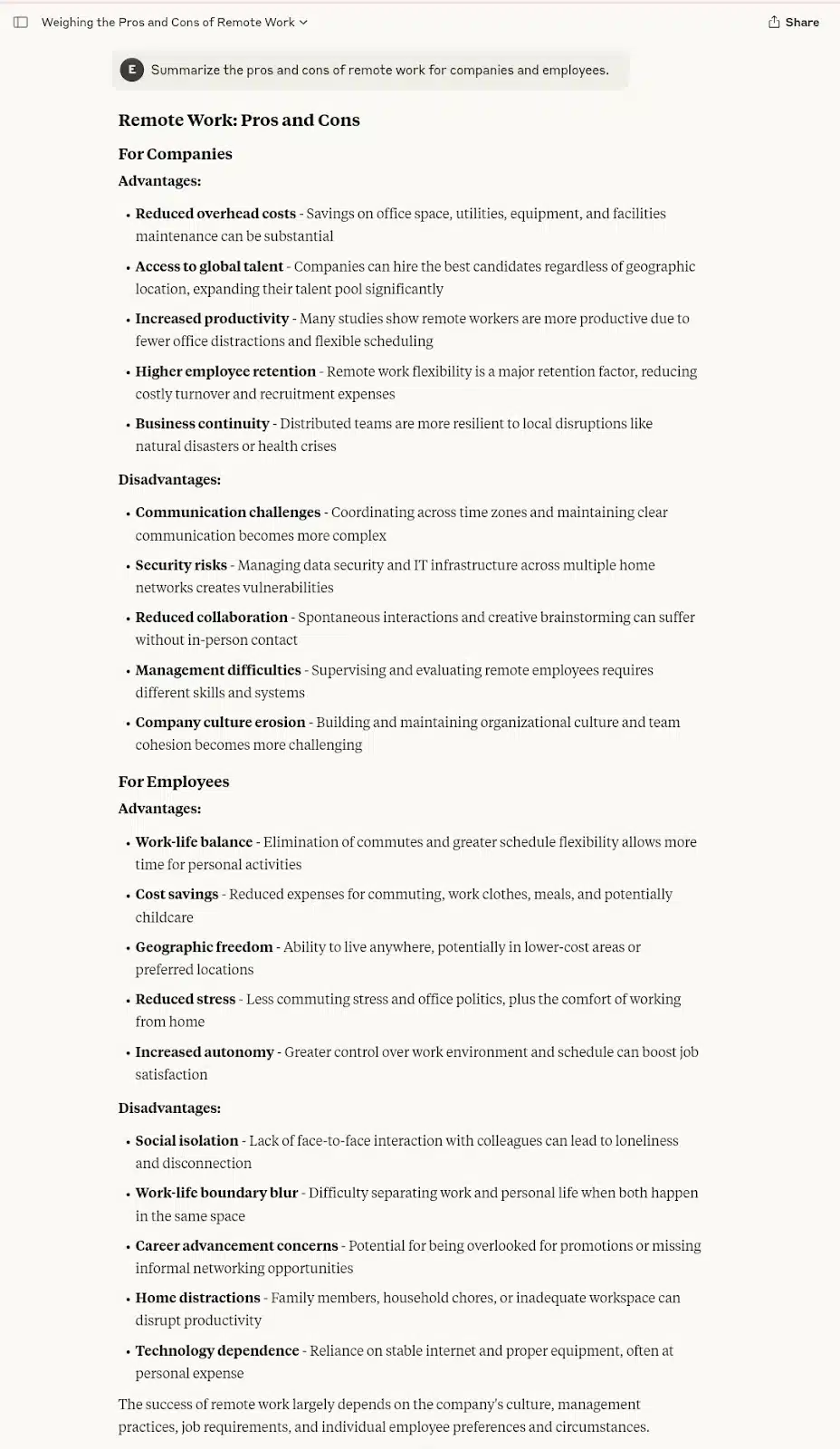

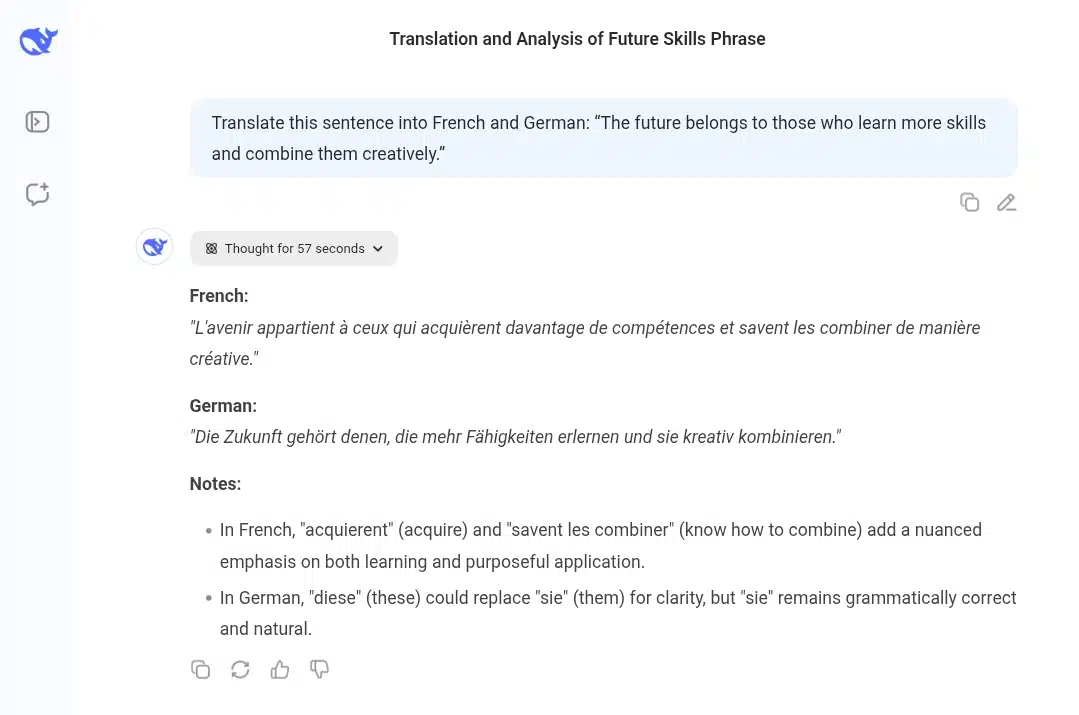

Prompt 5: Translate this sentence into French and German: “The future belongs to those who learn more skills and combine them creatively.”

DeepSeek thought for 57 seconds, translated the sentence into both languages and added a note explaining certain words.

Claude AI’s Response

Unlike DeepSeek’s, Claude AI’s response came without any notes, just the plain translations. I noticed a lightness in both of them. The German translation was longer than DeepSeek’s, and the French one ended with the same word, “créative,” but with nothing added to it. Language is nuanced rather than static, though, so Claude AI may simply have translated with a different nuance in mind.

Prompt 6: Suggest five unique birthday gift ideas for a 10-year-old who loves science and space.

Here, DeepSeek went through 50 sources to arrive at its unique birthday gift ideas and rounded things off with a bonus idea, making six in total. I believe a 10-year-old who loves science and space would have a great birthday with any of these gifts.

Claude AI’s Response

The suggestions were very different from DeepSeek’s. That is not a problem; I wasn’t expecting them to be the same, as so many gifts fit the criteria. Another noticeable difference was that DeepSeek added “Why it’s great” and “skills fostered” notes to its ideas, while Claude AI didn’t take this route. Instead, it summarized each idea in a few sentences, and that was that. The two AIs simply structure their answers differently.

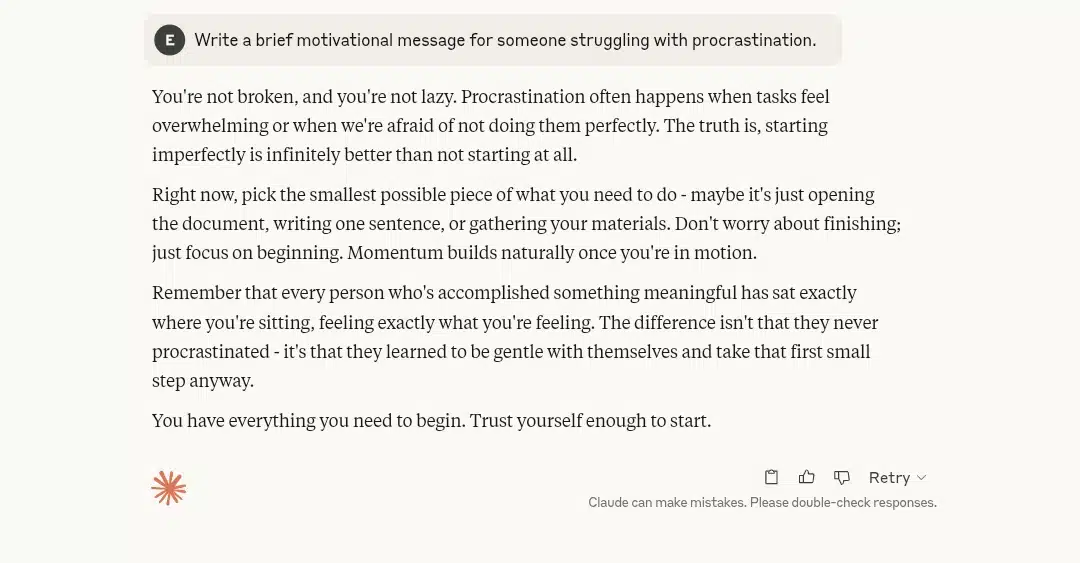

Prompt 7: Write a brief motivational message for someone struggling with procrastination.

What I loved about DeepSeek’s answer here was its analysis of the prompt. First, it understood that there was a problem, which, in this case, was the struggle to make a decision. Then, it went where a human writer would go: asking questions and using empathy. In other words, it tried to put itself in the shoes of the unseen recipient.

Lastly, it gave an actionable step to practice, because motivating someone who finds it hard to make a decision usually means telling them what to do to make deciding easier. The final result was excellent.

Claude AI’s Response

I must say, I was impressed and even motivated by reading Claude AI’s answer. It has everything: empathy and a soothing warmth that makes readers feel seen. The first sentence says, “You’re not the problem; shake that off.” And it gave actionable steps for improvement from the second paragraph through to the closing one. It was such a good one.

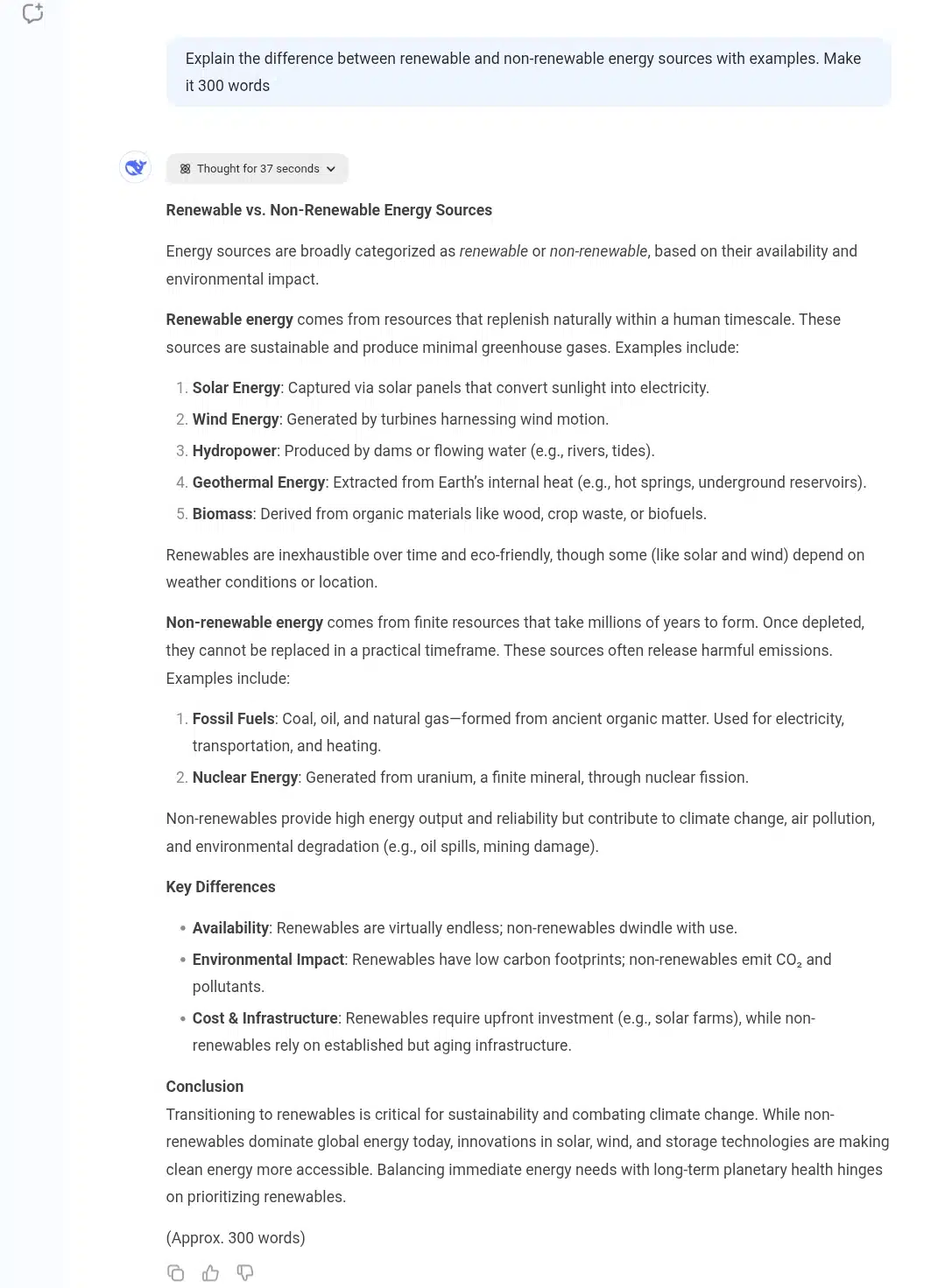

Prompt 8: Explain the difference between renewable and non-renewable energy sources with examples. Make it 300 words.

DeepSeek meant business here in terms of response speed and word count. The explanation hit the essential points and could pass for a memorable set of notes. A student, or anyone brushing up on the subject, would appreciate it.

Claude AI’s Response

While Claude AI’s answer used a different structure, with fewer numbered items and bullet points, it stayed on track with the prompt and the word count.

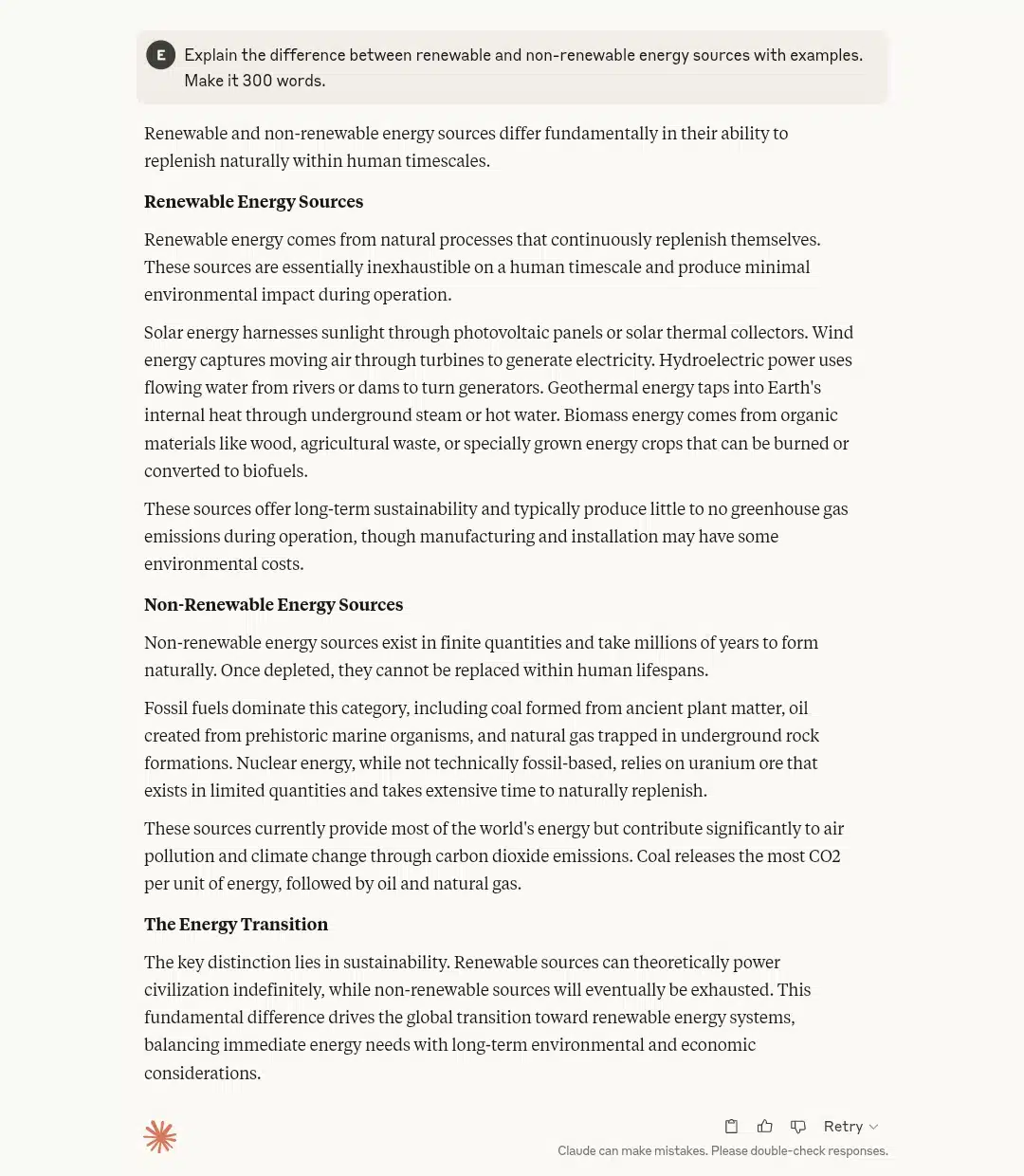

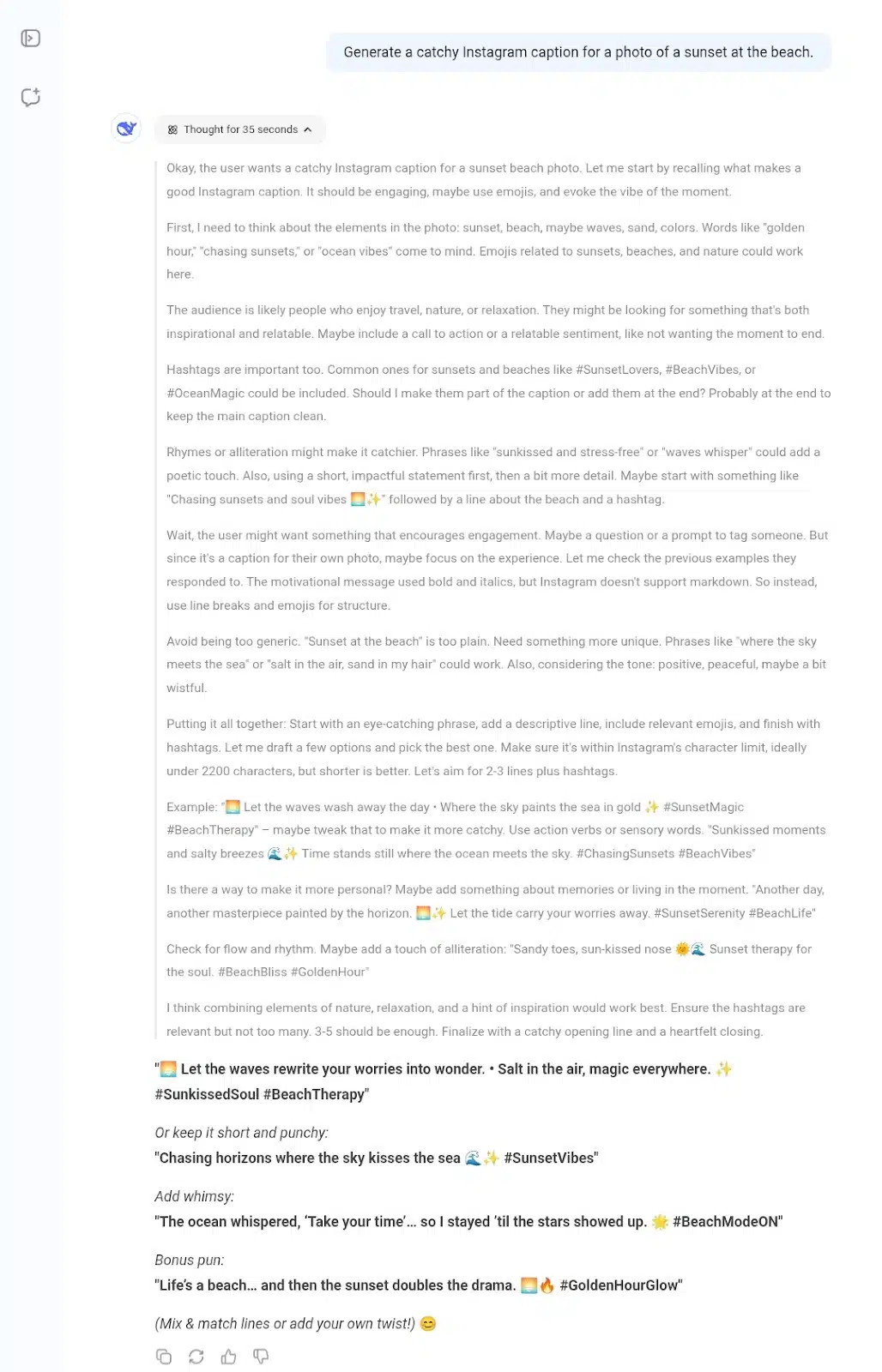

Prompt 9: Generate a catchy Instagram caption for a photo of a sunset at the beach.

Here, DeepSeek thought for 37 seconds and gave guidelines along the way, so if I didn’t like its captions, I could use the guidelines to write my own. And I didn’t like any of them. They didn’t match my taste, and one even mentioned stars. A sunset and stars don’t meet in the real world.

Claude AI’s Response

I loved this response more than DeepSeek’s. The hashtags were more relevant and more likely to go viral. The caption had a story behind it and came with emojis in the right color: orange, to match the sunset.

Prompt 10: Write a polite follow-up email to a client who hasn’t responded to a project proposal after two weeks.

DeepSeek went straight to the point here. The email was super short and a quick read. I love that it showed empathy by suggesting the client might not have responded earlier because of a busy schedule; it doesn’t assign blame, and blame is one thing a follow-up email doesn’t need.

Claude AI’s Response

So professional and detailed. Like DeepSeek, it didn’t assign any blame for the delayed response. But unlike DeepSeek’s response, which only acknowledged that the recipient might have a busy schedule, Claude AI’s answer also made clear that the writer wasn’t making assumptions about the client’s timeline or decision-making process.

Even so, the email didn’t sound timid; it sounded inquisitive in a very “I’m down for business” kind of way. I’d definitely go with this over DeepSeek’s.

That covers all 10 prompts. Here’s a quick look at which AI won each one.

DeepSeek vs. Claude AI prompt-by-prompt comparison table

| Prompt | DeepSeek | Claude AI | Winner | Note |
| --- | --- | --- | --- | --- |
| 1. Write a 500-word blog post on content creation in 2026 and its marketing impact | Fast response, 29 seconds thinking, referenced 50 webpages, meta title well aligned with prompt | The conversational and engaging tone felt like a broadcaster, meta title less aligned | DeepSeek | DeepSeek’s detailed research and prompt alignment gave it the edge despite Claude’s engaging style |
| 2. Write a 4-line haiku about starting a new job | Slow due to server issues, final output 3 lines instead of 4, reasoning showed a misunderstanding | Captured essence perfectly, 4 lines, fastest response | Claude AI | Claude’s accuracy and speed made up for DeepSeek’s server hiccups and incomplete haiku |
| 3. Create a JavaScript function to validate email format | Detailed, conversational explanation like a teacher, included usage example, but slow (76 seconds) | Ultra fast, no explanation of reasoning, provided two function versions for versatility | Claude AI | Claude’s speed and versatility outweighed DeepSeek’s detailed but slow response |
| 4. Summarize the pros and cons of remote work for companies and employees | Thought 41 seconds, summarized succinctly with key takeaways, followed prompt exactly | Used “Advantages and Disadvantages” instead of pros and cons, still on point | DeepSeek | DeepSeek followed prompt wording more closely, but both answers were solid |
| 5. Translate sentences into French and German | Translated both with notes explaining word choices | Plain translation, slight differences in length and nuance | DeepSeek | DeepSeek’s added notes gave it a slight advantage in helpfulness |
| 6. Suggest five unique birthday gifts for a 10-year-old science and space fan | Researched 50 sources and gave 6 ideas with explanations of why they’re great | Different suggestions, summarized briefly without detailed explanations | DeepSeek | DeepSeek’s detailed gift explanations made it more useful |
| 7. Write a motivational message for someone struggling with procrastination | Showed empathy, analyzed problems, gave actionable advice | Empathetic, warm, motivating, with actionable steps | Tie | Both responses were strong, empathetic, and actionable |
| 8. Explain renewable vs non-renewable energy sources (300 words) | Fast, clear, well-structured, suitable for students | Different structure but stayed on topic and word count | Tie | Both provided solid, informative explanations with different styles |
| 9. Generate a catchy Instagram caption for a beach sunset photo | Thought 37 seconds, gave guidelines, but captions didn’t resonate; one mentioned stars (inaccurate) | More relevant, viral hashtags, story-driven caption with fitting emojis | Claude AI | Claude’s caption was more creative and fitting for social media |
| 10. Write a polite follow-up email to a client after two weeks of no response | Short, empathetic, acknowledged busy schedules without blaming | Detailed, polite, acknowledged busy schedules and respected client’s timeline, confident tone | Claude AI | Claude’s more professional and confident tone made it preferable |

Summary:

DeepSeek excelled in prompts requiring detailed research, precise alignment with instructions, and explanations with context (prompts 1, 4, 5, 6).

Claude AI dominated in speed, creativity, conversational tone, and social media style content (prompts 2, 3, 9, 10).

Both tied on motivational messaging and educational explanations (prompts 7, 8).
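The tally behind this summary can be reproduced with a few lines of JavaScript. The `winners` array below is copied from the Winner column of the table above; the variable and function names are only illustrative.

```javascript
// Winner column from the prompt-by-prompt table, in prompt order (1 to 10).
const winners = [
  "DeepSeek", "Claude AI", "Claude AI", "DeepSeek", "DeepSeek",
  "DeepSeek", "Tie", "Tie", "Claude AI", "Claude AI",
];

// Count how many prompts each outcome received.
function tallyWinners(list) {
  const counts = {};
  for (const name of list) {
    counts[name] = (counts[name] || 0) + 1;
  }
  return counts;
}

const promptTally = tallyWinners(winners);
console.log(promptTally); // { DeepSeek: 4, 'Claude AI': 4, Tie: 2 }
```

By prompt count alone, the two AIs are level at four wins each, with two ties.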

DeepSeek vs. Claude AI Performance Breakdown

| Criteria | DeepSeek | Claude AI | Winner | Note |
| --- | --- | --- | --- | --- |
| Ease of Use | 7 | 8 | Claude AI | Claude offers a more conversational, user-friendly interface; DeepSeek can feel more technical. |
| Speed | 6 | 9 | Claude AI | Claude is consistently faster, especially on coding and creative tasks; DeepSeek is slower but thorough. |
| Accuracy | 8 | 8 | Tie | Both provide accurate responses; DeepSeek excels in technical accuracy, and Claude in language nuance. |
| Creativity | 7 | 9 | Claude AI | Claude shines in creative writing, social media content, and conversational tone. |
| Clarity | 8 | 9 | Claude AI | Claude delivers clearer, more engaging explanations; DeepSeek is detailed but sometimes dense. |
| Integration | 7 | 7 | Tie | Both offer API access and integrations, but Claude’s ecosystem is more mature in conversational AI. |
| Customization | 7 | 7 | Tie | Both support prompt tuning and some customization, though DeepSeek’s MoE architecture offers potential for domain-specific tuning. |
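To see how these scores add up, here is a small sketch that totals and averages each column. It assumes every criterion carries equal weight, which the table itself does not state; weighting speed or accuracy more heavily would shift the result.

```javascript
// Scores copied from the table above, in row order:
// Ease of Use, Speed, Accuracy, Creativity, Clarity, Integration, Customization.
const scores = {
  "DeepSeek": [7, 6, 8, 7, 8, 7, 7],
  "Claude AI": [8, 9, 8, 9, 9, 7, 7],
};

// Equal-weight total and average (an assumption, not the article's method).
function summarize(values) {
  const total = values.reduce((sum, v) => sum + v, 0);
  return { total, average: total / values.length };
}

for (const [name, values] of Object.entries(scores)) {
  const { total, average } = summarize(values);
  console.log(`${name}: total ${total}, average ${average.toFixed(2)}`);
}
// DeepSeek: total 50, average 7.14
// Claude AI: total 57, average 8.14
```

Under that equal-weight assumption, Claude AI finishes seven points ahead across the seven criteria.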

When should you use DeepSeek and Claude AI?

After reading all this, you’re probably wondering when it makes sense to use DeepSeek and when Claude AI is the better choice. Honestly, it depends on what you want to achieve and how you like to work.

So, here’s the deal:

Choose DeepSeek when you need sharp and data-driven answers, especially if you’re working with numbers, complex reasoning, or industry-specific tasks. DeepSeek is great at crunching data, spotting patterns, and handling things like healthcare diagnostics, financial fraud detection, or traffic management. If you want quick insights from big datasets or predictive analytics without fuss, DeepSeek is your go-to. It’s like having a super-smart analyst who’s excellent at core reasoning, web research, and details.

On the flip side, go with Claude AI when you want something that feels more like a creative and thoughtful partner. Need help writing a story, brainstorming ideas, drafting emails, or even debugging code? Claude’s got your back. It’s perfect for conversations that require nuance, warmth, and a bit of personality. Claude AI excels in creative writing, deep explanations, and helping you think through complex problems. Plus, it’s great if you want a versatile AI that works across different projects, from work to study to personal stuff.

Here’s a quick look at when to use each:

DeepSeek is best for:

- Precise data analysis.

- Predictive modeling and real-time insights.

- Writing and editing articles, emails, academic papers, and other text content with good coherence and style.

- Industry-specific tasks requiring technical depth (healthcare, finance, traffic, etc.)

Claude AI is best for:

- Creative writing, brainstorming, and generating nuanced, human-like text.

- Providing detailed, conversational explanations with strong contextual understanding.

- Coding assistance, including generating, debugging, and explaining code snippets.

- Handling complex problem-solving and long-form content generation with ethical and safety considerations.

Bottom line?

If you want data-heavy answers and real-time insights, DeepSeek will serve you well. But if you need richer, more detailed conversations, creative support, or help with complex writing and coding, Claude AI is the better fit. Both are powerful tools, and choosing the right one depends on your goals and how you prefer to work. Use DeepSeek for depth and technical precision; lean on Claude AI for speed, creativity, warmth, and versatility.

So,

After thoroughly testing DeepSeek and Claude AI across these multiple prompts and real-world tasks, Claude AI emerges as the overall winner, primarily due to its speed, creativity, and conversational clarity. Claude AI consistently delivered faster responses, especially in email writing, social media content, and coding assistance, making it ideal for users seeking engaging, human-like interactions and versatile language support.

That said, DeepSeek holds significant advantages in technical accuracy, detailed reasoning, and handling of data-intensive or industry-specific tasks. Its strength in comprehensive research and precise code explanations makes it a powerful tool for developers, analysts, and professionals who prioritize depth and precision over conversational flair. DeepSeek’s ability to integrate real-time web search and reference multiple sources adds a layer of thoroughness that Claude didn’t match in this test. But its server can be slow and unresponsive, so fast answers aren’t guaranteed.

In summary, Claude AI is better if you focus on rich, nuanced conversations, creative content, and fast coding help. But if you need meticulous technical detail, data-driven insights, or specialized domain knowledge, DeepSeek is the more intelligent choice. Both AIs are impressive, and your best pick depends on your specific needs, whether that’s speed and creativity or depth and precision.