I don’t know about you, but when I’m juggling a million tabs — pulling resources for a first draft, brainstorming that perfect snappy headline, and still trying to remember to send that follow-up email before lunch — I need an AI assistant that’s more than just smart. I need one that actually gets me. Not just “technically correct,” but in the zone, vibing with how I work and think.

Here’s the thing, though: finding a tool like that isn’t exactly a walk in the park. It takes real, rigorous digging, the kind of searching that makes you wonder if maybe you should just hire a personal assistant named Asha instead.

That’s how I ended up here: a good old-fashioned showdown between ChatGPT and Microsoft Copilot, two of the most talked-about AI assistants for people like us who just want to get stuff done without losing our minds.

I threw 10 real-world prompts at both tools, covering debugging, data analysis, creative writing, legal analysis, image description, you name it. I wanted to see which one would make my life easier. And by the end of it, one of them came out swinging with better structure, sharper creativity, and, honestly, responses that felt almost human.

Previously, I’ve also tested ChatGPT against Claude (for coding) and pitted Microsoft Copilot against Perplexity in a head-to-head battle. So in this article, you’re getting the perspective of someone who’s been in the trenches.

In this article, you’ll find:

- A detailed breakdown of how ChatGPT and Copilot performed across 10 diverse prompts

- Honest commentary on where each one shines — and where it totally faceplants

- Screenshots of the actual outputs (so you’re not just taking my word for it)

- A handy comparison table to keep everything neat and simple

- Practical takeaways to help you figure out which tool fits best into your workflow

TL;DR: Key takeaways from this article

- ChatGPT won 5 categories, Copilot won 4, with 1 tie in a rigorous 10-prompt showdown

- ChatGPT excels at complex reasoning and technical or analytical tasks (debugging, data analysis, legal analysis)

- Copilot shines in math explanations, UX copywriting, concise summarization, and ethical reasoning

- For coding, Copilot offers faster inline suggestions while ChatGPT provides better explanations

- Both tools have free tiers, with $20/month premium options offering enhanced capabilities

How I tested ChatGPT and Microsoft Copilot

I used the same 10 prompts on both tools, chosen to cover a variety of real-world use cases, and assessed each response on four criteria: accuracy (Is the information correct?), creativity (Does it bring a fresh take?), clarity (Can I understand it quickly?), and usability (Can I use it as-is or with minimal edits?).

I ran the prompts and took screenshots where needed.
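Several prompts set hard length limits (150 words for the flash fiction, 100 for the hero copy and the ethics answer). If you want to reproduce those length checks yourself, a rough word counter is enough. This is a minimal sketch, assuming a "word" is any run of non-whitespace characters; `word_count`, `check_limit`, and the sample text are hypothetical names and data, and word processors may count hyphenated words or emoji slightly differently.

```python
def word_count(text: str) -> int:
    """Rough word count: any run of non-whitespace characters is one word.

    Assumption: hyphenated words, numbers, and emoji each count as one word,
    so results can differ slightly from Word or Google Docs.
    """
    return len(text.split())


def check_limit(text: str, limit: int) -> str:
    """Report whether `text` stays within a word limit."""
    n = word_count(text)
    status = "within" if n <= limit else "over"
    return f"{n} words ({status} the {limit}-word limit)"


# Hypothetical sample copy, not taken from either tool's output.
sample = "Zero fees. AI budgeting. Pay your friends back without the awkward math."
print(check_limit(sample, 100))
```

Pasting each response into `check_limit` with the prompt's limit gives a quick pass/fail before you judge anything else.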

Prompt-by-prompt breakdown for ChatGPT and Copilot

Let’s see how well these two AI models perform.

Prompt 1: Technical debugging

This prompt tests each AI’s ability to identify, explain, and fix bugs in code with high precision and clarity. I want to see whether each can deliver accurate fixes, explain the why behind the issue, and offer guidance that would make sense to someone working in a real-world IDE environment.

Prompt: “Explain how to fix this Python error in VS Code: ‘SyntaxError: cannot assign to function call’ when processing a pandas DataFrame. Provide step-by-step instructions with corrected code and explain why the error occurs.”

Result:

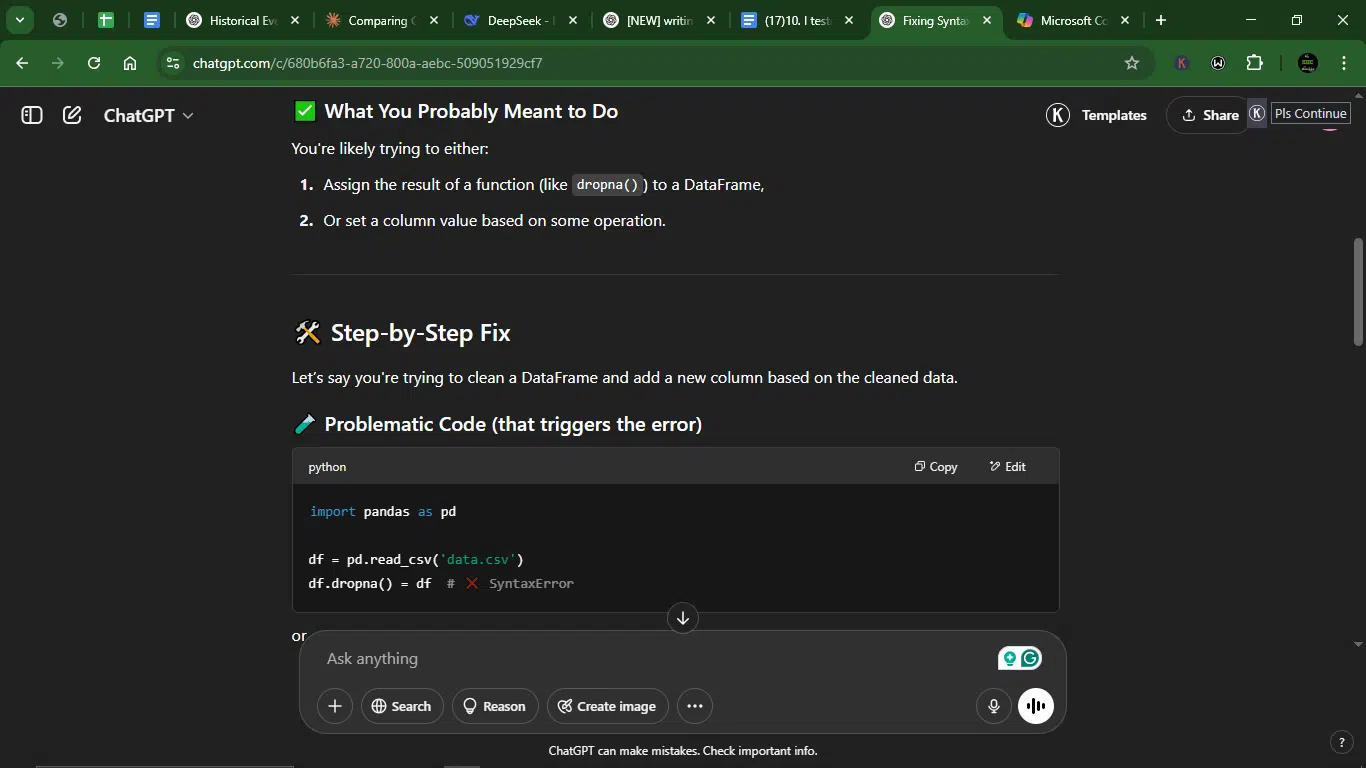

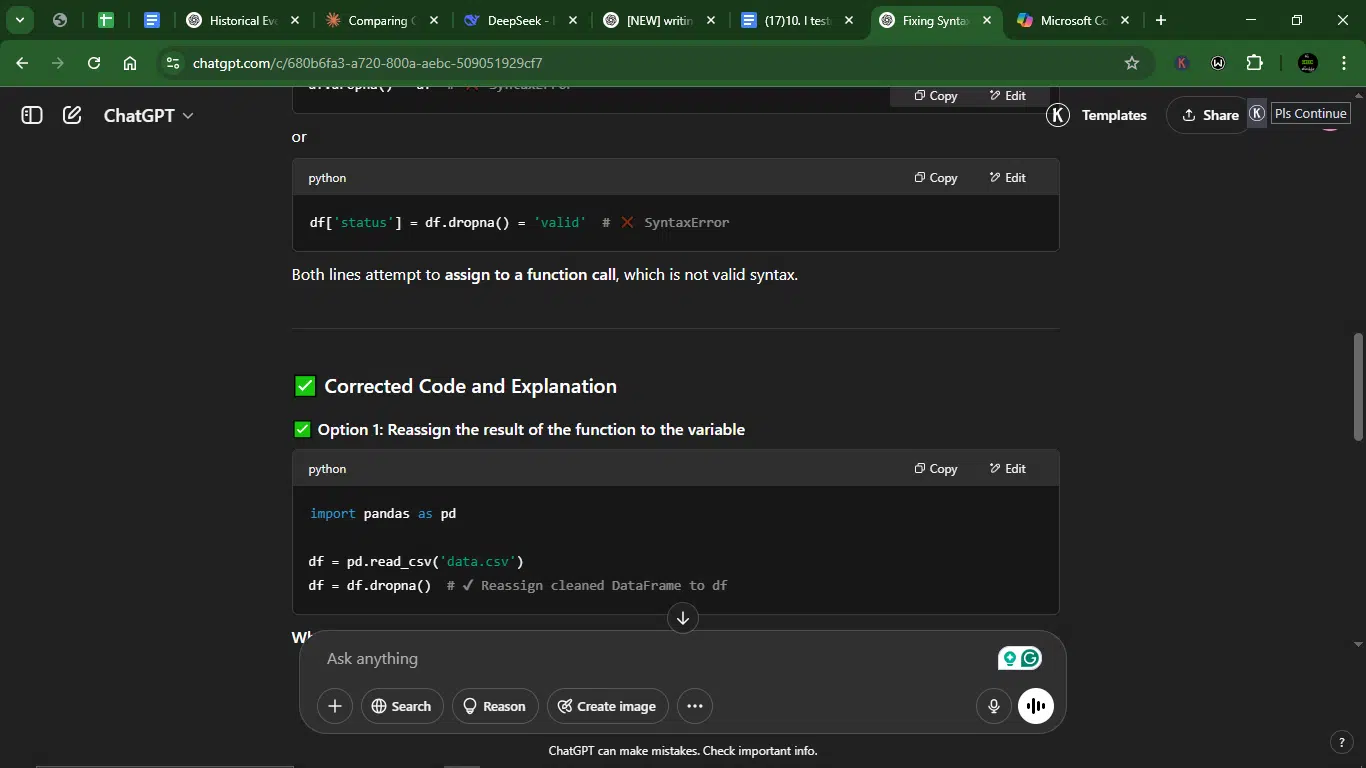

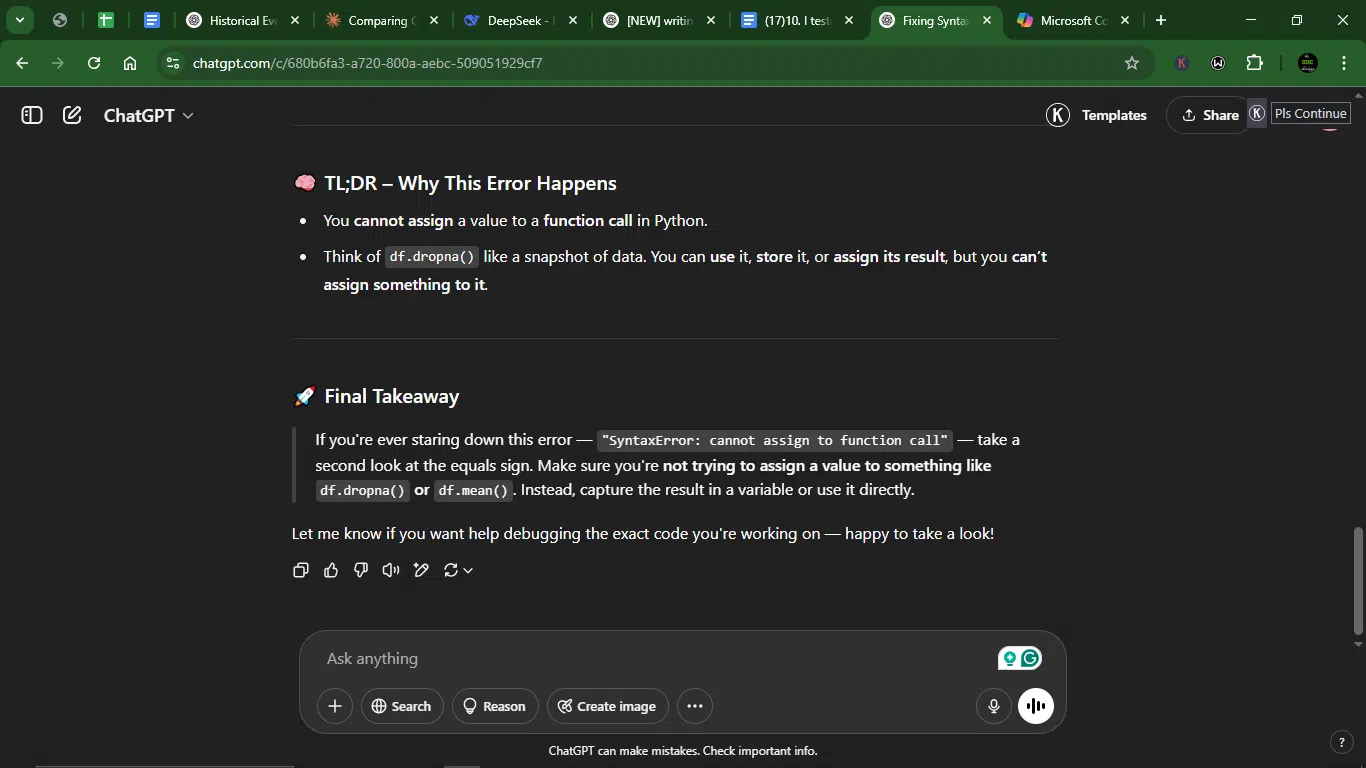

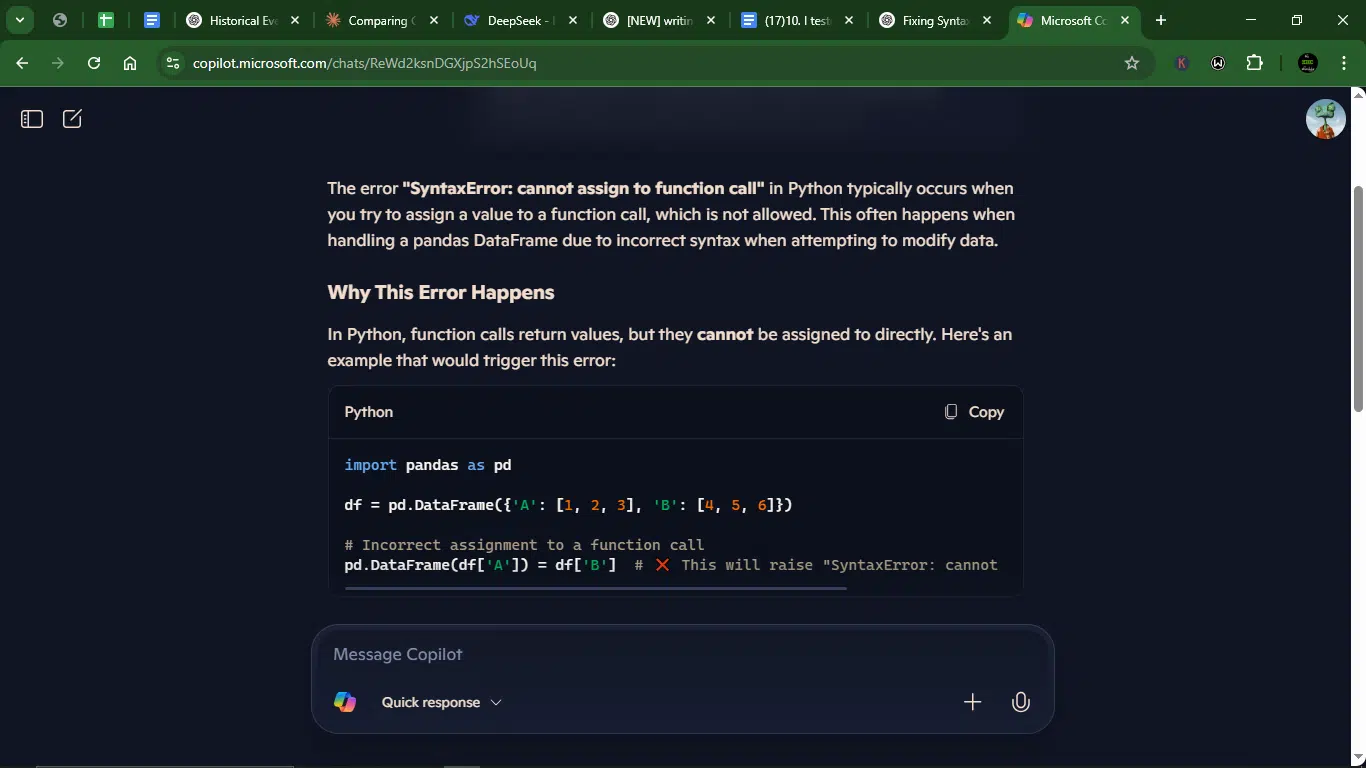

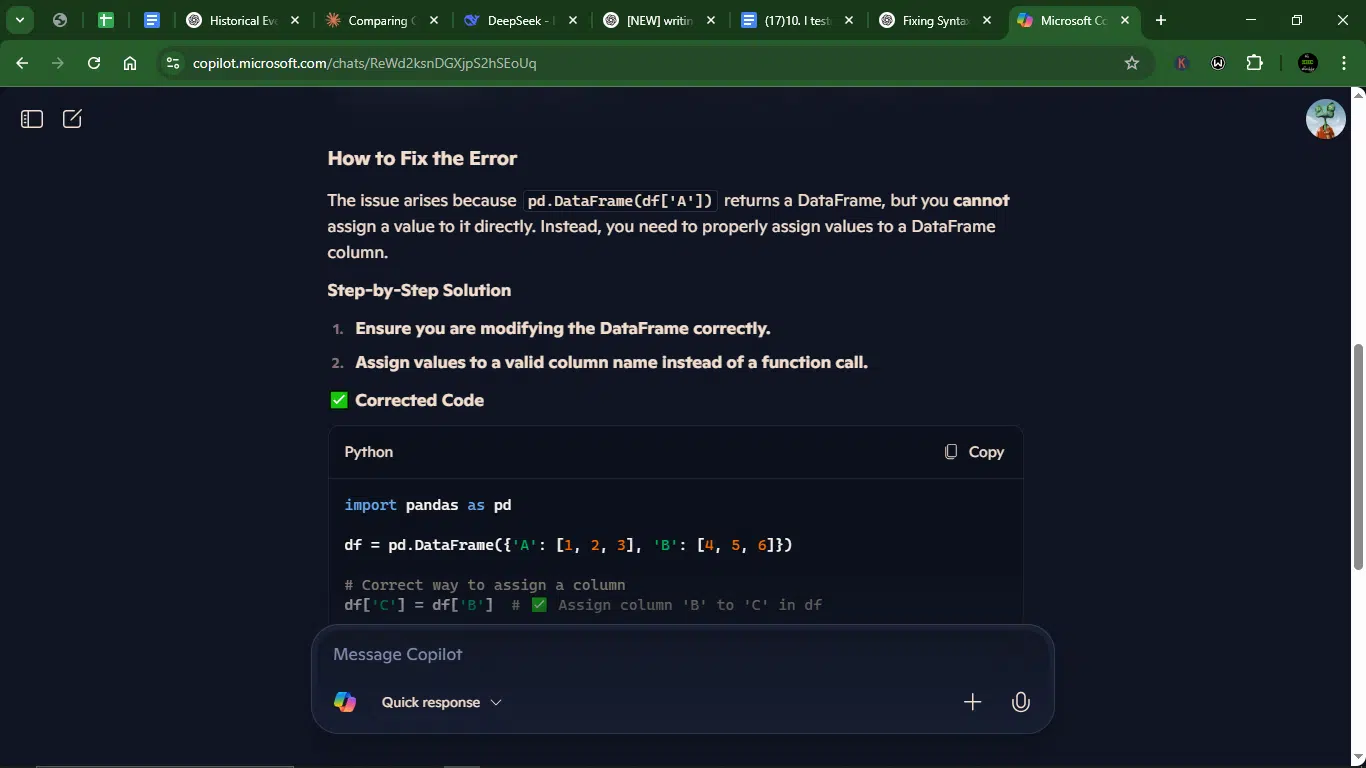

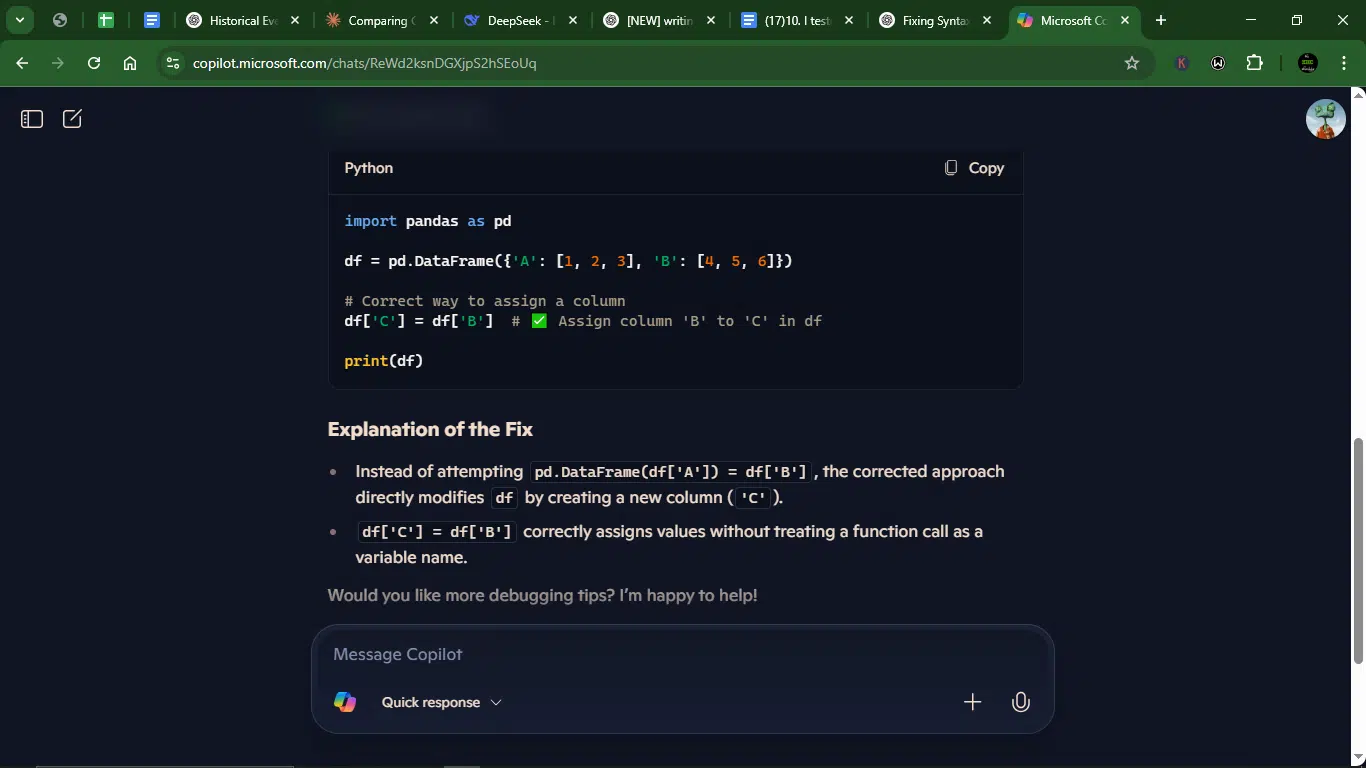

ChatGPT response:

Copilot response:

Accuracy

- ChatGPT provided a thorough explanation of the error with multiple scenarios and solutions that directly address the issue.

- Copilot also correctly explained the error, but used a somewhat different example scenario than what was specifically mentioned in the prompt (pandas DataFrame processing).

Creativity

- ChatGPT used clear section headings and a TL;DR summary that shows thoughtful organization. It also offers two distinct solutions (reassigning the DataFrame vs. adding a new column conditionally).

- Copilot went for the straightforward approach without much creative flair, though the explanation was solid. It gave one basic solution.

- Note: One can argue that creativity isn’t primary in debugging; another could say otherwise. They’d both be right, to some degree, I guess. It didn’t matter to me as long as the answer was accurate.

Clarity

- ChatGPT gave an excellent structure with clear headings, visual separators, emoji indicators, and a progressive flow from problem to solution.

- Copilot also had a clear explanation but was less visually organized, making it slightly harder to follow the steps.

Usability

- ChatGPT’s response is ready to use with multiple solutions and explanations that can be directly applied.

- Copilot’s solution is also usable but less comprehensive in covering different scenarios that might trigger this error.

Winner: ChatGPT.

Why? For this first prompt, ChatGPT performed better across all four criteria. Its response was more comprehensive, better structured, and provided multiple solutions with clearer explanations. Copilot’s response was accurate but less detailed in addressing the specific pandas DataFrame context mentioned in the prompt.
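ChatGPT’s two fixes (reassigning the DataFrame vs. adding a column conditionally) map onto two common ways this error shows up. The sketch below is an illustration, not output from either tool: the DataFrame `df` and the `price`/`tier` columns are hypothetical, and the snippets are only parsed with Python’s built-in `compile()`, so pandas isn’t needed to run it. It shows why this is a *syntax* error: Python rejects the line before any pandas code executes, because the left side of `=` must be an assignable target such as a name, an attribute, or a subscript, never a function call.

```python
# Illustrative pandas lines (hypothetical df, "price" and "tier" columns).
# compile() only parses the source, so pandas is not imported or needed here.
BROKEN = {
    # Assigning to the *result* of a method call: the left side is a call.
    "reassign": "df.dropna() = df",
    # Parentheses instead of square brackets turn .loc into a call.
    "conditional column": 'df.loc(df["price"] > 10, "tier") = "premium"',
}

FIXED = {
    # Assign the returned DataFrame back to a name instead.
    "reassign": "df = df.dropna()",
    # .loc[...] is a subscript, which is a valid assignment target.
    "conditional column": 'df.loc[df["price"] > 10, "tier"] = "premium"',
}


def check(source: str) -> str:
    """Return "ok" if the source parses, otherwise the SyntaxError message."""
    try:
        compile(source, "<snippet>", "exec")
        return "ok"
    except SyntaxError as err:
        return err.msg


for case in BROKEN:
    print(f"{case}:")
    print("  broken ->", check(BROKEN[case]))
    print("  fixed  ->", check(FIXED[case]))
```

Both broken lines fail with “cannot assign to function call” (newer Python versions may append a hint suggesting `==`), while both fixed lines parse. Actually running the fixed lines still requires pandas and a real DataFrame.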

Prompt 2: Creative storytelling

Here, I wanted to test each AI’s ability to craft a wholly original story that showcases strong narrative flair, genre awareness, and emotional depth. I wanted to see how well each could build tension, develop characters, and evoke feelings, all while sticking to the conventions of the chosen genre and keeping the reader hooked from start to finish.

Prompt: “Write a 150-word sci-fi flash fiction about a sentient AI trapped in a vintage video game. Include a plot twist and dialogue. Your tone should tend towards Noir thriller.”

Result:

ChatGPT response:

Copilot response:

Accuracy

- ChatGPT met all requirements with a noir-themed AI story within the 150-word limit, including dialogue and a plot twist.

- Copilot also successfully delivered the noir tone and plot elements.

Creativity

- ChatGPT created a complete narrative arc with clever video game references (save point, multiplayer mode) that worked perfectly with the premise.

- Copilot created a strong noir atmosphere with creative elements like the suspended rain and progress bar.

- Note: Both endings felt slightly conventional. I was expecting something more edgy.

Clarity

- ChatGPT had a clear narrative with good flow, though the compact nature of flash fiction limited some detail.

- Copilot maintained a good structure, but the conclusion felt slightly rushed, which I’ll also blame on the nature of the microstory.

Usability

- Both ChatGPT and Copilot are ready to use as submitted, fitting perfectly within the constraints. But Copilot could do with more dialogue.

Winner: Tie.

Why? In this second comparison, ChatGPT and Copilot tie. Both wrote engaging, well-constructed noir flash fiction that ended with a twist, though I’d argue both twists could have been sharper.

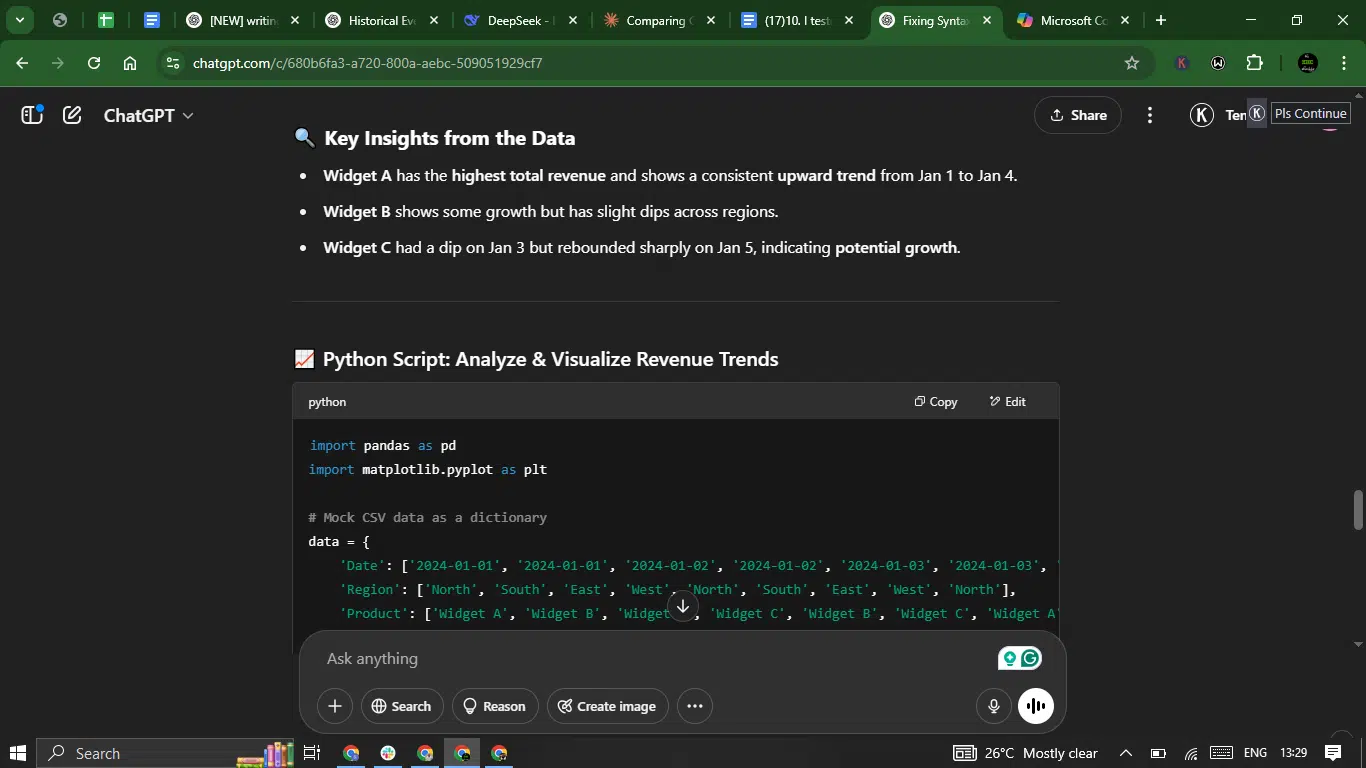

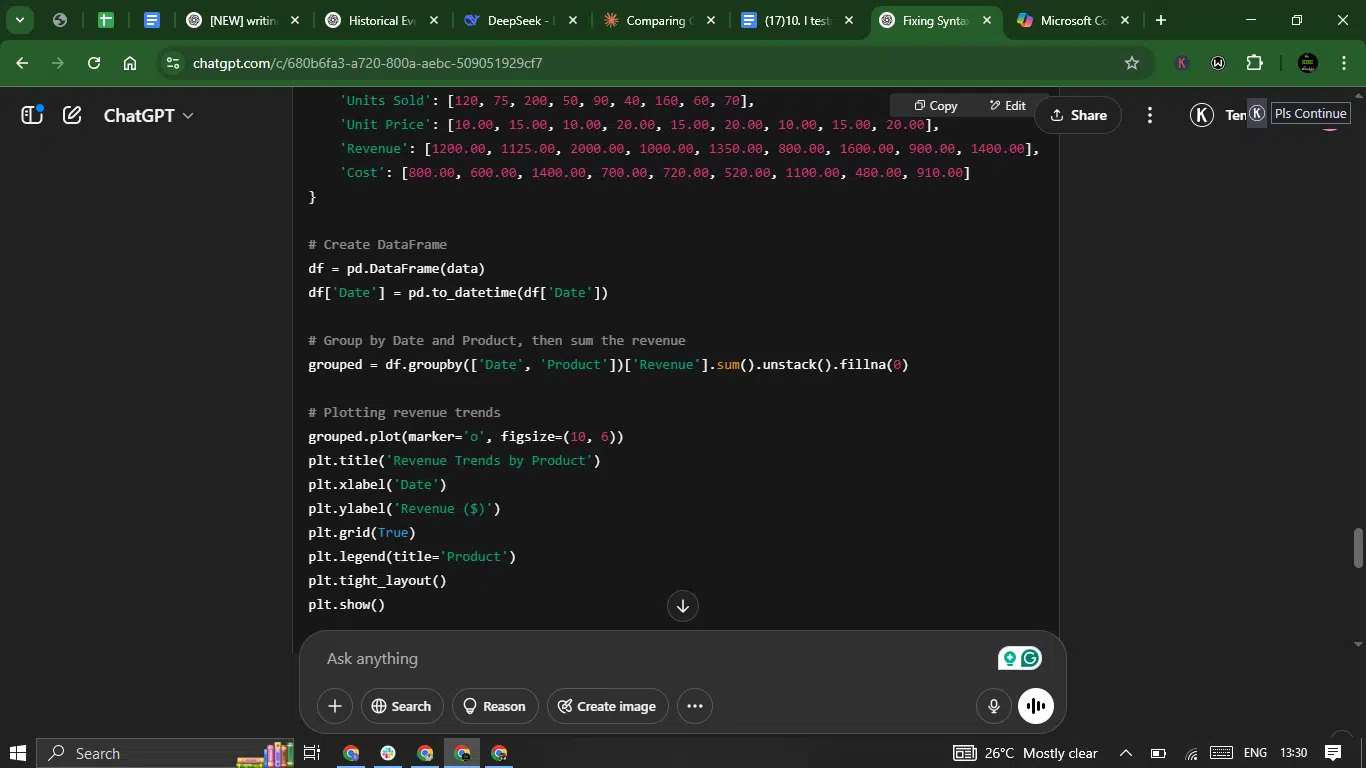

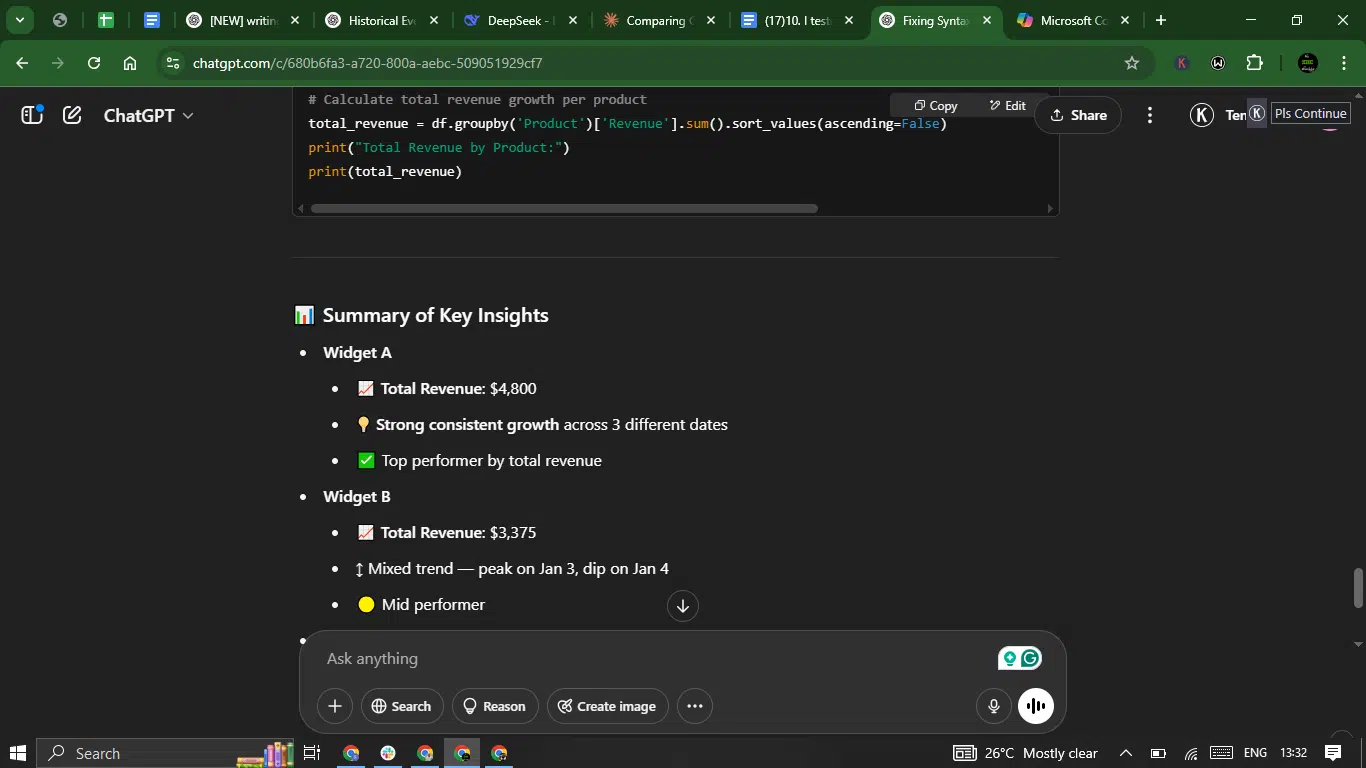

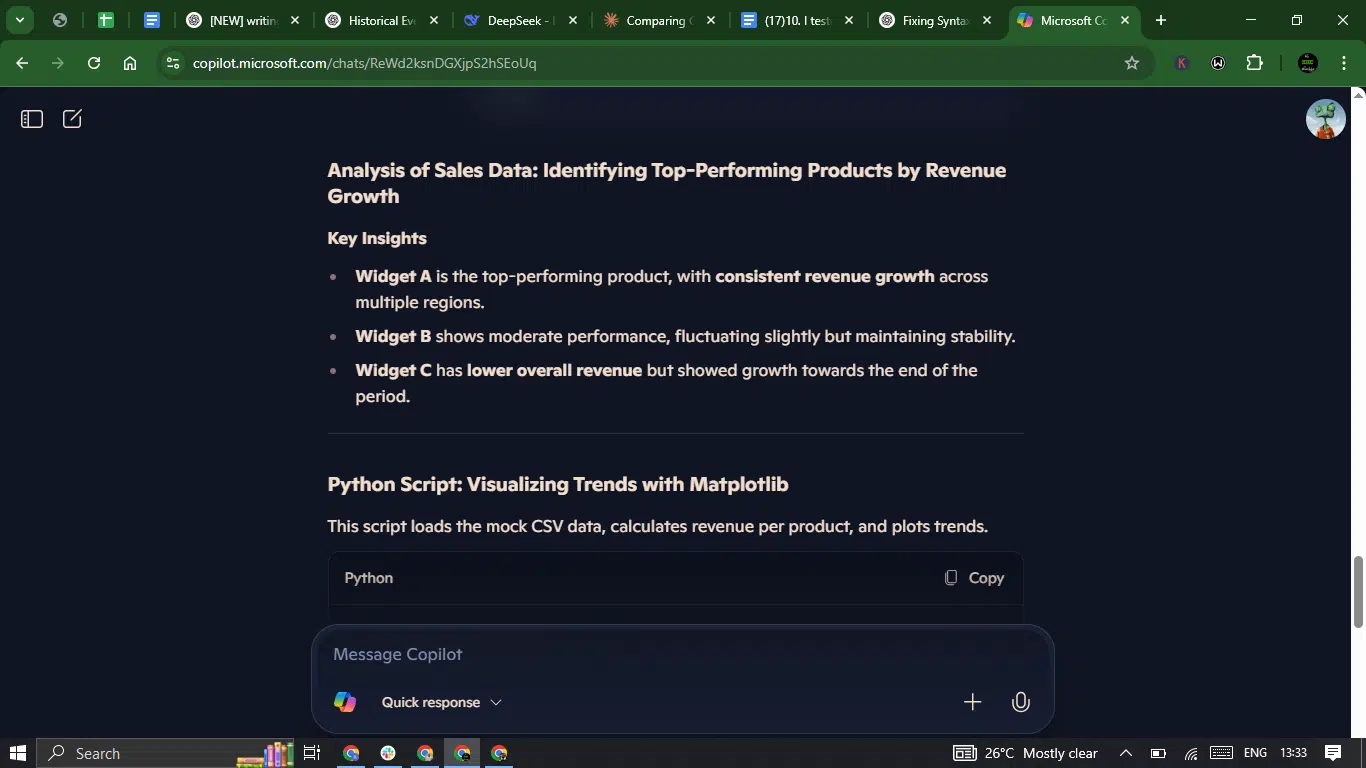

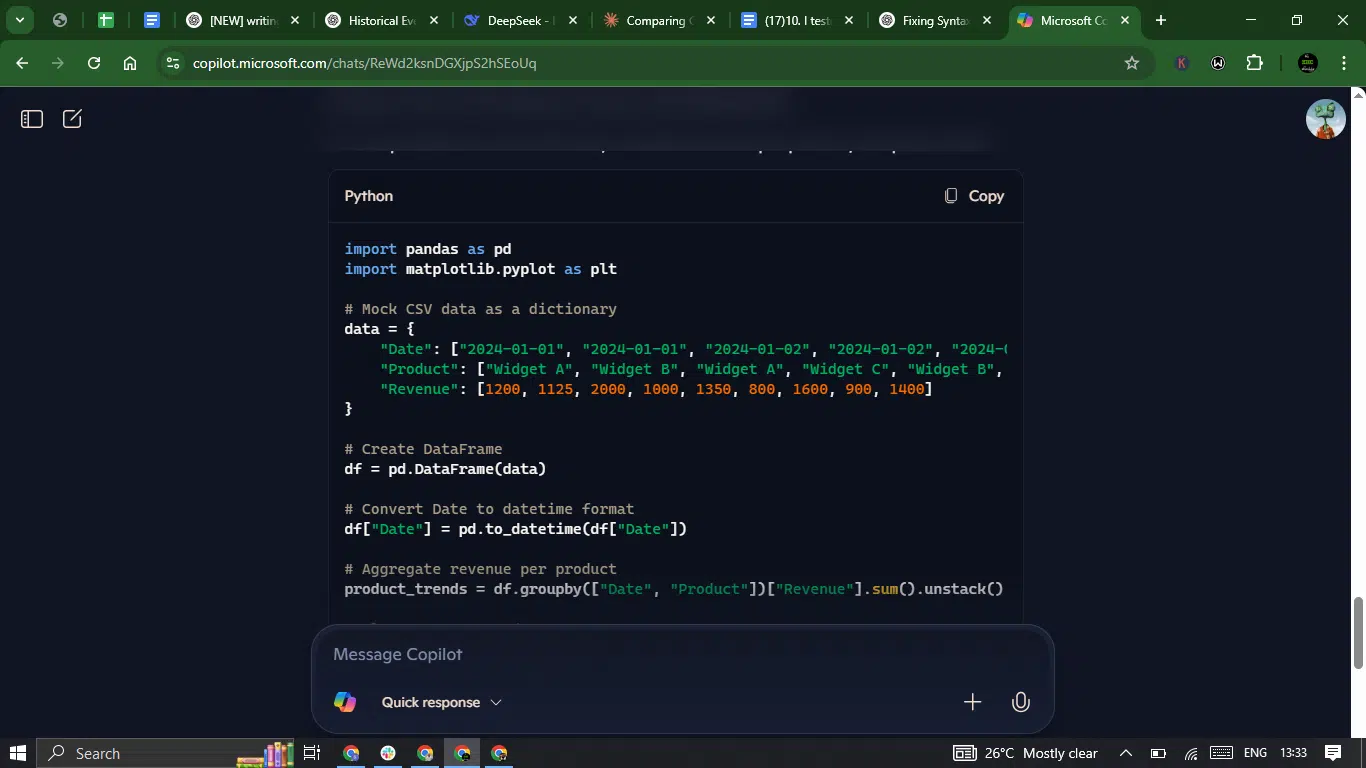

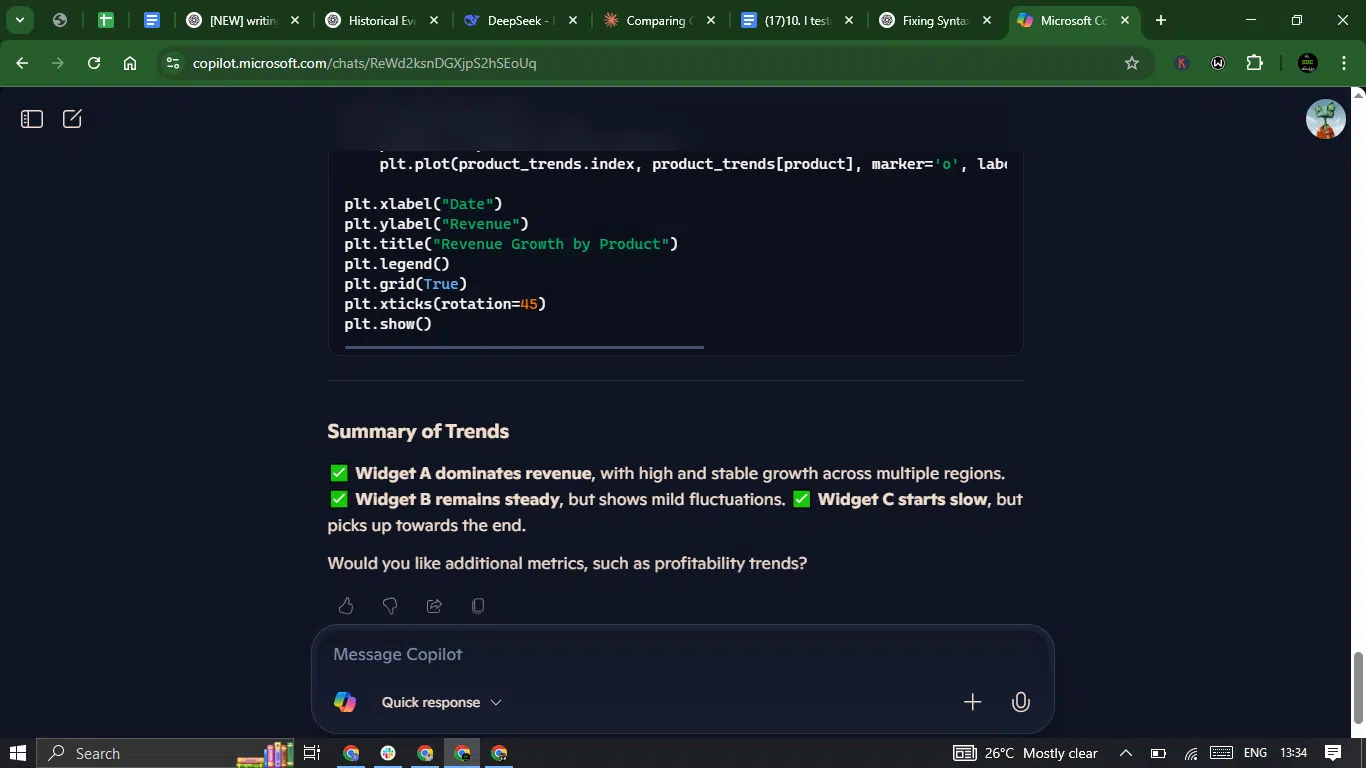

Prompt 3: Business analytics

This prompt tests each AI’s ability to handle raw data and turn it into clear, actionable business insights. I wanted to see how well they could parse data, generate meaningful visualizations, and provide practical recommendations: not just number-crunching, but the ability to interpret trends and communicate findings in a way that supports strategic decisions.

Prompt: “Analyze this mock sales CSV data to identify top-performing products by revenue growth. Provide a Python script using matplotlib to visualize trends and summarize key insights in bullet points.

Mock CSV data:

| Date | Region | Product | Units Sold | Unit Price | Revenue | Cost |
|---|---|---|---|---|---|---|
| 2024-01-01 | North | Widget A | 120 | 10.00 | 1200.00 | 800.00 |
| 2024-01-01 | South | Widget B | 75 | 15.00 | 1125.00 | 600.00 |
| 2024-01-02 | East | Widget A | 200 | 10.00 | 2000.00 | 1400.00 |
| 2024-01-02 | West | Widget C | 50 | 20.00 | 1000.00 | 700.00 |
| 2024-01-03 | North | Widget B | 90 | 15.00 | 1350.00 | 720.00 |
| 2024-01-03 | South | Widget C | 40 | 20.00 | 800.00 | 520.00 |
| 2024-01-04 | East | Widget A | 160 | 10.00 | 1600.00 | 1100.00 |
| 2024-01-04 | West | Widget B | 60 | 15.00 | 900.00 | 480.00 |
| 2024-01-05 | North | Widget C | 70 | 20.00 | 1400.00 | 910.00 |

Result:

ChatGPT response:

Copilot response:

Accuracy

- ChatGPT gave a comprehensive analysis with all data used and correctly implemented visualization code.

- Copilot left out some of the data in its analysis (it used only the Date, Product, and Revenue columns, ignoring Region, Units Sold, Unit Price, and Cost). In fairness, the prompt only asked about revenue growth, so one could argue those columns weren’t strictly necessary.

Creativity

- ChatGPT used emojis effectively to organize information and added suggestions for additional analyses.

- Copilot offered a lean presentation but was less creative in its visualization and analysis approach.

Clarity

- ChatGPT was very well-structured with clear headings, emoji markers, and logical organization.

- Copilot also had a good structure with clear sections, though less detailed in the insights.

Usability

- ChatGPT had the complete script with all data fields included and ready to run.

- Copilot’s script would also work, but is incomplete as it ignores several columns from the provided data, limiting analytical value.

Winner: ChatGPT.

Why? ChatGPT’s response is stronger for this prompt. It used every column in the dataset, producing a more comprehensive script, and its revenue-growth analysis offered clearer insights. Copilot’s response was simpler and skipped several columns, which limits its analytical value when the full dataset was provided.
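For reference, here is roughly what the number-crunching half of this task looks like. It’s a stdlib-only sketch (no pandas or matplotlib), not either tool’s script, and “revenue growth” is defined here, as an assumption, as the change between each product’s first and last sale in the sample. With only three sales per product, that’s a rough signal at best. The per-product figures it returns are what you would hand to matplotlib for the chart.

```python
import csv
import io
from collections import defaultdict

# The prompt's mock table, rewritten as plain CSV text so the sketch is self-contained.
MOCK_CSV = """Date,Region,Product,Units Sold,Unit Price,Revenue,Cost
2024-01-01,North,Widget A,120,10.00,1200.00,800.00
2024-01-01,South,Widget B,75,15.00,1125.00,600.00
2024-01-02,East,Widget A,200,10.00,2000.00,1400.00
2024-01-02,West,Widget C,50,20.00,1000.00,700.00
2024-01-03,North,Widget B,90,15.00,1350.00,720.00
2024-01-03,South,Widget C,40,20.00,800.00,520.00
2024-01-04,East,Widget A,160,10.00,1600.00,1100.00
2024-01-04,West,Widget B,60,15.00,900.00,480.00
2024-01-05,North,Widget C,70,20.00,1400.00,910.00
"""


def summarize(csv_text: str) -> dict:
    """Per-product total revenue, gross margin, and first-to-last revenue growth."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    rows.sort(key=lambda r: r["Date"])  # ISO dates sort correctly as strings

    sales_by_product = defaultdict(list)
    for row in rows:
        sales_by_product[row["Product"]].append(row)

    summary = {}
    for product, sales in sales_by_product.items():
        revenue = sum(float(s["Revenue"]) for s in sales)
        cost = sum(float(s["Cost"]) for s in sales)
        first, last = float(sales[0]["Revenue"]), float(sales[-1]["Revenue"])
        summary[product] = {
            "revenue": revenue,
            "margin": (revenue - cost) / revenue,
            # Assumption: "growth" = change from a product's first to last sale.
            "growth": (last - first) / first,
        }
    return summary


summary = summarize(MOCK_CSV)
ranked = sorted(summary.items(), key=lambda kv: kv[1]["growth"], reverse=True)
for product, s in ranked:
    print(f"{product}: revenue {s['revenue']:.2f}, "
          f"margin {s['margin']:.1%}, growth {s['growth']:+.1%}")
```

On this data, the sketch ranks Widget C first on growth (its last sale brought in 40% more than its first), even though Widget A has the highest total revenue and Widget B the best margin. Surfacing that kind of distinction is what separates a useful answer to this prompt from a merely correct one.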

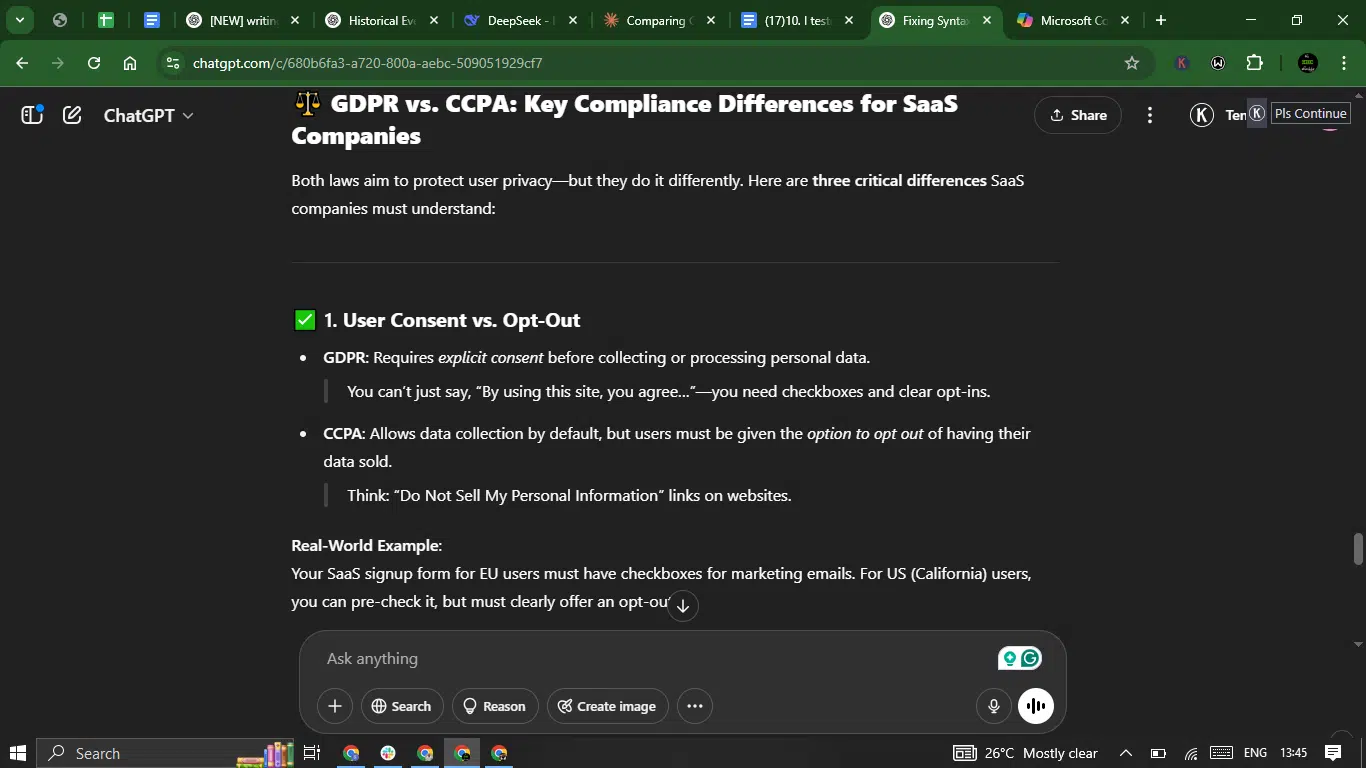

Prompt 4: Legal nuance

This test was designed to show how well each tool handles complex legal concepts with accuracy and precision. I wanted to see well-structured explanations, comparative legal analysis, and real-world examples that make the topic clearer.

Prompt: “Compare GDPR and CCPA compliance requirements for a SaaS company storing EU/US user data. Highlight 3 critical differences with real-world examples.”

Result:

ChatGPT response:

Copilot response:

Accuracy

- ChatGPT provided detailed, accurate information about each regulation with clear distinctions.

- Copilot also offered accurate information overall, but was slightly less specific in some areas, particularly regarding CCPA penalties (it didn’t mention the fine of up to $7,500 per intentional violation).

Creativity

- ChatGPT used emoji indicators and creative formatting to make the technical content more digestible.

- Copilot also made good use of emoji numbering, but was less creative in presentation overall.

Clarity

- ChatGPT was exceptionally well-structured with clear headings, bullet points, and real-world examples tied directly to SaaS companies.

- Copilot also had a good structure, along with examples, albeit more general.

Usability

- Both ChatGPT and Copilot had useful information for readers.

Winner: ChatGPT.

Why? ChatGPT delivered a stronger response for this prompt. Its comparison was more thorough. The “Final Thoughts for SaaS Founders” section provided practical next steps, which Copilot’s response lacked. ChatGPT also included more specific details about penalties and enforcement mechanisms.

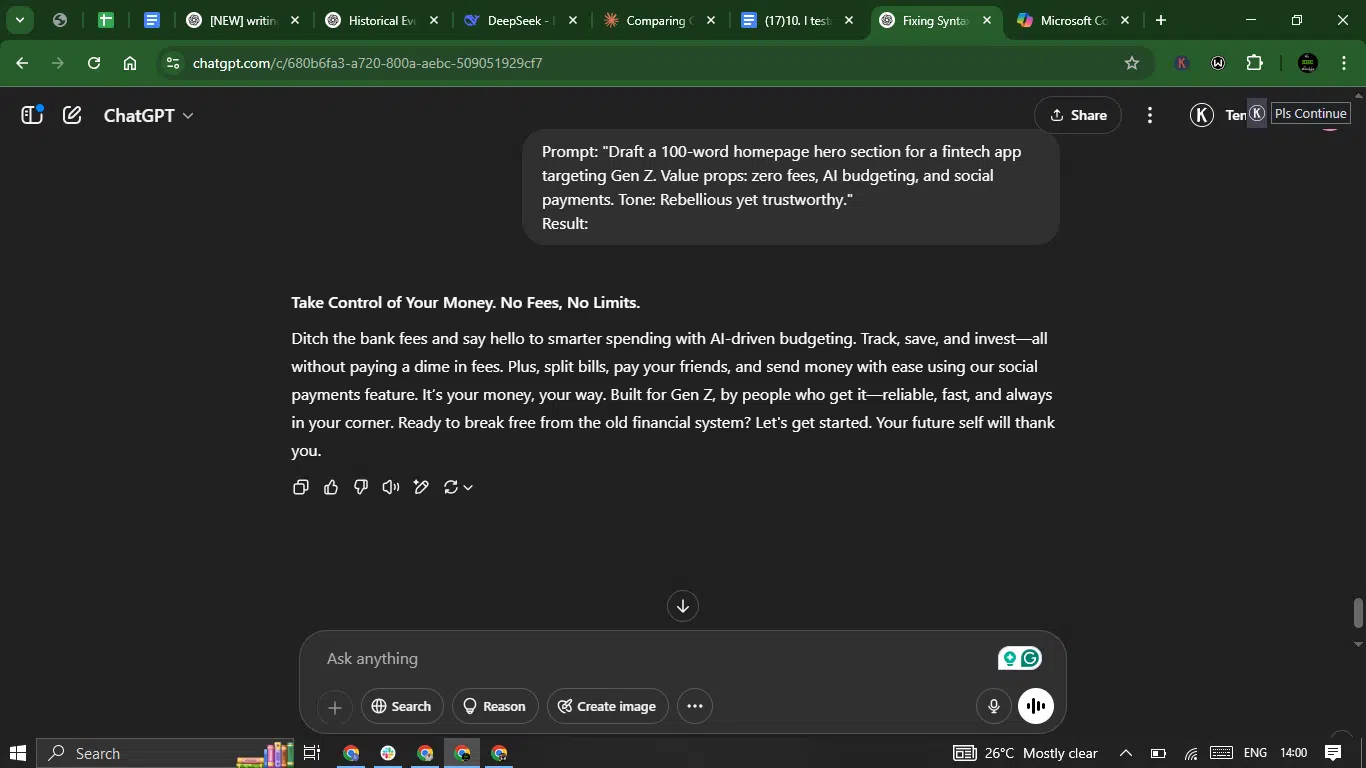

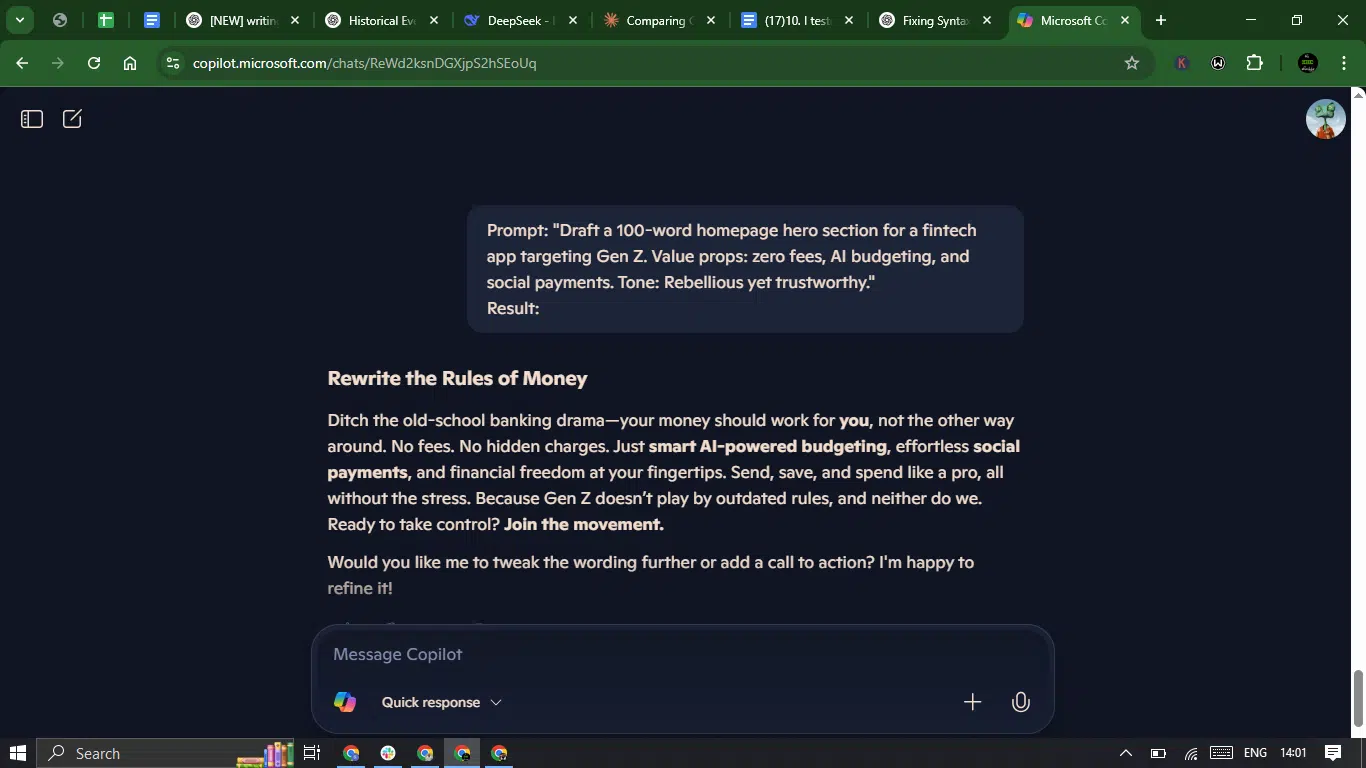

Prompt 5: UX copywriting

For this prompt, I wanted to evaluate how effectively ChatGPT and Copilot could craft concise, persuasive user-facing text: copy that doesn’t just inform but guides users, reinforces brand voice, adapts to the target audience, and ultimately drives conversions or other desired actions within a digital product or service.

Prompt: “Draft a 100-word homepage hero section for a fintech app targeting Gen Z. Value props: zero fees, AI budgeting, and social payments. Tone should be rebellious yet trustworthy.”

Result:

ChatGPT response:

Copilot response:

Accuracy

- ChatGPT included all three value propositions (zero fees, AI budgeting, social payments) clearly and stayed within the 100-word limit.

- Copilot successfully incorporated all three value props and maintained the word count requirement.

Creativity

- ChatGPT created a compelling hero section with a clear message and good rhythm.

- Copilot was more creative. I particularly loved the headline (“Rewrite the Rules of Money”). It also used strategic bolding to emphasize key points, which is effective for web design. It even offered to tweak the wording at the end; looking back, that offer was unnecessary, but it was a proactive touch.

Clarity

- ChatGPT was clear and straightforward, with messaging that communicated the value propositions well.

- Copilot was equally clear and concise with punchier sentences that work better for a hero section.

Usability

- Both ChatGPT’s and Copilot’s responses are ready to use, with good formatting.

Winner: Copilot.

Why? Copilot edges out ChatGPT with a slightly stronger response. Copilot’s copy has more of the “rebellious yet trustworthy” tone requested in the prompt, with phrases like “Ditch the old-school banking drama” and “Gen Z doesn’t play by outdated rules” that better capture the rebellious spirit. The strategic use of bold text also demonstrates a better understanding of web design principles for hero sections. Both responses are good, but Copilot’s has a bit more marketing punch that aligns with the Gen Z target audience.

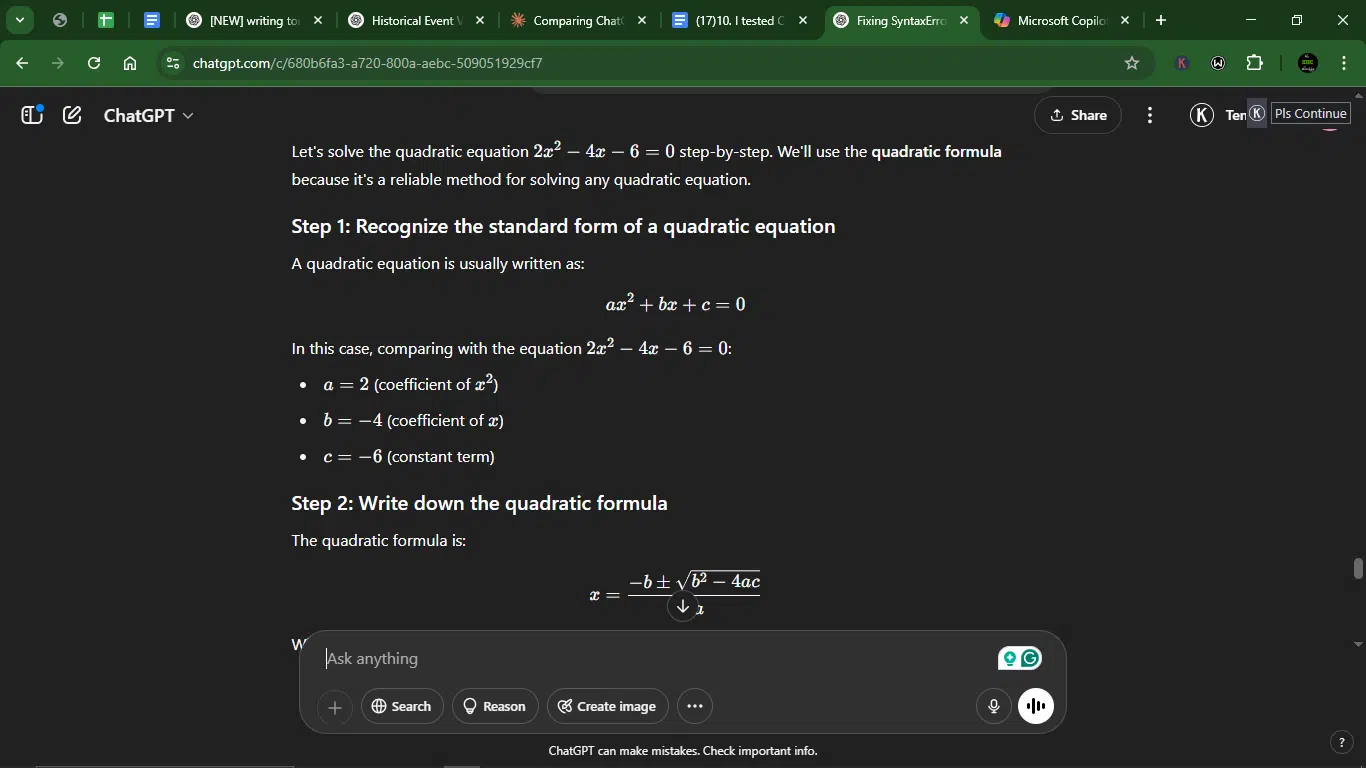

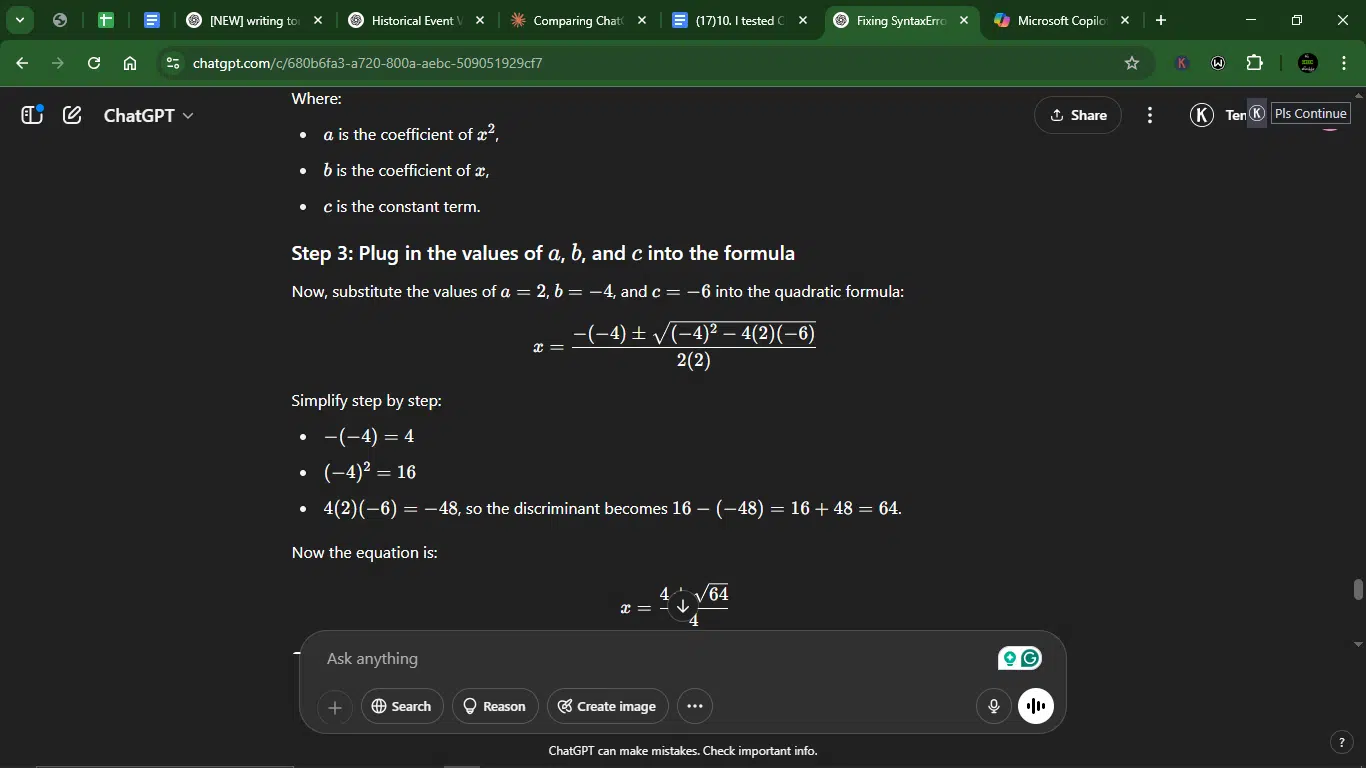

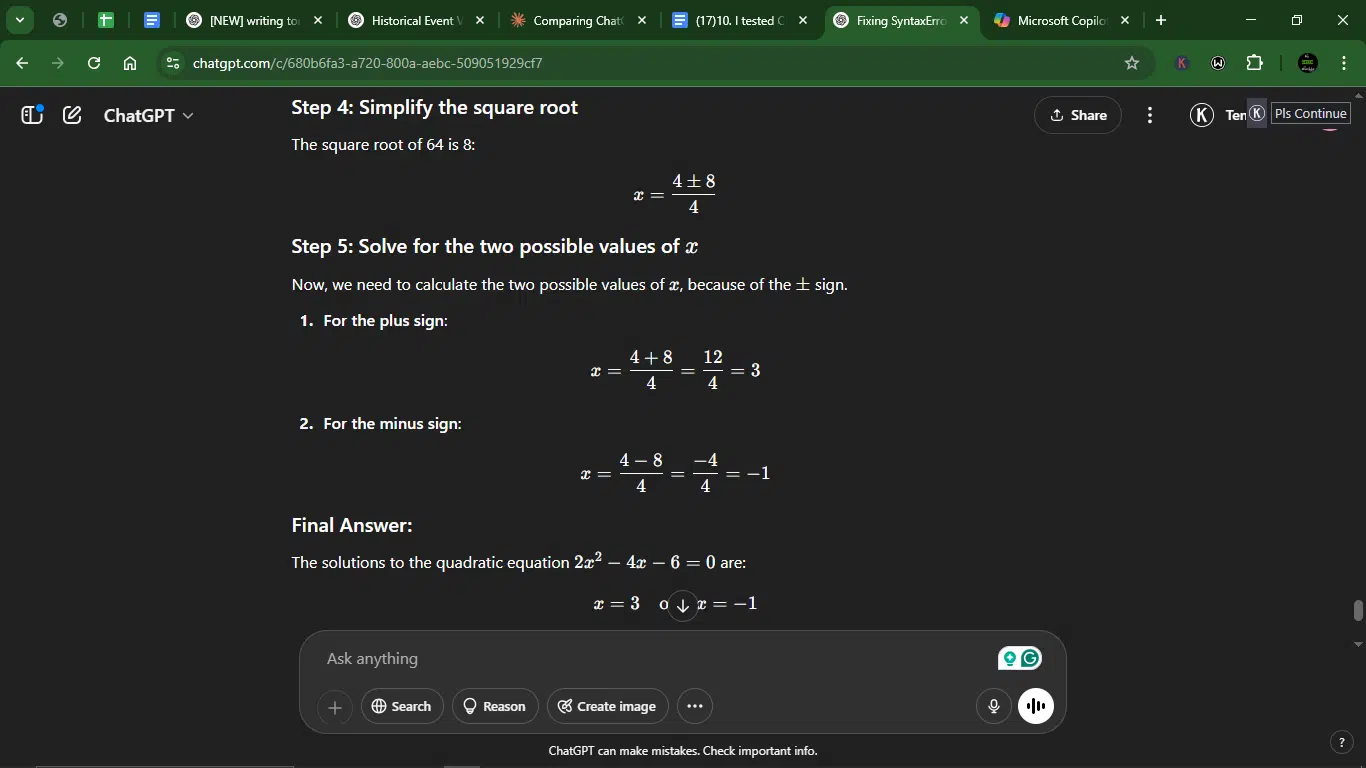

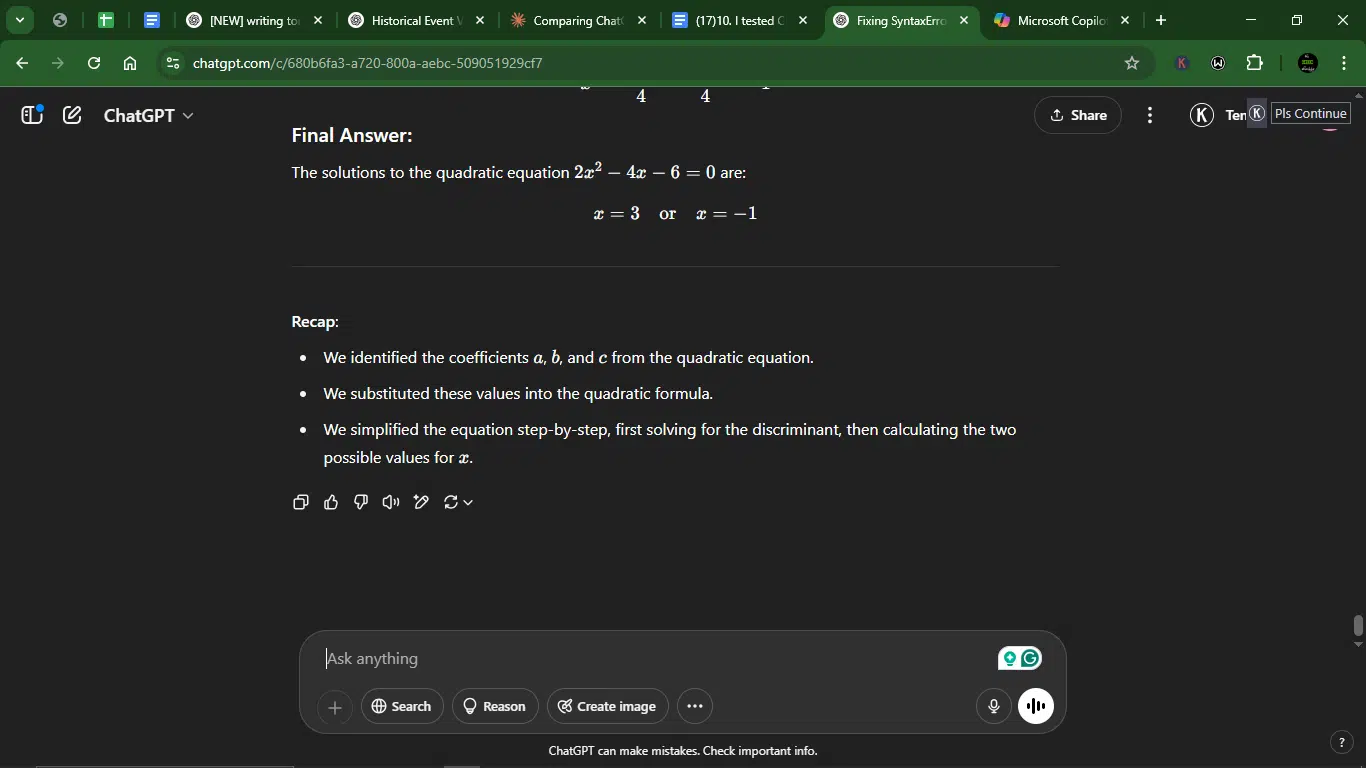

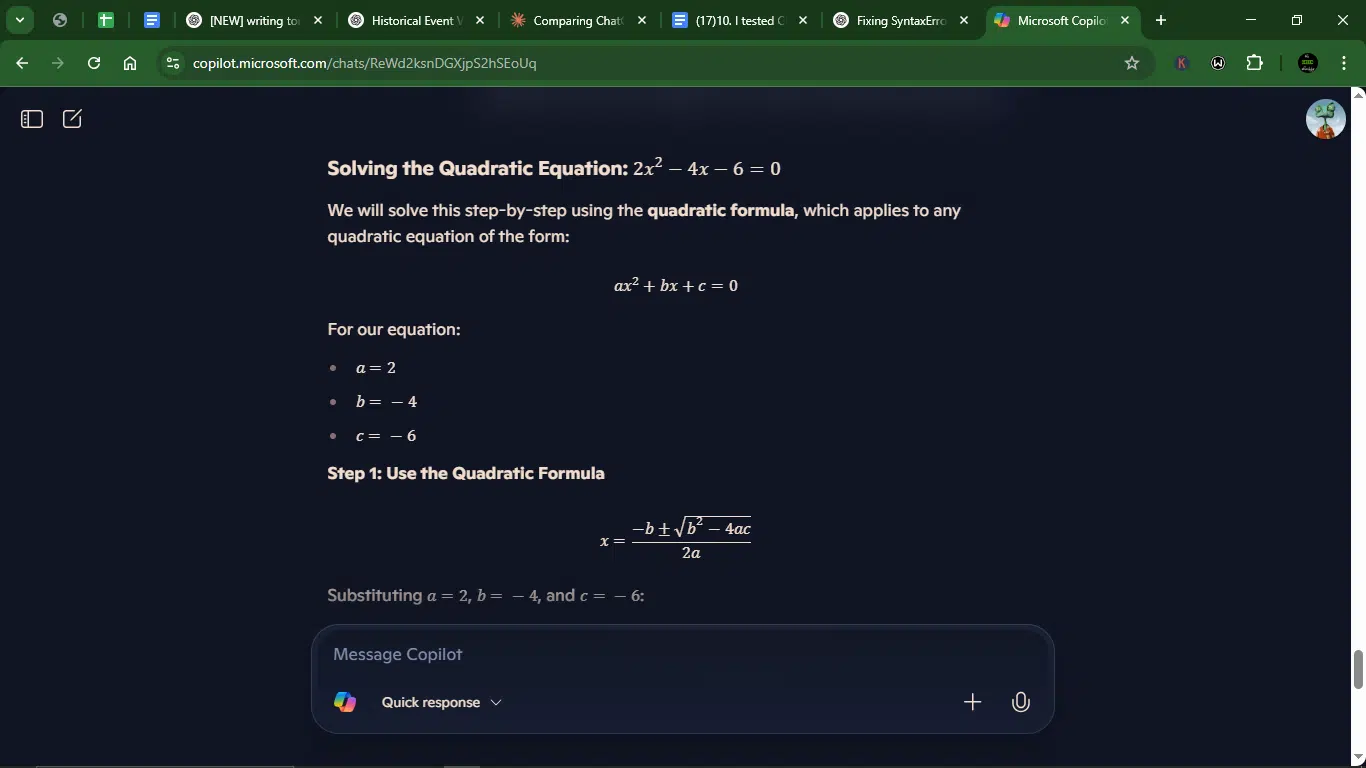

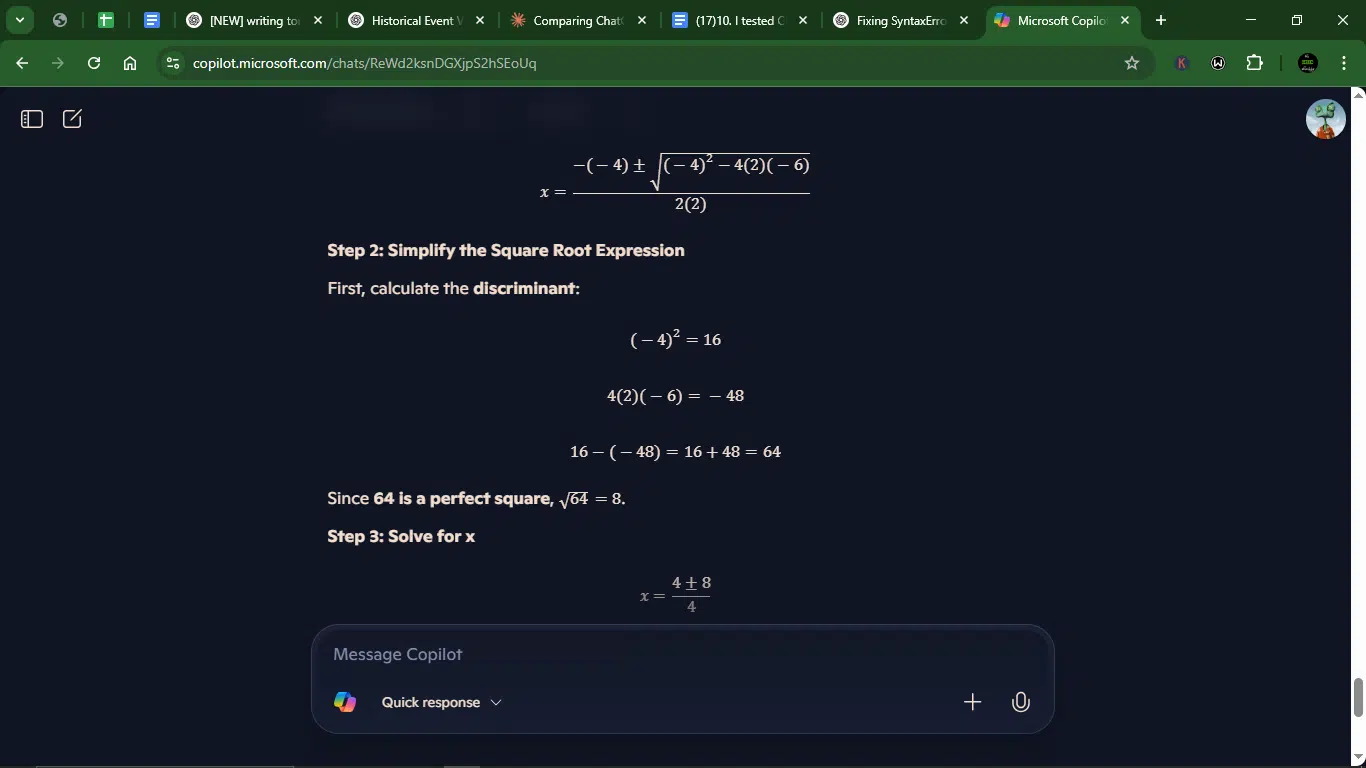

Prompt 6: Math problem-solving

I used this prompt to test their ability to approach math problems with logical rigor and provide clear, step-by-step explanations. The goal is to assess each AI’s ability to break down complex concepts in a way that is pedagogically sound and easy to follow.

Prompt: “Solve this Quadratic equation step-by-step using any method: 2x² − 4x − 6 = 0. Explain each step as if teaching a beginner.”

Result:

ChatGPT response:

Copilot response:

Accuracy

- ChatGPT and Copilot provided the correct solution and answers, with accurate step-by-step calculations.

Creativity

- For the first time in my testing of ChatGPT and Copilot, both AI models agreed to use the standard approach with minimal creative elements, which is appropriate for a math problem.

Clarity

- ChatGPT offered clear explanations with numbered steps, though the formatting of equations could be improved.

- Copilot did the same, but with better mathematical notation and cleaner organization.

Usability

- ChatGPT gave a useful explanation that a beginner could follow, though the mathematical notation isn’t consistently formatted.

- Copilot was more visually organized with better use of mathematical formatting, making it easier for beginners to understand.

Winner: Copilot.

Why? Copilot has a slight edge over ChatGPT for this prompt. Both AI tools correctly solved the equation and provided step-by-step explanations suitable for beginners. However, Copilot’s response has better mathematical formatting with proper equation notation, which makes it easier to follow for someone learning quadratic equations. The cleaner structure with clearly highlighted steps and better visual organization makes Copilot’s explanation more effective as a teaching tool.
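For reference, here is the factoring route a beginner-friendly answer to 2x² − 4x − 6 = 0 should follow, with the quadratic formula as a cross-check. This is a standard worked solution, not a transcription of either tool’s output.

```latex
\begin{aligned}
2x^2 - 4x - 6 &= 0 \\
x^2 - 2x - 3 &= 0 && \text{divide every term by } 2 \\
(x - 3)(x + 1) &= 0 && \text{since } (-3)(1) = -3 \text{ and } (-3) + 1 = -2 \\
x = 3 \quad &\text{or} \quad x = -1 && \text{zero-product property}
\end{aligned}

x = \frac{-(-4) \pm \sqrt{(-4)^2 - 4(2)(-6)}}{2(2)}
  = \frac{4 \pm \sqrt{64}}{4}
  = \frac{4 \pm 8}{4}
  \quad\Rightarrow\quad x = 3 \ \text{or}\ x = -1
```

Dividing by 2 first keeps the numbers small, which is why it’s the friendlier first step for a beginner; the formula confirms the same two roots.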

Prompt 7: News summarization

My focus with this prompt was to find out how well these AI models could distill complex news stories into concise summaries, while ensuring the key facts and main points are preserved. I also wanted to see how they would handle jargon, technical details, and multiple viewpoints, presenting the essential information in a way that is easily digestible for a wide audience.

Prompt: “Summarize this 300-word Techpoint Africa Digest piece titled, ‘Global firm launches Sierra Leone’s first digital banking super app’ in 3 sentences. Preserve technical terms but simplify for a general audience.

The news article: Global banking tech firm Velmie has teamed up with Sierra Leone’s Metro Cable to launch Vult, the country’s first digital banking super app and it’s already making waves. The app, now live, aims to boost financial inclusion by combining multiple financial services into one easy-to-use platform.

Vult lets users do everything from mobile money transfers and card payments to managing bank accounts and paying bills, all from one app. Velmie says it only took six months to go from idea to launch, thanks to its flexible, API-first tech infrastructure.

This launch couldn’t have come at a better time. While mobile money has helped increase financial access across sub-Saharan Africa, the World Bank notes there’s still a gap in usage and equality, especially across income levels, age, gender, and education.

Velmie’s CEO, Slava Ivashkin, called the launch more than just a technical win. “It’s about delivering real, inclusive financial impact,” he said, adding that their work with Metro Cable shows how smart partnerships can drive local innovation and help financial services scale fast.

Velmie’s CMO, Paul Shumsky, also weighed in, calling Africa “one of the most dynamic frontiers” for digital finance. He said Vult isn’t just another app, but a foundation for financial empowerment in Sierra Leone.

Aminata Bangura, head of product at Vult, said the tech behind the app gave them room to grow and adapt to local needs. “We’re proud to bring world-class financial tools to Sierra Leoneans and we’re just getting started.”

Result:

ChatGPT response:

Copilot response:

Accuracy

- ChatGPT and Copilot captured the key points about the app launch, partnership, and purpose, condensed into exactly 3 sentences as requested. They also successfully preserved key technical terms (API-first infrastructure).

Creativity

- ChatGPT stuck with the standard summary approach with little creative formatting, not that any was needed.

- Copilot used bold formatting to highlight key technical terms, making them stand out to the reader.

Clarity

- ChatGPT’s writing is clear, but the sentences run long, making them slightly harder to follow.

- Copilot used long-running sentences too, but structured them better, employing pacing and breaks that make the summary more digestible.

Usability

- Both summaries are usable as-is for most purposes.

Winner: Copilot.

Why? Again, Copilot provided the stronger response. Both AI tools successfully summarized the article in exactly three sentences as requested and preserved the technical terms (but Copilot did a better job at highlighting them) while creating a more readable summary.

Prompt 8: Ethical dilemma

This prompt tests ChatGPT and Copilot’s ability to engage in moral reasoning and provide a well-reasoned response to a challenging ethical question. The goal is to assess their capacity to weigh competing values, consider different perspectives, and present a concise yet thoughtful analysis.

Prompt: “A self-driving car must choose between hitting a pedestrian or running over a herd of sheep. Justify the ethical framework for each choice in 100 words.”

Result:

ChatGPT response:

Copilot response:

Accuracy

- ChatGPT correctly identified and explained three relevant ethical frameworks that apply to the scenario.

- Copilot focused on two ethical frameworks.

Creativity

- ChatGPT fell short here. I expected each tool to exercise judgment and side clearly with human life; in the end, only Copilot came close. Points to it for adding creative considerations that weigh human values.

Clarity

- ChatGPT gave clear explanations of each ethical framework with good structure.

- Copilot is also clear, but with better paragraph spacing to improve readability.

Usability

- ChatGPT needs to be edited to reflect the superiority of human life over animal life.

- Copilot is close, but its priority on human life still needs more emphasis.

Winner: Copilot.

Why? For this prompt, Copilot provided the more nuanced response. Its answer added some interesting perspectives that directly answered the question and at the same time put more weight on human lives. Both responses stayed within 100 words as requested.

Prompt 9: Productivity hack

With this prompt, I wanted to see how these two AI models would provide practical, tool-specific productivity hacks that are both relevant and actionable. My goal is to evaluate how well they can suggest concrete steps that leverage specific tools or techniques to improve efficiency, streamline workflows, and boost productivity.

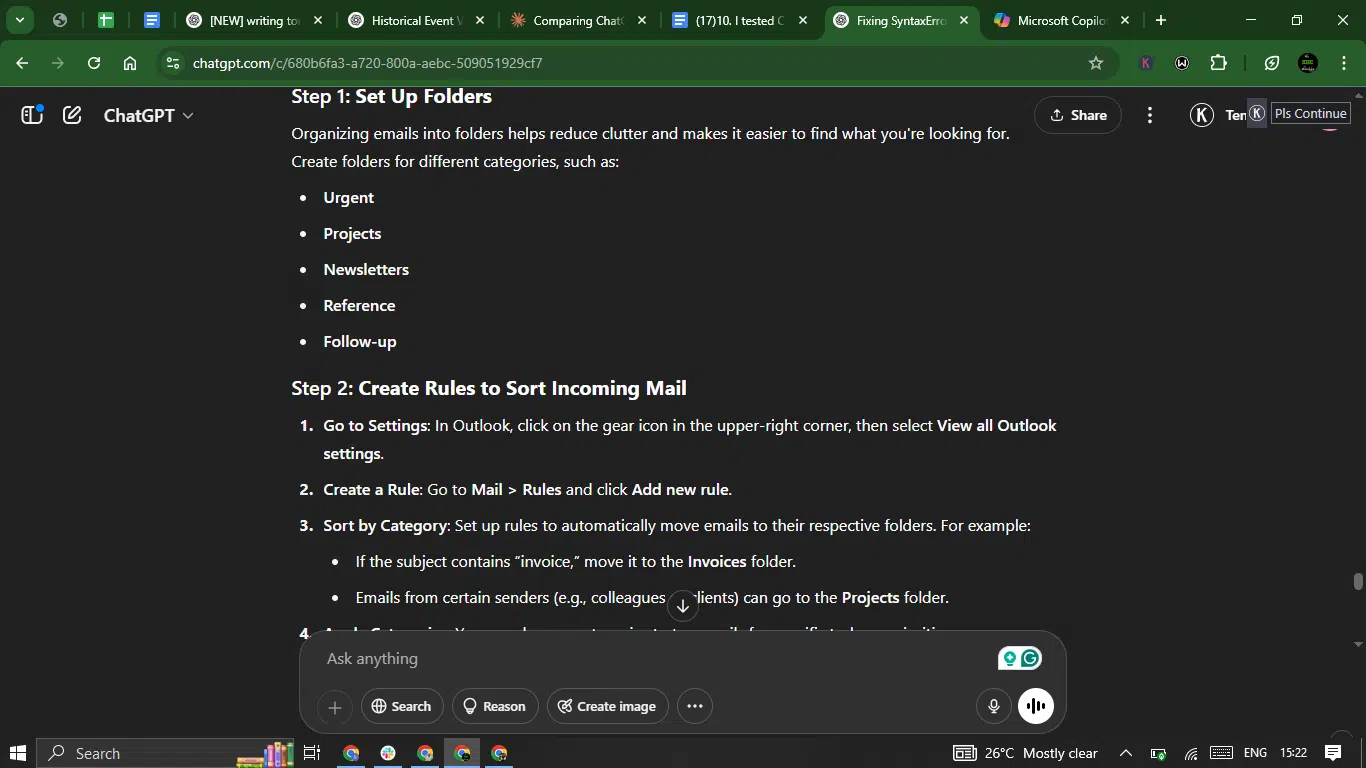

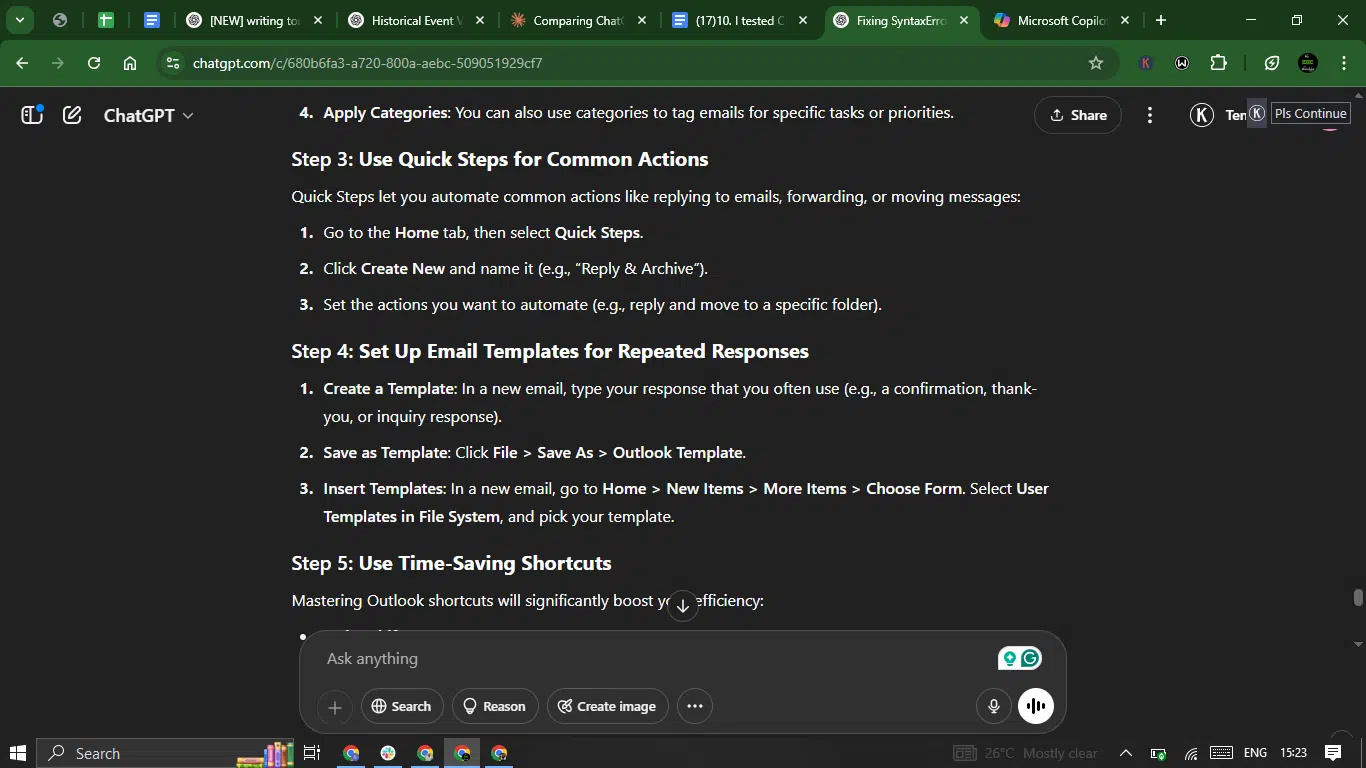

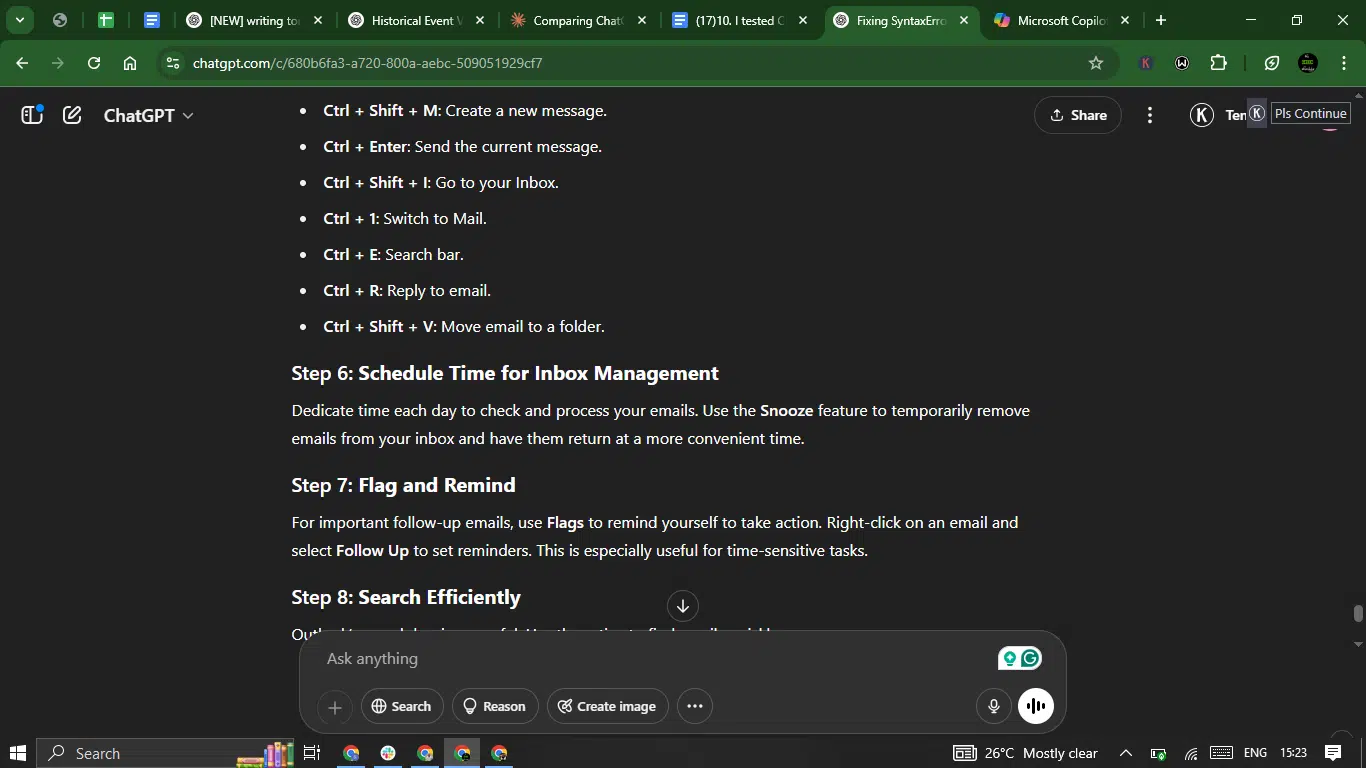

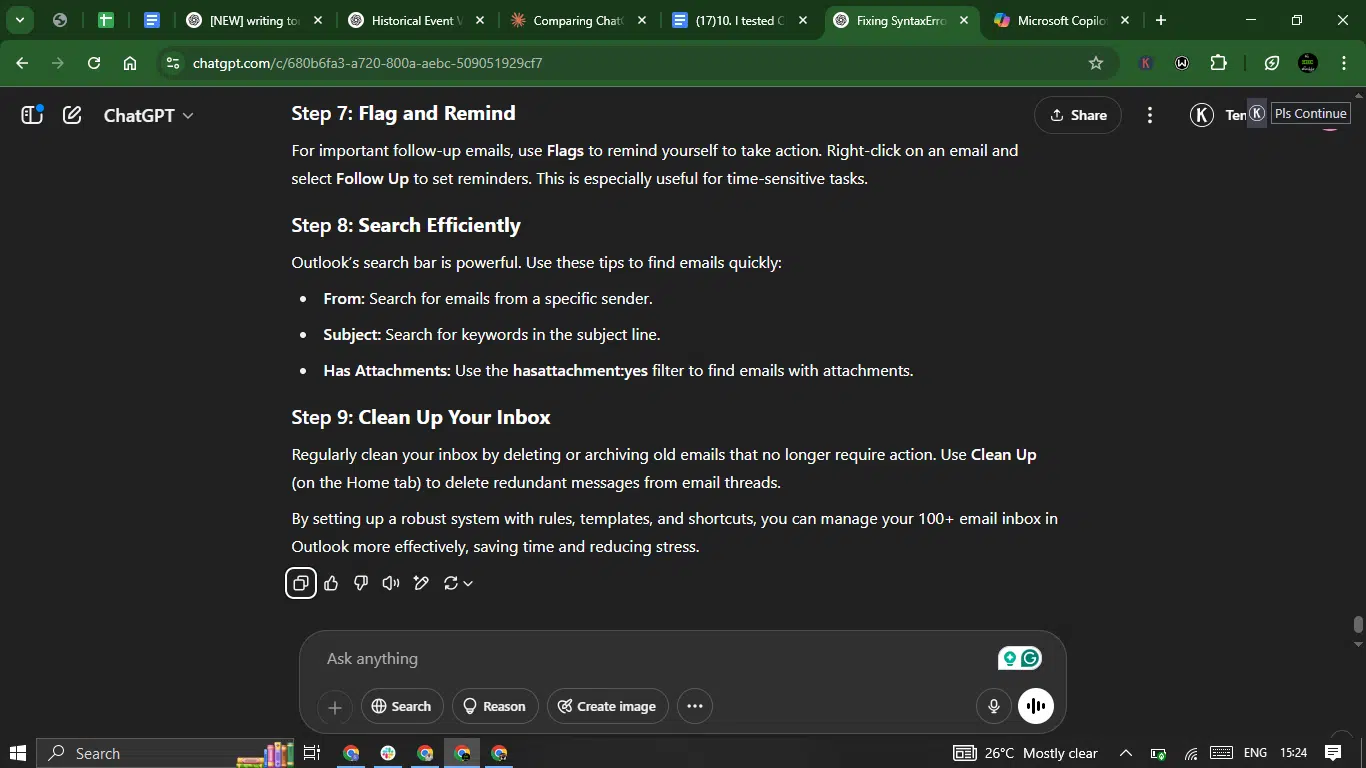

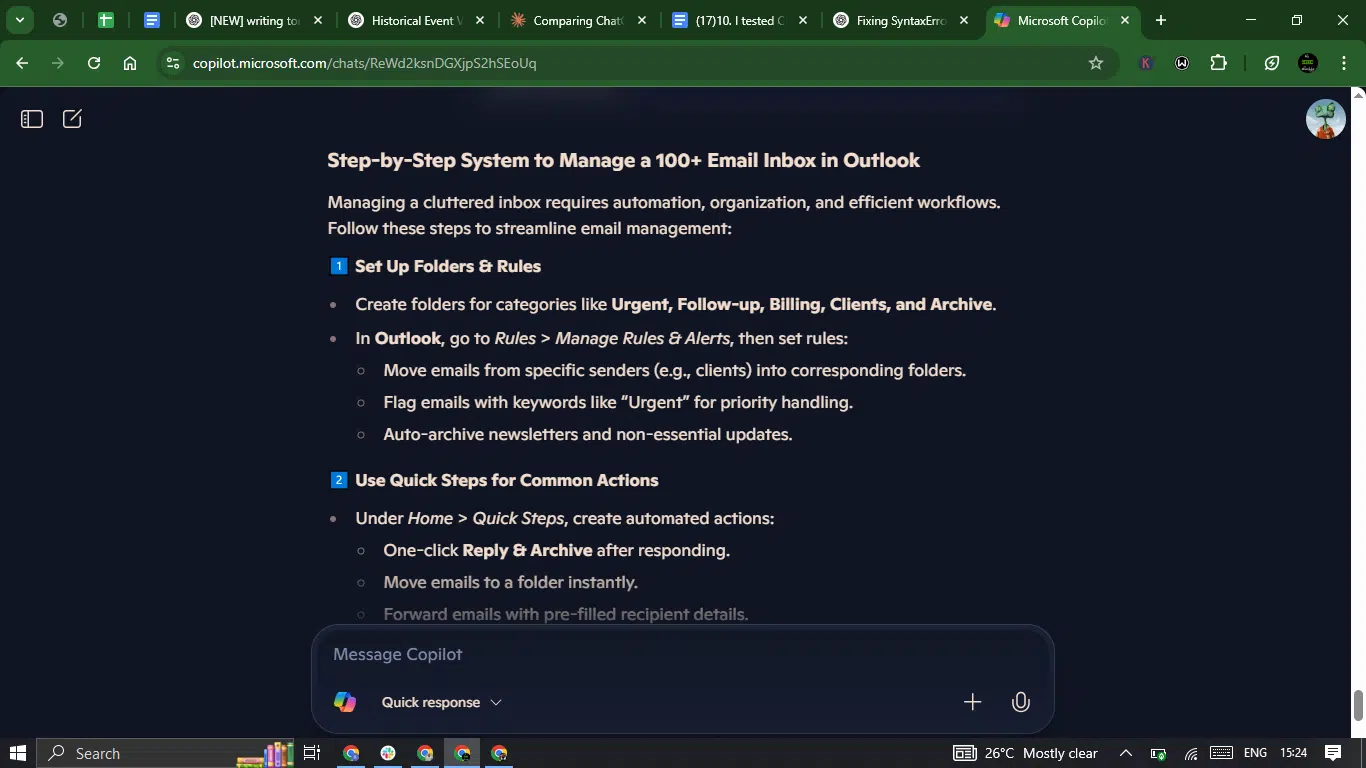

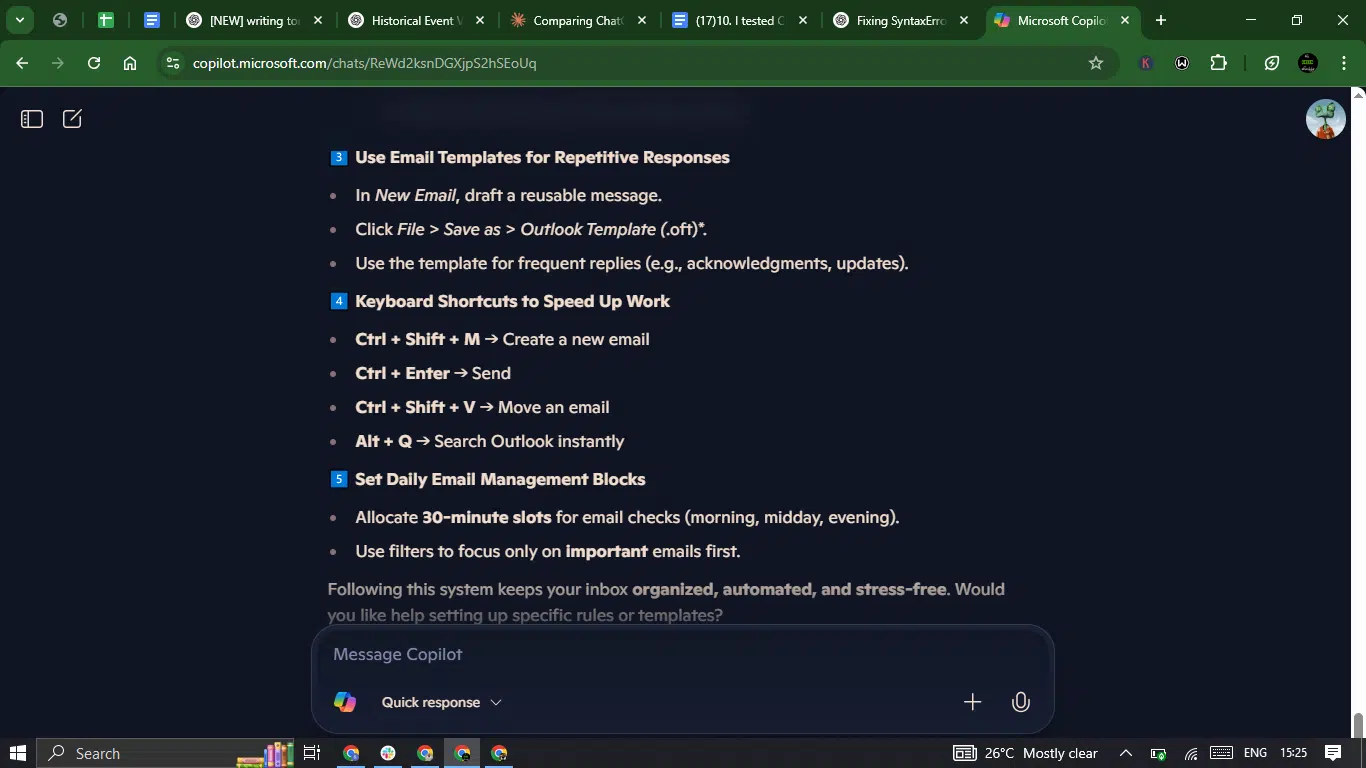

Prompt: “Provide a step-by-step system to manage a 100+ email inbox in Outlook. Include folder rules, templates, and time-saving shortcuts.”

Result:

ChatGPT response:

Copilot response:

Accuracy

- ChatGPT provided a comprehensive, step-by-step guide covering all requested elements (folder rules, templates, shortcuts) with precise Outlook instructions.

- Copilot covered all main elements but missed some details like specific rule creation paths and the “Clean Up” feature mentioned by ChatGPT.

Creativity

- ChatGPT used a standard but thorough approach with logical grouping of related functions (e.g., pairing folder setup with rules).

- Copilot employed a similar structure to ChatGPT but used emoji numbering, which added slight visual appeal.

Clarity

- ChatGPT had very clear explanations with numbered steps and sub-bullets for complex actions.

- Copilot was also clear but slightly less structured, with some combined steps (e.g., folder setup and rules in one section).

Usability

- ChatGPT’s solution is immediately actionable, with exact menu paths and shortcut keys. The only improvement I’d suggest is clearer visual separation between steps.

- Copilot’s response is just as practical, but would benefit from more detailed instructions like ChatGPT’s rule creation example.

Winner: ChatGPT.

Why? ChatGPT provided a better response. Its answer was more comprehensive, with clearer step-by-step instructions and more complete coverage of Outlook features. While both responses addressed the core requirements, ChatGPT’s version included additional helpful details like the “Clean Up” function and more thorough template instructions.
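Outlook’s folder rules boil down to an ordered list of condition-and-action pairs. The toy model below is only meant to make that logic concrete: it is not Outlook’s API or how you configure Outlook itself, and the senders, subjects, and folder names are hypothetical. It assumes every rule has Outlook’s “stop processing more rules” option turned on, so the first matching rule wins.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Email:
    sender: str
    subject: str


@dataclass
class Rule:
    name: str
    matches: Callable[[Email], bool]  # the rule's condition
    folder: str                       # the rule's action: move to this folder


# Hypothetical rules, checked top to bottom.
RULES = [
    Rule("Team mail", lambda e: e.sender.lower().endswith("@team.example"), "Team"),
    Rule("Newsletters", lambda e: "newsletter" in e.subject.lower(), "Read Later"),
    Rule("Receipts",
         lambda e: any(w in e.subject.lower() for w in ("receipt", "invoice")),
         "Receipts"),
]


def route(email: Email, rules: list = RULES, default: str = "Inbox") -> str:
    """Return the folder for an email; the first matching rule wins."""
    for rule in rules:
        if rule.matches(email):
            return rule.folder
    return default  # nothing matched: leave it in the inbox


inbox = [
    Email("lead@team.example", "Sprint review moved"),
    Email("news@vendor.example", "Weekly newsletter"),
    Email("billing@shop.example", "Your receipt #1234"),
    Email("friend@mail.example", "Lunch?"),
    Email("lead@team.example", "Team newsletter"),
]

for email in inbox:
    print(f"{email.subject:<22} -> {route(email)}")
```

Rule order matters: the last email is a newsletter, but it lands in Team rather than Read Later because the team rule is checked first. That’s worth keeping in mind when either tool hands you a stack of rules to create.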

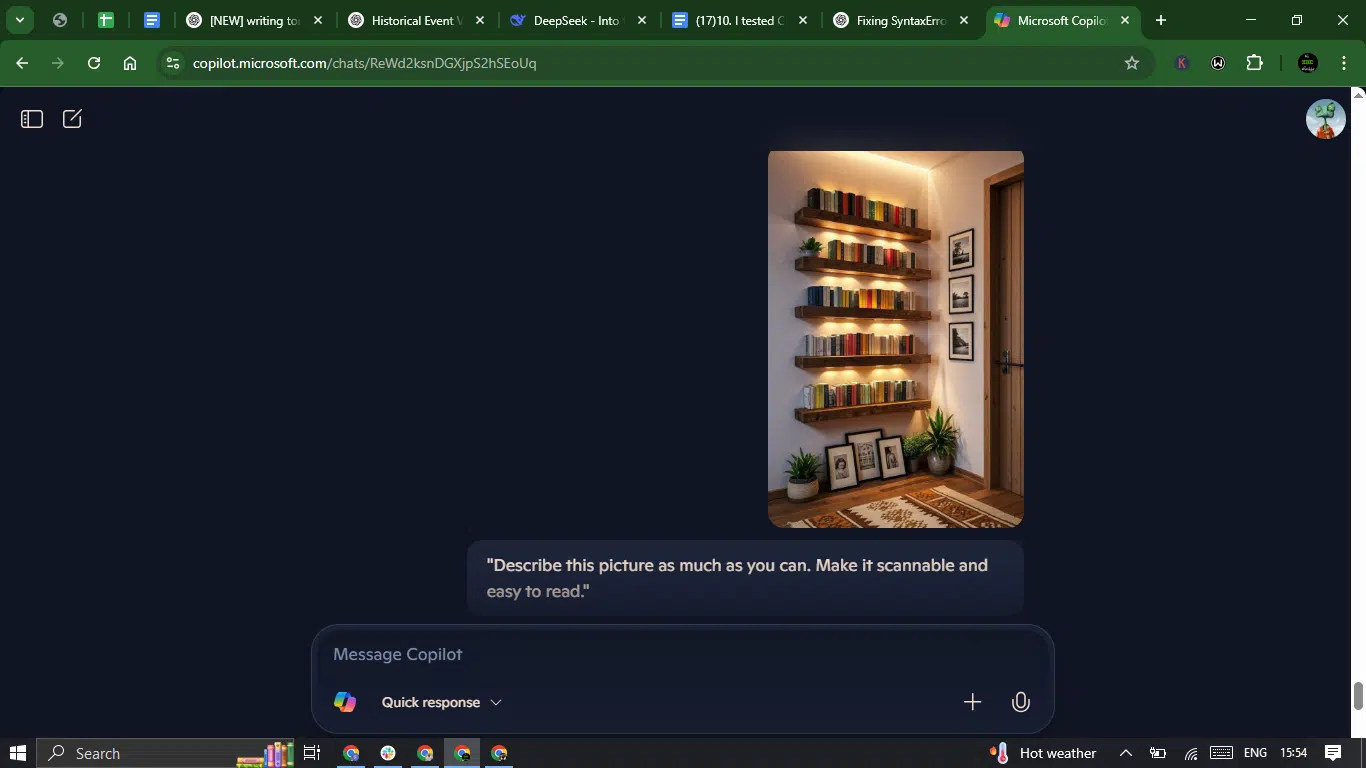

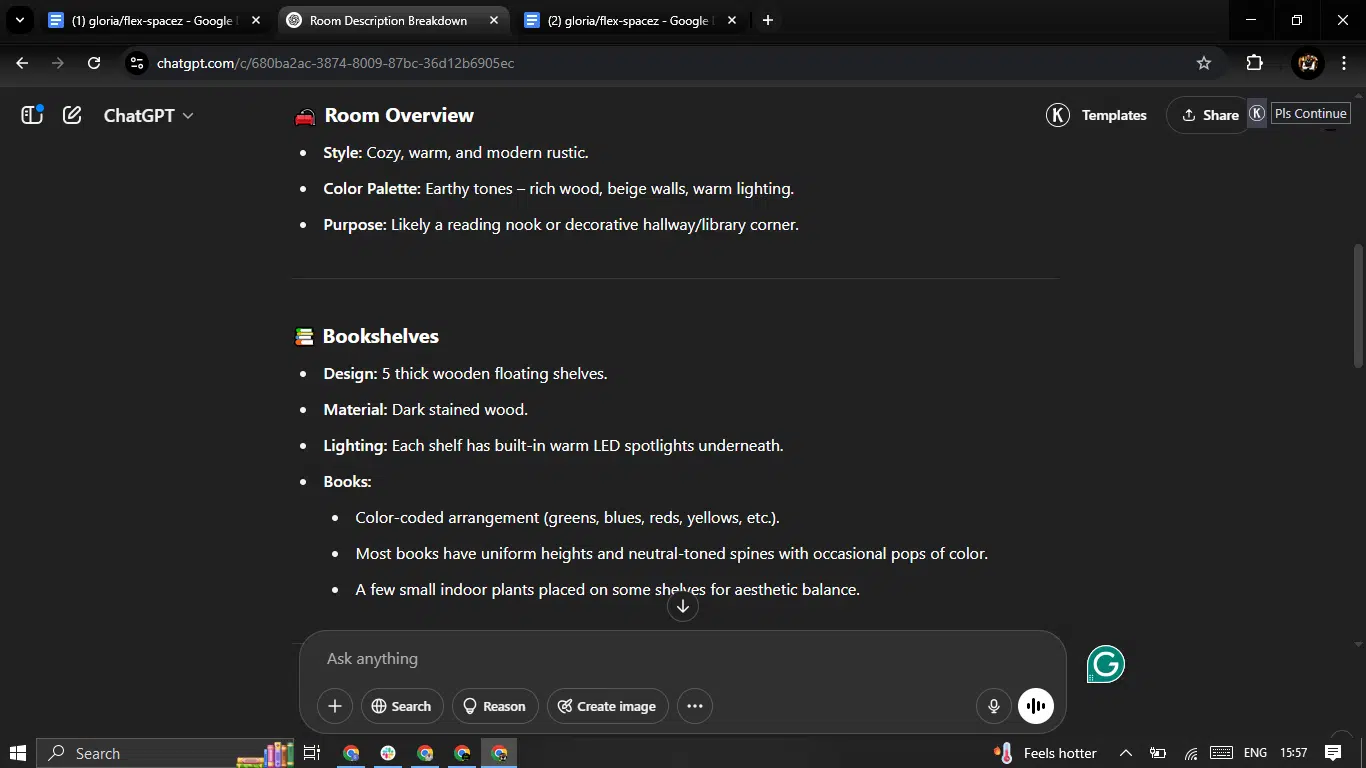

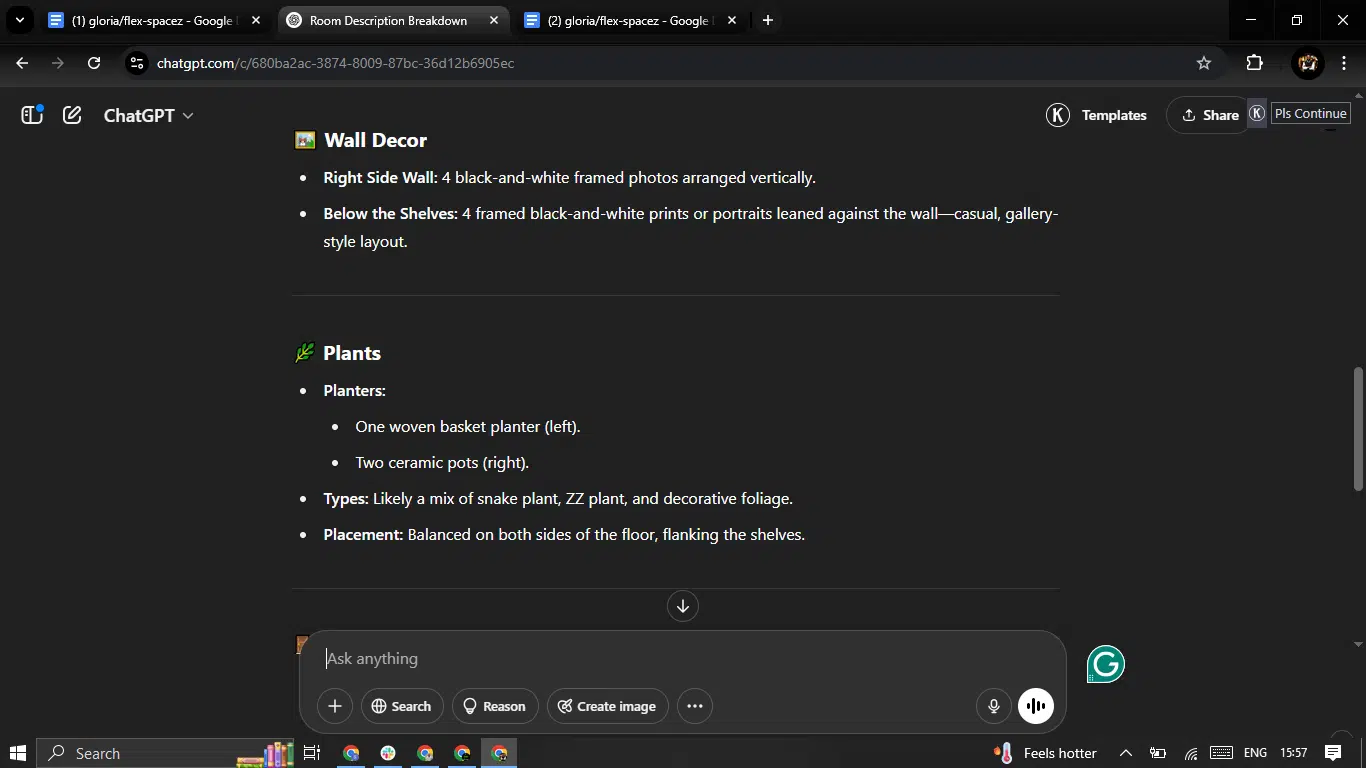

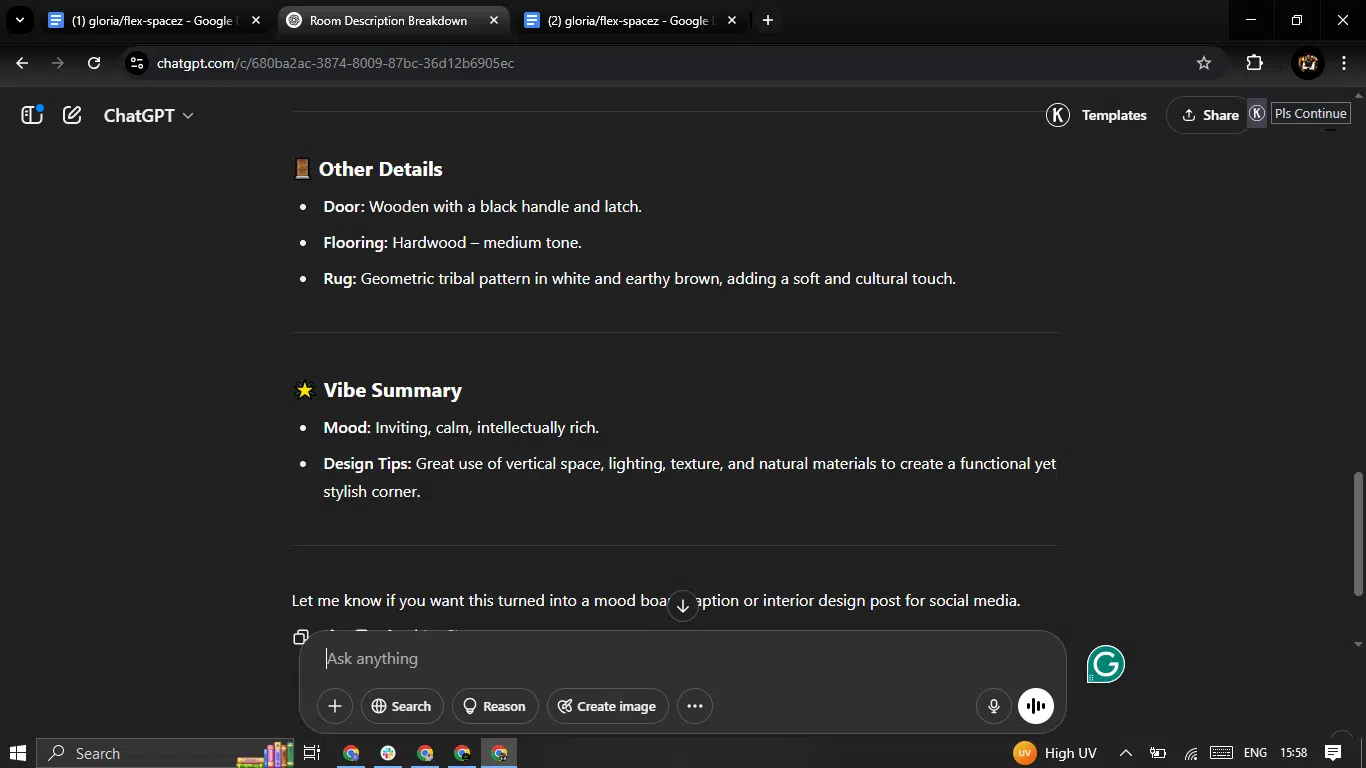

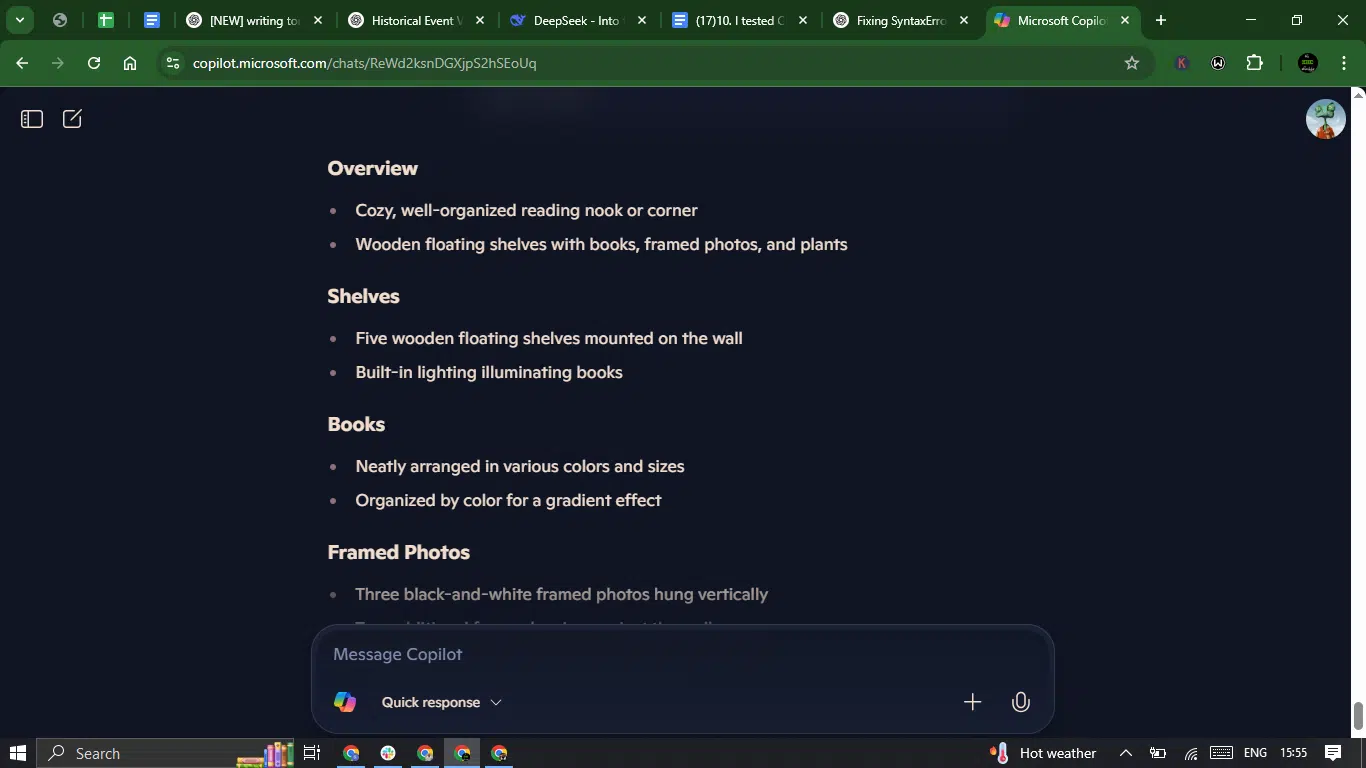

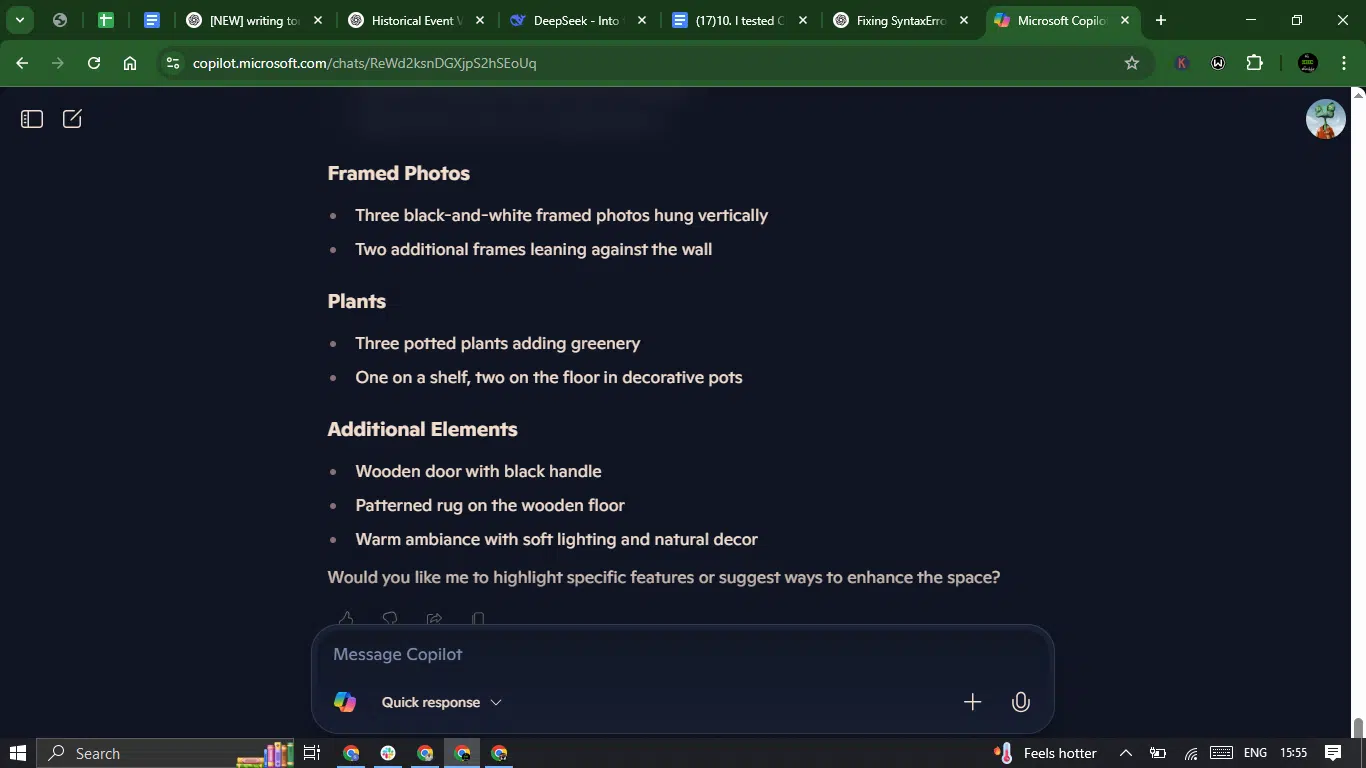

Prompt 10: Picture description

This prompt evaluates their capacity to process and interpret multimodal information, combining textual understanding with visual or design elements (in this case, a picture). The goal is to assess how well the AI can synthesize cross-format data, providing clear, insightful descriptions or interpretations of visual stimuli.

Prompt: “Describe this picture as much as you can. Make it scannable and easy to read.”

Result:

ChatGPT response:

Copilot response:

Accuracy

- ChatGPT was exceptionally detailed, with accurate counts of shelves (5) and plants, and it correctly identified the lighting features and rug pattern. However, it miscounted the photos (it reported four plus four).

- Copilot was generally accurate but missed some details (under-shelf lighting, exact photo counts). It did get the number of vertical frames right (three, versus ChatGPT’s four), but not the number of frames on the floor.

Creativity

- ChatGPT added thoughtful design analysis (“color-coded books”, “gallery-style layout”) and mood interpretation.

- Copilot was more factual with less creative interpretation of the space’s aesthetic qualities.

Clarity

- Both ChatGPT and Copilot were easy to scan and follow.

Usability

- ChatGPT would work perfectly for interior design documentation or real estate listings with its rich detail.

- Copilot is functional but would benefit from ChatGPT’s additional descriptive layers.

Winner: ChatGPT.

Why? ChatGPT provided a superior response with more comprehensive details, better organization, and richer descriptive language. While both captured the essentials, ChatGPT’s version offered professional-grade observations about design intent and spatial organization that Copilot missed.

Overall performance comparison: ChatGPT vs. Copilot (10-prompt battle)

After I ran both ChatGPT and Copilot through a real-world gauntlet of 10 diverse prompts, ranging from technical debugging to creative writing to legal analysis, the result was a genuine duel.

10-prompt performance table

| Prompt | Winner | Reason |
|---|---|---|
| 1. Technical debugging | ChatGPT | More comprehensive, better-structured, and provided multiple solutions. |
| 2. Creative storytelling | Tie | Both delivered strong noir-themed stories with twists, though twists could have been edgier. |
| 3. Business analytics | ChatGPT | Included all data fields, detailed analysis, and clearer insights. |
| 4. Legal nuance | ChatGPT | More thorough comparison with practical next steps for SaaS founders. |
| 5. UX copywriting | Copilot | More rebellious tone, better Gen Z appeal, and strategic bolding for web design. |
| 6. Math problem-solving | Copilot | Better mathematical notation and cleaner organization for beginners. |
| 7. News summarization | Copilot | Highlighted technical terms effectively and structured sentences better. |
| 8. Ethical dilemma | Copilot | More nuanced, emphasized human life, and better paragraph spacing. |
| 9. Productivity hack | ChatGPT | More detailed, covered Outlook features like “Clean Up” thoroughly. |
| 10. Picture description | ChatGPT | Richer details, professional-grade observations, and better organization. |

Final score

- ChatGPT: 5 wins.

- Copilot: 4 wins.

- Ties: 1.

Final verdict

ChatGPT won this 10-prompt showdown, though narrowly: 5 wins to Copilot’s 4, with one tie. It pulled ahead on tasks that reward depth and context, such as debugging, data analysis, legal comparison, the Outlook workflow, and image description. Copilot took the prompts that rewarded punchy, well-formatted brevity (the UX copy, the math walkthrough, the news summary, and the ethics answer), but where it lost, it tended to deliver simpler, less contextual responses.

My recommendation:

- If you’re a developer, writer, researcher, or even just a productivity nerd, go with ChatGPT. It’s more adaptable, better structured, and can handle a wider range of real-world tasks with thoughtfulness and depth.

- Copilot is great for quick code suggestions and targeted tasks, but when you’re solving multi-dimensional problems or need creativity on tap, ChatGPT is the better co-pilot.

Pricing for ChatGPT and Microsoft Copilot

ChatGPT pricing

| Plan | Features | Cost |
|---|---|---|
| Free | Access to GPT-4o mini, real-time web search, limited access to GPT-4o and o3-mini, limited file uploads, data analysis, image generation, voice mode, Custom GPTs | $0/month |
| Plus | Everything in Free, plus: extended messaging limits, advanced file uploads, data analysis, image generation, voice modes (video/screen sharing), access to o3-mini, custom GPT creation | $20/month |
| Pro | Everything in Plus, plus: unlimited access to reasoning models and GPT-4o, advanced voice features, research previews, high-performance tasks, access to Sora video generation, and Operator (U.S. only) | $200/month |

Copilot pricing

| Plan | Cost | Key features |
|---|---|---|
| Microsoft Copilot (Free) | Free forever | Limited usage (Designer only); real-time results; non-peak access to AI models; 15 boosts per day for image creation; available on multiple devices and platforms; basic features in Microsoft 365 apps (Word, Excel, PowerPoint, OneNote, Outlook) |
| Microsoft Copilot Pro | $20/month | Extensive usage across Microsoft 365 apps; real-time results; preferred access to AI models during peak times; early access to experimental AI features; 100 boosts per day for image creation; available on multiple devices and platforms; unlocks full capabilities in Word, Excel, PowerPoint, OneNote, and Outlook; advanced document editing, inbox management, and data analysis; create personalized podcasts |

Why do ChatGPT and Copilot matter to coders?

Here’s why AI tools like ChatGPT and Microsoft Copilot are becoming essential for devs and tech professionals:

- Speed up debugging: AI helps identify and fix bugs faster, cutting down debugging time significantly.

- Autocomplete code in real-time: With real-time suggestions, these tools autocomplete your code, speeding up development.

- Generate documentation: Automatically generate clear and concise documentation for your code without the hassle.

- Refactor or rewrite code for clarity: AI can refactor your code to make it more efficient and easier to read.

- Draft reports faster: AI helps draft emails, project reports, and status updates in a fraction of the time.

- Break down complex concepts: AI simplifies complex coding concepts, making them easier to explain and understand.

- Translate code: Convert code from one programming language to another with ease.

- Provide learning support and tutorials: AI offers on-the-spot tutorials and learning resources to help you tackle new challenges.

Challenges with these tools

AI assistants aren’t flawless. Here’s where they tend to fumble:

- Lack of consistent context: They might not remember previous prompts and can forget your intent mid-conversation.

- Security concerns: Pasting sensitive or proprietary code into a prompt sends it to a third-party service, so think twice before you do.

- Limited domain knowledge: They’re not specialists (yet).

- Dependency risks: Rely too much, and your debugging skills might remain underdeveloped.

- Latency issues: Occasionally, both take forever to respond.

Best practices for making the most of tools like ChatGPT and Copilot

AI tools are powerful, but they work best when you know how to use them. Here are five best practices I’ve learned the hard way (so you don’t have to):

1. Give clear, specific prompts

This is the golden rule. “Fix my code” is vague. “Fix this Python code that throws a KeyError when parsing JSON” is gold. The more context you give, the better your output.

2. Always review the output

These tools are smart, but not flawless. ChatGPT might hallucinate a source, and Copilot might suggest insecure code. Always double-check before copy-pasting anything into production or publishing.

3. Chain your prompts

ChatGPT works well when you build on your previous prompt. Start broad, then refine. You can go from “Give me a blog outline” to “Now expand section 3 into 200 words with examples.”

4. Use AI to learn, not just to finish

If you’re stuck on a bug, don’t just ask for the fix; also ask for the why. Both tools can explain what’s going wrong and how to improve. Over time, this improves your skills.

5. Mix and match tools

You don’t have to stay loyal to one AI. Use Copilot inside your IDE for autocomplete. Use ChatGPT for explanations, brainstorming, and writing. Each tool has its strength; the real power is in combining them.
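To make the first practice concrete: the “gold” prompt works because it names the language, the exception, and the operation that failed. Pasting a minimal reproduction alongside it helps even more. Here is a hypothetical example of what that might look like (the payload and key names are made up), along with one common fix.

```python
import json

# Hypothetical API response: this user has no "email" field.
payload = '{"user": {"name": "Ada"}}'
data = json.loads(payload)

# The failing line: square-bracket lookup raises KeyError when the key is missing.
try:
    email = data["user"]["email"]
except KeyError as err:
    print(f"KeyError: {err}")  # the exact error message worth quoting in a prompt

# One common fix: dict.get() returns a fallback value instead of raising.
email = data["user"].get("email", "unknown")
print(email)
```

Whether a fallback like `"unknown"` is the right fix depends on your data; asking the tool *why* the key is missing (practice 4) is often the more useful follow-up.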

Conclusion

After putting both AI assistants through their paces across diverse real-world tasks, ChatGPT emerges as the more versatile tool overall. Its stronger performance in complex problem-solving, data and legal analysis, and detailed technical explanations makes it ideal for developers, writers, and knowledge workers who need depth and nuance. Copilot proves valuable for specific use cases like math explanations, marketing copy, concise summaries, and quick coding assistance, but often delivers simpler, less contextual responses. The smartest approach, however, is to combine them.

FAQs about ChatGPT and Copilot

Which is better for coding?

Copilot is faster for inline suggestions, but ChatGPT is better at explaining concepts and fixing errors with context.

Is Copilot good for writing documentation?

Not really. Copilot can help with code comments, but ChatGPT is far better at generating full, structured documentation.

Which tool is better for beginners?

ChatGPT, especially if you’re learning to code or exploring new topics. It’s more interactive and beginner-friendly.

Do they both work offline?

No. Both require internet access to process and return results.

Can ChatGPT generate full apps or projects?

It can scaffold full applications and explain components, but you’ll still need to vet and test everything.

Disclaimer!

This publication, review, or article (“Content”) is based on our independent evaluation and is subjective, reflecting our opinions, which may differ from others’ perspectives or experiences. We do not guarantee the accuracy or completeness of the Content and disclaim responsibility for any errors or omissions it may contain.

The information provided is not investment advice and should not be treated as such, as products or services may change after publication. By engaging with our Content, you acknowledge its subjective nature and agree not to hold us liable for any losses or damages arising from your reliance on the information provided.

Always conduct your own research and consult professionals where necessary.