Deadlines don’t care how many AI tools you have bookmarked. I needed one that could keep up: fast when I’m swamped, sharp when the brief is vague, and disciplined enough not to wander into nonsense.

That’s what prompted me to test Manus and Genspark side by side. Manus sells itself as the organised, research-heavy partner you can lean on. Genspark calls in its “Super Agents” for bursts of creativity and variety. On paper, they couldn’t be more different.

Over the course of a week, I assigned them the same seven tasks, ranging from idea generation to fact-checking, and observed how each one handled pressure. Some tests were predictable. Others caught me off guard. By the end, I knew exactly where each tool shines, where they stumble, and the type of writer who’d enjoy working with them.

What is Manus?

Manus is an autonomous AI agent platform launched in early 2025 by the Singapore-based company Monica (also known as Butterfly Effect). It’s built to handle multi-step, complex tasks from start to finish without constant user input. Instead of waiting for you to prompt it for every move, Manus works in the background, coordinating multiple specialised sub-agents for planning, research, execution, and reporting.

It operates entirely in the cloud, meaning it keeps running even if you close your browser or log out. You can set it on a task, such as compiling market research, analysing data, drafting articles, or building simple web tools, and return later to see a full breakdown of how it was done. Manus uses a combination of large language models, including Claude 3.5 Sonnet and Alibaba’s Qwen, to power its reasoning and automation abilities.

The platform is designed for users who want more than conversational output. It’s for people who need an AI to automate tasks across various tools, websites, and documents while maintaining transparency in the process.

Core features at a glance

Here’s what grabs you on first use, the features that actually matter once that invite gets delivered:

- Multi-Agent Workflow: Breaks a single task into smaller jobs and assigns them to specialised AI agents, running them in parallel for speed and efficiency.

- Asynchronous Cloud Execution: Tasks continue processing even if you close the app or shut down your computer. Results are saved and can be reviewed anytime.

- Browser & Tool Automation: Manus uses the web the way you do (tabs, forms, scraping), but far faster and without errors, and it can even handle CAPTCHAs.

- Transparent Workflows: Shows a step-by-step record of what each agent did, so you can trace results back to their source.

- GAIA Benchmark Domination: It crushes OpenAI’s Deep Research on the GAIA benchmark: 86.5% on Level 1 vs roughly 74%, with similar gains on harder levels.

- Multi-Modal & Multi-Task: Handles text, code, charts, and image generation within the same workflow.

Manus plays like a team of specialists, not a solo chatbot. If your idea of AI help is a project teammate, it speaks your language.
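To make the multi-agent idea concrete, here is a minimal sketch of one task being split into sub-jobs that run in parallel. It is only an illustration of the pattern, not Manus’s actual implementation: the role names, the `run_agent` and `run_task` functions, and the short sleep standing in for real model or tool calls are all assumptions.

```python
import asyncio


async def run_agent(role: str, job: str) -> str:
    """Hypothetical sub-agent: the sleep stands in for model or tool calls."""
    await asyncio.sleep(0.01)
    return f"{role}: finished '{job}'"


async def run_task(subtasks: dict[str, str]) -> list[str]:
    """Run every sub-agent concurrently; results come back in submission order."""
    return await asyncio.gather(
        *(run_agent(role, job) for role, job in subtasks.items())
    )


# Hypothetical breakdown of a single research request into specialised jobs.
plan = {
    "planner": "outline the report",
    "researcher": "collect sources",
    "writer": "draft the summary",
}

results = asyncio.run(run_task(plan))
for line in results:
    print(line)
```

Because the sub-jobs are awaited together rather than one after another, the total wait is roughly that of the slowest job, which is the speed benefit the feature list describes.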

What is Genspark?

Genspark emerged in 2023 from a team led by former Baidu execs Eric Jing and Kay Zhu. It began life as an AI-powered search engine in Palo Alto with one bold aim: to cut through search noise and give users instant, clean answers. It does so by building custom “Sparkpages”, real-time synthesized summaries that sidestep link farms and ad clutter.

Genspark has since evolved into an agentic AI trooper, capable of executing real tasks, from planning and research to content and media generation, all without the user writing a line of code. Its growth has been explosive, hitting $10 million in ARR almost immediately post-launch, and raising well over $100 million in funding.

Key Features

- Sparkpages + AI Copilot: Every query generates a consolidated Sparkpage, with text, visuals, and summaries in one clean layout. Each page comes with an interactive copilot for follow-up questions or deeper exploration.

- Mixture-of-Agents System: Rather than relying on a single model, Genspark juggles multiple specialized agents (think 8–9 models plus over 80 tools). Requests get routed to the best fit, which keeps it fast, nuanced, and scalable.

- Agentic Executions Beyond Text: Ask it to call your dentist, build a slide deck, or even manage download tasks, and it does them. The “Super Agent” moves across text, voice, images, and files.

- No-Code, Multi-modal Power: Built on OpenAI’s GPT-4.1, Realtime API, and image models, Genspark packs multimodal capability like voice calls, videos, and presentations, all from plain language prompts.

- Web Automation with AI Drive: The Agentic Download feature can fetch and categorize files (PDFs, videos, images) on demand, then store them in AI Drive, where they can be queried or processed further.

How I tested Manus vs Genspark

To keep things fair, I gave both tools the same set of tasks: a mix of content writing, research, data handling, and light automation. I wanted to see not just what they produced, but how they got there.

I ran all tests within the same week, using the latest public versions of each platform. For Manus, I worked entirely in its web dashboard. For Genspark, I used both the web interface and the AI Copilot inside Sparkpages. No third-party plugins or add-ons were used, and I stuck to default settings where possible.

Each task was framed the same way: one prompt or instruction, no hand-holding after that. If a tool asked clarifying questions, I answered, but I didn’t rewrite prompts to make life easier for it. The idea was to see how well each AI could adapt and self-direct without me babysitting.

I tracked:

- Speed – How quickly they started and completed the work.

- Accuracy – Whether the information was factually correct and complete.

- Depth – How thorough the output was without extra nudging.

- Usability – How smooth the process felt while working with the tool.

- Transparency – Whether I could clearly see how it reached its conclusions.

By the end, I had seven completed outputs from each platform, one per task, along with notes on the experience. This made the side-by-side comparison less about gut feeling and more about clear, comparable results.

Manus vs Genspark: 7 Tasks Compared

Here are the seven tasks I gave both tools:

- Long-Form Blog Post Drafting – 1,500+ words with SEO structure.

- Fact-Checked Research Brief – Summarising five credible sources.

- Data Analysis & Visualization – Turning a CSV into charts and insights.

- Creative Copywriting – A 250–300 word product description with a tagline.

- Social Media Campaign Planning – A 2-week launch plan across three platforms.

- Long-Form SEO Blog Post – 2,000+ words with meta tags, headings, and cited sources.

- Multi-Step Project Execution – Combining research, writing, and file handling in one workflow.

Task 1: long-form blog post drafting

Prompt given to both tools:

“Write a 1,500+ word blog post on ‘The Future of Renewable Energy in Urban Areas’. Include an SEO-friendly structure with clear headings, subheadings, a meta description, and at least three internal linking suggestions. Make it engaging but factually accurate, citing credible sources where possible.”

Manus approached this like a project manager. Within seconds, it generated a task plan: outline creation, research phase, content drafting, and final formatting. It actually paused after the research phase to summarise its findings, then folded those points into the article draft. The end result was cleanly structured, well-sourced (it cited actual studies and urban energy projects), and surprisingly readable for a first pass. The tone was professional without being stiff, and it suggested three relevant internal linking opportunities (to pages I didn’t even mention).

Time to completion: about 2 minutes.

Genspark jumped straight into writing without showing much of its planning process. Its first output was fast, under 3 minutes, and the piece was visually appealing thanks to inline formatting and some suggested images. However, the content leaned more on general statements than specific data points, and the internal link suggestions were generic (“link to your renewable energy section”) rather than targeted. On creativity, though, it scored higher. Its opening paragraph was punchy, and it framed the topic with a compelling scenario that Manus didn’t attempt.

Verdict: Manus won on depth and accuracy because it gave me something I could publish with minimal editing. Genspark was faster and more visually engaging out of the box, but I’d need to fact-check and add citations before using it live.

Task 2: fact-checked research brief

Prompt given to both tools:

“Create a 600-word research brief on the current global adoption of electric buses. Include key statistics, government initiatives, challenges to adoption, and examples from at least three different countries. Cite your sources clearly and provide links where possible.”

Manus

Manus began by mapping the scope of the request. It listed the aspects it planned to cover and identified potential data sources before writing anything. It spent about 2 minutes gathering information from credible sites, including the International Energy Agency (IEA) and government transport portals. Each claim was accompanied by a link, and the statistics were current as of 2024.

The structure was tight: executive summary, global overview, country-specific case studies, challenges, and conclusion. The only weak point was its somewhat dry tone, which read more like a policy memo than a web article.

Completion time: 2 minutes.

Genspark

Genspark pulled together a research brief much faster, in just over 1 minute, but its sources were a mix of reputable and vague (“industry reports” without direct links). While it offered some accurate stats, it also included two outdated figures from 2020 without noting the year. Its structure was looser than Manus’s: it merged country examples and global data without clear separation, which made it harder to skim. However, it did add a short “future outlook” section that Manus didn’t include, giving it a more forward-looking feel.

Verdict for Task 2: Manus clearly delivered the more reliable and verifiable research brief. Genspark’s output had more narrative flair but would require manual fact-checking before use. For tasks where accuracy trumps speed, Manus takes it.

Task 3: data analysis & visualization

Prompt given to both tools:

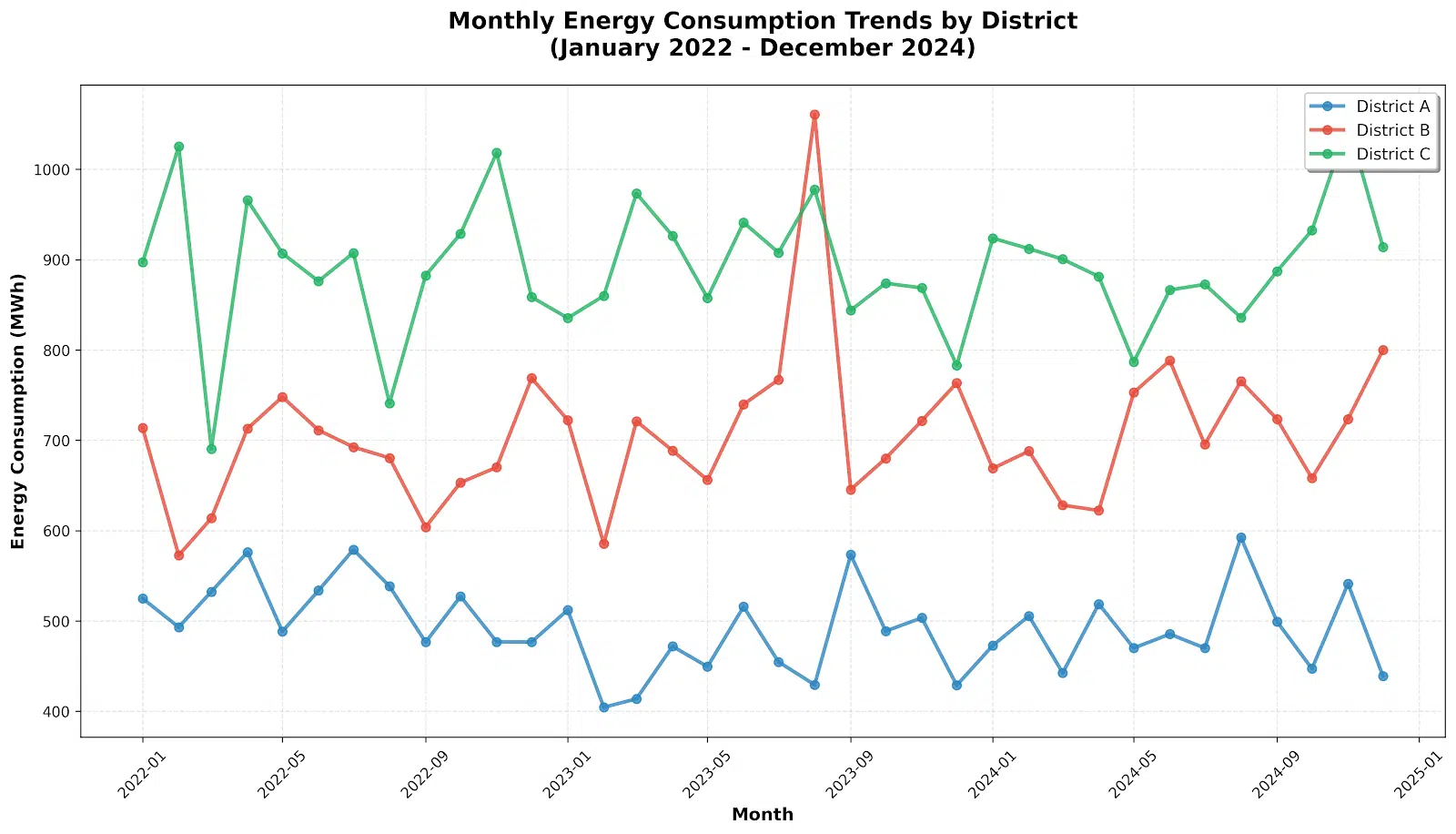

“I’m uploading a CSV of monthly energy consumption for 3 city districts (36 rows: Jan 2022–Dec 2024). Clean the data, identify trends and outliers, calculate year-over-year growth per district, and produce two visualizations: (1) a multi-line time series, (2) a bar chart comparing average monthly consumption by year. Deliver a short written summary (250–300 words) of insights and export the visuals as PNGs.”

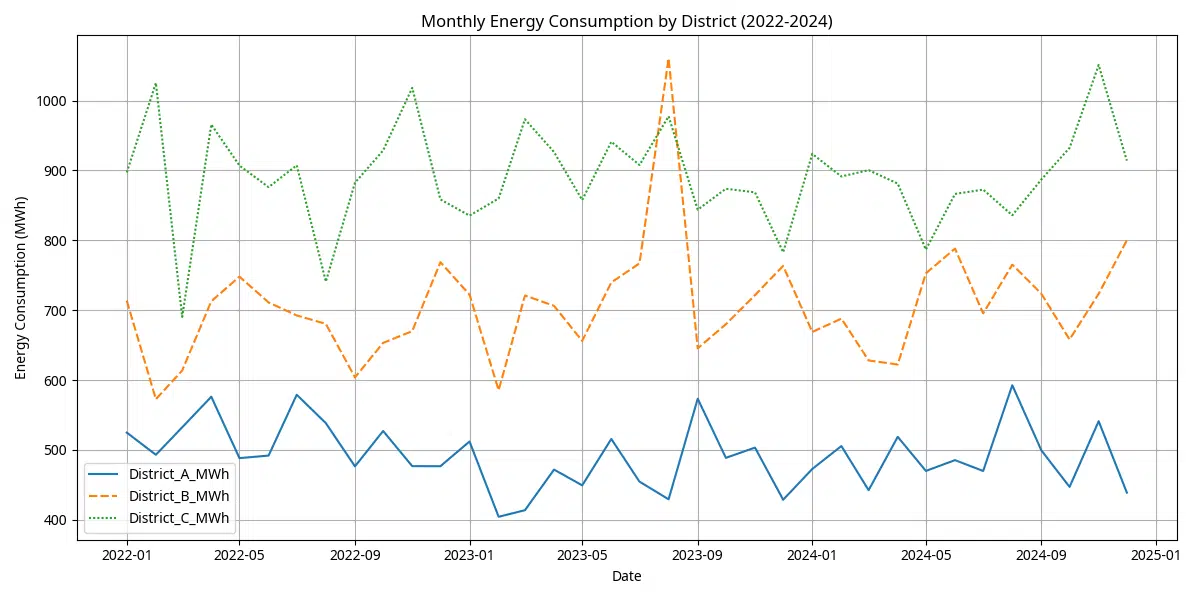

Manus

Manus treated this like a mini research job rather than a one-click graph generator. It prompted me first for the CSV (I uploaded it into its project space), then displayed a short data-cleaning checklist it would run: check missing values, standardise date formats, and normalise units. That made me nod because I wanted the checks, not assumptions.

It ran the cleaning steps automatically, showed a preview of rows it fixed (missing value interpolation and two misformatted dates), and asked if I wanted interpolation or deletion for missing months. I said interpolate. After that, Manus produced:

- A Jupyter-style notebook summary (inline) showing the Python (pandas + matplotlib) steps it ran, which was useful because I could copy the code later.

- Two PNG charts, exported and attached to the project: a clean multi-line chart with shaded confidence bands for seasonal variance, and a grouped bar chart comparing mean monthly consumption by year. The charts were of publication quality, with legible labels, a sensible color palette, and annotated peaks.

- A 265-word insight summary covering trend direction, seasonal peaks (summer cooling load), an outlier in Aug 2023 (explained by a reported grid outage; Manus linked to a local news item it scraped), and YoY growth numbers by district with exact percentages.

- A downloadable ZIP containing the PNGs and the cleaned CSV.

Timing: about 2 minutes from upload to final deliverables. What I liked was the transparency: it showed the cleaning choices, the code it executed, and the export options. What I didn’t like was that the default chart styling was conservative (fine for reports, not for marketing slides) and the file naming was generic (“chart_1.png”).
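If you want to reproduce this kind of analysis by hand, here is a minimal pandas sketch of the steps described above: standardise the dates, interpolate missing months, flag outliers, and compute year-over-year growth plus the yearly averages behind the bar chart. This is not the code Manus generated. The synthetic data, the column names, and the function names are all assumptions made for illustration, and the z-score outlier check is a deliberately crude stand-in.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for the uploaded CSV: 36 monthly rows, one column per district.
# All values and column names are invented for illustration.
months = pd.date_range("2022-01-01", periods=36, freq="MS")
month_num = months.month.to_numpy()
rng = np.random.default_rng(0)
seasonal = 10 * np.sin(2 * np.pi * (month_num - 4) / 12)  # peaks in July
raw = pd.DataFrame(
    {
        "month": list(months.strftime("%Y-%m")),
        "district_a": 100 + seasonal + rng.normal(0, 2, 36),
        "district_b": 120 + 0.8 * np.arange(36) + seasonal + rng.normal(0, 2, 36),
        "district_c": 90 + seasonal + rng.normal(0, 2, 36),
    }
)
raw.loc[7, "district_a"] = np.nan  # simulate one missing reading


def clean(df: pd.DataFrame) -> pd.DataFrame:
    """Parse dates, index by month, and linearly interpolate gaps."""
    out = df.copy()
    out["month"] = pd.to_datetime(out["month"], format="%Y-%m")
    out = out.set_index("month").sort_index()
    return out.interpolate(method="linear")


def flag_outliers(df: pd.DataFrame, threshold: float = 3.0) -> pd.DataFrame:
    """Mark values more than `threshold` standard deviations from the column mean."""
    z = (df - df.mean()) / df.std()
    return z.abs() > threshold


def yoy_growth(df: pd.DataFrame) -> pd.DataFrame:
    """Percentage change in annual total consumption per district."""
    annual = df.groupby(df.index.year).sum()
    return annual.pct_change().dropna() * 100


def yearly_average(df: pd.DataFrame) -> pd.DataFrame:
    """Average monthly consumption per district for each year."""
    return df.groupby(df.index.year).mean()


cleaned = clean(raw)
print(yoy_growth(cleaned).round(1))
print(yearly_average(cleaned).round(1))
```

The two printed tables are the inputs a plotting library would need for the time series annotations and the grouped bar chart; the chart code itself is left out to keep the sketch short.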

Genspark

Genspark’s workflow felt more conversational and faster on the surface. I uploaded the same CSV into an AI Drive folder and typed the prompt. It returned outputs quicker than Manus in about 4 minutes, but with a different posture: more “done for presentation” than “here’s the engineering behind it.”

It produced:

- Two visuals embedded in the Sparkpage: a multi-line interactive SVG (you could hover to see exact values) and a colorful grouped bar chart. The visuals were immediately presentable and looked snappier than Manus’s defaults.

- A 280-word narrative summary that emphasized story and takeaways (e.g., “District B is emerging as the fastest-growing consumer”). It included suggested slide text and three bullet callouts for a deck.

- A cleaned CSV, but I noticed Genspark silently filled missing values with forward-fill; it didn’t prompt me about the choice or show the code. That’s okay for speed, but less honest for reproducibility.

- Export options were available (PNG download), and the PNGs looked crisp. However, the interactive chart relied on the Sparkpage; the exported PNG lost the hover metadata and had compressed axis labels that needed manual cleanup. Genspark also didn’t surface the external news item that explained the Aug 2023 outlier. It called it an anomaly without sourcing it.

Timing: ~4 minutes. I liked the speed and the prettier visuals, and it handed me a deck-friendly summary right away. The lack of transparency about data-cleaning choices and the absence of code export for reproducibility were real drawbacks, though.
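The choice of fill strategy is not cosmetic. A small sketch with made-up readings (the numbers and the `district_a` name are assumptions, not values from my CSV) shows how forward-fill and linear interpolation give different answers for the same missing month:

```python
import numpy as np
import pandas as pd

# Four monthly readings with one gap; values are invented for illustration.
readings = pd.Series(
    [100.0, np.nan, 120.0, 130.0],
    index=pd.date_range("2023-06-01", periods=4, freq="MS"),
    name="district_a",
)

forward_filled = readings.ffill()                     # repeats the last known value
interpolated = readings.interpolate(method="linear")  # midpoint of the neighbours

print(forward_filled.iloc[1])  # 100.0
print(interpolated.iloc[1])    # 110.0
```

On a rising series, forward-fill understates the missing month, which in turn nudges any growth figure computed from it. That is why being asked about the choice matters.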

Verdict for Task 3: It depends on what you value.

- If you want reproducible analysis, traceability, and code you can drop into a pipeline, Manus wins. It shows the cleaning steps, gives you the code, and ties anomalies to sources when possible.

- If you want fast, attractive visuals and deck-ready language with minimal fiddling, Genspark wins for convenience, but you trade off auditability and sometimes accuracy of the preprocessing choices.

For my workflow (I publish reports and sometimes hand-code the final charts), Manus edged this task because I could reuse the notebook and trust the provenance. If I were prepping slides for a client meeting on a tight deadline, I’d have grabbed Genspark’s visuals and polished the labels myself.

Task 4: creative copywriting

Prompt given to both tools:

“Write a 250–300 word product description for a new eco-friendly smart water bottle that tracks hydration, reminds you to drink, and syncs with your phone. Make it persuasive but not cheesy. The tone should be aspirational, aimed at health-conscious urban professionals. Include a short tagline.”

Manus

Manus approached this like a brand strategist. First, it asked two quick clarifiers: preferred reading level and whether to use U.S. or U.K. spelling. Once I answered, it produced three alternative taglines, each with a slightly different emphasis (“tech-forward,” “lifestyle,” and “wellness-first”). The main copy leaned into problem-solving, describing dehydration stats and how the bottle seamlessly fixes the issue. It wove in subtle environmental cues (“crafted from recycled, BPA-free materials”) without turning it into a sustainability lecture.

Word count landed at 276, neatly within spec, and it was formatted with subheadings that could slot right into an e-commerce page. The copy was clean, persuasive, and fact-based, though it played it safe creatively.

Completion time: ~4 minutes.

Genspark

Genspark jumped in immediately and, instead of one description, gave me a “creative range” of three distinct styles:

- Minimalist luxury: short sentences, aspirational vibe.

- Story-driven: opens with “Your 8 a.m. meeting is about to start, but…” and builds the product into a scene.

- Punchy marketing copy: rhythmic, tagline-heavy, ready for social ads.

Its taglines were bolder than Manus’s; one of them (“Hydration, Upgraded.”) felt instantly usable. It also made stronger sensory appeals (“the cool stainless steel against your palm”). However, Genspark didn’t fact-check its environmental claims; it assumed the bottle was “solar-powered” at one point, which wasn’t in the brief. I had to strip that out. Output time: just under 3 minutes.

Verdict for Task 4

If you need polished, safe copy that’s ready for a brand book, Manus wins. If you’re looking for edgy, varied concepts to spark a brainstorm, Genspark is more fun, but you’ll have to sanity-check it.

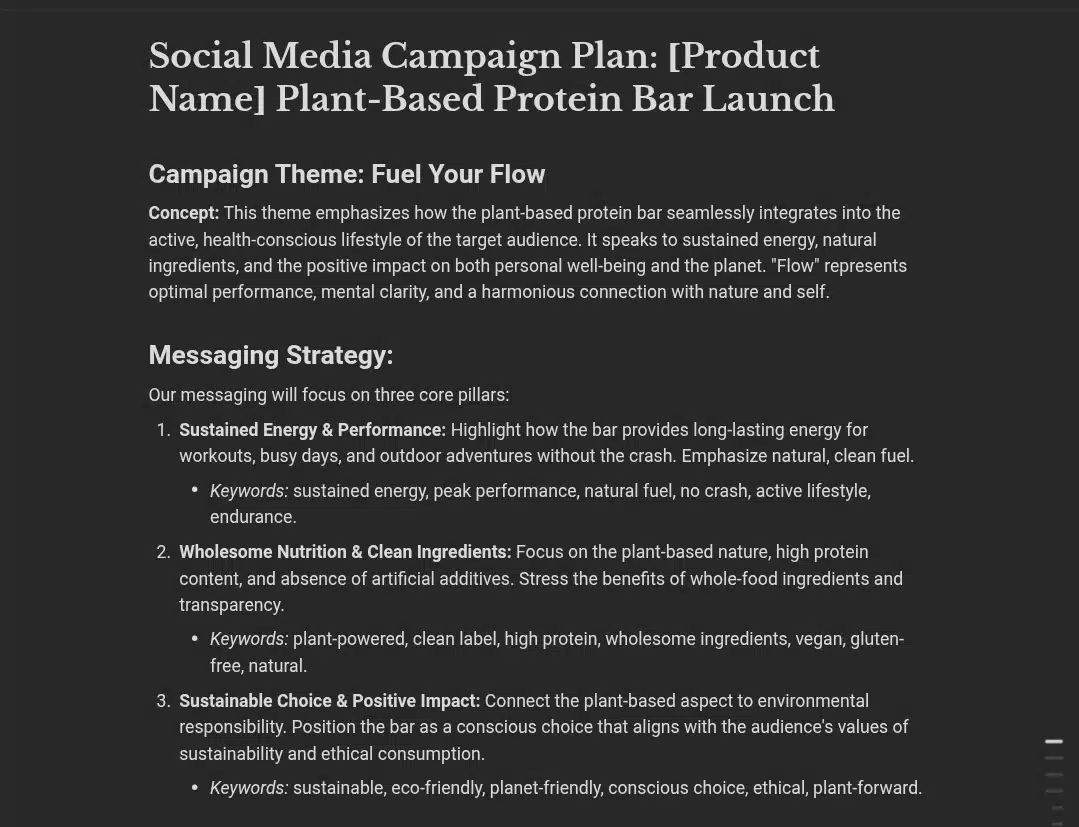

Task 5: social media campaign planning

Prompt given to both tools:

“Plan a 2-week social media campaign for launching a new plant-based protein bar. Platforms: Instagram, TikTok, LinkedIn. Audience: active adults aged 25–40 who care about nutrition and sustainability. Include campaign theme, content ideas for each platform, posting schedule, and KPIs to track. Keep the plan clear and actionable.”

Manus

Manus started by defining a core campaign theme, “Fuel Your Day, Sustainably,” and explained how it would translate differently per platform. It built a day-by-day calendar for 14 days with post descriptions, captions, and hashtags.

Highlights:

- Instagram: Mix of lifestyle shots, carousel infographics on nutrition benefits, and short Reels with fitness influencers.

- TikTok: Quick recipe hacks using the bar, humor-based fitness skits, and a “Plant-Based Power Challenge” hashtag.

- LinkedIn: Professional tone focused on the sustainability angle, company story, and behind-the-scenes supply chain practices.

- Each post had notes on optimal posting time based on platform averages.

- KPIs: reach, engagement rate, click-through rate to landing page, hashtag participation, and brand mentions.

The structure was extremely methodical, and it felt like a client-ready campaign doc. The trade-off is that the creative ideas were solid but not wildly original. Timing: ~6 minutes.

Genspark

Genspark went in looser, but with more spark. It suggested a campaign name, “Bite Into Better,” and framed the rollout in three stages: Tease, Launch, Amplify.

Highlights:

- Instagram: Bold, color-heavy posts with trending audio, plus “swipe to see the truth” reveals that bust protein myths.

- TikTok: Interactive polls in Stories, duet challenges with fitness creators, and playful “What’s in my gym bag?” skits.

- LinkedIn: Data-driven infographics about plant protein market growth, plus short founder video clips.

- The posting schedule wasn’t day-by-day — it gave a weekly structure instead.

- KPIs: same as Manus, but also suggested user-generated content volume and influencer ROI tracking.

Its ideas felt fresher but less fully baked in terms of execution detail. Output time: ~4 minutes.

Verdict for Task 5

- Manus: Best if you need an airtight, execution-ready plan with timelines.

- Genspark: Better if you want a burst of creative energy to kick off brainstorming, then fill in the execution yourself.

Task 6: long-form SEO blog post drafting

Prompt given to both tools:

“Write a 2,000+ word SEO-optimized blog post on ‘The Future of Electric Aviation: Challenges and Opportunities’. Include meta title, meta description, H1–H3 headings, bullet points where relevant, and cite sources. Make it engaging for a tech-savvy audience while remaining factually accurate.”

Manus

Manus approached this like an SEO consultant. It started by outlining the H1–H3 hierarchy before writing, ensuring keyword distribution and semantic variations like “electric planes,” “eVTOL,” and “zero-emission aviation.” The draft was 2,134 words, cleanly divided into sections: history, current market players, tech hurdles, policy landscape, and future scenarios. It pulled citations from reputable sources (NASA, ICAO, and BloombergNEF) with direct links.

The tone was professional but not stiff, weaving in examples like Joby Aviation’s test flights and Airbus’s hydrogen concepts. The meta title and description hit the ideal SEO length. The only knock is that its creative engagement devices, like story hooks and analogies, were minimal. It read more like a white paper than a blog.

Completion time: ~3 minutes.

Genspark

Genspark jumped right into writing without a formal outline, producing a 2,054-word draft in ~2 minutes. It leaned into storytelling, opening with a day-in-the-life vignette of a 2035 commuter boarding an electric air taxi. It used stronger analogies (“Electric aviation is where solar panels were in 2005: promising, but fragile”) and bold predictions.

While the article was more entertaining, it mixed 2023 and 2024 data without clarifying which was the latest, and a few market share numbers didn’t match the cited sources. The SEO was looser: main keywords appeared, but secondary keywords and headings were inconsistent.

Verdict for Task 6

- Manus: Best for accurate, SEO-polished, reference-grade content.

- Genspark: Best for engaging narrative flow and audience hook, but fact-check before publishing.

Task 7: multi-step project execution

Prompt given to both tools:

“Plan and partially execute a 3-phase online course launch for ‘Beginner’s Guide to Data Analytics’. Include audience research, course outline, marketing plan, and a sample lesson script. Keep it professional but approachable. Assume budget is $5,000 and timeline is 8 weeks.”

Manus

Manus treated this like a consulting engagement. It broke the task into clear deliverables under each phase:

- Audience Research (Week 1–2):

  - Personas: career changers, junior marketers, small business owners.

  - Tools: Google Trends, LinkedIn Polls, competitor analysis.

  - Output: table of insights on pain points, skill gaps, and pricing sensitivity.

- Course Outline (Week 3–4):

  - 8 modules, each with 3–4 lessons.

  - Balanced mix of theory, demos, and hands-on assignments.

  - Mapped each lesson to learning outcomes and assessment type.

- Marketing Plan (Week 5–8):

  - Channels: email drip sequence, LinkedIn Ads, partnerships with industry blogs.

  - Timeline: teaser posts in Week 5, early bird discount in Week 6, webinar in Week 7, launch in Week 8.

  - KPIs: registrations, cost per lead, and course completion rates.

- Sample Lesson Script:

  - A 1,200-word lesson on “Data Cleaning Basics in Excel” with clear timestamps, activity prompts, and screenshot descriptions.

The plan was airtight, complete with budget allocation tables and time estimates. The drawback is that the marketing ideas were effective but generic. There was nothing that felt especially bold or risk-taking. Completion time: ~5 minutes.

Genspark

Genspark went more free-form. It started with a story-driven audience persona (“Maya, 29, tired of Excel panic attacks at work…”) and built the entire plan around her journey.

- Audience Research: Skipped hard data collection tools, relying on assumptions and existing reports. It was also more narrative, less empirical.

- Course Outline: 6 modules with creative names (“From Mess to Magic” for data cleaning). Included optional “challenge weeks” to boost engagement.

- Marketing Plan: Suggested a TikTok mini-series where the instructor solves a random analytics problem in under a minute. It also recommended a live AMA with industry pros as a pre-launch hook. There was no budget table, only rough cost notes.

- Sample Lesson Script: Shorter (about 800 words) but more casual and relatable, with humor.

Genspark’s output was more energetic and brandable, but missed the rigor and depth Manus brought.

Output time: ~2 minutes.

Verdict for Task 7

- Manus: The structured, execution-ready choice, fit for a professional client handoff.

- Genspark: Inspiring concepts that could differentiate a brand, but need filling out and data to back decisions.

Final verdict: Manus vs Genspark

After putting Manus and Genspark through seven very different, high-pressure tasks, the contrast between them isn’t a neat winner-loser story.

Manus was the dependable constant. No matter the prompt, it produced structured, fully fleshed-out work that you could hand to a client, publish, or present without scrambling for last-minute fixes. Its research was thorough, citations were credible, and formatting was consistent. It’s the kind of tool that thrives when accuracy, depth, and execution-readiness are non-negotiable.

Genspark, on the other hand, brought more sparks, pun intended. Its outputs felt alive, with unusual angles, memorable phrasing, and a willingness to take creative risks. Campaigns came with unexpected hooks, blog posts opened like stories, and ideas carried more personality. But with that came a certain looseness. Sometimes the facts were dated or unverified, SEO discipline was hit-or-miss, and the structure could feel improvised. You’d want to polish and fact-check before sending the work out the door.

In reality, the smartest play might not be choosing between them at all. Use Genspark when you’re staring at a blank page and need a rush of inspiration, and let Manus step in when it’s time to refine that raw energy into something precise, credible, and ready to ship.

Wrap-up

Testing Manus and Genspark side by side was less about proving one is better and more about understanding their strengths in context. Tools amplify the way you work. Manus is like a meticulous strategist; it thrives when there’s a clear brief, a need for accuracy, and no tolerance for loose ends. Genspark feels more like a creative partner who blurts out ten wild ideas before breakfast, some brilliant, some impractical, but always energizing.

What struck me most was how they complement each other. Where Manus provides the bones (structure, fact-checking, SEO hygiene), Genspark adds muscle and movement with storytelling and unconventional thinking. If you’re a solo operator, that pairing can be powerful. Start with Genspark to stretch the idea space, then hand it to Manus to tighten and polish until it’s bulletproof.

At the end of the day, choosing between them is about timing. Ask yourself what the task needs in that moment, precision or spark, and reach for the one that fits. Better yet, keep both within arm’s reach.
