When two AI tools claim they can handle almost anything you throw at them, there’s only one way to find out who’s better: a head-to-head test. That’s precisely what I did with Manus and ChatGPT.

I lined up 10 tasks that covered all kinds of skills: solving a tricky math problem, finding credible sources, creating images, building slide decks, writing scripts, and even brainstorming brand names.

Some results were exactly what I expected. Others had me double-checking whether I’d accidentally pasted an output under the wrong name. By the time the last task was done, I had a scoreboard, a clear sense of where each tool shines, and plenty of surprises to share.

Here’s how it went down.

What is Manus?

Manus is an autonomous AI agent developed by the Chinese startup Monica (also known as Butterfly Effect AI) and officially launched on March 6, 2025. Unlike traditional chatbots, which mainly respond to prompts, Manus is designed to think, plan, and act independently. It uses a multi-agent orchestration system, meaning it breaks a complex request into smaller subtasks and assigns them to specialized sub-agents that work together, much like a virtual team.

Manus can switch between different large language models, including Anthropic’s Claude 3.5 Sonnet and Alibaba’s Qwen, picking the one best suited for the task at hand. It also uses the CodeAct paradigm, expressing its actions as Python code that it writes and runs inside a secure sandbox. This lets it take real-world actions like browsing the web, scraping data, generating reports, and automating workflows without constant human supervision. Once you give it a task, it can keep working in the cloud even if you close your laptop.

The name “Manus” comes from the Latin word for “hand,” reflecting its goal of bridging the gap between thinking and doing. Its creators market it as the first truly general AI agent, built to go beyond conversation and complete entire projects from start to finish.

Capabilities and features

Here’s what Manus can actually do:

- Autonomous Task Execution: Once set in motion, Manus continues working in the cloud without needing you to stay engaged. It breaks tasks into steps, assigns sub-agents, and iterates on its own.

- Advanced Tool Calling: Manus doesn’t just answer; it acts. It integrates with tools like browsers, code editors, APIs, and databases to carry out tasks like scraping, coding, and automation.

- Multi-Model Flexibility: It can pick whichever model, Claude or Qwen, best fits the performance needs of each task.

- Multi-Modal Output: It works with text, code, images, and video. Since June 2025, it has offered text-to-video generation: Manus can plan scenes, generate visuals, animate them, and stitch them into complete stories from a single prompt.

- Structured Deliverables: Whether it’s creating a polished report, slides, a dashboard, or even building a website, Manus delivers organized, formatted outputs, not just raw data.

- GAIA Benchmark Excellence: Manus reports state-of-the-art results across all three difficulty levels of the GAIA benchmark, outperforming systems such as OpenAI’s Deep Research.

- Wide Research (Parallel Agent Mode): On July 31, 2025, Manus introduced “Wide Research,” which lets users deploy more than 100 general-purpose agents in parallel to tackle large or complex tasks together.

What is ChatGPT?

ChatGPT is an AI chatbot created by OpenAI, first launched on November 30, 2022. It’s designed to understand and respond to human language in a natural, conversational way, making it versatile enough for writing, brainstorming, coding, research, tutoring, and more. Built on the Generative Pre-trained Transformer (GPT) architecture, it is trained on vast amounts of text to predict and generate words in context.

Over the years, ChatGPT has gone through several model upgrades, including GPT-3.5, GPT-4, and GPT-4o; as of August 2025, the latest model is GPT-5. This version offers more accurate reasoning, better factual reliability, improved memory handling, and multimodal capabilities, allowing it to work with both text and images (and in some applications, audio and video). Users can also switch between modes for faster performance or more detailed, thoughtful answers.

ChatGPT is widely available through the OpenAI website and apps. OpenAI’s models also power Microsoft’s Copilot features in products like Word and Excel, and developers can access them via the OpenAI API.

Capabilities and features

- Generates human-like text for writing, research, and creative tasks.

- Answers complex questions and explains concepts clearly.

- Writes and debugs code in multiple programming languages.

- Analyzes and interprets images (in supported versions).

- Summarizes long documents or articles.

- Engages in roleplay or scenario-based responses.

- Connects with external tools and APIs for extended functionality.

- Offers customizable modes for speed, creativity, or reasoning depth.

The rules of the game

To make this comparison fair and transparent, I picked 10 diverse real-world tasks, some focused on writing, others on problem-solving, creativity, and even multimedia. The goal is to see how each AI handles the kinds of challenges an average user might throw at it, from crunching numbers to making slides.

Both Manus and ChatGPT were:

- Given the exact same prompt for each task.

- Tested under their latest publicly available versions.

- Judged based on accuracy, speed, creativity, usability, and output quality.

There were no extra tweaks, re-prompts, or second tries in any round. What you’ll see is each tool’s first response, just as you’d get it if you were using them for the first time.

We’ll go through each task, show you screenshots, and at the end, see which is better for what. But first, let’s reveal the 10 challenges:

The 10 challenges

- Write a Blog Intro – Craft an engaging opening paragraph for a tech article in under 100 words.

- Summarize a Research Article – Condense a 1,000-word article into a concise, readable summary without losing key points.

- Solve a Complex Math Problem – Handle a multi-step combinatorics and probability problem.

- Generate a Custom Image – Create an AI-generated image based on a specific, detailed prompt.

- Find Credible Sources – Locate and provide direct links to reputable studies or articles on a niche topic.

- Make a Presentation Slide Deck – Produce a clean, 5-slide deck explaining a beginner-friendly tech concept.

- Write a Short Script – Create a 60-second video script for a fictional product launch.

- Translate with Context – Translate a paragraph into French, keeping tone and meaning intact.

- Plan a One-Day Trip – Suggest a full-day itinerary for a specific city, with activities and time slots.

- Brainstorm Product Names – Come up with 10 unique and brandable names for a new fitness app.

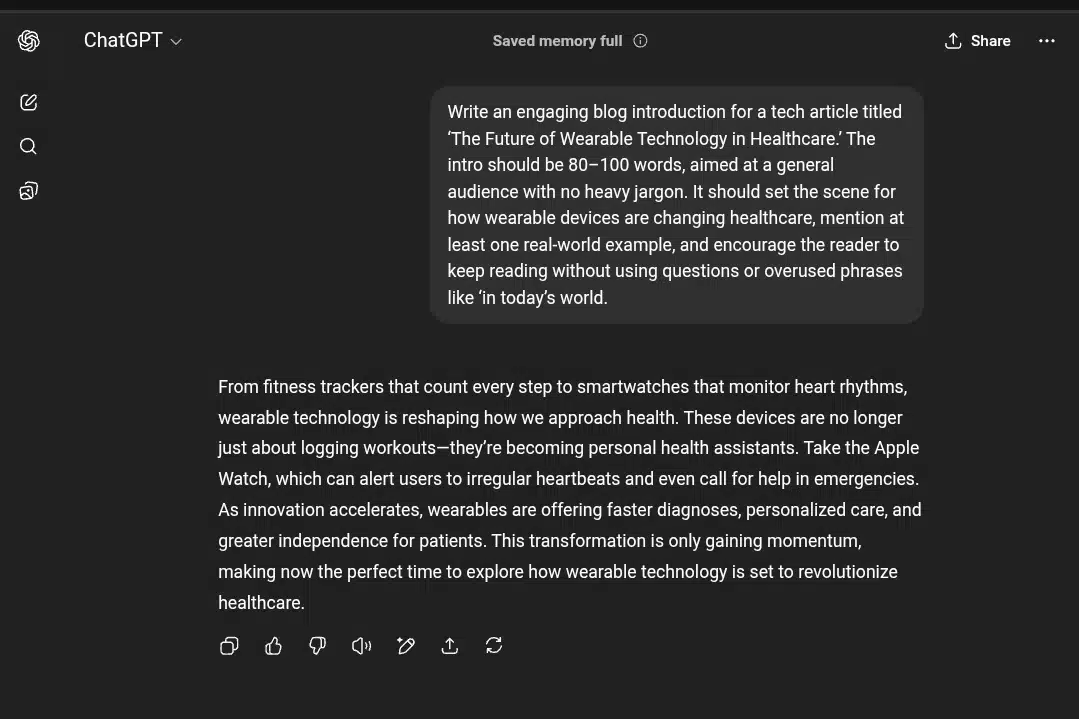

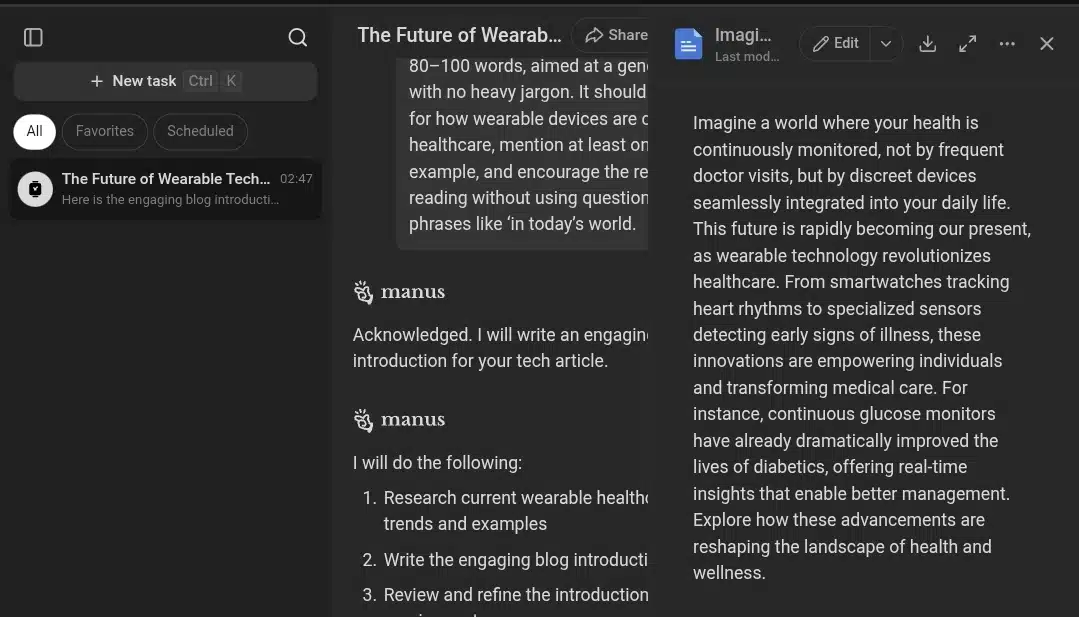

Task 1: Write a blog intro

Prompt:

“Write an engaging blog introduction for a tech article titled ‘The Future of Wearable Technology in Healthcare.’ The intro should be 80–100 words, aimed at a general audience with no heavy jargon. It should set the scene for how wearable devices are changing healthcare, mention at least one real-world example, and encourage the reader to keep reading without using questions or overused phrases like ‘in today’s world.’”

For the first test, ChatGPT absolutely nailed it. The response felt natural, well-structured, and free from the overused phrases you often see in generic AI outputs. The flow was smooth, and it read like something a skilled human writer could have produced without trying too hard.

Manus, on the other hand, struggled here. The output had a stiff, mechanical tone that screamed “AI-generated” in all the wrong ways. It leaned heavily on predictable structures and safe, formulaic expressions, which drained it of personality. While it was technically correct, it lacked the subtle nuance and rhythm needed to make it engaging. If I had submitted Manus’s version as-is, it would have been an instant giveaway that I hadn’t written it.

Winner: ChatGPT for clear, fluid, and convincingly human output.

Task 2: Summarize a research article

Prompt:

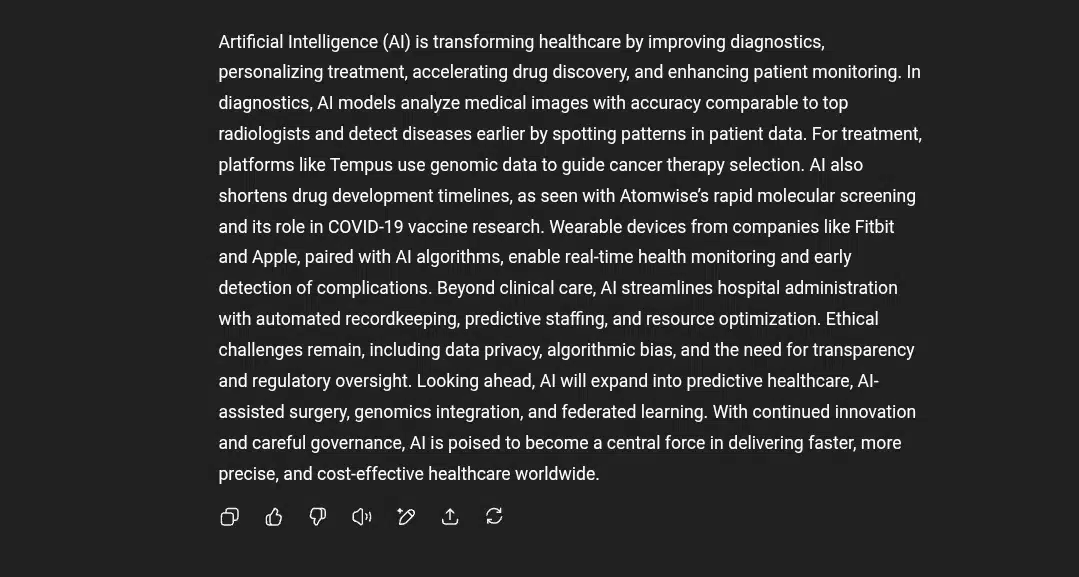

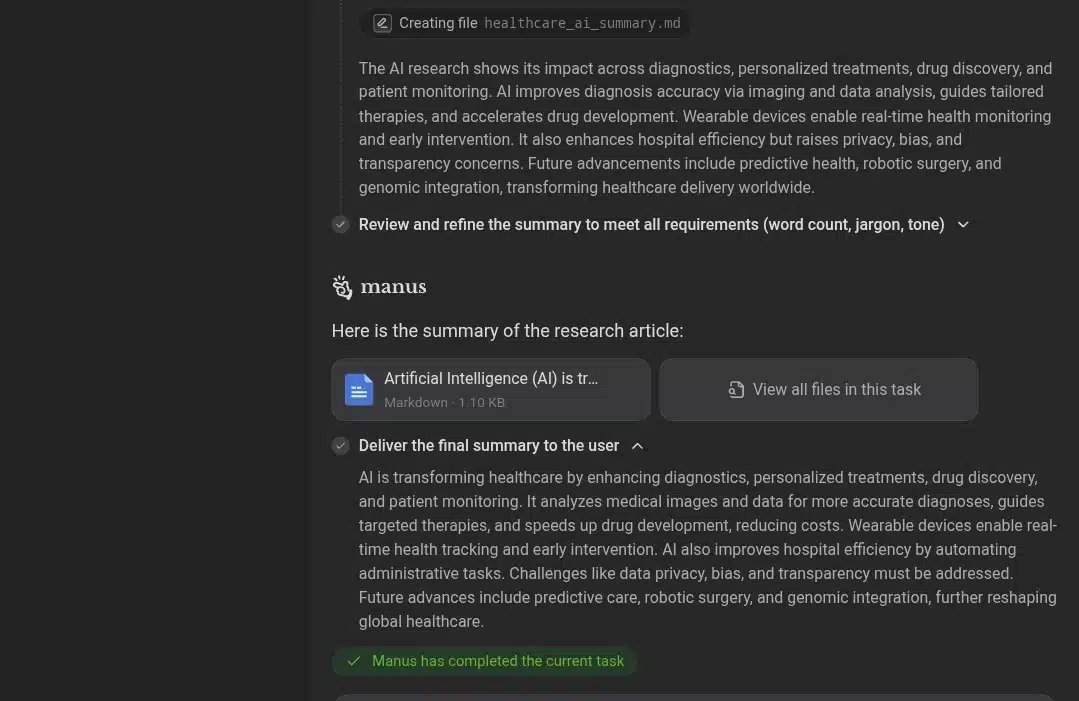

“Summarize the following research article into 150–180 words, ensuring the summary is clear for a non-expert reader while retaining all the key findings, conclusions, and implications. Avoid technical jargon where possible. Do not add opinions or commentary.”

This challenge was all about condensing a 1,000-word research article into a beginner-friendly summary of 150–180 words without losing the core details.

ChatGPT handled the task impressively. Its summary was concise yet rich with specific examples from the original text, citing platforms like Tempus and Atomwise, referencing the COVID-19 vaccine research, and naming wearable tech companies. It maintained a logical flow, covering diagnostics, treatment, drug discovery, wearables, administration, ethics, and future directions without feeling rushed. The tone was clear and accessible, meeting the brief perfectly.

Manus, while accurate in the broad strokes, oversimplified the content. Its summary lacked concrete examples and read more like a generic overview than a targeted condensation of the source material. Key specifics, such as company names, real-world use cases, and notable projects, were omitted. This made the output feel less engaging and informative, especially for readers wanting a sense of real-world impact.

Winner: ChatGPT for retaining depth, examples, and narrative clarity within the word limit.

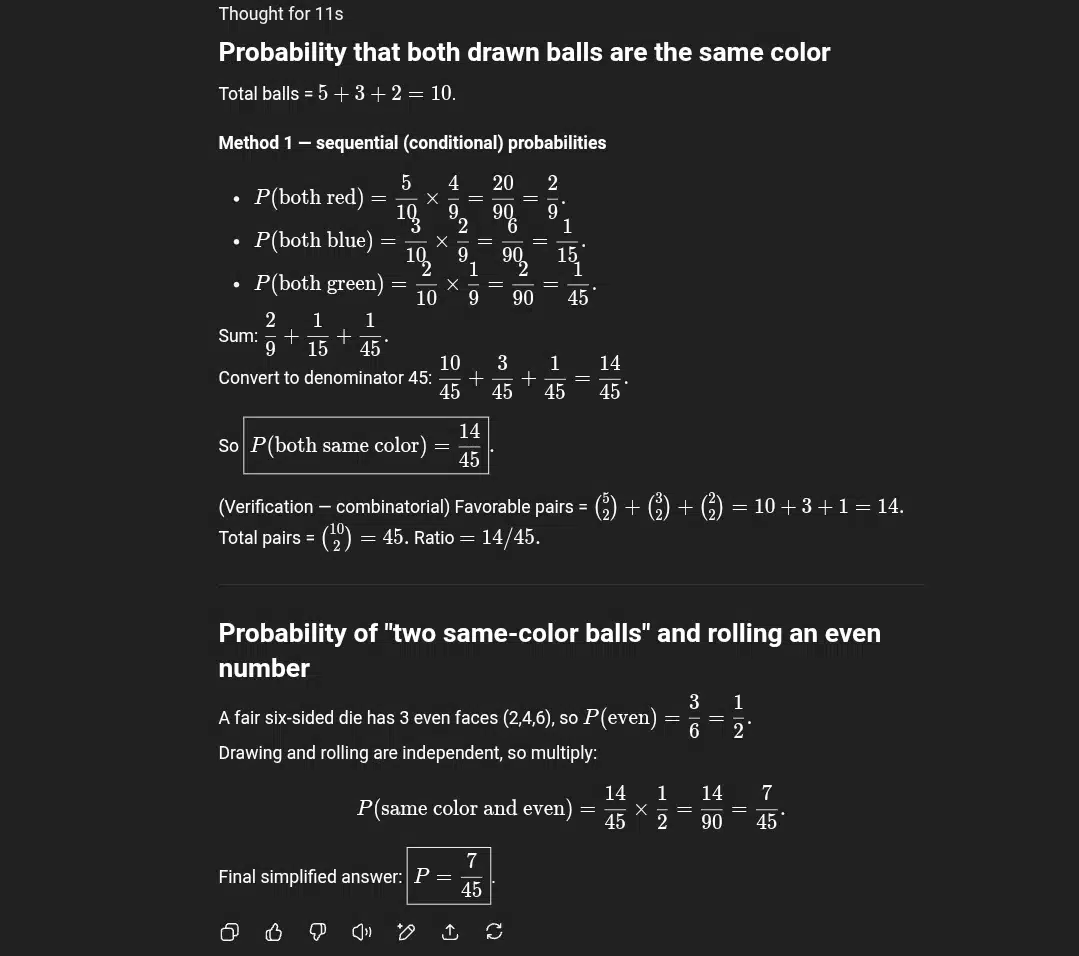

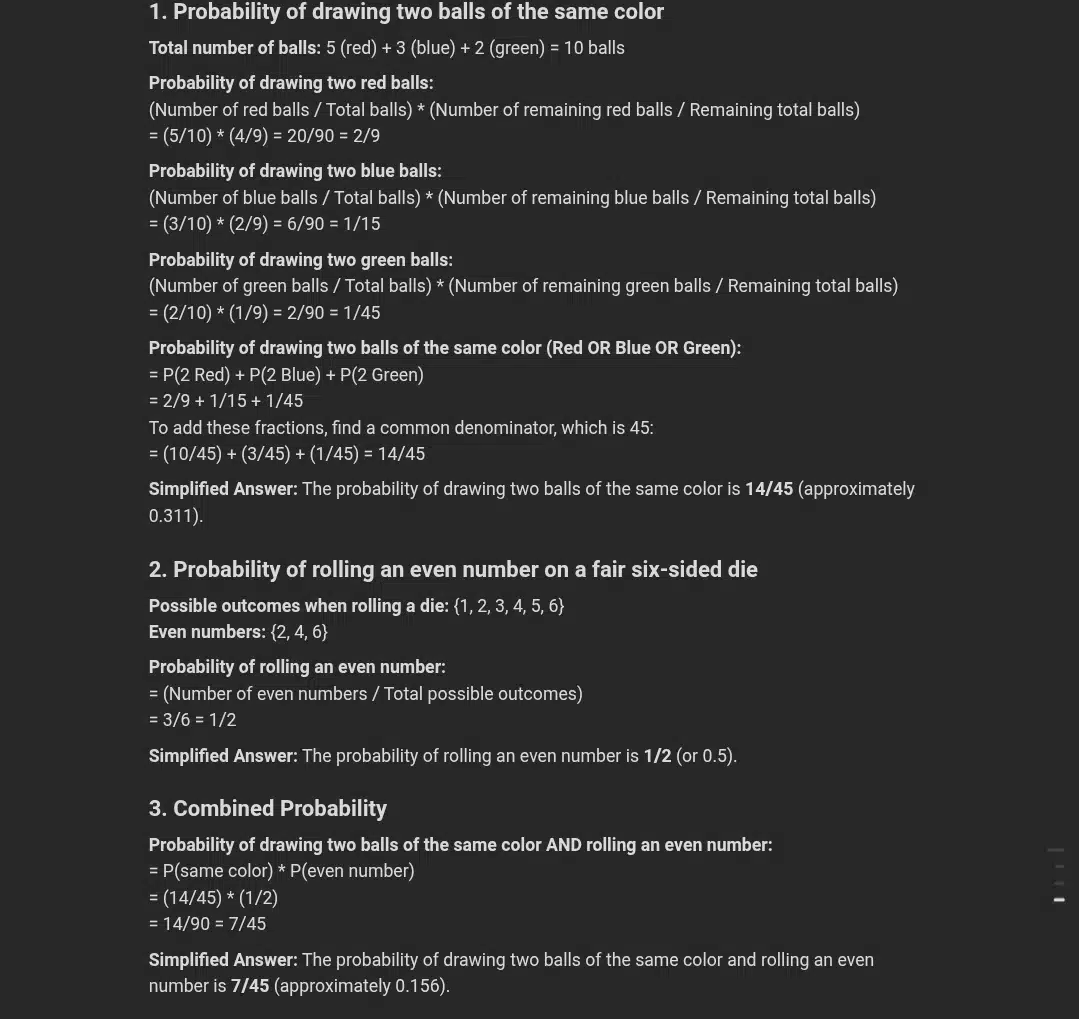

Task 3: Solve a complex math problem

Prompt:

“A bag contains 5 red balls, 3 blue balls, and 2 green balls. You randomly draw 2 balls without replacement.

- What is the probability that both balls are the same color?

- If the balls are numbered 1 to 10, and you roll a fair six-sided die, what is the probability of drawing two balls of the same color and rolling an even number?

Show your calculations step-by-step and simplify your answers.”

This challenge tests multi-step reasoning: it combines combinatorics with the probability of independent events (the ball draw and the die roll), which makes it a good way to spot errors.
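As a reference point (my own check, not output from either tool), here is a minimal Python sketch of the expected answers. It assumes the prompt’s bag of 5 red, 3 blue, and 2 green balls and a fair six-sided die. It enumerates every unordered pair of balls, counts the same-color pairs exactly with `fractions.Fraction`, and then multiplies by the chance of an even roll, which is valid only because the draw and the roll are independent. The ball numbering in the prompt doesn’t affect the color counts, so the sketch ignores it; the helper name `same_color_probability` is purely illustrative.

```python
from fractions import Fraction
from itertools import combinations

# Bag from the Task 3 prompt: 5 red, 3 blue, 2 green (10 balls in total)
balls = ["red"] * 5 + ["blue"] * 3 + ["green"] * 2

def same_color_probability(bag):
    """Exact probability that two balls drawn without replacement share a color."""
    pairs = list(combinations(range(len(bag)), 2))  # C(10, 2) = 45 unordered pairs
    same = sum(1 for i, j in pairs if bag[i] == bag[j])
    return Fraction(same, len(pairs))

# Part 1: (C(5,2) + C(3,2) + C(2,2)) / C(10,2) = (10 + 3 + 1) / 45
p_same = same_color_probability(balls)

# Part 2: the die roll is independent of the draw; 3 of the 6 faces are even
p_even = Fraction(3, 6)
p_both = p_same * p_even

print(p_same, p_both)  # 14/45 7/45
```

Enumerating pairs is equivalent to the combinatorics route, so a correct answer should land on 14/45 for the first part and 7/45 once the even-roll probability of 1/2 is applied.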

ChatGPT delivered a textbook-perfect solution. It used two different approaches (sequential probability and combinatorics) to cross-check the result for the first part, which boosts confidence in the accuracy. The work was neatly structured, with clear fraction simplifications and a logical flow. The independence of the two events (ball drawing and die roll) was explicitly stated, which is an important detail many miss. Overall, it felt precise, well-explained, and mathematically airtight.

Manus also arrived at the correct answers and showed step-by-step reasoning. The explanation was straightforward and easy to follow, which is good for beginners. However, it didn’t verify the result with an alternative method, and the presentation leaned more toward listing formulas than offering deeper conceptual insight. Its fraction simplifications were also correct, but presented less elegantly than ChatGPT’s structured, proof-style walkthrough.

Winner: ChatGPT for precision, dual-method verification, and a more polished explanation style.

Task 4: Generate a custom image

Prompt:

“Create a hyper-realistic digital illustration of a futuristic city at sunset, with flying cars, glass skyscrapers reflecting orange light, pedestrians wearing holographic clothing, and a central floating park suspended in mid-air. The scene should be vibrant and cinematic, viewed from a high vantage point.”

ChatGPT’s generated image delivers a breathtaking futuristic city at sunset, with strong cinematic lighting, floating green islands, and sleek hovercrafts. The perspective is immersive, showing a small group of people gazing toward the floating forest platform. There’s an excellent balance between warm sunset tones and cool metallic blues, giving the image depth and vibrancy. The details, from the reflection on the glass towers to the subtle texture on clothing, feel polished and concept-art ready. This result nails both the creative brief and visual appeal.

Manus created a similar futuristic skyline with floating islands and a sunset backdrop. This image uses a wider perspective that captures more of the environment and includes a larger crowd of pedestrians, which adds a sense of liveliness and activity. The lighting is consistent with the sunset theme but is less focused on detailed reflections and textures compared to ChatGPT’s image.

Verdict: Both images successfully capture the core elements of the prompt and showcase different strengths. ChatGPT’s image emphasizes polished lighting and cinematic mood, while Manus’s image offers a wider perspective with more activity and environmental detail. Neither is clearly superior, so this round is a tie.

Task 5: Find credible sources

Prompt:

“Please find and provide direct links to at least three reputable, credible sources such as peer-reviewed studies, authoritative articles, or well-regarded reports on the topic of the effects of blue light exposure on sleep quality. The sources should be recent (preferably within the last 5 years), relevant, and from trusted websites or academic publishers. Include a brief 1-2 sentence description of each source’s focus or findings.”

ChatGPT provided three clear, reputable sources, primarily from well-known organizations and publishers such as Harvard Health Publishing and the Sleep Foundation, alongside a relevant journal article from Chronobiology in Medicine. Each source includes a succinct summary that explains the core findings related to blue light’s effects on sleep quality. The response leans slightly more towards accessible, reader-friendly sources, which makes it approachable for a general audience. Links are included, though two of the three citations are presented as references rather than straightforward URLs, which makes them harder to verify immediately.

Manus presented three peer-reviewed scientific studies, complete with direct URLs to each article. The selections focus on specific research outcomes, such as the effect of blue light filter apps on sleep efficiency, the influence of blue-enriched light on older adults, and blue light exposure’s impact on young athletes. The summaries provide detailed insights into the methodology and conclusions, demonstrating an academic depth. The URLs direct users to primary sources, increasing transparency and verifiability, though the language and framing may be denser for a general audience.

Verdict: Both tools successfully identified credible, recent sources related to blue light and sleep quality. ChatGPT’s results favor broader accessibility and well-known health authorities, making it easier for casual readers to grasp key points. Manus focuses on direct scientific literature, providing thorough academic references with clear, active links, which is valuable for more research-oriented users.

Given their different strengths, both performed well but serve slightly different user needs: ChatGPT for approachable summaries with authoritative backing, Manus for in-depth academic sourcing. For this task, both delivered credible, relevant, and accessible sources, so this round is a tie.

Task 6: Make a presentation slide deck

Prompt:

“Create a clean, simple 5-slide presentation slide deck explaining the concept of ‘How Blockchain Technology Works’ for a beginner audience. The slides should include:

- An engaging title slide

- A brief overview of blockchain

- How blockchain transactions work

- Key benefits of blockchain technology

- A simple real-world example of blockchain in use

Each slide should have concise bullet points and clear headings suitable for a presentation.”

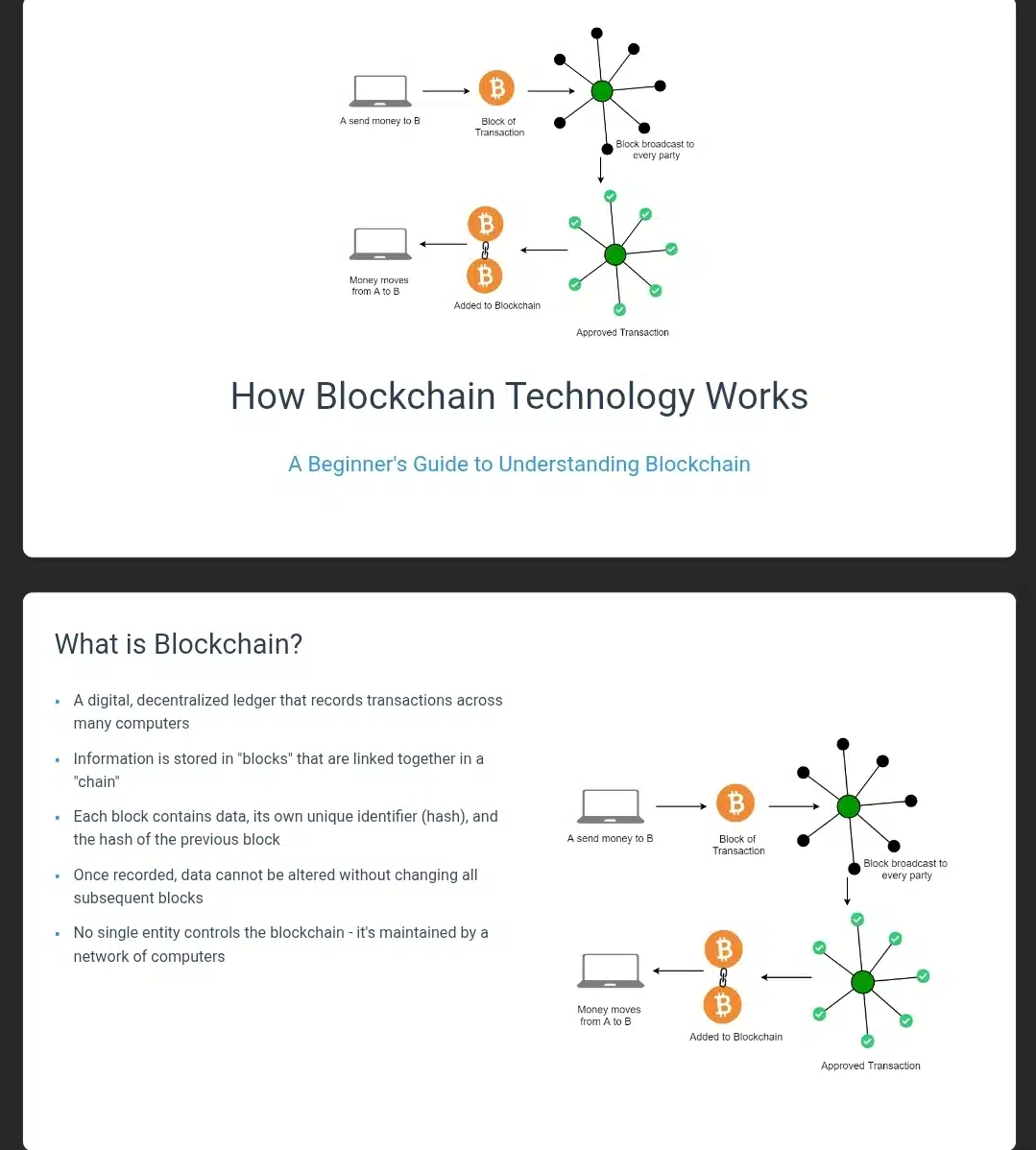

Manus delivered both the content and the actual slide designs, which adds significant value for users looking for a ready-to-use presentation. The slides were clean, visually organized, and suitable for a beginner audience. The content was clear, concise, and well-structured, covering the key aspects of blockchain technology effectively. Manus’s integration of design and content streamlines the user’s workflow, reducing the need for manual formatting and slide creation.

ChatGPT provided a well-organized, clear 5-slide outline with concise bullet points and appropriate headings. The content itself was informative, beginner-friendly, and easy to follow, fulfilling the brief for explaining blockchain technology effectively. However, ChatGPT only produced text content rather than designed slides, leaving the user to format and build the presentation manually. While the content quality is solid, the lack of slide design is a limitation compared to Manus’s more complete deliverable.

Verdict: Both tools offer strong content quality for the task, presenting blockchain technology in an accessible way. Manus goes a step further by delivering finished slide designs along with content, which enhances usability and saves time. ChatGPT provides excellent content but requires additional work to create the actual slides.

For Task 6, Manus takes the lead due to its combination of content and design, offering a more comprehensive solution for presentation creation.

Task 7: Write a short script

Prompt:

“Write a 60-second video script for the launch of a fictional smart home device called ‘GlowSense’, a sleek, voice-activated ambient lighting system that adjusts colors and brightness based on mood and time of day. The script should be engaging, highlight key features, and include a call to action at the end.”

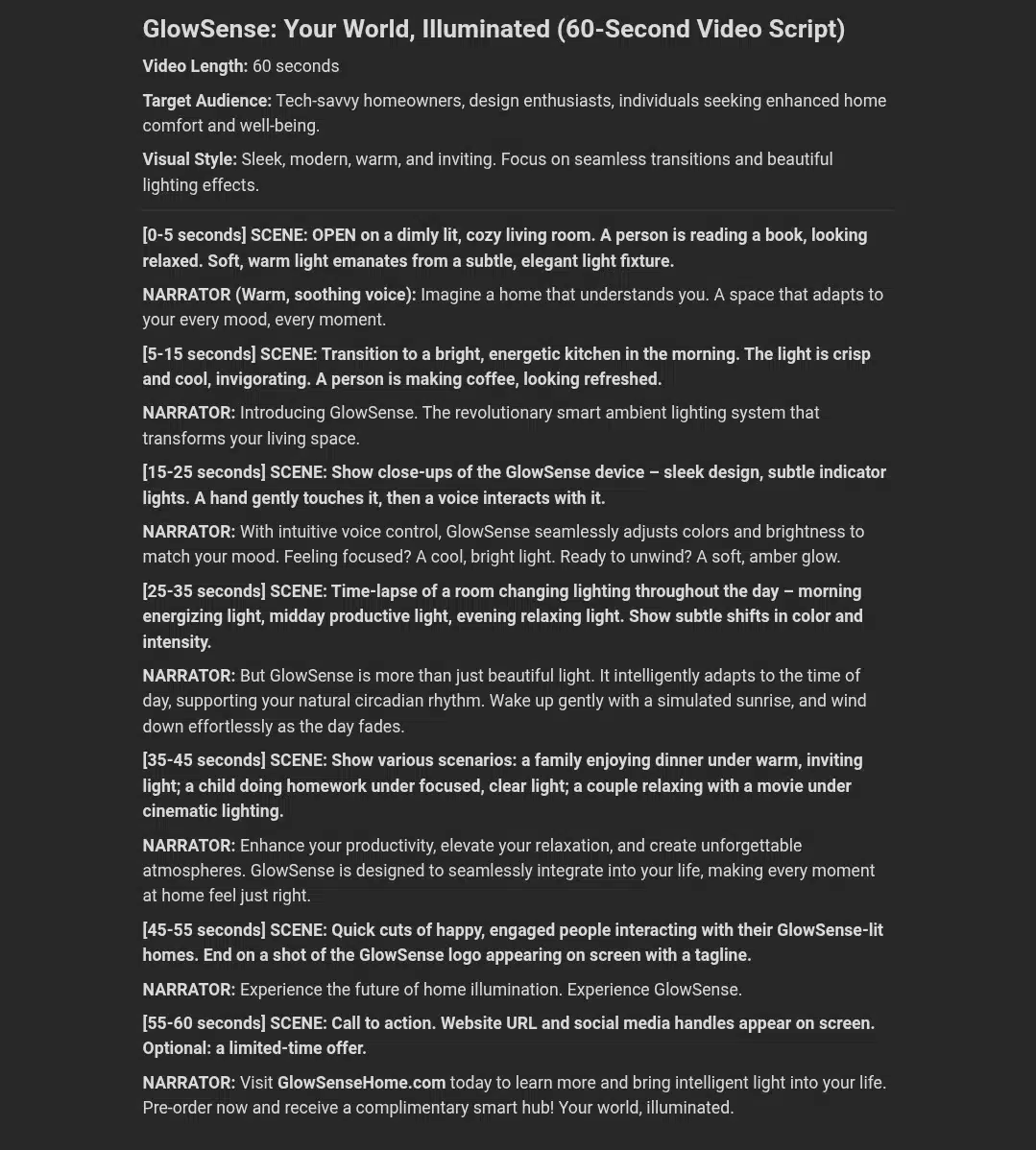

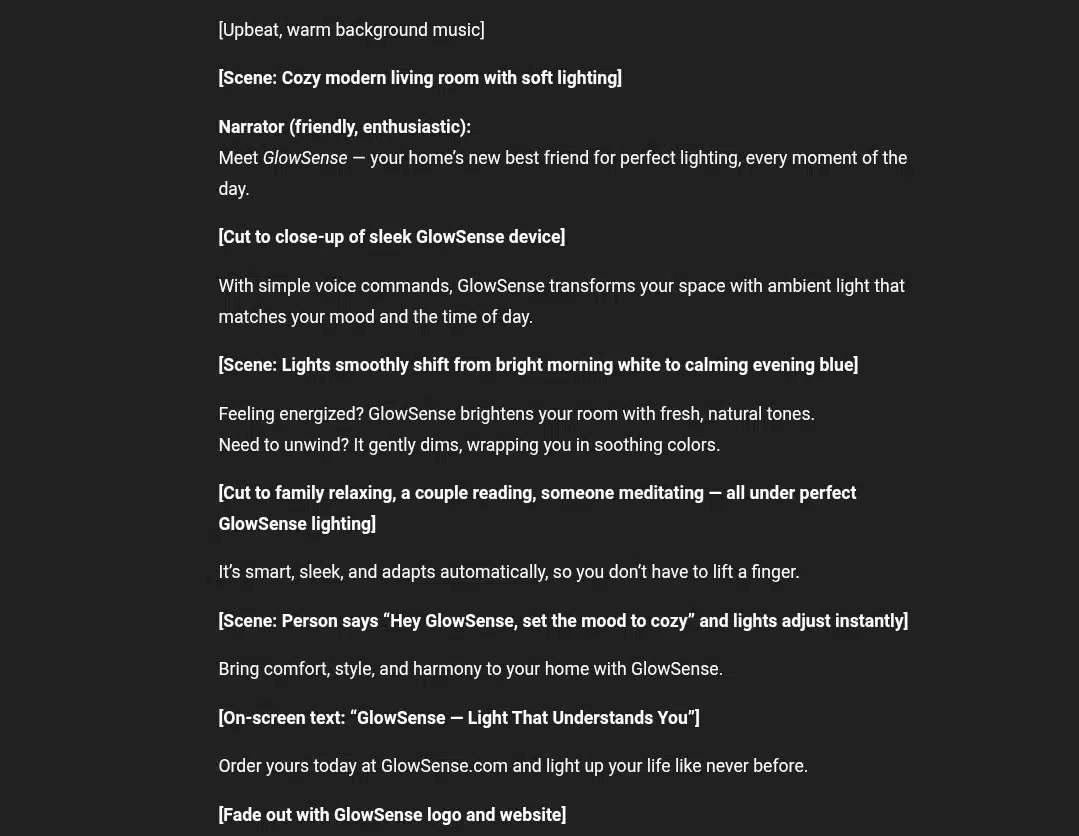

Manus delivered a fully fleshed-out, time-stamped 60-second video script with detailed scene descriptions, narrator tone suggestions, and a clear flow that moves through different lighting scenarios throughout the day. The script emphasizes the product’s features in a storytelling style, creating an immersive experience with visual cues and emotional appeal. The inclusion of specific timings and scene transitions makes it very practical for video production. It also closes with a clear call to action and promotional offer, adding marketing impact.

ChatGPT provided a concise, engaging script with a warm and friendly narrator voice. The script covers the product’s key features clearly and uses simple, relatable scenes to illustrate GlowSense’s benefits. While the script lacks specific timing or detailed scene directions, it is tightly focused and easy to follow. The call to action is direct and effective. The tone is upbeat and approachable, suited to a general audience.

Verdict: Both scripts effectively highlight GlowSense’s features and benefits, making them well suited for a 60-second product launch video. Manus’s script stands out for its detailed production-ready format, including timing and scene directions, which would be highly useful for video creators. ChatGPT’s script excels in brevity and simplicity, delivering a warm, clear message without overwhelming detail.

Overall, Manus offers a more comprehensive and practically oriented script, while ChatGPT delivers a solid, straightforward script ideal for quick production or smaller-scale projects. Both meet the task’s requirements well.

Task 8: Translate with context

Prompt:

“Translate the following paragraph into French, preserving the tone, style, and meaning as closely as possible. The paragraph is:

‘Technology has transformed the way we connect with each other, making the world smaller and more accessible. From instant messaging to video calls, communication has become faster and more convenient, allowing people to maintain relationships across great distances.’”

Manus delivers a clear, accurate French translation that closely follows the original sentence structure and meaning. The tone remains neutral and informative, with natural phrasing such as “rendant le monde plus petit et plus accessible” and “permettant aux gens de maintenir des relations.” The translation reads smoothly and preserves the original intent without adding or omitting key details.

ChatGPT offers a slightly more natural and fluid rendition, translating “the way we connect with each other” as “notre façon de communiquer” rather than the more literal “la façon dont nous nous connectons les uns aux autres.” This simplifies and generalizes the original but fits well in French. The choice of “préserver leurs relations malgré la distance” conveys a similar meaning with a more emotive nuance (“preserving” relationships). The tone is warm and accessible, making the translation feel more conversational.

Verdict: Both translations are accurate and preserve the paragraph’s meaning and tone effectively. Manus sticks closer to a literal translation, which is precise and formal. ChatGPT takes minor liberties to enhance flow and emotional resonance, offering a slightly more natural, conversational style.

For this task, both perform well and the choice between them depends on preference for formality versus conversational tone. Overall, both are solid translations with no significant errors.

Task 9: Plan a one-day trip

Prompt:

“Plan a full-day itinerary for a one-day trip to Kyoto, Japan. Include suggested activities, sights to see, meal options, and time slots to help a traveler make the most of their day in the city.”

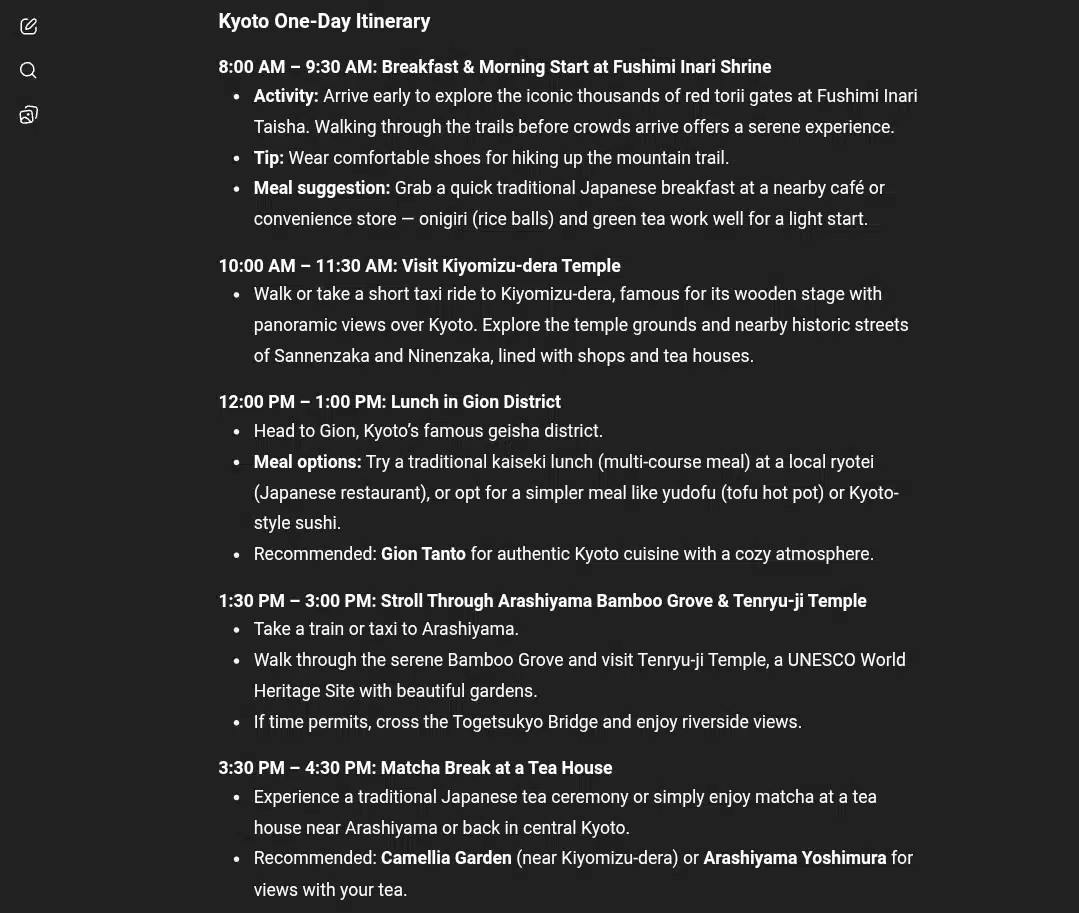

ChatGPT’s itinerary is well-structured and user-friendly, providing clear time slots with suggested activities, meal options, and practical tips. The flow balances major landmarks with cultural experiences like tea ceremonies and market strolls, giving a good mix of sightseeing and local flavor. The timing is realistic, and the inclusion of transport tips and footwear advice shows attention to traveler needs. The language is accessible, making it suitable for a broad audience, including first-time visitors.

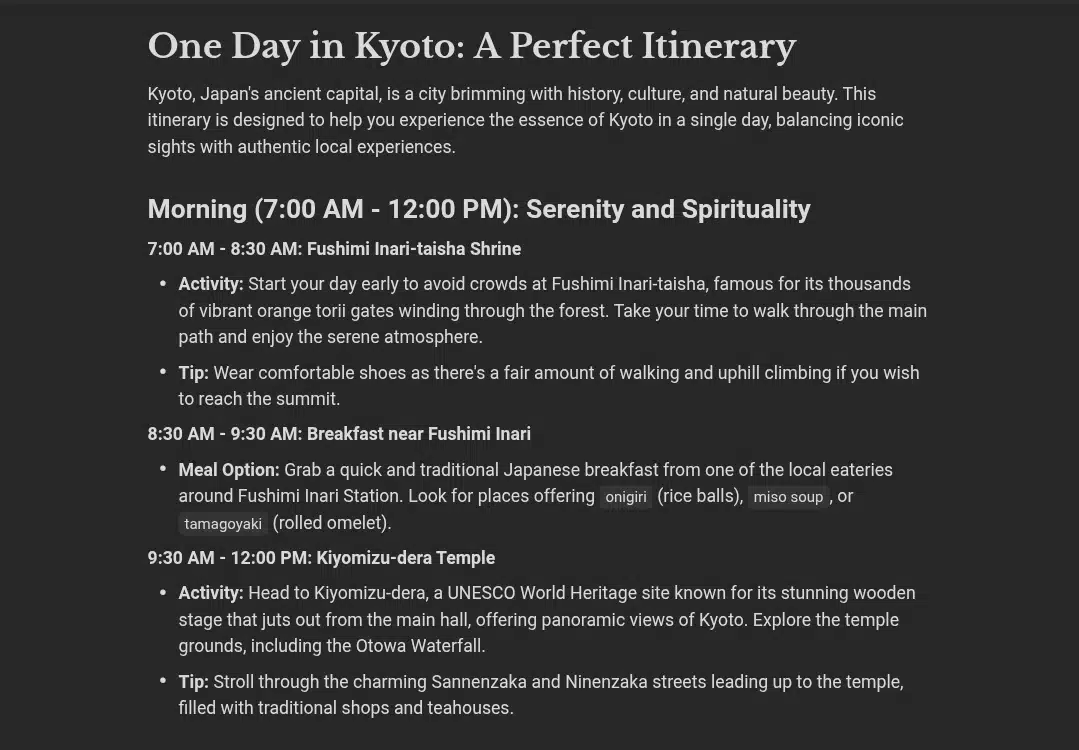

Manus offers a more detailed and comprehensive itinerary with slightly earlier start times and additional landmarks such as Kinkaku-ji (Golden Pavilion) and Kyoto Tower for nighttime views. The itinerary is divided into clear time blocks (morning, afternoon, and evening), with thorough descriptions of each stop and cultural context. Manus also suggests dining options with local dish names, enhancing the authentic experience. The inclusion of a short boat ride at Arashiyama adds a unique element not seen in ChatGPT’s version. Overall, Manus’s plan is richer in detail and covers more ground, though it may feel more ambitious for some travelers.

Verdict: Both itineraries deliver solid, practical plans for experiencing Kyoto in one day, successfully mixing iconic sites with cultural and culinary highlights. ChatGPT’s plan is concise, approachable, and well-paced, ideal for travelers seeking a balanced and manageable day. Manus’s itinerary excels in depth and variety, offering extra activities and local insights for travelers wanting a fuller experience.

Choosing between the two depends on traveler preferences: ChatGPT for a relaxed, easy-to-follow plan; Manus for a more packed, immersive day. Both meet the task well and provide valuable, actionable guidance.

Task 10: Brainstorm product names

Prompt:

“Brainstorm 10 unique, catchy, and brandable names for a new fitness app focused on personalized workout plans, progress tracking, and motivational coaching. The names should be easy to remember, reflect fitness and health, and be suitable for app store listings.”

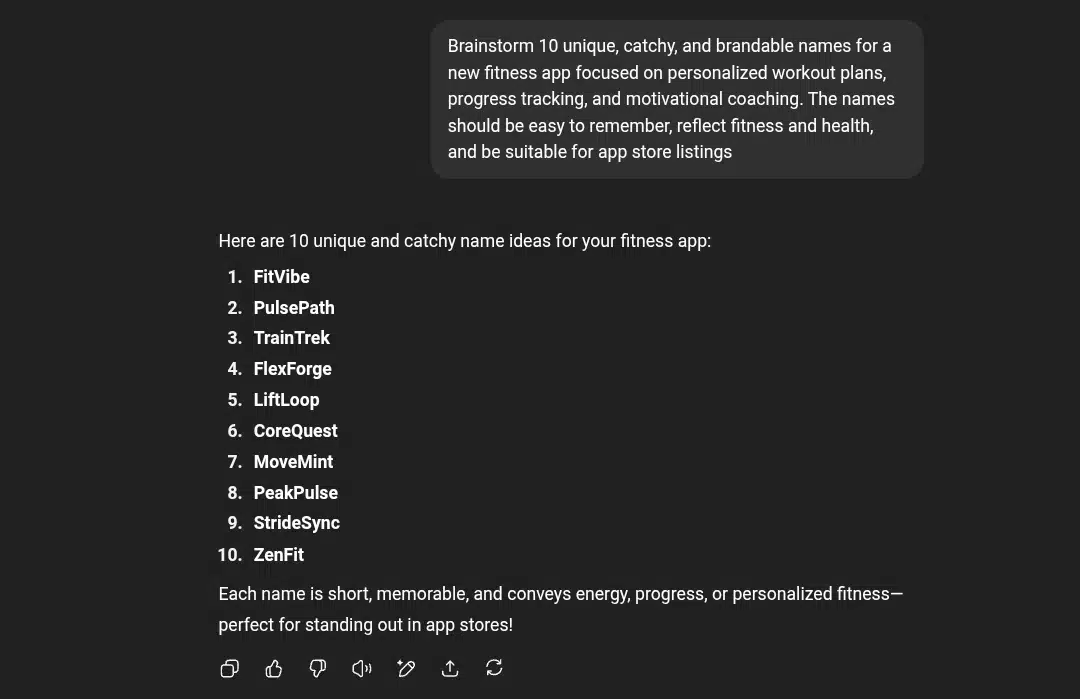

ChatGPT delivers 10 concise, catchy, and easy-to-remember fitness app names. The names are mostly short, with a modern, energetic feel that reflects themes of movement, progress, and fitness (e.g., FitVibe, PulsePath, MoveMint). The list is straightforward and versatile, suitable for app store presence. However, the names come without explanations or deeper branding context, which limits insight into their conceptual strength.

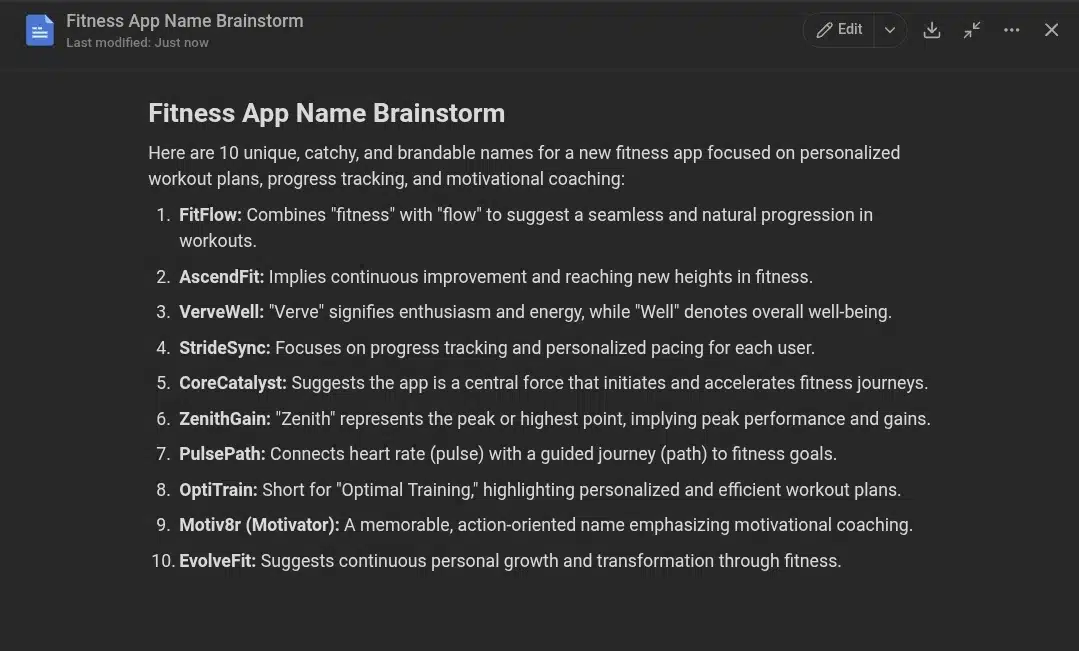

Manus provides 10 creative and brandable names paired with thoughtful, descriptive explanations that clarify the intended meaning and brand positioning of each name. Names like AscendFit, VerveWell, and Motiv8r suggest not only fitness but also motivation, well-being, and progression. This additional context adds depth and helps potential users or developers understand the branding potential behind each option. Manus also mixes straightforward and clever naming styles, enhancing variety.

Verdict: Both tools deliver strong, memorable name ideas fitting a personalized fitness app. ChatGPT’s list is punchy and easy to scan, making it great for quick inspiration. Manus offers richer context that aids in branding decisions and understanding the narrative each name can carry.

For Task 10, Manus has a slight edge due to the added branding insights, but both meet the brief effectively and provide valuable options.

Final verdict: maximizing Manus and ChatGPT

After testing Manus and ChatGPT across a diverse set of 10 creative and practical challenges, I found that each AI assistant excels in different areas, which makes them complementary rather than outright competitors.

Where ChatGPT excels

- Clarity and Accessibility: ChatGPT consistently delivers clear, concise, and reader-friendly content that appeals to a broad audience. Its outputs often lean toward straightforward, polished language ideal for general use and quick comprehension.

- Conversational and Warm Tone: Especially in writing tasks like blog intros, translations, and scripts, ChatGPT balances professionalism with a natural, engaging voice.

- Quick Content Drafting: ChatGPT’s strengths include rapid generation of well-structured outlines, summaries, and scripts that users can quickly adapt or expand.

Where Manus excels

- Comprehensive, Production-Ready Outputs: Manus often goes beyond text to deliver fully formatted presentations, detailed scripts with timing and scene directions, or sourced academic references with direct URLs. This adds practical value for users seeking near-finished products.

- Depth and Detail: Manus provides richer context and explanations, especially in tasks like naming and research sourcing, helping users understand the why behind each suggestion.

- Design and Visual Integration: Manus’s ability to design slides and visually structure deliverables saves users time and effort, making it ideal for professional or academic settings requiring polished presentations.

How to maximize both tools

- Use ChatGPT for: Quick content creation, approachable summaries, conversational text, and when you need a clear, no-frills draft to build on.

- Use Manus for: Tasks requiring detailed formatting, research-backed sourcing with citations, ready-to-use slide decks, and more elaborate creative brainstorming.

Wrap up

Rather than choosing one tool over the other, leveraging both Manus and ChatGPT strategically unlocks greater productivity and quality. Manus complements ChatGPT’s speed and accessibility with polish, depth, and practical finish. Together, they offer a powerful toolkit for content creators, marketers, educators, and anyone looking to produce high-quality, varied outputs with AI assistance.
