If you’ve ever wondered whether Claude or ChatGPT is the better AI model for writing assistance, I’m here to answer that question.

Right now, AI tools are reshaping how we create content, brainstorm ideas, and even hit deadlines we forgot about until the last second. Picking the right AI can seriously increase your productivity, whether you’re a seasoned writer, a busy content creator, or just someone who does a lot of writing.

This isn’t the first time I’ve compared ChatGPT and Claude

I’ve previously compared the two AI models specifically for coding and tested them both as general AI assistants. I’ve also lined up ChatGPT against Meta AI and Google Gemini. Plus, we have a pretty extensive review just focused on Claude if you want to deep-dive later.

But today’s spotlight is a little different.

I’m putting Claude and ChatGPT head to head across 10 real-world writing prompts, covering everything from press releases and blog posts to email drafts and social media copy.

Here’s what you can expect from this article:

- A full breakdown of how Claude and ChatGPT tackled 10 different writing tasks, along with screenshots.

- Honest feedback on where each one shone (and where they didn’t).

- A quick comparison table if you just want the highlights.

- Practical advice on which AI might fit your writing style and goals.

By the end, you’ll have a crystal-clear idea of which model suits your writing needs best and maybe even pick up a few workflow tips along the way.

TL;DR: Key takeaways from this article

- Claude excels in structured, formal writing (press releases, case studies, FAQs) while ChatGPT dominates creative/engaging content (blogs, social media).

- From the tests, Claude won 5, ChatGPT won 4, with 1 tie in creative storytelling.

- Pricing for both starts at $20/month for pro features, with free tiers available.

- The hybrid approach works best. Use Claude for research/drafts, ChatGPT for polishing hooks.

How I tested Claude vs ChatGPT for writing

To put Claude and ChatGPT to the test, I chose 10 diverse writing prompts to see how each handled a range of tasks. The prompts covered creative writing, blog posts, technical content, email drafts, and marketing copy, to name a few. I wanted to know how well each AI could tackle real-world writing scenarios.

Criteria for evaluation:

Here are the criteria I used to judge them:

- Accuracy: Does the writing make sense? Is it factual? Is the structure solid?

- Clarity: Can I understand the piece on the first read, or am I left scratching my head?

- Creativity: Does it bring a fresh angle or a little spark that makes the content stand out?

- Usability: Is the output ready to roll, or would I need to spend half an hour cleaning it up?

My process:

The technique was simple but thorough. I fed the same 10 prompts to both Claude and ChatGPT, collected their outputs, and compared them side by side. No fancy tricks, no cherry-picking. Just a straightforward head-to-head battle.
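For readers who want to rerun a test like this, here is a minimal Python sketch of the same idea: send identical prompts to each model, keep the outputs side by side, and leave a blank note for each of the four criteria. It is an illustration under stated assumptions, not the setup used for this article. The `models` callables are hypothetical stand-ins for however you query each assistant (pasting into the chat window works just as well), and the `Comparison` and `run_comparison` names are made up for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# The four criteria named in this article.
CRITERIA = ("accuracy", "clarity", "creativity", "usability")


@dataclass
class Comparison:
    """One prompt, every model's output, and empty notes to fill in by hand."""
    prompt: str
    outputs: Dict[str, str]
    notes: Dict[str, Dict[str, str]] = field(default_factory=dict)
    winner: str = ""  # filled in after reading both outputs


def run_comparison(
    prompts: List[str],
    models: Dict[str, Callable[[str], str]],
) -> List[Comparison]:
    """Feed the same prompts to every model and collect outputs side by side."""
    results = []
    for prompt in prompts:
        # Every model gets the identical prompt text.
        outputs = {name: ask(prompt) for name, ask in models.items()}
        # One blank note per criterion per model, to be written by the reviewer.
        notes = {name: {c: "" for c in CRITERIA} for name in models}
        results.append(Comparison(prompt=prompt, outputs=outputs, notes=notes))
    return results


# Hypothetical stand-ins: replace these with your own way of getting a response.
def fake_claude(prompt: str) -> str:
    return f"[Claude draft for: {prompt}]"


def fake_chatgpt(prompt: str) -> str:
    return f"[ChatGPT draft for: {prompt}]"


results = run_comparison(
    ["Write a 100-word email inviting remote team members to a planning session."],
    {"Claude": fake_claude, "ChatGPT": fake_chatgpt},
)
```

The judging itself stays manual in this sketch: the code only guarantees that both models see the same prompt and that no criterion gets skipped.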

Let me walk you through how each one performed, prompt by prompt.

Claude and ChatGPT prompt-by-prompt breakdown: Which performed better?

Prompt 1: Creative short story

I used this prompt to test Claude and ChatGPT’s ability to craft an engaging and imaginative narrative from scratch. I wanted to see a strong storytelling structure, adherence to a chosen genre, and some plot complexity, all while showcasing stylistic flair.

Prompt: “Write a 300-word noir-style short story about a disgraced detective in 1940s New York who must solve a murder tied to a corrupt mayor. Include:

- A flawed protagonist with a distinct voice.

- At least one red herring.

- A twist ending.

- Period-accurate slang.”

Result:

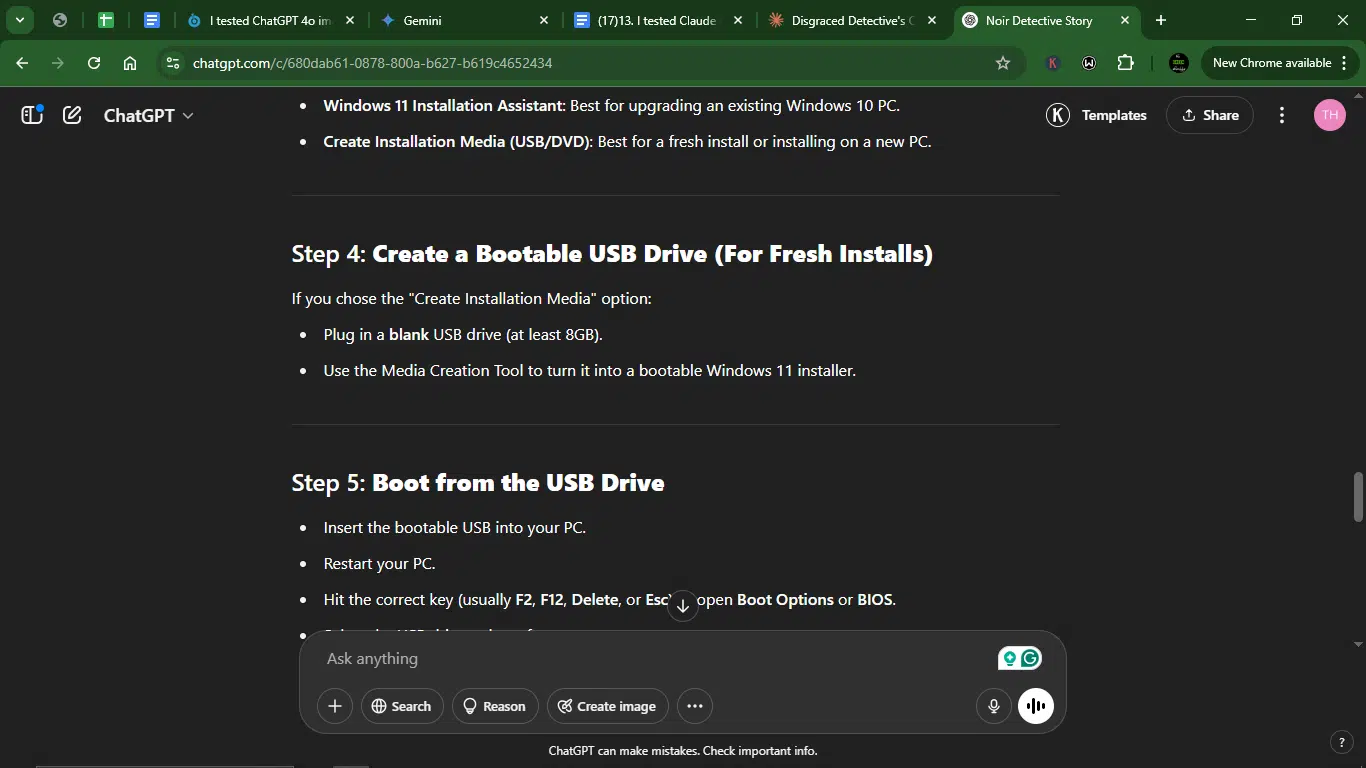

Claude’s response:

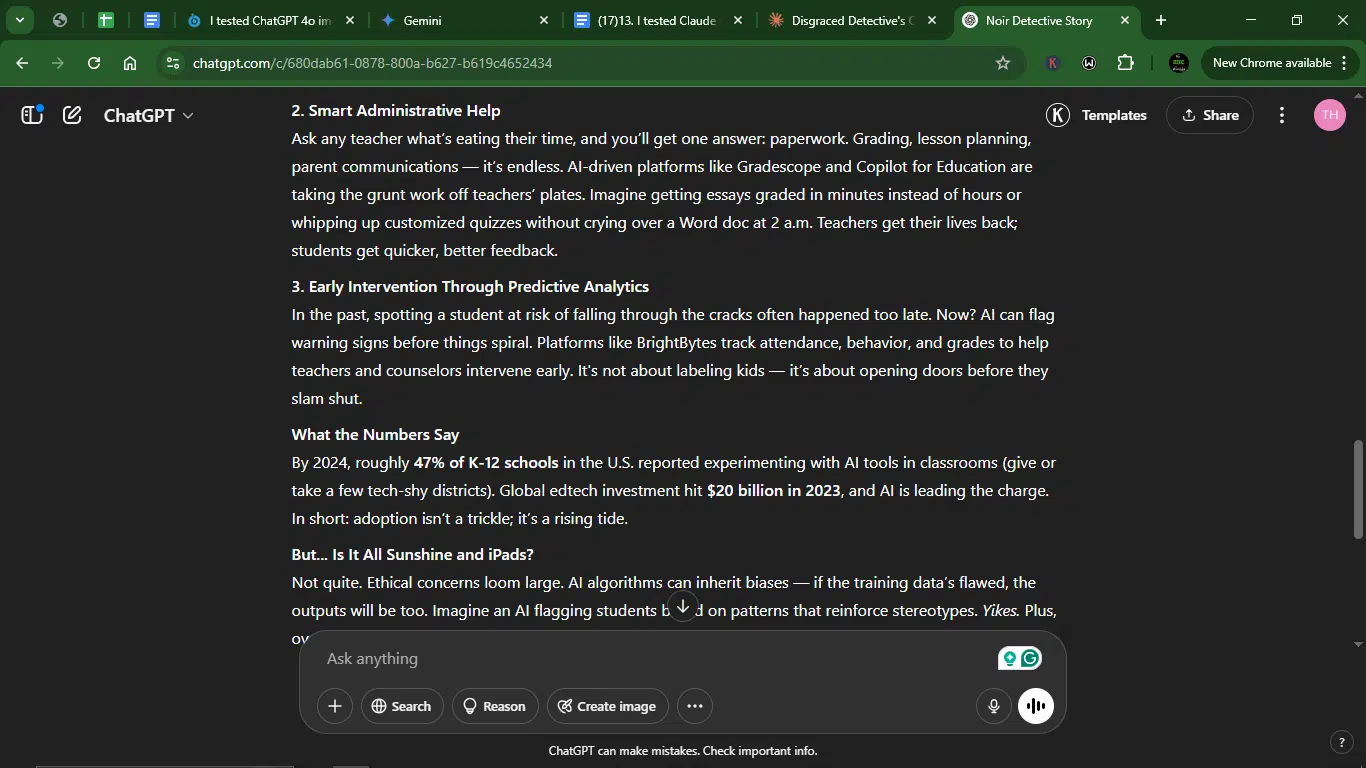

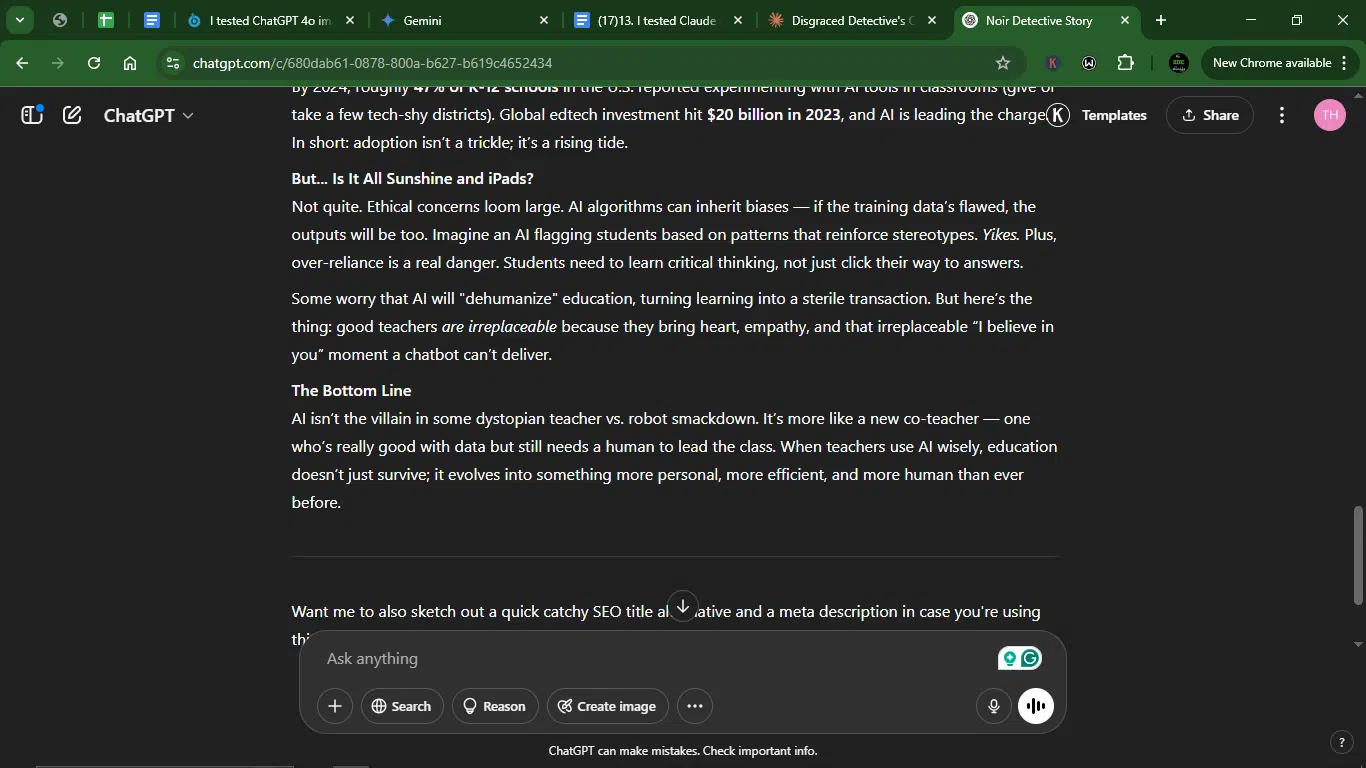

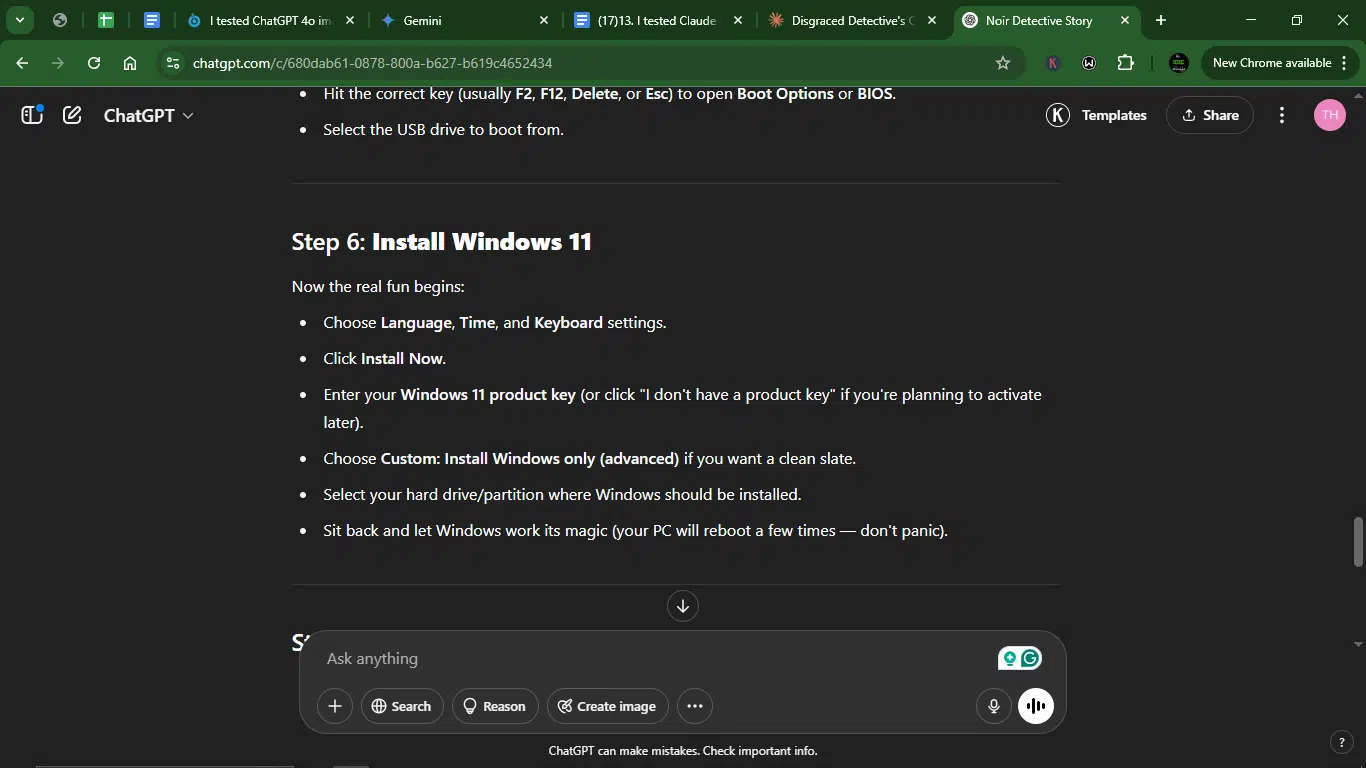

ChatGPT’s response:

Accuracy:

- Claude: Hits all the key elements of the prompt. We’ve got the disgraced detective, the corrupt mayor, a murder, a red herring (Moretti), a twist ending (the mayor hired the detective in order to make him the next victim), and period-accurate slang (“flatfoot,” “hinky,” “zipped”).

- ChatGPT: It also includes all the requested elements: a disgraced detective, a corrupt mayor (indirectly through his son’s racket), a murder, a red herring (Rosie as the initial suspect), a twist ending (Rosie is the new boss), and some period-appropriate slang (“iced,” “stiff,” “mugs,” “gumshoe,” “heater”).

Creativity:

- Claude: Offers a compelling twist where the detective is unknowingly hired to be the next victim. The protagonist’s internal monologue adds to the noir feel. The connection between the murder and the corrupt contracts provides a solid motive.

- ChatGPT: Presents a clever twist with the seemingly vulnerable singer revealed as the mastermind. The tie-in to a war racket adds a layer of historical context and intrigue. The protagonist’s voice is distinct and suitably cynical.

Clarity:

- Claude: The narrative unfolds logically, and the clues are presented in a way that the reader can follow the detective’s reasoning. The motivation behind the murder becomes clear as the story progresses.

- ChatGPT: The story is also easy to follow, and the protagonist’s investigation is clearly laid out. The red herring is effectively introduced and then debunked, leading to the final twist.

Usability:

- Claude: The story is well-written and could be used with minimal editing. The word count is close to the requested 300 words.

- ChatGPT: The story is also well-written and usable with little editing. The word count is also close to the target.

Winner: Tie

Why? Both AIs crafted compelling noir stories that met all the prompt requirements. While Claude’s story featured a more direct connection to the corrupt mayor in the plot, ChatGPT’s creative twist with the singer as the mastermind showed equal storytelling skill. Each AI demonstrated strong narrative abilities, with neither outperforming the other across all evaluation criteria.

Prompt 2: Niche blog post

With this prompt, I wanted to see how well they could write for a specific audience with specialized interests. I was testing their ability to balance clarity, engagement, and factual accuracy while still sounding natural and insightful.

Prompt: “Compose a 500-word blog post titled ‘Why AI Won’t Replace Teachers But Will Transform Education.’ It should cover 3 concrete examples of AI augmenting classrooms (e.g., personalized learning), data on adoption rates (estimate if unavailable), and ethical concerns (bias, over-reliance). Address 1-2 counterarguments.”

Result:

Claude’s response:

ChatGPT’s response:

Accuracy:

- Claude: Addresses all parts of the prompt: the central argument, three concrete examples of AI augmentation, ethical concerns, and counterarguments. On adoption, it cites current usage of some tools but doesn’t explicitly frame these figures as “adoption rates” or give a broader estimated percentage for AI adoption in education as directly as ChatGPT does.

- ChatGPT: Also covers all aspects of the prompt, providing examples, estimated adoption rates, ethical concerns, and counterarguments. While it addresses counterarguments, the exploration could have been a bit more in-depth, perhaps dissecting the underlying assumptions of those who believe AI will replace teachers.

Creativity:

- Claude: Presents a more measured and balanced tone, effectively arguing its central point. The use of a teacher’s quote adds a touch of realism. Compared to ChatGPT, Claude’s tone is less engaging and lacks the same level of enthusiasm.

- ChatGPT: Adopts a more enthusiastic and forward-thinking tone, using lively language and rhetorical questions to engage the reader. The analogies (supercharged toolbelt, personal tutor who never gets cranky) are creative.

Clarity:

- Claude: The blog post is well-structured with clear headings and concise explanations of each point. The arguments are easy to follow.

- ChatGPT: Organizes its points clearly with numbered examples and uses accessible language. The transitions between sections are smooth.

Usability:

- Claude: The blog post is ready to be used with minimal editing. The word count is within the requested range.

- ChatGPT: The blog post is also highly usable with little need for modification. The word count is appropriate.

Winner: ChatGPT.

Why? For this prompt, ChatGPT edges out Claude. While both models provided accurate and usable blog posts, ChatGPT’s more engaging and forward-thinking tone, along with a clearer attempt to quantify adoption rates (even if estimated), makes it slightly more effective as a blog post aimed at a broader audience. Claude’s response was solid and well-reasoned but lacked the same level of stylistic flair.

Prompt 3: Technical guide

This prompt tests the AI’s ability to deliver technically accurate, step-by-step instructions in a way that’s easy to follow. I’m looking for a balance of precision, clarity, and completeness, especially when explaining complex tools, systems, or processes. The goal is to see if the AI can teach effectively without dumbing things down, like a good tech mentor would.

Prompt: “Create a step-by-step guide for installing Windows 11 on a personal computer.”

Result:

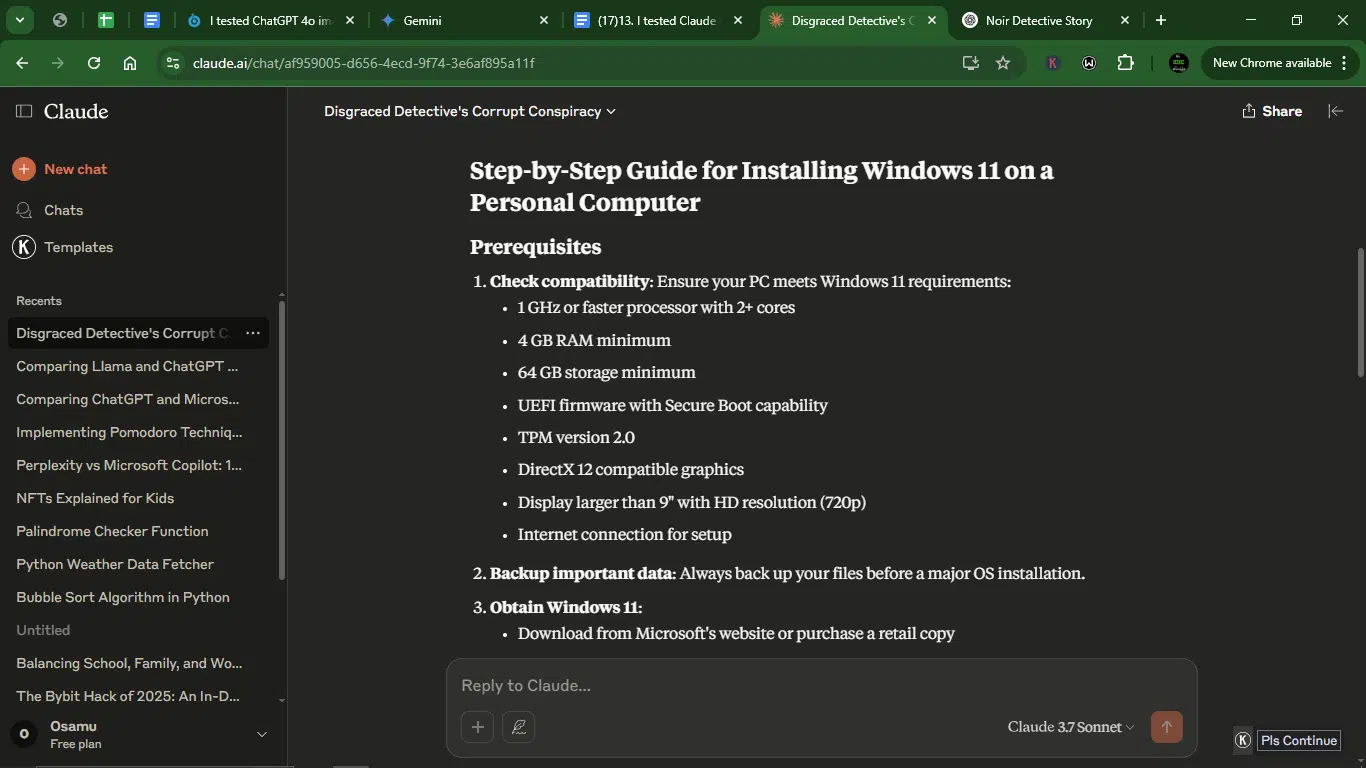

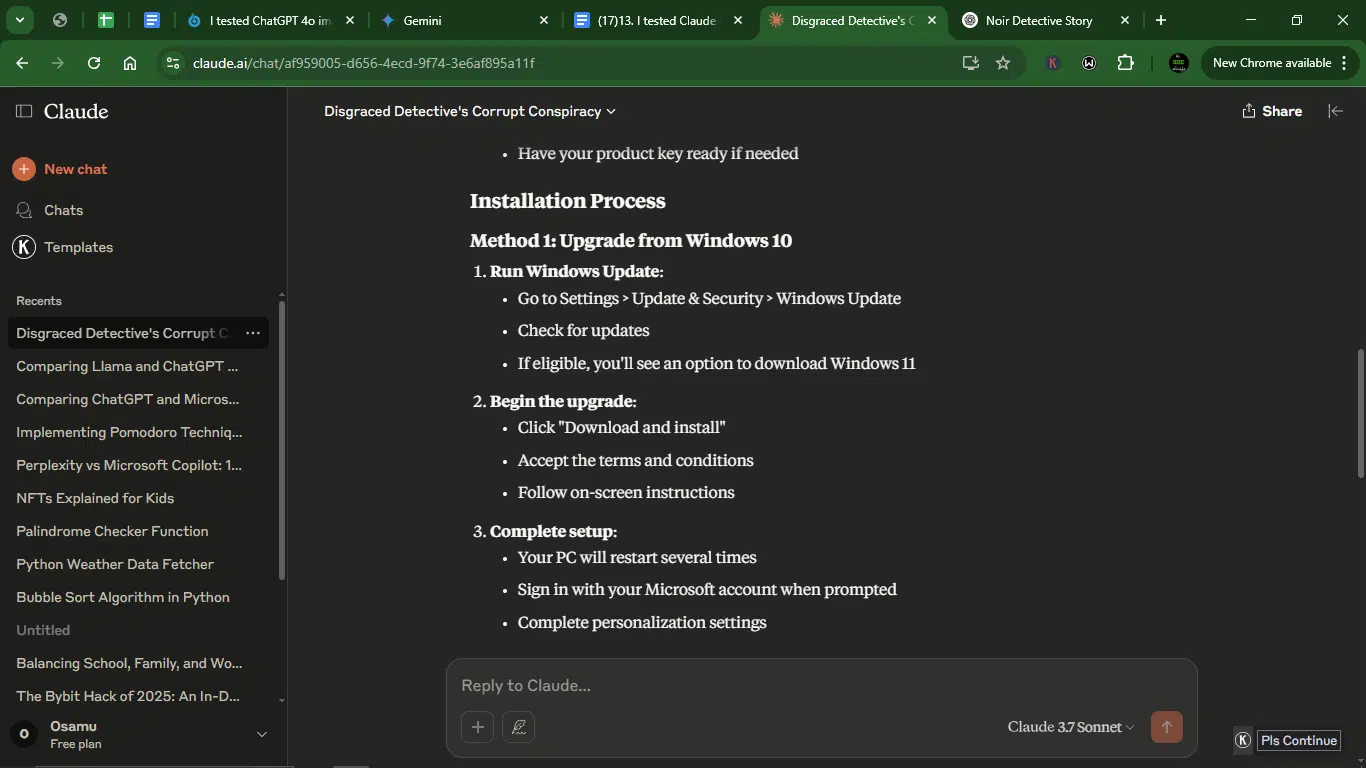

Claude’s response:

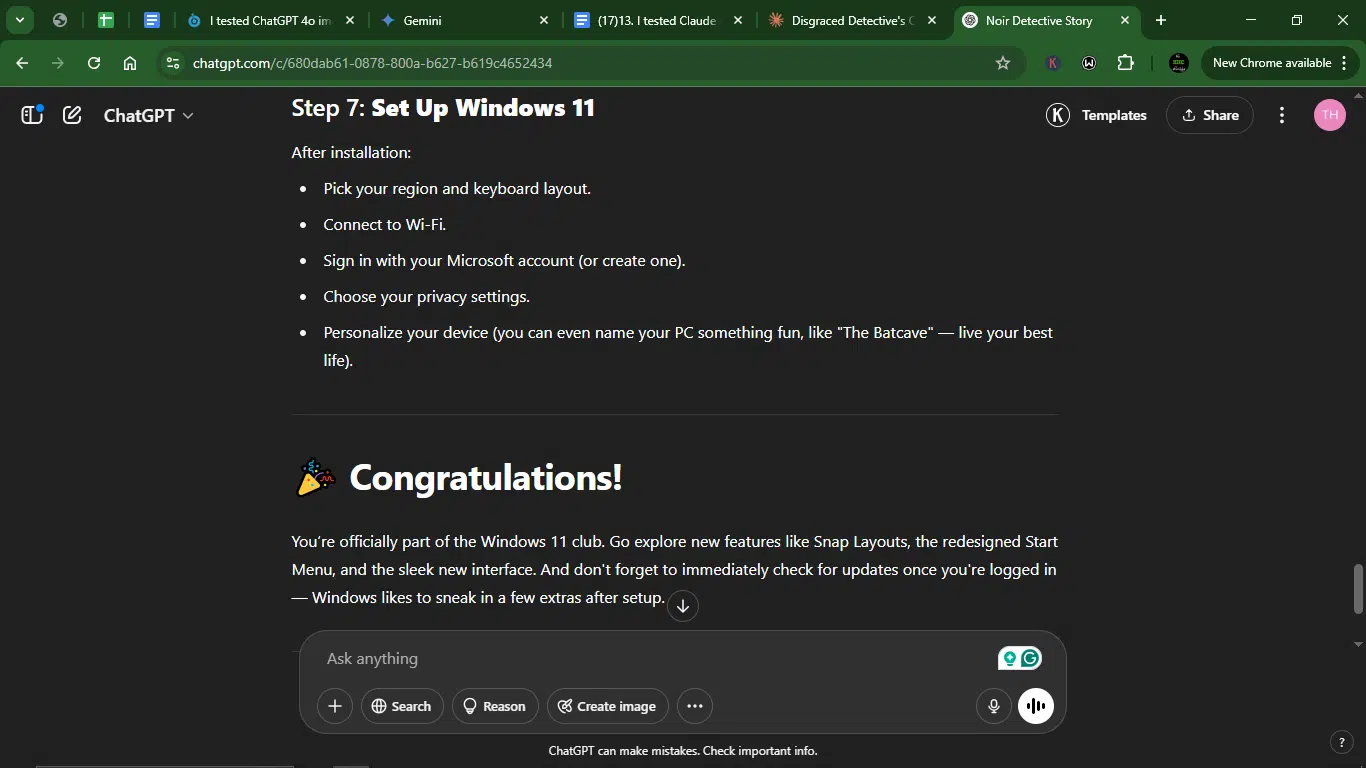

ChatGPT’s response:

Accuracy:

- Claude: Provides a comprehensive and technically accurate step-by-step guide. It correctly lists the prerequisites, outlines both upgrade and clean install methods, and includes essential post-installation steps and troubleshooting tips. While it mentions downloading from Microsoft’s website, it doesn’t provide direct links to the necessary tools (PC Health Check Tool, Media Creation Tool).

- ChatGPT: Also offers an accurate and easy-to-follow guide, covering the key stages of the installation process, from checking requirements to post-setup actions. It correctly identifies the two main installation methods.

Creativity:

- Claude: Presents the information in a straightforward, instructional format, which is appropriate for a technical guide. There isn’t much room for creative flair in this type of content. The tone is purely informational and lacks the encouraging and friendly aspect that could make it more approachable for novice users.

- ChatGPT: Infuses the guide with a friendly and encouraging tone, using analogies (“like making a good cup of coffee”) and engaging language (“tech wizard’s job,” “rocking Windows 11”). The “👉 Quick Tip” and “🎉 Congratulations!” add a touch of personality. ChatGPT does not include a dedicated troubleshooting section for common installation issues.

Clarity:

- Claude: Organizes the information logically with clear headings and bullet points, making it easy to read and follow. The distinction between upgrade and clean install methods is clearly presented.

- ChatGPT: Uses numbered steps and bolded text for key actions, enhancing readability. The inclusion of a “Quick Tip” and a concluding congratulatory message improves clarity by highlighting important advice and signaling completion. While it mentions checking TPM in BIOS, it could have provided slightly more detail on accessing the boot menu and BIOS/UEFI settings, as this can be a stumbling block for some users.

Usability:

- Claude: The guide is highly usable, providing all the necessary information in a structured format. The troubleshooting section is a valuable addition.

- ChatGPT: The friendly tone and clear, concise steps make the guide very user-friendly, especially for less experienced users. The explicit advice to back up files and check for updates is crucial.

Winner: ChatGPT.

Why? While both models provided accurate and usable guides, ChatGPT’s friendly and encouraging tone, combined with clear, numbered steps and helpful tips, makes the installation process seem less daunting for the average user. The analogy and congratulatory message enhance the user experience. Claude’s guide was comprehensive and technically sound, but lacked the same level of user-friendliness.

Prompt 4: Product description

This prompt tests both AIs’ ability to write a concise, persuasive description that sells the product while maintaining a consistent brand tone. My goal was to see if they could highlight key features, appeal to the target audience, and create just enough intrigue to prompt action, without sounding robotic or overhyped.

Prompt: “Draft a 150-word product page for a premium smartwatch targeting athletes. Highlight blood oxygen and ECG monitoring, 14-day battery life, proprietary sleep-tracking AI, rugged titanium design. Differentiate from Apple Watch/Garmin.”

Result:

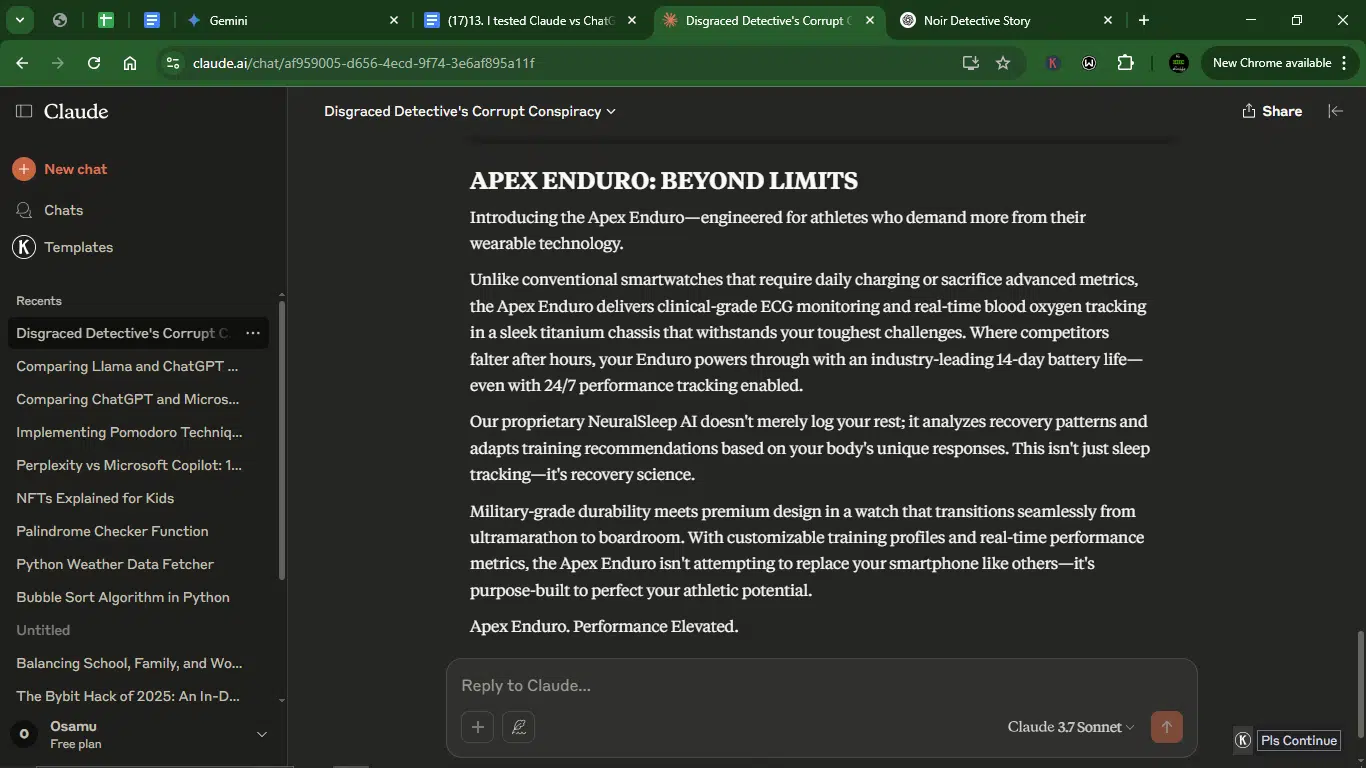

Claude’s response:

ChatGPT’s response:

Accuracy:

- Claude: Accurately highlights all the key features mentioned in the prompt: blood oxygen and ECG monitoring, 14-day battery life, proprietary sleep-tracking AI (named NeuralSleep), and rugged titanium design. It attempts to differentiate the product through those features, but it doesn’t directly name Apple Watch or Garmin for a clear point of comparison.

- ChatGPT: Also accurately includes all the specified features: blood oxygen and ECG monitoring, 14-day battery life, proprietary sleep-tracking AI (named SleepSense), and rugged titanium design. It directly mentions and differentiates from Apple Watch and Garmin.

Creativity:

- Claude: Uses strong, evocative language (“Beyond Limits,” “engineered for athletes who demand more,” “recovery science,” “performance elevated”). It creates a sense of a high-performance, specialized device.

- ChatGPT: Aims for a more direct and impactful tone by employing energetic and action-oriented language (“Built for the Relentless,” “conquer every terrain,” “rest into a weapon,” “won’t quit before you do”).

Clarity:

- Claude: Clearly articulates the benefits of each feature and how they cater to athletes. The differentiation from competitors is implied through highlighting superior battery life and specialized sleep analysis.

- ChatGPT: Clearly states the target audience and the advantages of each feature. It explicitly differentiates by suggesting TitanX Pro performs where Apple Watch and Garmin might fall short in ruggedness and battery life.

Usability:

- Claude: The product page is concise and within the word limit, making it suitable for online display. The call to action is implied through the overall persuasive language.

- ChatGPT: The page is also within the word limit and uses compelling language to attract the target audience. The concluding questions act as a soft call to action.

Winner: ChatGPT

Why? While both models created effective product pages, ChatGPT’s direct mention of Apple Watch and Garmin provides a clearer differentiation, which was part of the prompt. Claude’s page was strong and sophisticated, but the lack of explicit competitor mention was a slight drawback in this specific context.

Prompt 5: Email draft

How well can generative AI write a clear, professional email tailored to a specific purpose, whether it’s a request, follow-up, apology, or announcement? Here, I’m looking for tone appropriateness, structural clarity, and effective communication, all wrapped in a message that feels human and to the point.

Prompt: “Write a 100-word email inviting remote team members to a quarterly planning session. Include a clear agenda (strategy, OKRs, feedback), hybrid details (Zoom + office location), and pre-work (review linked dashboard). Be professional but warm, for a tech startup culture.”

Result:

Claude’s response:

ChatGPT’s response:

Accuracy:

- Claude: Includes all the requested elements: clear agenda (strategy, OKRs, feedback), hybrid details (HQ Innovation Room + Zoom link), pre-work (review linked Q1 Performance Dashboard), professional yet warm tone, and specifies a date and time (assuming the user provided context for “next Friday”). While it mentions the dashboard, it doesn’t use a clear placeholder for the link, assuming it will be embedded in the text or easily found.

- ChatGPT: Also includes all the required elements: clear agenda (strategy updates, OKRs, feedback), hybrid details (Office Location + Zoom link), pre-work (review linked updated dashboard), professional but warm tone, and placeholders for date, time, and links.

Creativity:

- Claude: Uses a straightforward and efficient approach while maintaining a warm tone (“your voice matters,” “chart our next chapter together”). The agenda is clearly laid out.

- ChatGPT: Employs emojis (🌟) and more enthusiastic language (“kicking off,” “keep leveling up,” “hit the ground running,” “build something amazing together”) to foster a tech startup vibe.

Clarity:

- Claude: The email is concise and easy to understand. The agenda and logistics are separated for better readability.

- ChatGPT: The email is also clear and uses bullet points for easy scanning of key information. The placeholders make it obvious where specific details need to be inserted.

Usability:

- Claude: The email is ready to be used with minimal edits, assuming the date and time are correct based on the user’s context.

- ChatGPT: The email requires the user to fill in the bracketed placeholders for the date, time, office location, and Zoom/dashboard links before sending.

Winner: Claude.

Why? Both models included all the requested information and maintained a professional yet warm tone suitable for a tech startup, but Claude provided a more immediately usable email by filling in a specific date and time (“next Friday”) and laying out the agenda (with duration) and logistics without requiring the user to fill in placeholders. ChatGPT’s use of placeholders makes it slightly less ready to send as-is.

Prompt 6: Press release

This prompt was aimed at testing Claude and ChatGPT’s ability to craft formal, corporate-style content that is clear, concise, and newsworthy. I wanted to see whether they could adopt the right tone of professionalism, structure information in a journalist-friendly format, and highlight key messages that resonate with stakeholders and media outlets alike.

Prompt: “Announce a new SaaS product ‘FlowForge’ (no-code automation tool) with two customer testimonials (fake but plausible), Differentiators from Zapier/Make, Pricing tiers ($29-299/mo), launch webinar details. Format is AP style, with boilerplate and media contact. Max 300 words.”

Result:

Claude’s response:

ChatGPT’s response:

Accuracy:

- Claude: Adheres to AP style conventions for dateline and “For Immediate Release.” Includes all requested information: product name, description, two plausible fake testimonials with attributed roles and companies, differentiators from Zapier/Make (Adaptive Logic, higher operation capacity), pricing tiers, launch webinar details (date, time, call to action for registration), boilerplate, and media contact information. One caveat: the dateline says “SEATTLE,” a location the prompt never specified, so it would need to be checked before publishing.

- ChatGPT: Also follows AP style. Contains all the required elements: product name, description, two plausible fake testimonials with attributed roles and companies, differentiators from Zapier and Make (deeper customization, greater scalability), pricing tiers, launch webinar details (date, time, call to action placeholder), boilerplate, and media contact information. The boilerplate is a bit more generic compared to Claude’s inclusion of the founding year and seed funding.

Creativity:

- Claude: Presents a professional and informative tone, highlighting specific technical differentiators and quantifiable benefits in the testimonials. The “Adaptive Logic” concept is a creative differentiator.

- ChatGPT: Uses slightly more evocative language (“built for speed and scale,” “duct tape vs. custom-built engine”) while maintaining a professional tone. The differentiators are framed in terms of user benefit (faster, more scalable).

Clarity:

- Claude: The press release is well-organized with clear paragraphs for each key piece of information. The pricing tiers are easy to understand.

- ChatGPT: The information is presented logically and concisely. The testimonials are integrated smoothly into the narrative.

Usability:

- Claude: The press release is ready for distribution with complete information, including a specific webinar date and time and a call to action with a plausible URL structure.

- ChatGPT: The press release requires the user to insert the registration link for the webinar. After that, it’s good to go.

Winner: Claude.

Why? It provided a more complete and immediately usable press release by including a specific date and time for the webinar and a plausible URL structure for registration. Additionally, its boilerplate included more specific details about the company’s founding and funding. While ChatGPT’s language was engaging, the missing webinar link and less detailed boilerplate make it slightly less ready for immediate use.

Prompt 7: Social media post

This prompt tests both AIs’ ability to be creative, punchy, and engaging within a tight word or character limit. Can they capture attention quickly, maintain brand voice, and encourage interaction, all while staying brief, relevant, and scroll-stopping?

Prompt: “Create 3 Instagram captions (under 50 words each) for a 24-hour 30%-off sale at an eco-friendly shoe brand. Add urgency triggers (e.g., ‘Almost gone!’) and one UGC-style testimonial.”

Result:

Claude’s response:

ChatGPT’s response:

Accuracy:

- Claude: Provides three distinct captions, all under 50 words. Each clearly states the 30% off sale duration (24 hours) and includes urgency triggers (“Almost Gone,” “Last Chance,” “before time runs out”). One caption features a UGC-style testimonial with a username.

- ChatGPT: Also delivers three captions, all within the word limit. Each mentions the 24-hour sale and incorporates urgency triggers (“Almost gone,” “before it’s too late,” “Ends in 24 hours,” “flying off the shelves,” “Sale ends tomorrow — or when they’re gone!”). One caption includes a UGC-style testimonial with a username.

Creativity:

- Claude: Uses a mix of direct calls to action and benefit-driven language (“sustainable style fix,” “save the planet—they’ll save your wallet too”). The UGC testimonial feels plausible. Could have incorporated more emojis to enhance visual appeal on the platform.

- ChatGPT: Employs emojis to add visual appeal and a slightly more enthusiastic tone. The UGC testimonial uses relatable language (“Obsessed”). The urgency triggers are varied and effective.

Clarity:

- Claude: The sale details (30% off, 24 hours) are clearly stated in each caption. The hashtags are relevant to the brand and sales.

- ChatGPT: The discount and time limit are also clearly communicated. The hashtags are relevant and aim to increase visibility.

Usability:

- Claude: The captions are ready to be posted with minimal editing. The inclusion of a discount code in the first caption is a practical touch.

- ChatGPT: The captions are also immediately usable. The use of emojis can enhance engagement on Instagram.

Winner: ChatGPT

Why? Its captions effectively use emojis to increase visual engagement and employ a slightly more enthusiastic tone that might resonate well on Instagram. The urgency triggers are also varied and compelling. While Claude’s captions were clear and included a discount code in one instance, ChatGPT’s overall approach feels more tailored to the platform’s style and potential for engagement.

Prompt 8: Editorial

Claude and ChatGPT might be all the rage right now, but can they write a provocative, opinion-based editorial that still leans on data, insight, and structured logic? Think Forbes, The Economist, or Harvard Business Review.

Prompt: “Write a 500-word op-ed arguing ‘Electric Vehicles Alone Won’t Save the Planet.’ Cover Grid sustainability challenges, Rare mineral ethics, the role of public transit/policy, and one to two counterarguments rebutted.”

Result:

Claude’s response:

ChatGPT’s response:

Accuracy:

- Claude: Accurately covers all the required points: grid sustainability challenges, rare mineral ethics, the role of public transit/policy, and rebuts two counterarguments (practicality of car dependency, future technological improvements). The opening is effective, but it could have been slightly more attention-grabbing to immediately draw the reader in.

- ChatGPT: Also addresses all the specified areas: grid sustainability, rare mineral ethics, the need for public transit/policy, and rebuts two counterarguments (EVs as a necessary first step, EVs being better than combustion engines). While the counterarguments are addressed, Claude’s rebuttals felt slightly more specific and detailed. For example, Claude addresses the “future tech will fix it” argument more directly.

Creativity:

- Claude: Presents a well-structured and reasoned argument with a clear thesis. The language is sophisticated and persuasive (“aerodynamic shoulders,” “inconvenient truth,” “shifting the tailpipe elsewhere”).

- ChatGPT: Uses strong opening and closing statements to engage the reader. The language is direct and impactful (“buying our way out,” “false sense of progress,” “ethical dilemma”). The analogies (“greenwashing,” “trading oil wars for mineral exploitation”) are effective.

Clarity:

- Claude: Clearly explains each challenge and the limitations of relying solely on EVs. The rebuttals to counterarguments are well-articulated.

- ChatGPT: Presents the arguments in a straightforward and easy-to-understand manner. The need for systemic change is emphasized throughout.

Usability:

- Claude: The op-ed is ready for publication with a clear and compelling argument. The length is appropriate for the format.

- ChatGPT: The op-ed is also well-written and suitable for publication. The conclusion leaves the reader with a thought-provoking question.

Winner: Claude

Why? Both models provided strong and well-reasoned op-eds. However, Claude’s arguments felt slightly more developed, and its rebuttals to the counterarguments were more specific and persuasive. The overall structure and sophisticated language also gave Claude a slight edge in presenting a compelling case.

Prompt 9: Service FAQ

This prompt tests the AI’s ability to write clear, concise, and helpful responses to frequently asked questions. I want to see how well it can anticipate user confusion, explain concepts in plain language, and provide quick, actionable answers, while maintaining an organized and approachable tone.

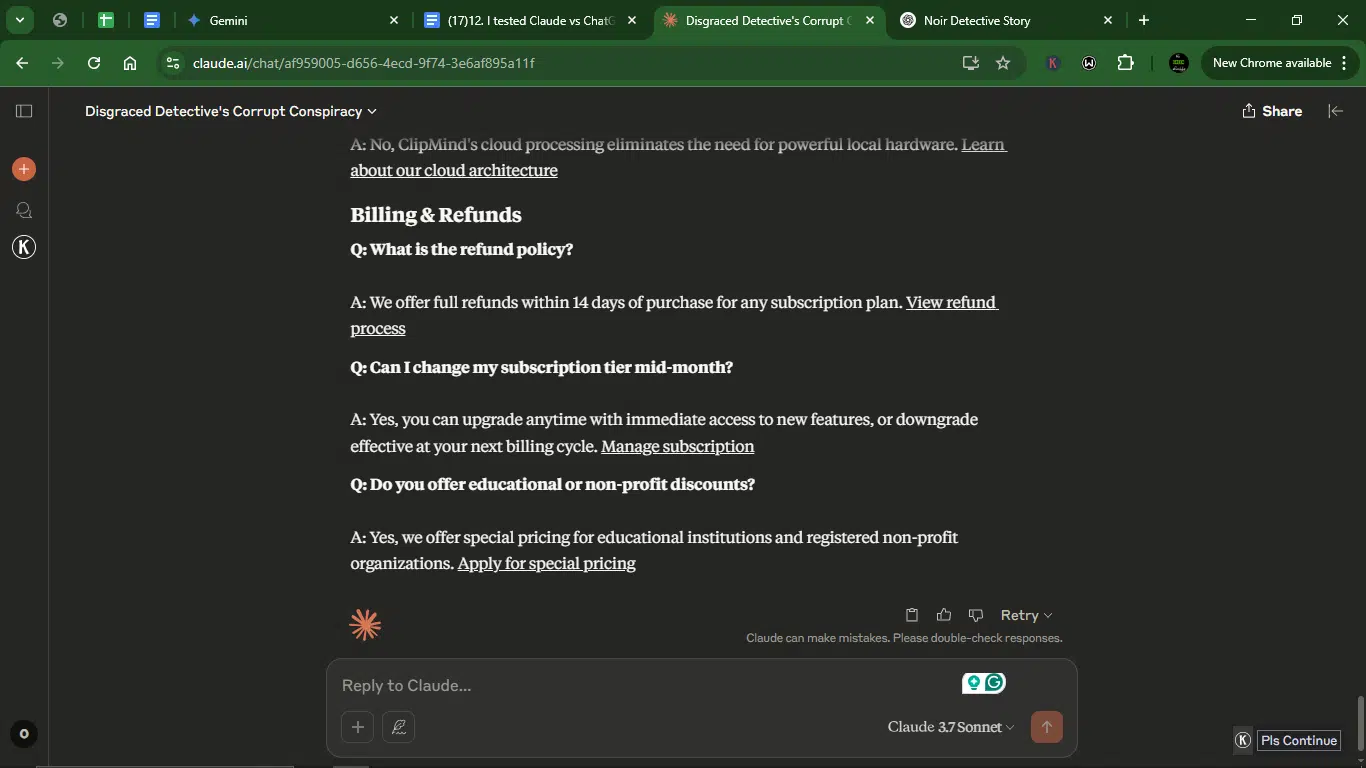

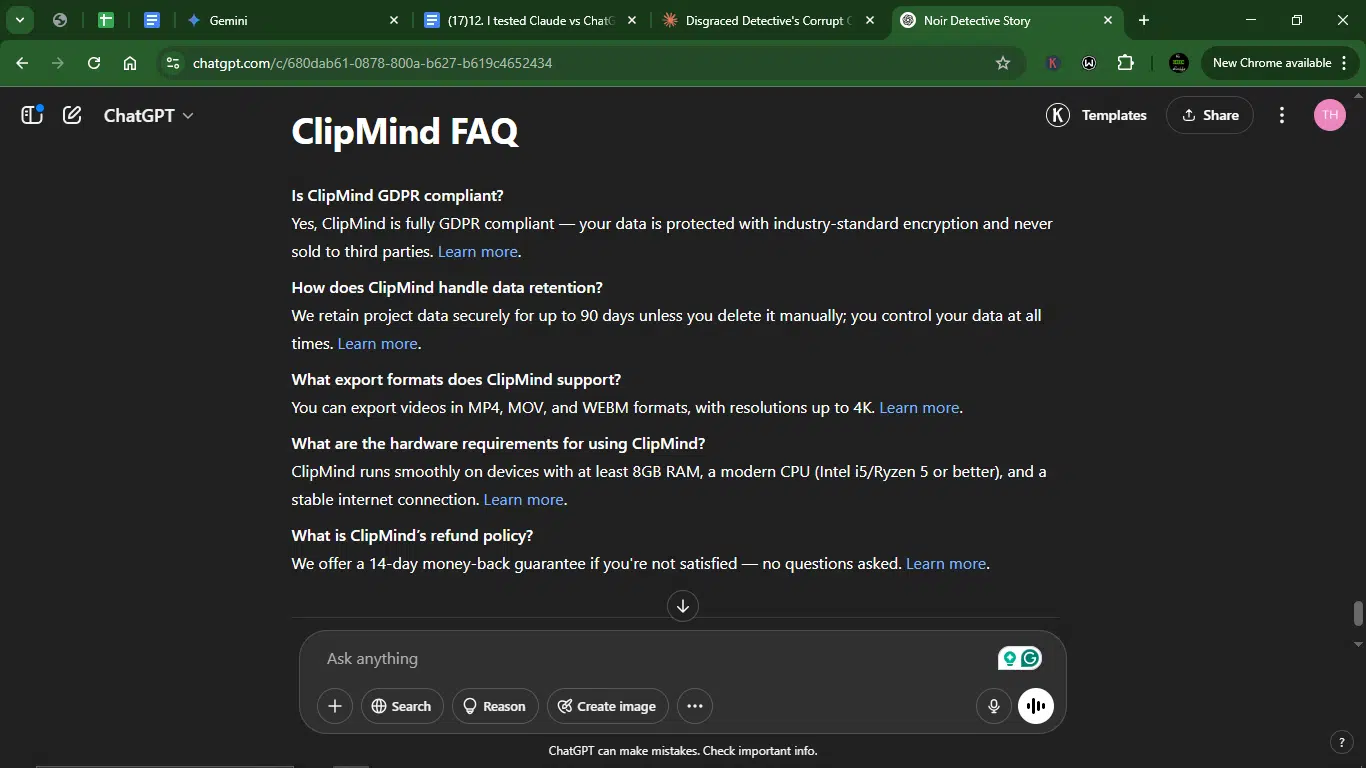

Prompt: “Draft an FAQ for ‘ClipMind’ (AI video editing tool). Address privacy/data retention (GDPR compliance), export format options, hardware requirements, and refund policy. It should be a concise Q&A with one-sentence answers and ‘learn more’ links.”

Result:

Claude’s response:

ChatGPT’s response:

Accuracy:

- Claude: Accurately addresses all the requested topics: privacy/data retention (including GDPR), export format options, hardware requirements, and refund policy. The answers are concise and provide additional relevant details.

- ChatGPT: Also accurately addresses all the required topics with one-sentence answers. Those answers are so brief, though, that they potentially lack some helpful details that Claude fit into its one-sentence structure (e.g., specific export resolutions, mobile app availability).

Creativity:

- Claude: Organizes the FAQ into logical categories (Privacy & Data, Export Options, Hardware Requirements, Billing & Refunds), which enhances readability and user experience. The additional details within the one-sentence answers add value.

- ChatGPT: Presents the FAQ as a straightforward list of questions and answers. The lack of categories might make it slightly less user-friendly for quickly finding specific information.

Clarity:

- Claude: The questions are clear, and the one-sentence answers are easy to understand. The “learn more” links are implied by the bolded phrases at the end of each answer.

- ChatGPT: The questions are clear, and the one-sentence answers are direct and to the point. The “Learn more” is explicitly stated at the end of each answer.

Usability:

- Claude: The categorized format makes it easy for users to find information relevant to their needs. The additional details within the concise answers are helpful.

- ChatGPT: The simple Q&A format is straightforward and easy to navigate.

Winner: Claude

Why? While both models provided accurate and concise answers, Claude’s categorized format improves usability by allowing users to quickly find related information. Additionally, Claude managed to include more valuable details within its one-sentence answers, providing a slightly more comprehensive overview while still adhering to the conciseness requirement. The implied “learn more” links are understandable, even if not explicitly stated.

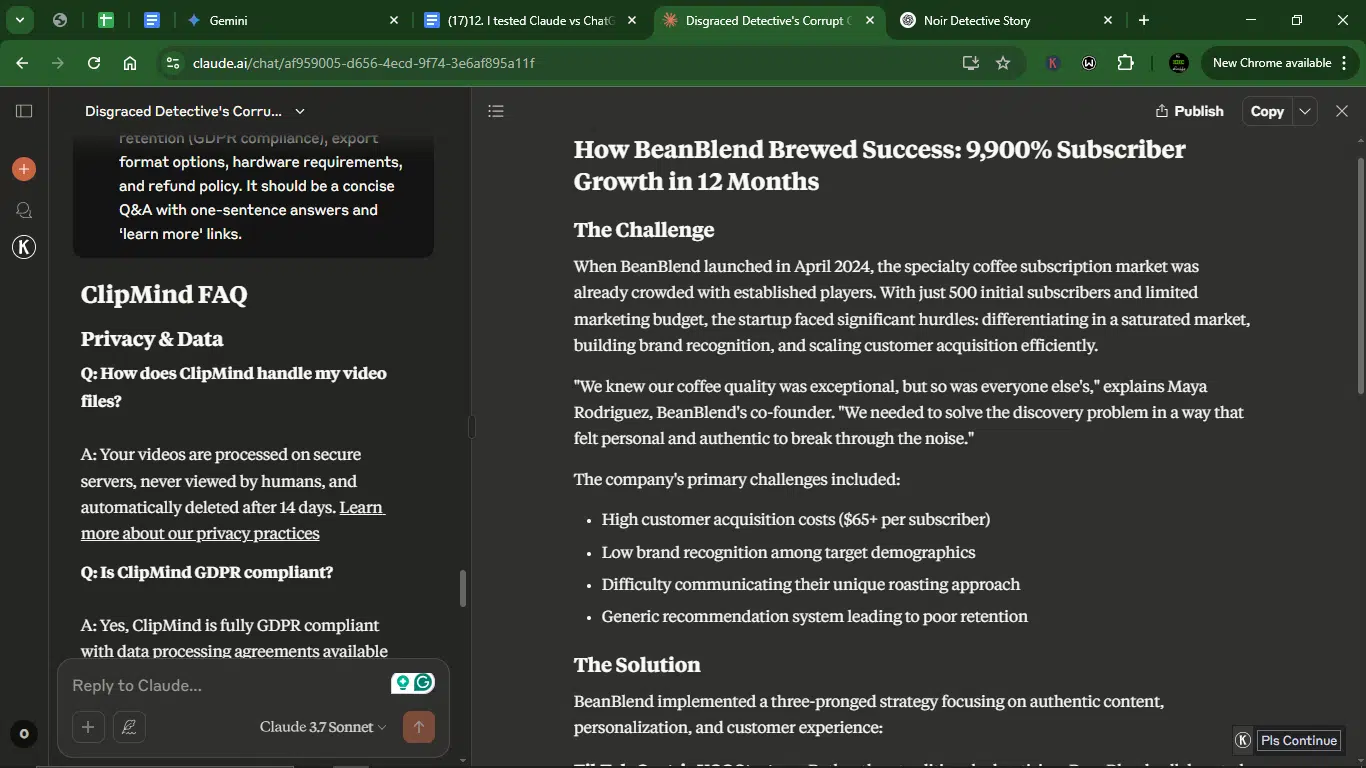

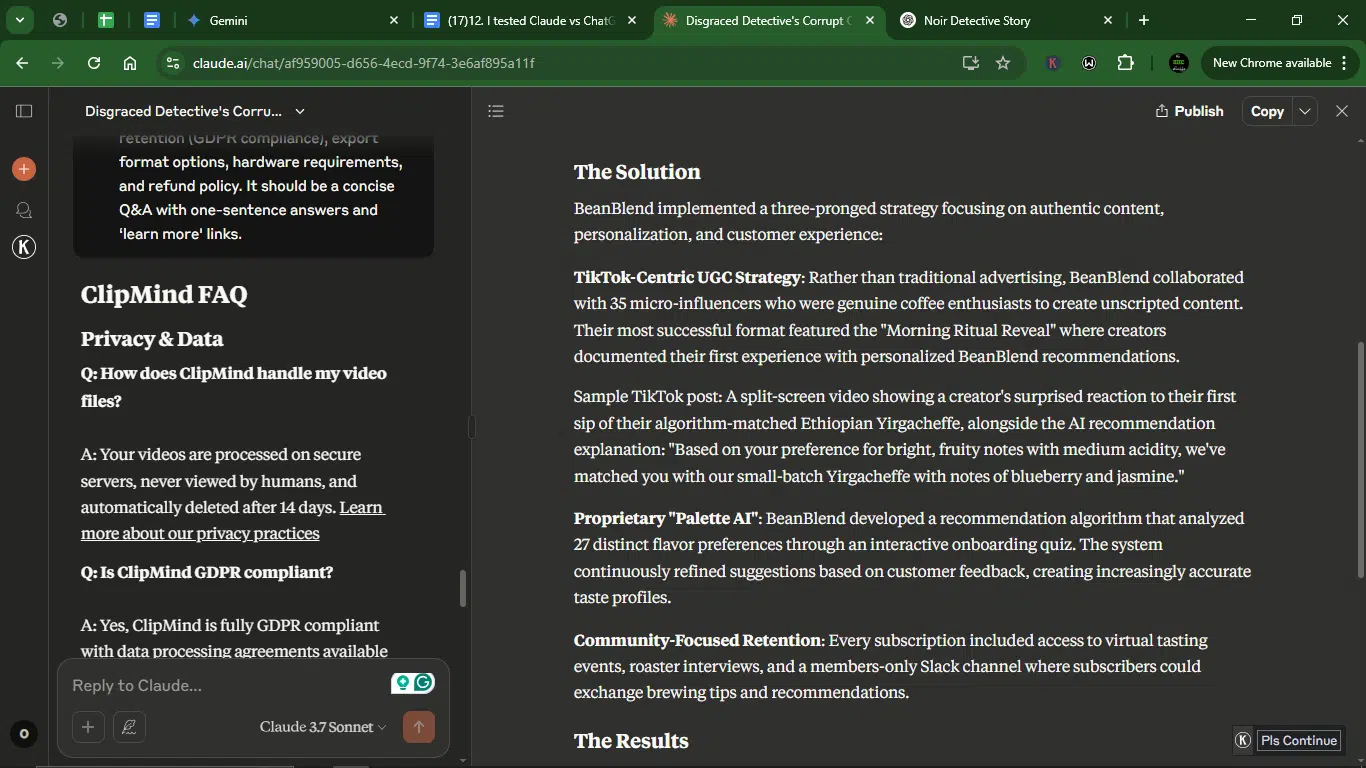

Prompt 10: Marketing case study

This prompt tests the ability of both AI models to write a well-structured, professional case study that clearly outlines a problem, solution, and measurable results. I’m looking for clarity in storytelling, logical flow, and a tone that speaks to business stakeholders, showing not just what was done, but why it mattered and what others can learn from it.

Prompt: “Create a 400-word case study for ‘BeanBlend’ (a coffee subscription) detailing how they:

- Grew from 500 to 50K subscribers in 1 year.

- Used TikTok UGC campaigns (+ sample post).

- Leveraged AI for personalized roast recommendations.

Format should follow “Challenge — Solution — Results (with % increases). Include 2 pull quotes.”

Result:

Claude’s response:

ChatGPT’s response:

Accuracy:

- Claude: Accurately details the initial challenge, the two key solutions (TikTok UGC and AI personalization), and presents results with percentage increases. It includes two relevant pull quotes attributed to company roles. The timeline (April 2024 launch) adds context.

- ChatGPT: Also accurately covers the challenge, solutions (TikTok UGC and AI personalization), and provides results with percentage increases. It includes two fitting pull quotes with attributed roles. The early 2024 launch provides a similar timeframe. The sample post is very brief and lacks the specific element of showcasing the AI recommendation, which was a key part of the solution.

Creativity:

- Claude: Provides a clear and well-structured narrative, effectively explaining the strategies and their impact. The names for the AI (“Palette AI”) and the UGC campaign (“Morning Ritual Reveal”) add a touch of branding. The inclusion of specific metrics like customer acquisition cost and retention rate provides a deeper analysis.

- ChatGPT: Presents a more concise and punchy narrative, using evocative language (“brewed success,” “rocket-fueled growth”). The sample TikTok post is brief but effective.

Clarity:

- Claude: The case study is well-organized with clear headings implied by the “Challenge — Solution — Results” structure. The explanations of each strategy are easy to follow.

- ChatGPT: The structure is evident, and the language is straightforward and accessible. The impact of each strategy is clearly articulated.

Usability:

- Claude: The case study is ready for use, providing a comprehensive overview of BeanBlend’s growth. The detailed metrics and attributed quotes enhance its credibility.

- ChatGPT: The case study is also highly usable, offering a compelling and concise account of BeanBlend’s success. The impactful language makes it engaging.

Winner: Claude.

Why? Both models effectively addressed the prompt’s requirements, but Claude provided a more detailed and comprehensive case study. The inclusion of specific metrics like customer acquisition cost and retention rate, along with the well-integrated and attributed pull quotes, makes its analysis more robust. Additionally, its sample TikTok post better illustrated the connection between UGC and the AI recommendation aspect of the solution.

Overall performance comparison: ChatGPT vs Claude (10-prompt test)

| Prompt | Winner | Reason |
| --- | --- | --- |
| Creative short story | Tie | Both models effectively fulfilled all the prompt’s requirements, showcasing strong storytelling and stylistic flair. Claude had a more direct thematic tie-in, while ChatGPT offered a more unexpected twist. |
| Blog post | ChatGPT | More engaging and forward-thinking tone, combined with a clearer attempt to quantify adoption rates, made it slightly more effective for a broader blog audience. |
| Technical guide | ChatGPT | Friendly and encouraging tone, clear numbered steps, and helpful tips made the installation process seem less daunting for the average user. |
| Product description | ChatGPT | Direct mention of competitors (Apple Watch and Garmin) provided a clearer differentiation as requested. |
| Email drafting | Claude | Provided a more immediately usable email by including a specific date and time and laying out the agenda and logistics. |
| Press release | Claude | Delivered a more complete and immediately usable press release, including a more detailed boilerplate. |
| Social media post | ChatGPT | Effective use of emojis for visual engagement and a more enthusiastic tone felt better suited for the Instagram platform. |
| Editorial | Claude | Arguments were more developed, and its rebuttals to counterarguments were more specific and persuasive. |
| FAQ | Claude | Categorized format improved usability, and it included more valuable details within its concise answers. |
| Case study | Claude | Delivered a more detailed and comprehensive case study with specific metrics. |

Final score: Claude 5, ChatGPT 4, Tie 1.
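If you want to double-check the tally, here is a minimal Python sketch that counts the Winner column. The `results` dictionary is typed in by hand from the table above; the variable names are my own and are only for illustration.

```python
from collections import Counter

# Winner per prompt, copied by hand from the comparison table above.
results = {
    "Creative short story": "Tie",
    "Blog post": "ChatGPT",
    "Technical guide": "ChatGPT",
    "Product description": "ChatGPT",
    "Email drafting": "Claude",
    "Press release": "Claude",
    "Social media post": "ChatGPT",
    "Editorial": "Claude",
    "FAQ": "Claude",
    "Case study": "Claude",
}

# Count how many prompts each outcome accounts for.
tally = Counter(results.values())
print(f"Claude {tally['Claude']}, ChatGPT {tally['ChatGPT']}, Tie {tally['Tie']}")
# prints: Claude 5, ChatGPT 4, Tie 1
```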

My recommendation:

The choice between Claude and ChatGPT isn’t a matter of one being definitively “better” than the other. Instead, their strengths lie in different areas.

- For tasks requiring in-depth analysis, adherence to specific formats (like press releases and case studies), and a more formal or nuanced tone, Claude appears to be the slightly more reliable choice.

- ChatGPT demonstrates a stronger aptitude for content aimed at broader audiences, where engagement, creativity, and a user-friendly tone are paramount (like blog posts, social media, and guides).

- Ultimately, the “better” AI depends on the specific writing task at hand and the desired outcome. Both are powerful tools, and understanding their respective strengths can lead to more effective content creation.

Pricing for comparison

Claude pricing

| Plan | Features | Cost |
| --- | --- | --- |
| Free | Access to the latest Claude model, use on web, iOS, and Android, ask about images and documents | $0/month |
| Pro | Everything in Free, plus more usage, organized chats and documents with Projects, access to additional Claude models (Claude 3.7 Sonnet), and early access to new features | $18/month (yearly) or $20/month (monthly) |
| Team | Everything in Pro, plus more usage, centralized billing, early access to collaboration features, and a minimum of five users | $25/user/month (yearly) or $30/user/month (monthly) |
| Enterprise | Everything in Team, plus expanded context window, SSO, domain capture, role-based access, fine-grained permissioning, SCIM for cross-domain identity management, and audit logs | Custom pricing |

ChatGPT pricing

| Plan | Features | Cost |
| --- | --- | --- |
| Free | Access to GPT-4o mini, real-time web search, limited access to GPT-4o and o3-mini, limited file uploads, data analysis, image generation, voice mode, Custom GPTs | $0/month |
| Plus | Everything in Free, plus extended messaging limits, advanced file uploads, data analysis, image generation, voice modes (video/screen sharing), access to o3-mini, custom GPT creation | $20/month |
| Pro | Everything in Plus, plus unlimited access to reasoning models (including GPT-4o), advanced voice features, research previews, high-performance tasks, access to Sora video generation, and Operator (U.S. only) | $200/month |
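To see what the individual paid plans add up to over a year, here is a rough Python sketch. It uses only the monthly prices listed in the tables above and assumes that "$18/month (yearly)" means a yearly plan charged at the equivalent of $18 per month. Prices change, so treat the figures as a snapshot rather than a quote.

```python
MONTHS = 12

# Monthly prices in USD, copied from the pricing tables above.
# Assumption: the "yearly" Claude Pro rate is the per-month equivalent
# of an annual plan.
plans = {
    "Claude Pro (billed yearly)": 18,
    "Claude Pro (billed monthly)": 20,
    "ChatGPT Plus": 20,
    "ChatGPT Pro": 200,
}

# Twelve months at each plan's monthly price.
annual = {name: price * MONTHS for name, price in plans.items()}
for name, cost in annual.items():
    print(f"{name}: ${cost}/year")

# Difference between monthly and yearly billing on Claude Pro.
saving = annual["Claude Pro (billed monthly)"] - annual["Claude Pro (billed yearly)"]
print(f"Yearly billing saves ${saving} on Claude Pro")
```

On these numbers, Claude Pro on yearly billing comes to $216 a year against $240 for ChatGPT Plus, a $24 difference.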

How do AI tools like Claude and ChatGPT benefit writers?

Here’s why these AI tools matter for writers:

- Speed and efficiency: Both tools can help you create content faster, freeing up time for other tasks. They can also catch grammar, syntax, and spelling mistakes that a human writer might miss.

- Scalability: Writers can produce much more content in less time, making these tools ideal for content-heavy industries.

- Consistency: They help maintain a consistent tone and style across multiple pieces of content.

- Creativity boost: When stuck, these tools can offer new perspectives, ideas, or ways to approach a topic.

- Factual accuracy: AI tools, especially ChatGPT with its real-time web search, can help you check facts against current sources, though you should still verify what they return.

- Customization: Some AI models, like Claude, can tailor writing to specific audiences or brand voices, making them versatile for marketers.

- Collaboration: They work alongside writers, improving drafts without replacing them.

- Accessibility: They make writing more accessible to non-experts, reducing barriers to content creation.

What are some challenges with these AI tools?

- Creativity limits: While AI can produce creative work, it still struggles with truly original ideas and nuances in storytelling.

- Over-reliance on AI: Writers might become too dependent on these tools, which could stunt personal development.

- Quality control: Even the best AI tools still require human oversight to ensure high-quality output.

- Bias: AI models are trained on large datasets, and sometimes this can lead to biased or inaccurate information in the output.

- Price: While these tools are affordable compared to hiring writers, they still come with a subscription cost to access premium features, which may not fit every budget.

- Lack of emotional intelligence: AI can mimic human conversation, but it can’t truly understand or evoke emotions like a human can.

Best practices: How writers can make the most of these tools

- Provide clear prompts: The more specific you are in your prompts, the better the output you’ll receive. Be as detailed as possible when asking for help.

- Use AI for brainstorming: Use AI to help you come up with topic ideas, outlines, and structures to kickstart your writing process.

- Use AI as a co-writer: Treat AI-generated content as a base rather than publishing it as-is, then edit and refine it to match your voice, tone, and style.

- Maintain human oversight: Always review AI-generated content for accuracy, relevance, and quality.

Conclusion

From my test, Claude beat ChatGPT by one point: Claude won 5 of the 10 prompts, ChatGPT won 4, and 1 was a tie.

That said, in the battle of AI writing assistants, there’s no universal winner, only the right tool for your specific need. Claude proves indispensable for analysts and corporate writers who prize accuracy and structure, while ChatGPT remains the go-to for marketers and creators seeking sparkle and speed.

As AI evolves, the smartest strategy isn’t choosing sides, but mastering both: leveraging Claude’s analytical rigor to build strong foundations, then applying ChatGPT’s flair to make content shine. Remember, these tools amplify human creativity; they don’t replace it.

The future belongs to writers who can harness their complementary strengths while maintaining their unique voice.

FAQs about Claude and ChatGPT

Can AI replace human writers?

While AI tools can assist in the writing process, they cannot fully replace the creativity and emotional intelligence of human writers.

Which AI tool is better for marketing copy?

ChatGPT is better for marketing content aimed at broader audiences, where engagement, creativity, and a user-friendly tone are paramount (like blog posts, social media, and guides).

Is ChatGPT better for technical writing?

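Not overall. Claude was stronger on structured, format-driven tasks (like press releases, case studies, and FAQs) that require in-depth analysis and a more formal or nuanced tone. ChatGPT did win the technical guide prompt in my test, though, thanks to its friendly tone and clear numbered steps.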

Are Claude and ChatGPT free?

Both Claude and ChatGPT offer free versions, but their full capabilities are unlocked with premium plans.

Can these tools write in multiple languages?

Yes, both Claude and ChatGPT support multiple languages, making them versatile for international content creation.

Disclaimer!

This publication, review, or article (“Content”) is based on our independent evaluation and is subjective, reflecting our opinions, which may differ from others’ perspectives or experiences. We do not guarantee the accuracy or completeness of the Content and disclaim responsibility for any errors or omissions it may contain.

The information provided is not investment advice and should not be treated as such, as products or services may change after publication. By engaging with our Content, you acknowledge its subjective nature and agree not to hold us liable for any losses or damages arising from your reliance on the information provided.

Always conduct your own research and consult professionals where necessary.