Key takeaways:

- DeepSeek and ChatGPT can handle a wide range of tasks, but they perform differently depending on the scenario. One isn’t always better than the other; it depends on your needs.

- DeepSeek often goes deeper in explanations, especially in tasks like solving math problems or offering detailed startup ideas.

- ChatGPT tends to format content more neatly, especially in learning modules, summaries, and news articles. It’s better suited for users who want clarity and a clean layout.

- When asked to chat like a friend, ChatGPT delivered a more engaging conversation. DeepSeek’s responses were overwhelming.

- ChatGPT can actually generate images (like book covers), while DeepSeek only provides a detailed text description or external links.

- Unlike ChatGPT, which has both free and paid plans, DeepSeek currently offers all features for free.

If you already use ChatGPT, DeepSeek, or any other AI tool, you’ve probably seen what they can do, or at least you know someone who has. But this isn’t just about what these AIs can do. It’s about which one actually does it better, or even best.

Both ChatGPT and DeepSeek have generated a lot of hype and recommendations. When DeepSeek launched, people claimed it offered deeper reasoning and handled certain tasks better than other AIs. As for ChatGPT, OpenAI has rolled out several models since its launch. Just the other day, I saw that OpenAI even wants you to shop directly through ChatGPT.

DeepSeek and ChatGPT aren’t the only AIs out there. But to help you shake off the curiosity that there might be a better tool, I’ll cut through the hype in this article and focus on comparing just these two. That should be especially useful if you’re thinking about switching from one to the other, or combining both to get better results.

In the end, you just want the tool that gets the job done best.

This article documents my hands-on experience using DeepSeek vs. ChatGPT for a variety of tasks. I created 10 real-world prompts covering different scenarios, fed them to both AIs, and recorded my honest results. By the end, you’ll see how each one handles practical tasks, so you can decide for yourself based on performance, not just hype.

DeepSeek vs. ChatGPT: An overview

There’s a lot to know about DeepSeek, but let me give you a quick overview. DeepSeek is an open-source large language model (LLM) developed in China that competes with other well-known AIs, including ChatGPT.

DeepSeek gets a lot of attention for its advanced reasoning capabilities. One of its latest models is DeepSeek-R1. You can use DeepSeek to search for information, analyze data, and handle tasks that require critical thinking. You can write text prompts and also upload files.

Unlike many other AIs that have paid subscriptions, DeepSeek is currently free and doesn’t charge for access to any of its features. While it hasn’t introduced any paid plans yet, future premium options might include priority access during peak times, advanced text and data processing, and faster support.

On the other hand, OpenAI’s ChatGPT runs on the Generative Pre-trained Transformer (GPT) models, including GPT-3 and GPT-4.

Both DeepSeek and ChatGPT give you a similar interface to enter prompts and interact with the models.

Unlike DeepSeek, ChatGPT offers paid options alongside its free plan. You can subscribe to the Plus plan for $20 per month or the Pro plan for $200 per month. If you’re working with a team, the Business plan is $25 per user per month.

My testing methodology

There are many ways to compare how well each AI performs, but I decided to feed them different prompts across various use cases to see how they respond. People use AI for all sorts of tasks, but some come up almost every day, like asking the AI to explain a term or help you understand something better. Your use of AI might also depend on your profession or skill level.

To keep things practical, I listed several common use cases and selected ten that represent everyday scenarios. I then wrote a unique prompt for each use case, asking the AI to generate a response.

After that, I evaluated the results based on three key criteria:

- Quality of the output

- Ability to understand and follow the prompt

- Accuracy

I tested the web versions of both tools, using ChatGPT’s free plan and DeepSeek’s free access. Here are the ten use cases I tested:

- Math problems

- Summary

- Learning companion

- News update

- Idea generation

- Coding problems

- Storytelling

- Fact-checking

- Conversation

- Image generation

Testing DeepSeek vs. ChatGPT with 10 real-world prompts

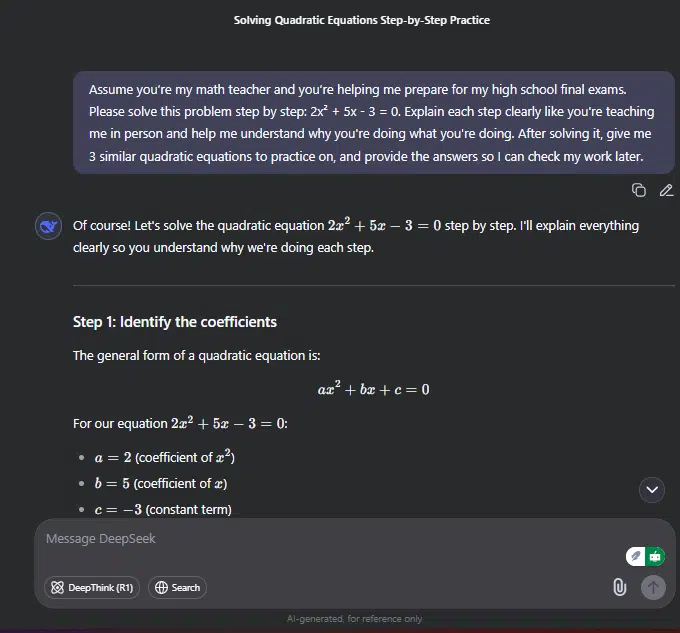

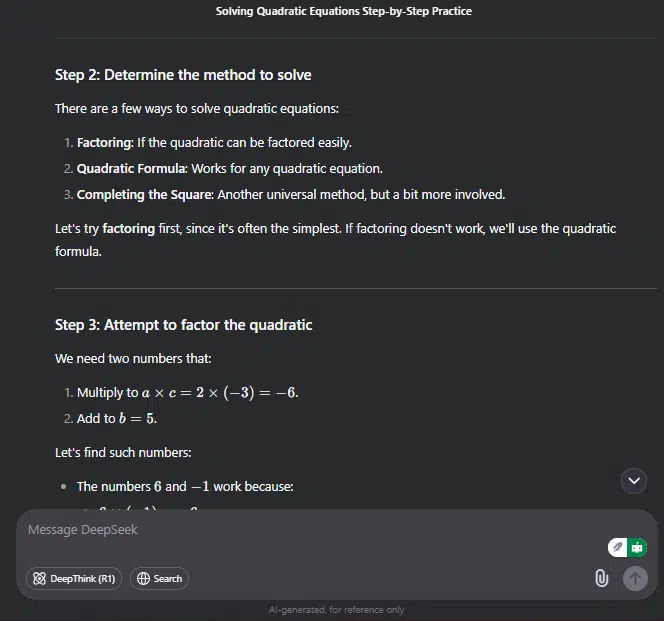

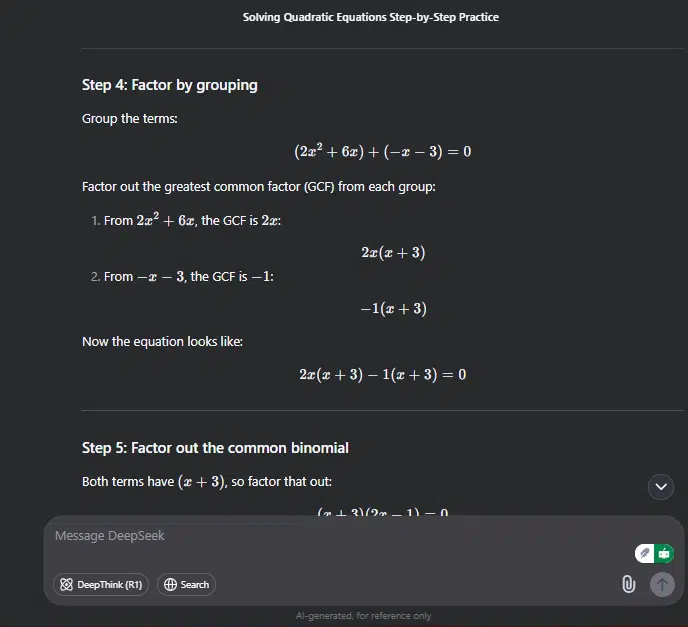

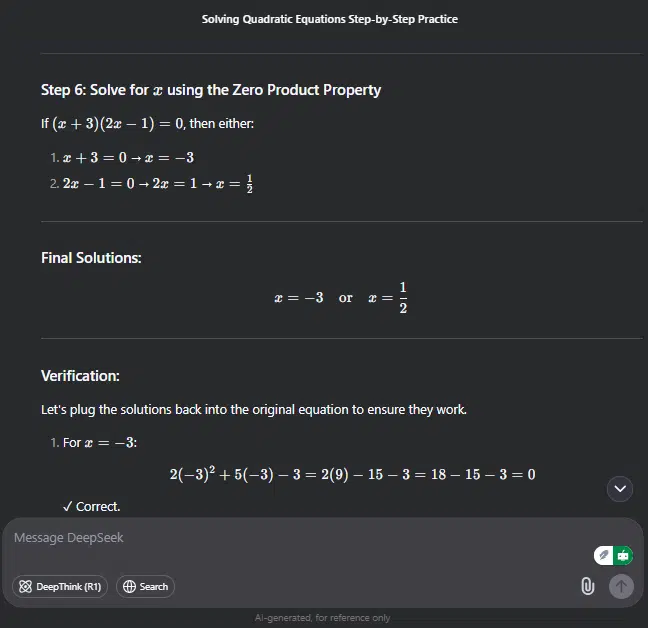

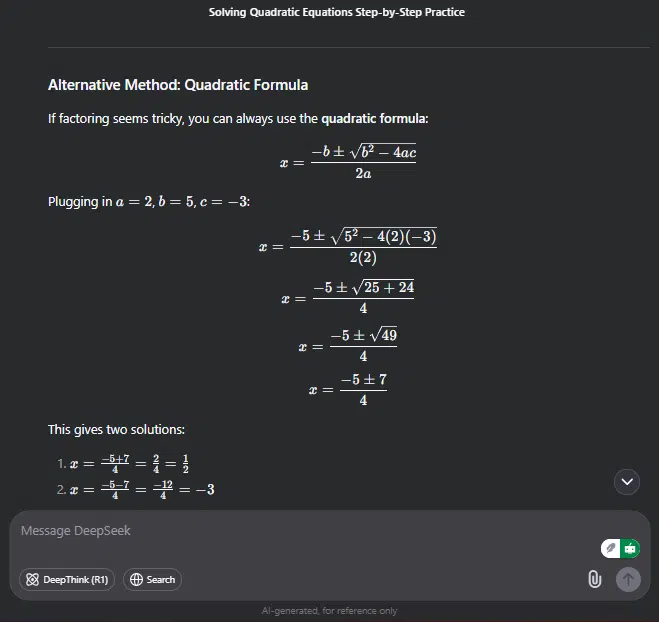

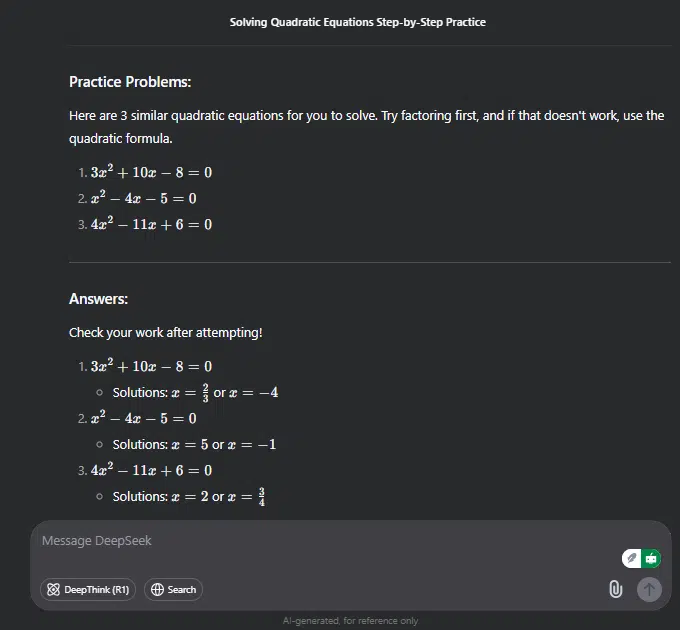

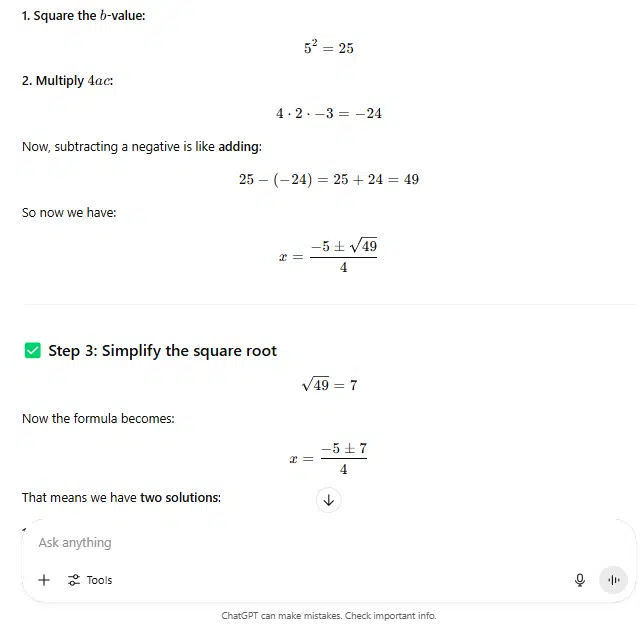

Prompt 1 for math problems

Assume you’re my math teacher and you’re helping me prepare for my high school final exams. Please solve this problem step by step: 2x² + 5x – 3 = 0. Explain each step clearly like you’re teaching me in person and help me understand why you’re doing what you’re doing. After solving it, give me 3 similar quadratic equations to practice on, and provide the answers so I can check my work later.

DeepSeek’s response:

ChatGPT’s response:

My take

Quality: DeepSeek went in-depth, first listing the different methods to solve the problem. Then it walked through each method step by step. It kept the tone conversational, which made everything easy to follow. ChatGPT also provided a step-by-step solution, but it focused on one specific method. It spaced out the explanation, explained why it chose that method, and used a tone that helped me follow along easily.

Prompt understanding: Both DeepSeek and ChatGPT showed that they understood the prompt. They gave step-by-step solutions, offered practice questions, and even provided answers to those questions.

Accuracy: Both tools arrived at the same correct answer based on the problem given in the prompt.
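If you want to double-check the answer yourself, the quadratic formula can be scripted in a few lines. This is a minimal sketch and not taken from either tool’s response; the function name `solve_quadratic` is a hypothetical helper, and it assumes the equation has real roots.

```python
import math

def solve_quadratic(a, b, c):
    """Return the real roots of ax^2 + bx + c = 0 (assumes a != 0)."""
    discriminant = b * b - 4 * a * c
    if discriminant < 0:
        raise ValueError("no real roots")
    root = math.sqrt(discriminant)
    # Quadratic formula: x = (-b ± sqrt(b^2 - 4ac)) / 2a
    return ((-b + root) / (2 * a), (-b - root) / (2 * a))

# The equation from the prompt: 2x^2 + 5x - 3 = 0
roots = solve_quadratic(2, 5, -3)
print(roots)  # (0.5, -3.0)
```

Substituting either root back into 2x² + 5x – 3 gives 0, which confirms the solutions x = 0.5 and x = –3.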

Prompt 2 for summary

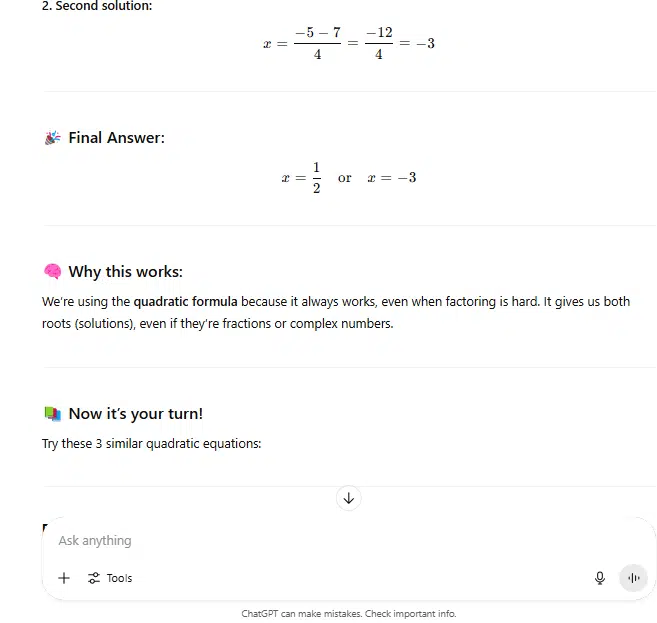

I am running late for work and need to catch up on this Techpoint Africa story that Nigeria teams up with Meta to launch an AI accelerator and deepen tech collaboration. Give me a summary of the story in five bullet points that keeps all the key numbers and mentions. It should feel like I read the whole thing. Make it understandable even if I don’t work in tech.

“Through the Ministry of Communications, Innovation and Digital Economy, the Nigerian Federal Government has partnered with Meta to launch the Nigeria AI Accelerator Programme—another significant step in Nigeria’s expanding efforts to position itself as an African hub for artificial intelligence.

The accelerator aims to support startups building AI-driven solutions tailored to the country’s economic and social challenges. It will be run by the National Information Technology Development Agency (NITDA) and its research arm, the National Centre for Artificial Intelligence and Robotics (NCAIR).

According to the ministry, the programme will offer selected participants access to technical resources, mentorship, and a support system designed to refine AI use cases in sectors like agriculture, health, financial services, and education. The first cohort will run from August to December 2025.

This move strengthens Nigeria’s growing pattern of engaging Big Tech in national innovation efforts. In 2024, the government worked with Google to launch a ₦100 million AI fund that supported ten Nigerian startups. It was followed by a $1.5 million commitment with the support of Luminate, reinforcing the state’s intention to create sustainable AI ecosystems.

Google also announced that it’s ramping up its support for AI training in sub-Saharan Africa with a new $5.8 million commitment to equip the workforce with essential AI skills.

By teaming up with Meta, Nigeria is amplifying its presence on the global AI map. Meta’s involvement provides access to international best practices and a broader innovation network, helping local innovators keep pace with fast-changing developments in machine learning and generative AI.

The government’s long-term ambitions were outlined in 2023 when it commenced work on a National Artificial Intelligence Strategy. That plan involved bringing together local and diaspora talent to develop an institutional framework for AI growth in Nigeria.

Speaking on the new accelerator, Dr. Bosun Tijani, Nigeria’s Minister of Communications, said the initiative is “not just about technology” but about ensuring that Nigeria’s innovation economy remains globally competitive and inclusive.

Applications are now open and will close by July 12, 2025. Shortlisted participants will engage in weeks of intensive mentorship and product development, culminating in a demo day in December.”

DeepSeek’s response:

ChatGPT’s response:

My take

Quality: Both AIs summarized a 300+ word article into bullet points. DeepSeek’s summary was more detailed than ChatGPT’s, but both stayed relevant. However, I’ll pick ChatGPT’s summary because it got straight to the point in each bullet. DeepSeek felt a bit overwhelming for a summary compared to ChatGPT.

Prompt understanding: They both followed the prompt as requested. ChatGPT gave exactly five bullet points and wrote a version that would work well for someone in a hurry. DeepSeek numbered the points and added bullet-point explanations; it felt a bit extra.

Accuracy: Both AIs accurately conveyed the information in the article.

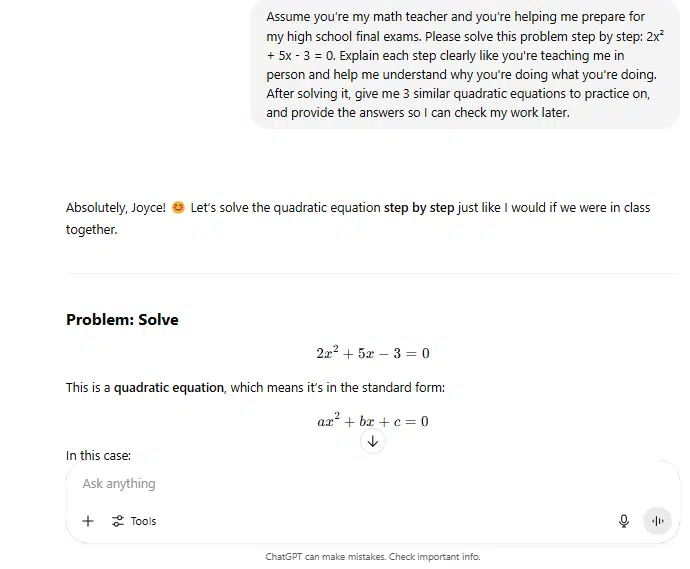

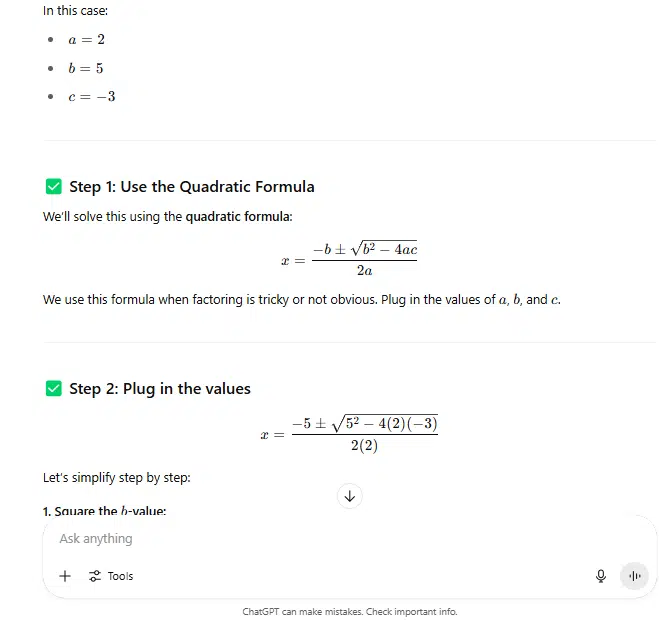

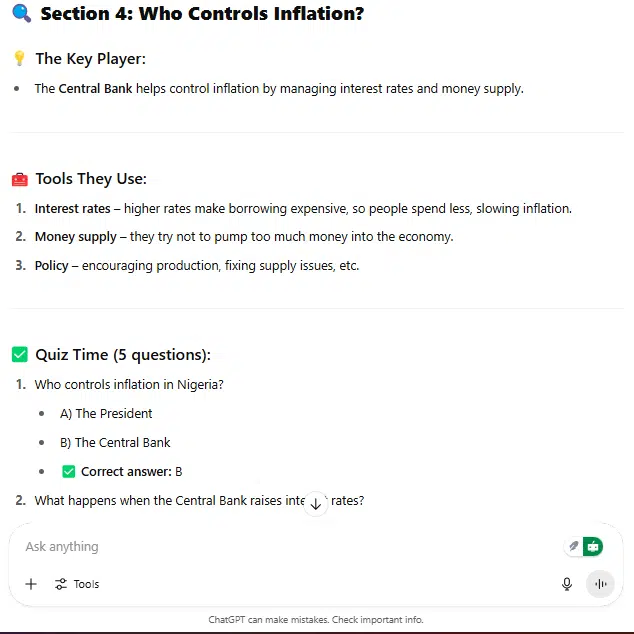

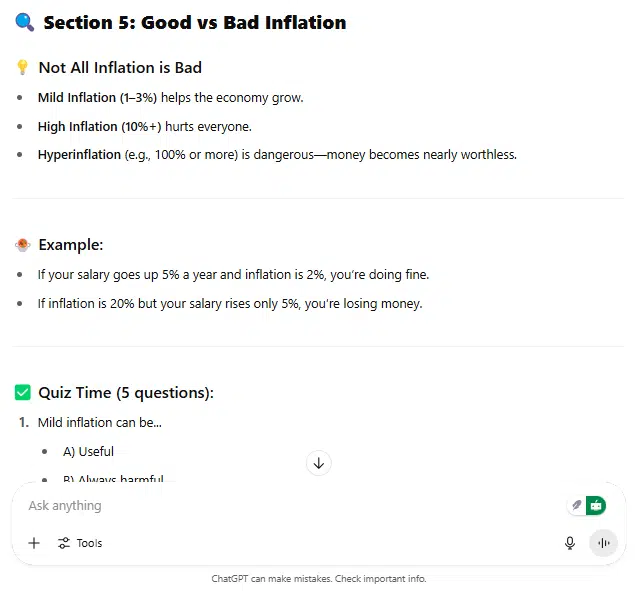

Prompt 3 for learning companion

I’ve always struggled to understand how inflation works. Can you guide me through a self-paced class and explain inflation in simple terms? Teach in a way I will grasp quickly and easily. Please develop the module and content and include five test questions per section. Use examples and avoid jargon.

DeepSeek’s response:

ChatGPT’s response:

My take

Quality: Both gave me a good learning module for understanding inflation. However, ChatGPT delivered content that looked more like an actual learning module. It was well spaced, easy to understand, and started with a clear module outline before building on the content. DeepSeek, on the other hand, did generate a simple module, but it wasn’t as structured as ChatGPT’s and felt less like a typical course module.

Prompt understanding: Both followed the prompt as required. They gave simple, easy-to-understand explanations, developed a module with content, and included practice questions.

Accuracy: I’d say both were accurate, but if you’re looking for a response that feels more engaging and fun to follow, then ChatGPT came out on top.

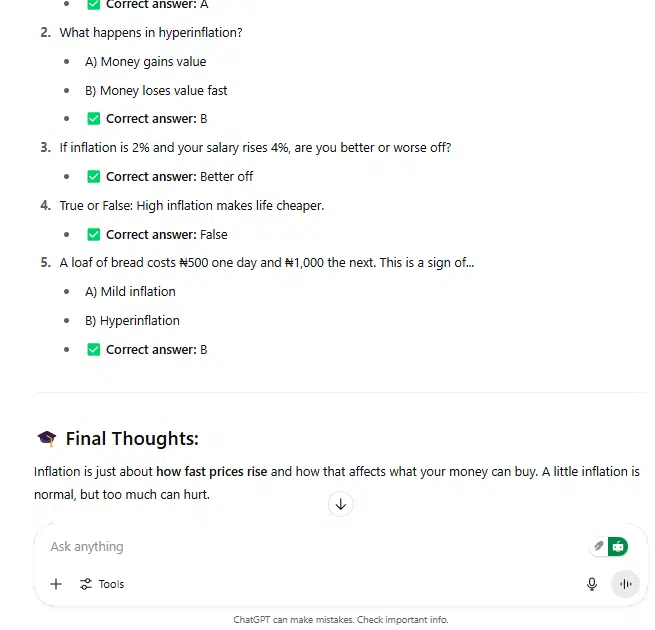

Prompt 4 for news update

I’ve been randomly seeing the ongoing saga between President Donald Trump and Elon Musk. Write a news update that explains when, why, and how it started, and include the latest developments. Write it like a standard news article.

DeepSeek’s response:

ChatGPT’s response:

My take

Quality: DeepSeek’s first response came without sources, so I had to prompt it a second time and turn on the search feature before it gave a relevant answer. The second response, which I used, came with the expected news and provided an overview of the topic before including other details. ChatGPT searched the web for the story, gave a clear rundown of the events, and presented the information in a more organized and structured way.

Prompt understanding: ChatGPT understood the prompt better. It segmented the news into how, when, and why, just like I requested. DeepSeek didn’t really follow the prompt.

Accuracy: DeepSeek wasn’t accurate until I turned on the search feature. Once I did, it added a timeline of events with dates and sources. ChatGPT followed the story up to the day I gave it the prompt. It also included web sources and links to news updates from that day. I didn’t have to toggle any search option on ChatGPT.

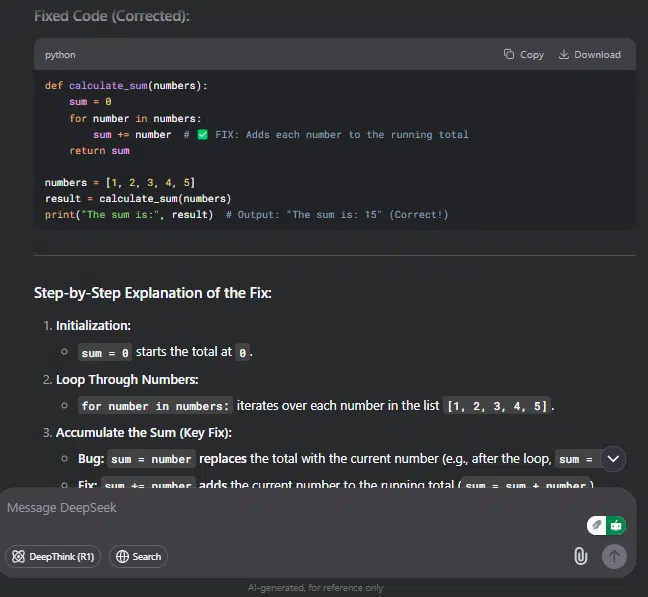

Prompt 5 for idea generation

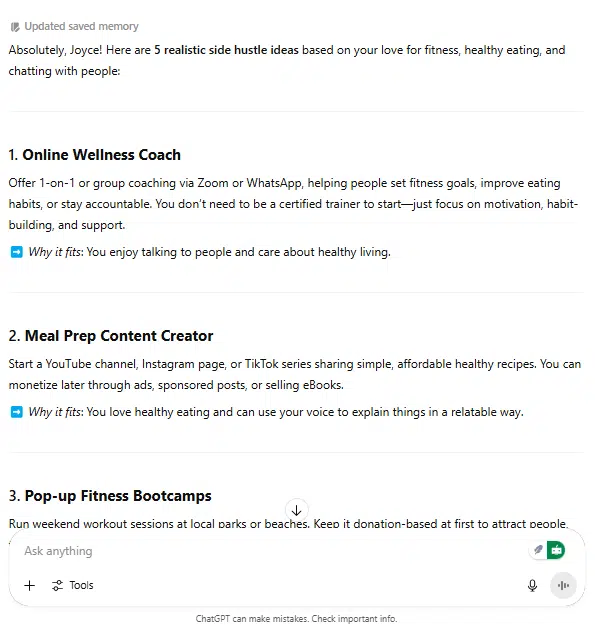

I want to start a small side business, but I don’t know where to begin. I like fitness, healthy eating, and talking to people. Can you give me 5 realistic side hustle ideas based on my interests, with a short explanation of each?

DeepSeek’s response:

ChatGPT’s response:

My take

Quality: DeepSeek didn’t just give suggestions; it explained why I should try each path, including the startup cost and how to get started. It did that for all five ideas and even added a tip on which one to try first. ChatGPT also gave five suggestions and explained why they might be a good fit. However, DeepSeek went the extra mile compared to ChatGPT.

Prompt understanding: Both tools understood the prompt and gave five ideas as requested, explaining what I could do with them. But they handled it differently.

Accuracy: For what I was looking for, DeepSeek gave a more practical response. However, the startup cost would need to be verified.

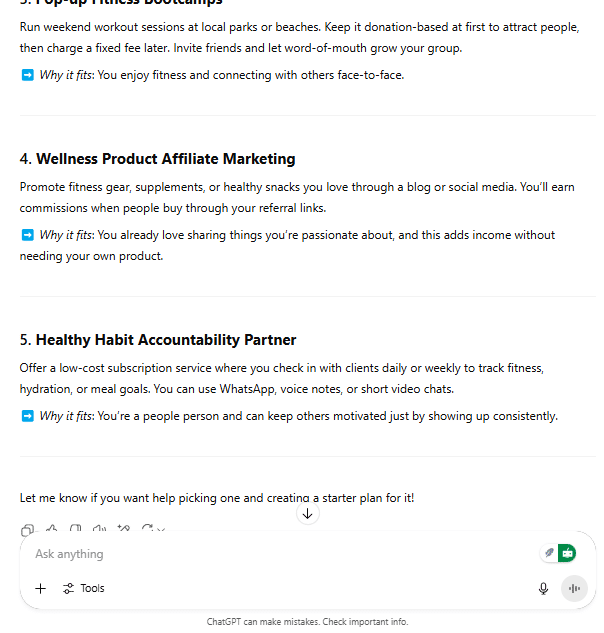

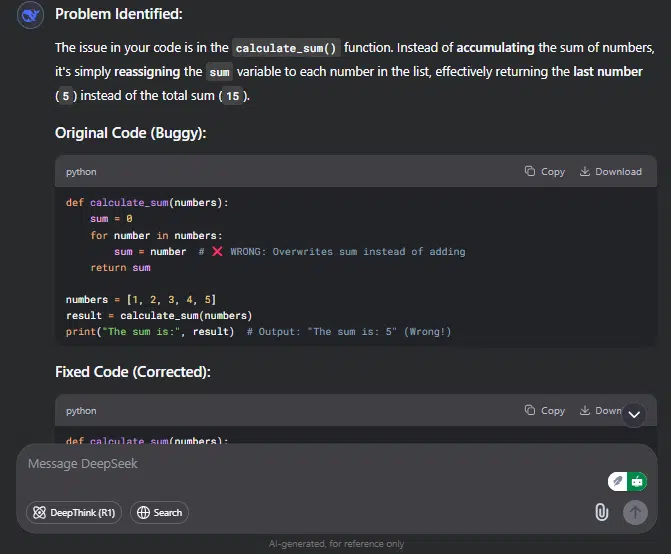

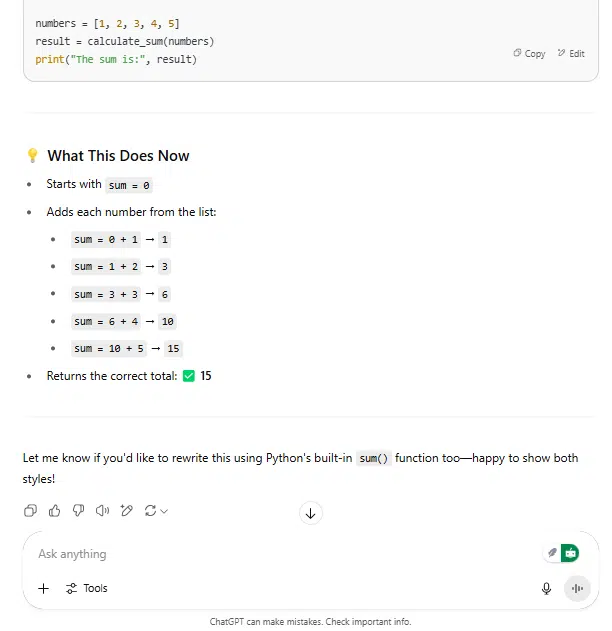

Prompt 6 for coding problems

    def calculate_sum(numbers):
        sum = 0
        for number in numbers:
            sum = number
        return sum

    numbers = [1, 2, 3, 4, 5]
    result = calculate_sum(numbers)
    print("The sum is:", result)

This code is giving me issues. It is not calculating the sum of the numbers correctly. Can you fix the error in this code so that it correctly calculates the sum of the numbers in the list? Please provide step-by-step explanations of how you fixed the issue.

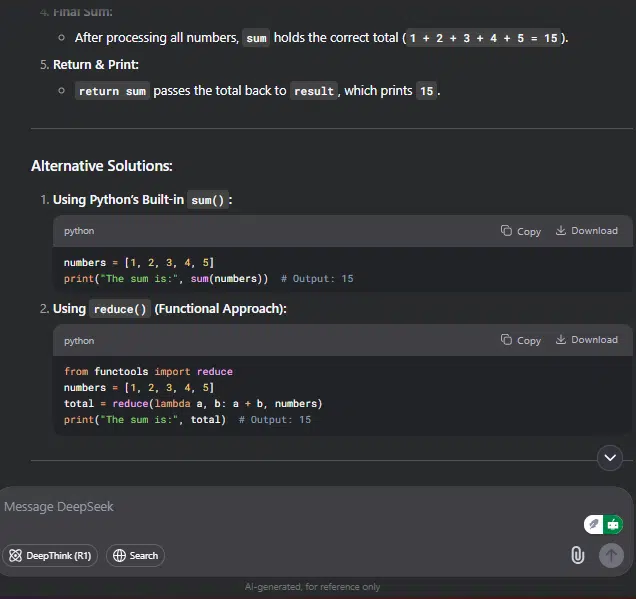

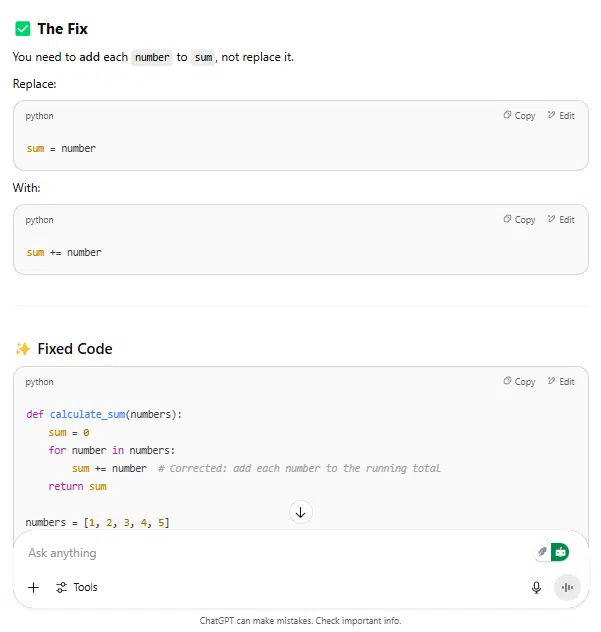

DeepSeek’s response:

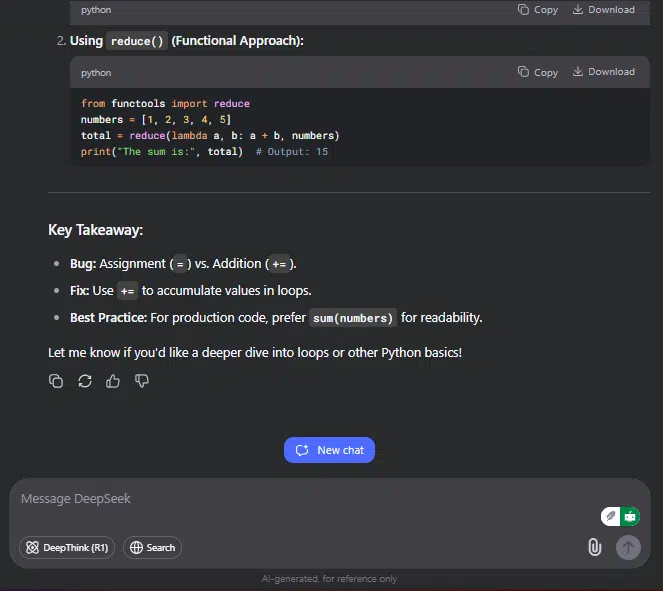

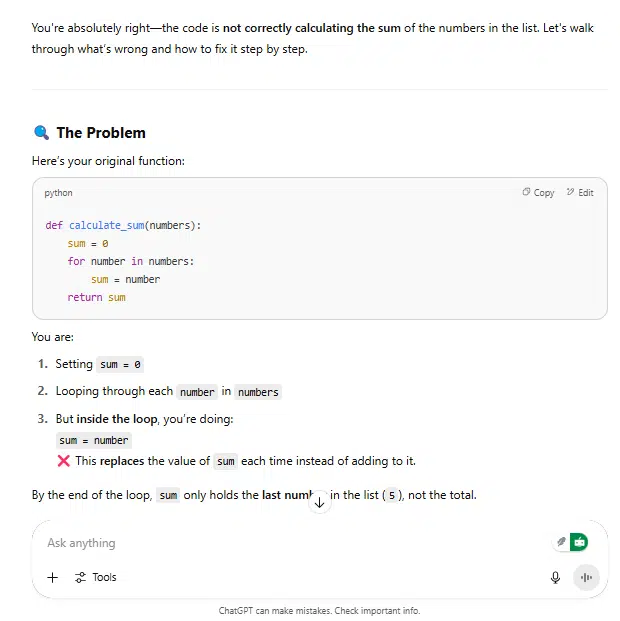

ChatGPT’s response:

My take

Quality: I picked the error from a beginner developer’s code. Even though I’m not a developer myself, I could tell that both tools made fixing the bug simple. They explained the issue clearly and offered solutions. ChatGPT provided a clear step-by-step breakdown that was easy to follow. DeepSeek did the same and even added alternative ways to solve the problem.

Prompt understanding: Yes, both tools understood and followed the prompt correctly.

Accuracy: I didn’t push the fix back into the code to verify its accuracy. However, both tools presented their solutions in a way that was easy to follow. They clearly stated what was missing.
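If you want to verify the fix, the bug in the prompt’s code is the line `sum = number`, which overwrites the running total on every pass instead of adding to it, so the function returns only the last number (5). The sketch below shows one way to correct it. It is not a copy of either tool’s output, and renaming the variable to `total` is my own choice, made to avoid shadowing Python’s built-in `sum()`.

```python
def calculate_sum(numbers):
    """Add up every number in the list."""
    total = 0  # renamed from `sum` so the built-in sum() is not shadowed
    for number in numbers:
        total += number  # the original used `=`, which replaced the total each time
    return total

numbers = [1, 2, 3, 4, 5]
result = calculate_sum(numbers)
print("The sum is:", result)  # The sum is: 15
```

Running this prints 15, which matches what Python’s built-in `sum(numbers)` returns for the same list.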

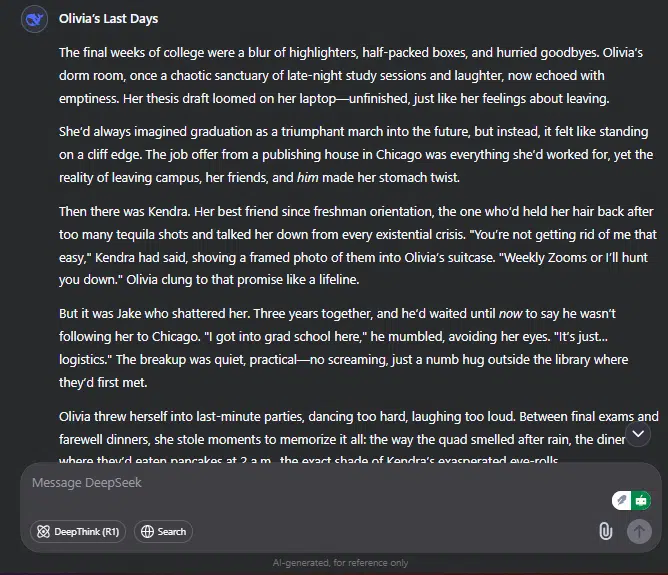

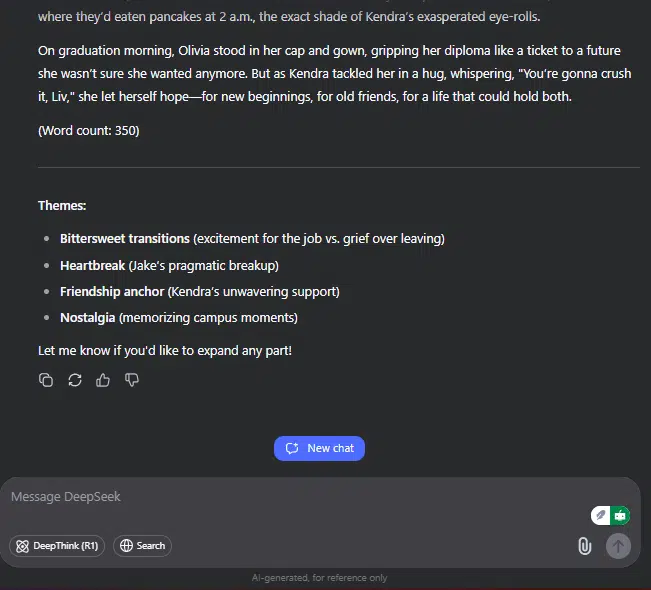

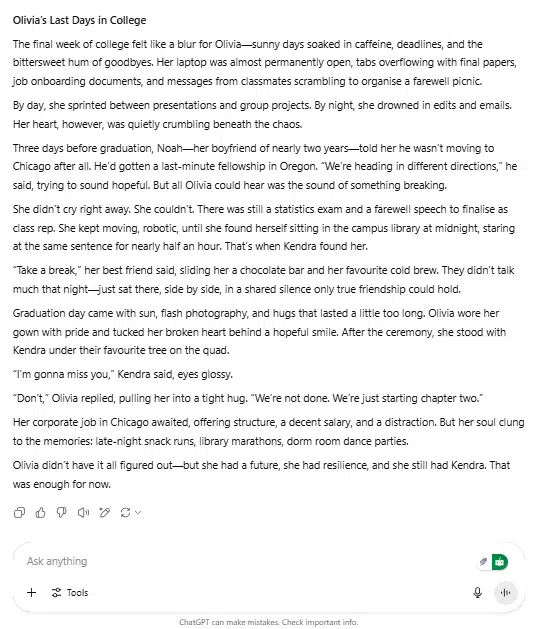

Prompt 7 for storytelling

Write a 350-word story about Olivia’s last days in college. I want a mix of emotions and stress that comes with academic and social activities during that time. Add a heartbreak twist, a job that’s waiting for her after graduation, and her hope to stay connected with her best friend Kendra.

DeepSeek’s response:

ChatGPT’s response:

My take

Quality: I included specific elements I wanted in the story, and both tools used them to write a solid piece. I noticed they followed similar narratives when telling the story. It was easy to follow, engaging, and interesting to read. Both did a good job with the storytelling.

Prompt understanding: Both tools followed the prompt and used the elements I requested.

Accuracy: Both stayed within the word count and delivered a story that matched my prompt.
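Word count is easy to check without counting by hand. Here is a minimal sketch, assuming words are separated by whitespace; `count_words` is a hypothetical helper, and the `story` string is a short placeholder rather than either tool’s actual story.

```python
def count_words(text):
    """Count whitespace-separated words in a piece of text."""
    return len(text.split())

# Placeholder text; paste the full generated story here instead.
story = "Olivia packed her last box and looked around the empty dorm room."

word_limit = 350  # the limit set in the prompt
word_count = count_words(story)
print(word_count, word_count <= word_limit)  # 12 True
```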

Prompt 8 for fact-checking

I just overheard someone say that Egypt is an Asian country. I thought it was in Europe. Can you fact-check this claim?

DeepSeek’s response:

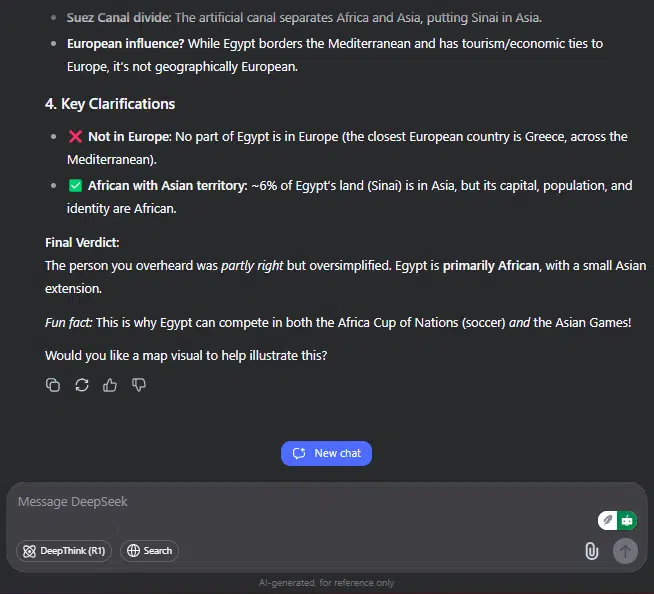

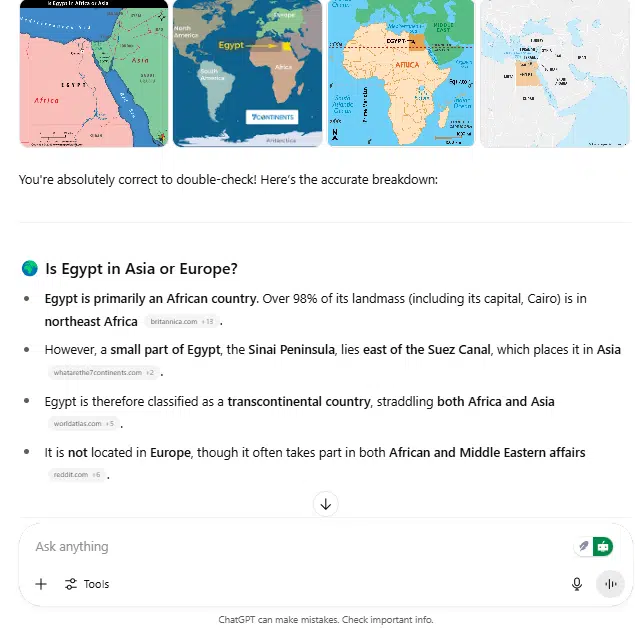

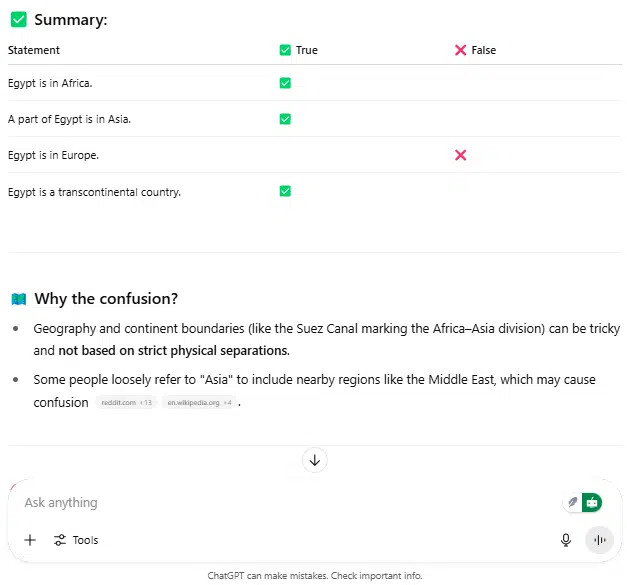

ChatGPT’s response:

My take

Quality: The responses I got showed that I could fact-check using either DeepSeek or ChatGPT. However, ChatGPT had a better structure in its explanations. It included maps, pictures, and a summary table. DeepSeek gave a response too, but it felt bare compared to ChatGPT’s fun and engaging presentation.

Prompt understanding: Both DeepSeek and ChatGPT understood the prompt and answered in a way that satisfied it.

Accuracy: Both tools gave accurate responses with clear clarifications and explanations.

Prompt 9 for conversation

I’ve had a stressful day and just want to chat. Can you keep me company for a few minutes and talk to me like a friend? Maybe ask how my day went and keep the conversation going naturally so I don’t fall asleep. I have a meeting to catch.

DeepSeek’s response:

ChatGPT’s response:

My take

Quality: ChatGPT gave me a good conversation that flowed naturally. I liked that its responses weren’t overwhelming and that it asked questions that kept the chat going. It had an interesting opinion about my response and made the conversation feel engaging. On the other hand, DeepSeek’s responses felt overwhelming and didn’t flow like a typical conversation.

Prompt understanding: ChatGPT clearly understood the prompt and played the role of a chat buddy well. DeepSeek did follow the prompt, but its responses felt too heavy for a casual chat.

Accuracy: ChatGPT did a better job than DeepSeek.

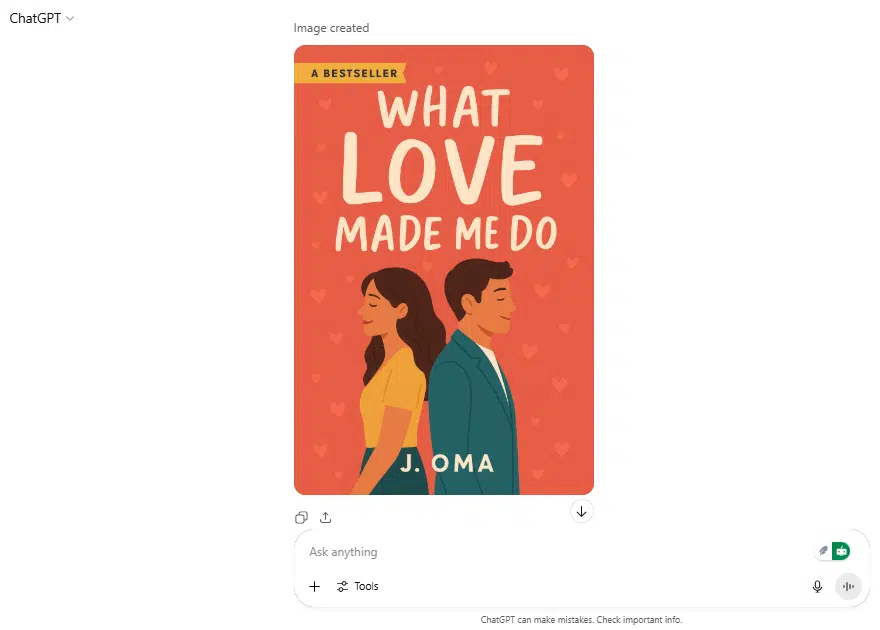

Prompt 10 for image generation

I’m a graphic designer working on the cover of a rom-com fiction book. Generate a book cover for the title What Love Made Me Do by J. Oma, and include a bestselling tag.

DeepSeek’s response:

ChatGPT’s response:

My take

Quality: ChatGPT won this round. It generated a book cover with an appealing style and design, and even added the author’s name and a best-selling tag. DeepSeek didn’t generate an image; instead, it gave me a text description of what the book cover could look like. When I prompted it to create an actual image, it responded that it couldn’t directly generate image files. But it could offer a detailed prompt I could use with AI image generators to create a book cover mockup. In some cases, it also added links to mockup and stock image sites.

Prompt understanding: ChatGPT understood the prompt and delivered. DeepSeek also understood the prompt but couldn’t generate an actual image.

Accuracy: ChatGPT was accurate for this task.

DeepSeek vs. ChatGPT: Side-by-side performance table

| S/N | Prompts | Performance |
| --- | --- | --- |
| 1 | Math problems | DeepSeek outperformed by exploring multiple solving methods, while ChatGPT focused on one method. |
| 2 | Summary | ChatGPT was more concise and to the point, which was better. DeepSeek offered a more detailed summary with numbered points and extra explanations. |
| 3 | Learning companion | ChatGPT stood out for its structured, course-like format. However, DeepSeek also created a simple and accurate module. |
| 4 | News update | ChatGPT outperformed DeepSeek in quality, prompt understanding, and accuracy. DeepSeek gave a better response after a second prompt and activating the search feature. |
| 5 | Idea generation | DeepSeek and ChatGPT both understood the prompt. However, DeepSeek stood out by offering more detailed, practical suggestions, including estimated startup costs, how to get started, and a tip on which idea to try first. |
| 6 | Coding problems | Both ChatGPT and DeepSeek explained the bug clearly and offered helpful, step-by-step solutions. But DeepSeek did extra; it also suggested alternative fixes. |
| 7 | Storytelling | Both tools effectively followed the prompt, used the requested elements, and delivered accurate, engaging stories. |
| 8 | Fact-checking | Both DeepSeek and ChatGPT gave accurate and prompt-relevant responses. However, ChatGPT added visuals. |
| 9 | Conversation | ChatGPT gave engaging and easy-to-follow responses, whereas DeepSeek felt overwhelming and less conversational. |
| 10 | Image generation | ChatGPT outperformed DeepSeek in generating a book cover. It created an image, while DeepSeek only offered a text description and couldn’t generate an image. |

Conclusion: My overall recommendation

This isn’t the first time I’ve compared two AIs to see how they perform on real-world tasks. I’ve done it before with Grok vs. ChatGPT. In this comparison between DeepSeek and ChatGPT, both tools showed strong capabilities, but each had its own strengths.

From the ten prompts I used in this article, both AIs handled most tasks well and performed equally in many cases. However, DeepSeek did a better job with idea generation and math problems. To get the most out of DeepSeek, though, I often had to turn on its search and DeepThink features.

For conversations, ChatGPT was more conversational and engaging. When I tested visual tasks like image generation, ChatGPT outperformed DeepSeek by actually producing an image, while DeepSeek only described one.

In the end, the best choice depends on what you need. If you’re a designer or want fun, structured content, ChatGPT might be the better option. If you need detailed, practical content, DeepSeek could be better. For the best results, I recommend using both to combine their strengths and get a more complete output.

Disclaimer!

This publication, review, or article (“Content”) is based on our independent evaluation and is subjective, reflecting our opinions, which may differ from others’ perspectives or experiences. We do not guarantee the accuracy or completeness of the Content and disclaim responsibility for any errors or omissions it may contain.

The information provided is not investment advice and should not be treated as such, as products or services may change after publication. By engaging with our Content, you acknowledge its subjective nature and agree not to hold us liable for any losses or damages arising from your reliance on the information provided.