For the longest time, coding felt like a high wall that only the elite few could climb. Between syntax rules, logical flows, and mysterious bugs that show up out of nowhere, the barrier to entry was steep. And honestly, I’ve been there, staring at a blinking cursor, feeling like I needed a PhD in some obscure subject to make the simplest program work.

But that’s changing. Fast.

Thanks to AI, the world of software development is getting a major rewrite. Today’s AI tools don’t just autocomplete your lines; they help you write better logic, generate entire functions from scratch, explain what your code is doing, and sometimes even catch bugs before you do. It’s like having a supercharged coding buddy who never sleeps.

AI is changing how we write, debug, and ship software. And leading that transformation are two standout tools: Claude by Anthropic and GitHub’s Copilot.

Over the past week, I’ve put both Claude and Copilot through a series of coding challenges: five real-world prompts that range from writing small algorithms and fixing broken code to building an API and writing a SQL query. No fluff. Just real prompts that I (a coding enthusiast) or any developer might run into on a typical workday.

My goal was to figure out which of these tools lives up to the hype and which one I’d trust as my daily coding companion.

Here’s what you’ll find in this article:

- A quick primer on what Claude and Copilot are and how they differ.

- The real reason this comparison matters.

- A breakdown of my testing process and prompts.

- A side-by-side comparison of Claude vs Copilot in real coding tasks.

- Benchmarks for code generation, debugging, and clarity.

- Screenshots of actual outputs so you can judge for yourself.

- And finally, my honest verdict on which tool came out on top and why.

If you’re trying to figure out which AI coding tool is worth using in 2025, you’re in the right place.

TL;DR: Key takeaways from this article

- Claude outperforms GitHub Copilot in 4 out of 5 real-world coding prompts, especially when explanation, logic, and edge-case handling matter.

- Copilot is unbeatable for speed and seamless IDE integration. If you’re just trying to get code written fast, it’s the go-to tool.

- Claude excels at teaching, debugging, and long-form thinking. It doesn’t just generate code; it walks you through the why behind it.

- Use Copilot when you know what you want; use Claude when you’re figuring it out.

- For the best of both worlds, combine them. Copilot handles boilerplate and structure; Claude helps you clean it up, explain it, and improve it.

Overview of Claude and Copilot

Claude

What is Claude?

What if an AI could write code like a senior dev, explain it like a patient tutor, and somehow still feel conversational?

That’s Claude.

Claude is named after Claude Shannon, the father of information theory, and that little tribute says a lot about how this model is wired. Claude by Anthropic is built for people who value precision, logic, and depth. It’s not flashy. It’s just smart.

When I started testing Claude, one thing stood out immediately: it doesn’t hallucinate as much. If you’ve ever had an AI confidently serve you a wrong answer and crash your whole codebase, you know how refreshing that is. Claude tends to take a more cautious, context-aware approach to problem-solving, especially in code-heavy prompts. It doesn’t make up imaginary functions or pass off nonsense as facts.

Instead, Claude shines when you need a partner who thinks deeply. With its massive context window, Claude can handle entire codebases, technical documentation, or long email threads, without skipping a beat. That means if I need to feed it a whole project or complex legacy script, it won’t tap out midway. It’ll read, reason, and respond with clarity.

How does Claude work?

Claude doesn’t rely on real-time internet data. That’s by design. It doesn’t browse the web or reference the latest Stack Overflow threads. It works entirely off its pre-trained knowledge, which is vast, and it’s unusually good at sticking to what it knows. If something’s outside its expertise, Claude won’t bluff. It’ll politely pass.

That kind of honesty is rare in AI.

When I threw coding tasks at it, Claude responded with clean, readable, and logically sound code. It doesn’t just spit out lines; it explains them. Ask it to debug something, and it’ll walk you through the issue like a peer reviewer, not a magic black box. Need it to generate documentation? You’ll get explanations that make sense.

Claude is built for long-form reasoning, multi-step problem solving, and structuring answers in a way that feels human. If you’re into learning while coding, it’s especially great: it explains as it goes, like that senior dev who mentors instead of just fixing.

Where you can use Claude

You can access Claude on the web or via mobile apps (iOS and Android). That makes it handy when you need insights on the fly, say, reviewing code during a commute or brainstorming logic for a weekend project. The only trade-off is no live internet access.

Claude at a glance:

| Developer | Anthropic |
| --- | --- |
| Year launched | 2023 |
| Type of AI tool | Conversational AI and Large Language Model (LLM) |
| Top 3 use cases | Content structuring, analytical reasoning, and summarization |
| Who is it for? | Writers, researchers, developers, and business professionals |
| Starting price | $20/month |
| Free version | Yes (with limitations) |

GitHub Copilot

What is Copilot?

GitHub Copilot does more than assist with coding; it’s more like a pair programmer that never sleeps. Built by GitHub in collaboration with OpenAI, Copilot is designed specifically for developers. It lives inside popular integrated development environments (IDEs) like Visual Studio Code (VS Code) and the JetBrains family, and even in the browser, and helps you write code faster, smarter, and with fewer headaches.

What makes Copilot different is how deeply it integrates into the coding experience. It autocompletes lines, understands context, and can generate entire functions, suggest tests, write documentation, and even refactor messy code.

It’s trained on mountains of open-source code, so it draws patterns from real-world projects. That gives it a sort of “been-there, done-that” attitude, especially helpful when you’re stuck on a common implementation or boilerplate you don’t want to write from scratch again.

How does Copilot work?

Copilot was originally powered by OpenAI’s Codex model (a descendant of GPT-3), and today it can draw on several models. It’s built to understand your intent based on what you’re typing. It analyzes the code in your file, your comments, and even variable names to anticipate what you’re trying to build. Sometimes it nails it with eerie precision; other times, it just tries.

But in general, Copilot thrives in day-to-day development tasks. While it doesn’t do deep codebase reasoning like Claude, it’s brilliant for fast, iterative, test-driven development.

And it’s not just for solo devs. GitHub also offers Copilot Business and Enterprise plans, which add user management, usage metrics, and data privacy features for organizations.

Where you can use Copilot

Copilot works seamlessly inside most modern development environments, so you don’t have to change your setup to get started. It integrates directly into:

- Visual Studio Code (VS Code).

- JetBrains IDEs (like IntelliJ, PyCharm, WebStorm).

- Visual Studio.

- Neovim.

- GitHub Codespaces (for cloud development).

- Your browser, through GitHub.com, with inline suggestions when reviewing PRs or writing markdown.

So whether you’re building locally, remotely, or somewhere in between, Copilot stays right there with you, ready to jump in with suggestions as you type.

Copilot at a glance

| Year launched | 2021 |
| --- | --- |
| Type of AI tool | AI coding assistant built into IDEs (powered by OpenAI Codex / GPT-4) |
| Top 3 use cases | Real-time code generation and completion, writing unit tests and documentation, and refactoring and suggesting boilerplate code |
| Who can use it? | Individual developers, teams, and enterprises using supported IDEs like VS Code, JetBrains, and Neovim |
| Starting price | $10/month for individuals, $19/month for businesses |
| Free version | Yes (with limitations); Pro is free for verified students, teachers, and maintainers of popular open-source projects |

Why I tested Claude vs Copilot

Over the past year, I’ve found myself relying more and more on AI tools in my day-to-day work, which is mostly writing. But even as a writer, I dabble in coding from time to time. You can call me an enthusiast.

After I compared Claude to ChatGPT in a previous article (and Claude triumphed), I wanted to see how it would perform against Copilot.

I wasn’t after flashy demos or marketing hype. I wanted answers. So I decided to test them, head-to-head, with a set of real-world, everyday developer prompts. The kind of stuff we all run into, from edge-case logic and vague client requests to broken code and last-minute documentation demands.

What I found genuinely surprised me. It might surprise you too.

How I tested Claude and Copilot

To make this comparison fair, I gave both Claude and GitHub Copilot the exact same coding prompts, with no shortcuts or tweaks. Then, I evaluated their responses across four core areas that matter when you’re building or fixing software:

- Code generation accuracy: Does the code run? Is it syntactically clean? And more importantly, does it do what I asked?

- Debugging and error handling: Can it spot bugs in broken code and suggest a working fix?

- Clarity and documentation: Does it explain its reasoning clearly, or just throw code at the wall?

- Creativity and adaptability: How well does it handle vague, weird, or open-ended requests?

Throughout the test, I kept detailed notes, including how long each tool took, how often they hallucinated or misunderstood the prompt, and whether they held up under pressure from quirky edge cases. In addition to looking for correct answers, I looked for reliable performance under real-world conditions.

Prompt-by-prompt breakdown for Claude and Copilot

Let’s see how well these two AI models perform.

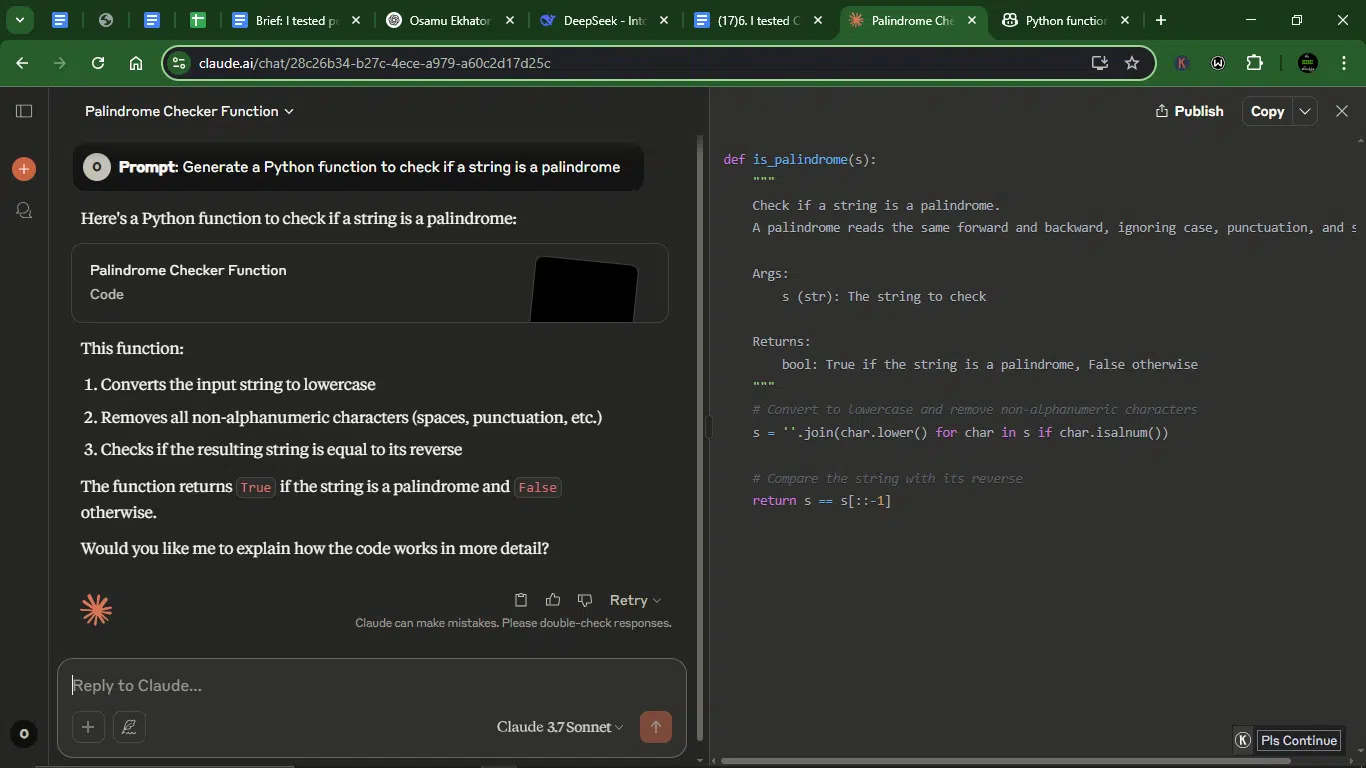

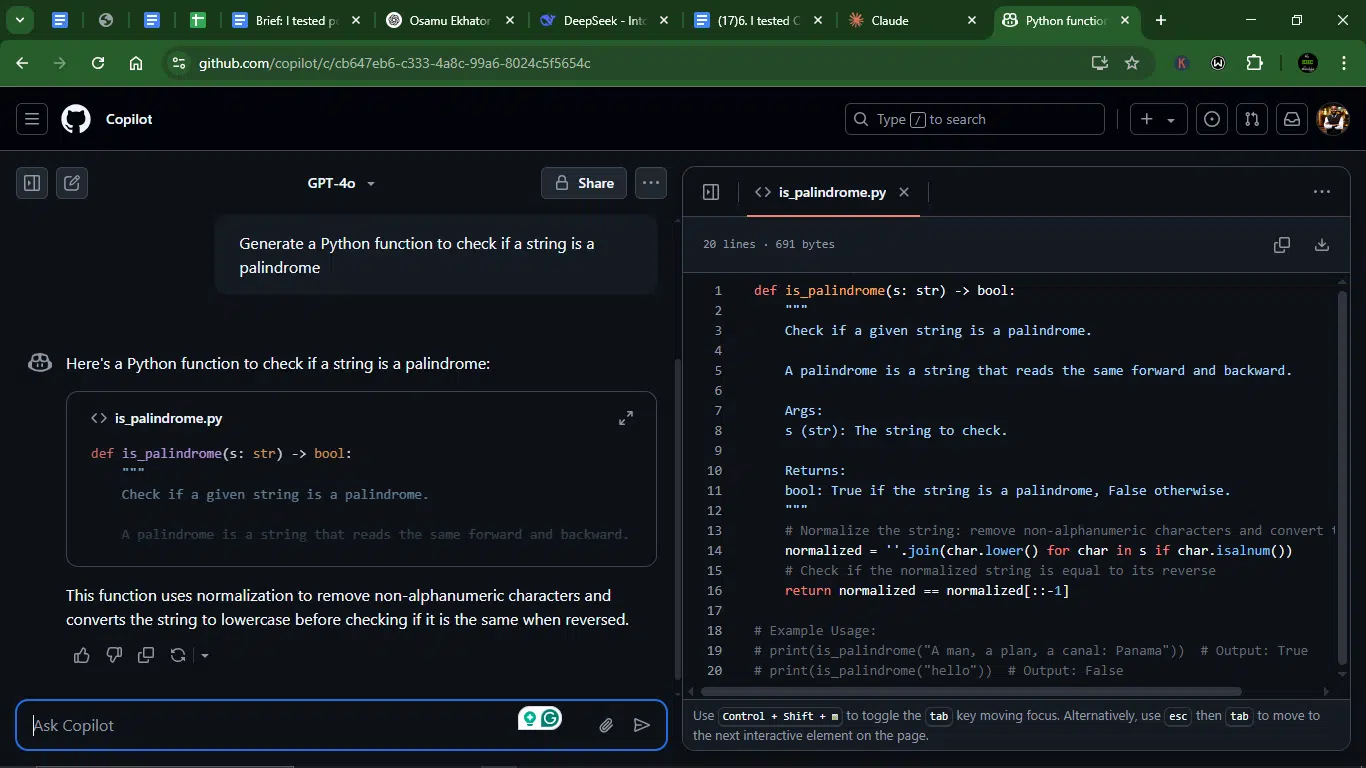

Prompt 1: Generate a Python function

I kicked things off with a classic. I asked each to write a Python function to check if a string is a palindrome. It’s a straightforward task, but perfect for gauging how well these tools handle logic, syntax, and code structure without needing tons of context.

Prompt: Generate a Python function to check if a string is a palindrome.

Result:

Claude’s response:

Copilot’s response:

- Accuracy: Both functions provide correct responses. They normalize the string (lowercase + alphanumeric only), compare against its reverse, and handle edge cases (punctuation, mixed case).

- Debugging and error handling: Neither has bugs, but Copilot includes example usage (helpful for quick testing).

- Clarity and documentation: Claude explains why normalization is needed (ignoring case/punctuation) and offers to elaborate further. Copilot offers a more concise docstring and includes commented examples (great for usability).

- Creativity and adaptability: Claude asks if you’d like a deeper explanation (adaptive to user needs). Copilot assumes you want a ready-to-use solution.

Winner: Claude.

Copilot is better for quick, practical implementation, while Claude is better for learning and adaptability.
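To make the approach both tools took a bit more concrete, here’s a minimal sketch of that kind of function. This is not either tool’s actual output (those are in the screenshots); the name `is_palindrome` and the exact normalization are my own assumptions, based on the "lowercase + alphanumeric only, then compare to the reverse" description above.

```python
def is_palindrome(text: str) -> bool:
    """Return True if text reads the same forwards and backwards,
    ignoring case and any non-alphanumeric characters."""
    # Normalize: keep only letters and digits, lowercased.
    cleaned = [ch.lower() for ch in text if ch.isalnum()]
    # Compare the normalized characters with their reverse.
    return cleaned == cleaned[::-1]


print(is_palindrome("A man, a plan, a canal: Panama"))  # True
print(is_palindrome("Hello, world"))                    # False
```

Note that an empty string (or one with only punctuation) counts as a palindrome in this sketch. Whether that is the right call depends on your use case.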

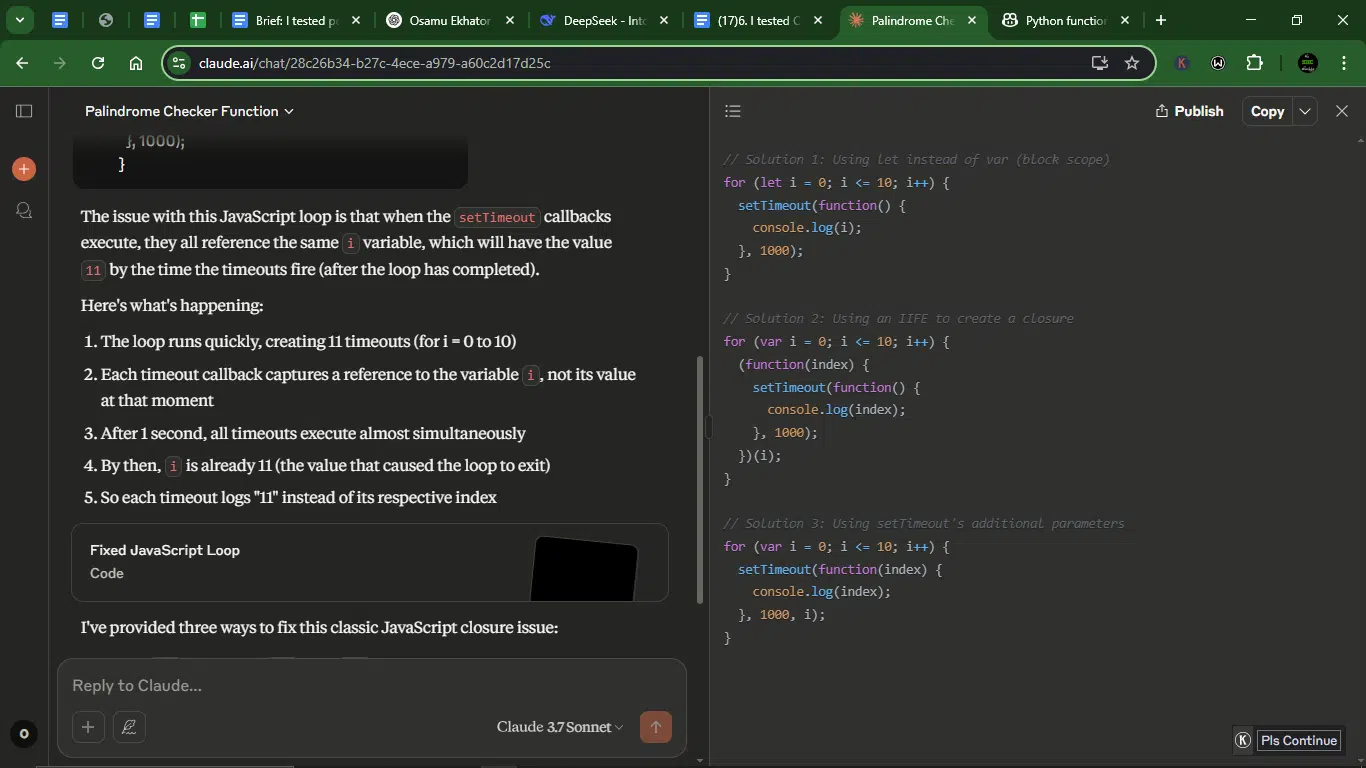

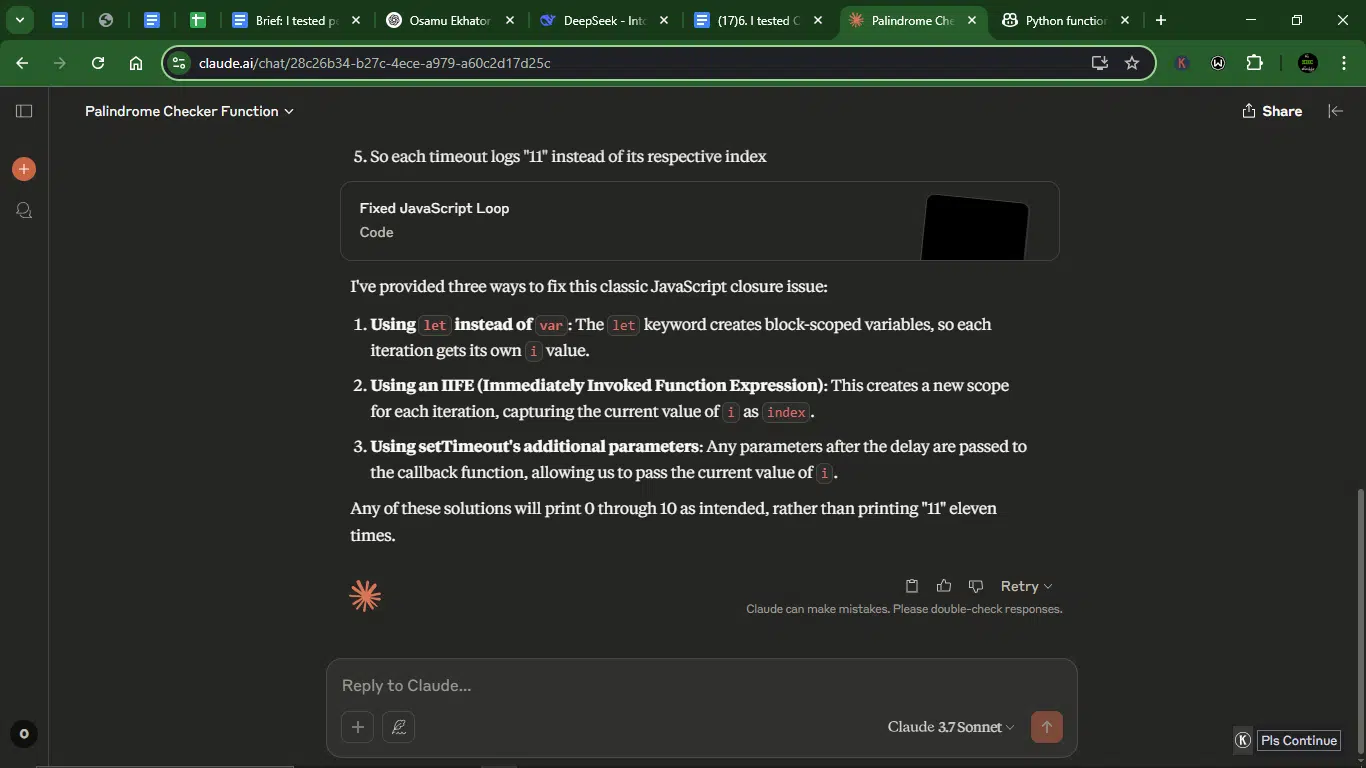

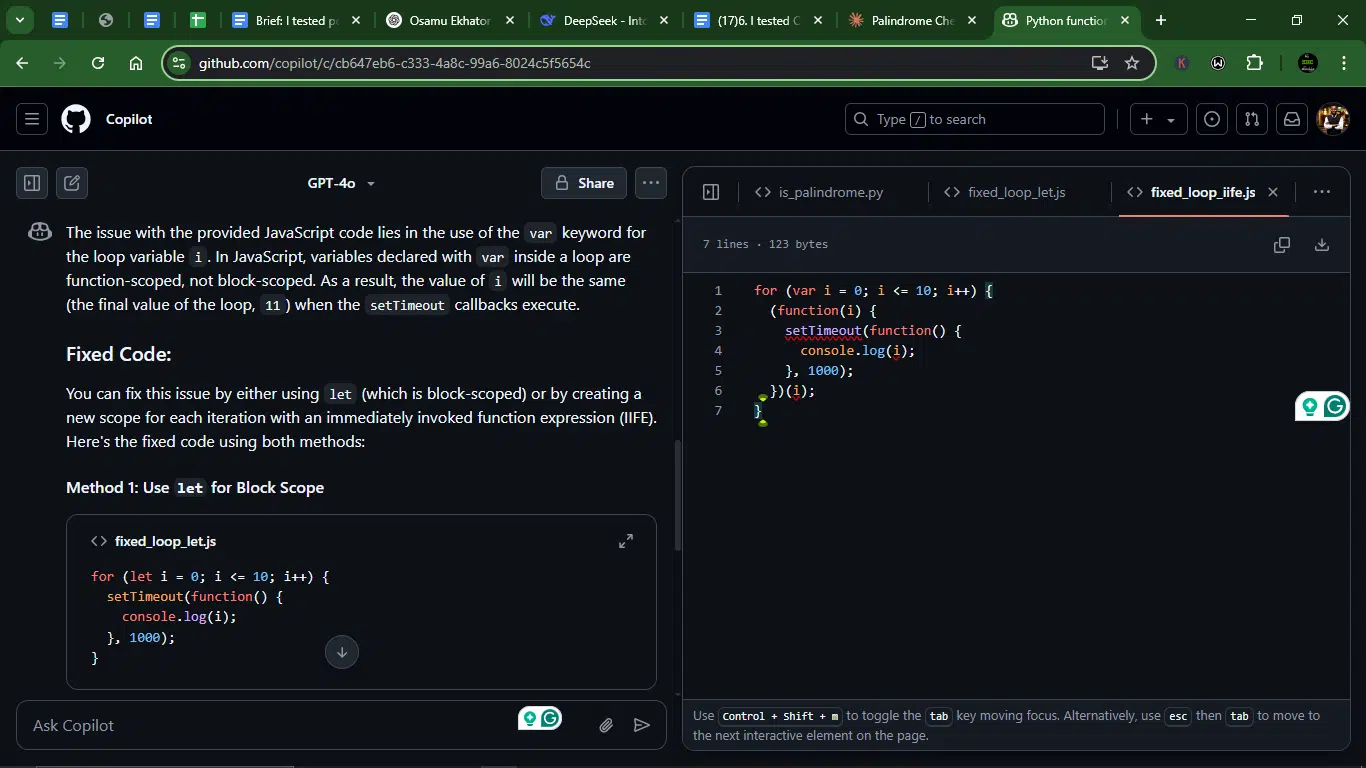

Prompt 2: Debug a broken JavaScript loop

Not every coding task is clean and fresh; a lot of the time, you’re walking into someone else’s half-working code and trying to make sense of it. Debugging is where you separate smart code generators from actual problem solvers.

So, for the next task, I gave both tools something messier: a broken JavaScript loop. This prompt was a good test of how well each AI could identify issues, suggest fixes, and explain why the code was failing in the first place.

Prompt: Debug this broken JavaScript loop.

JavaScript:

```javascript
for (var i = 0; i <= 10; i++) {
  setTimeout(function() {
    console.log(i);
  }, 1000);
}
```

Result:

Claude’s response:

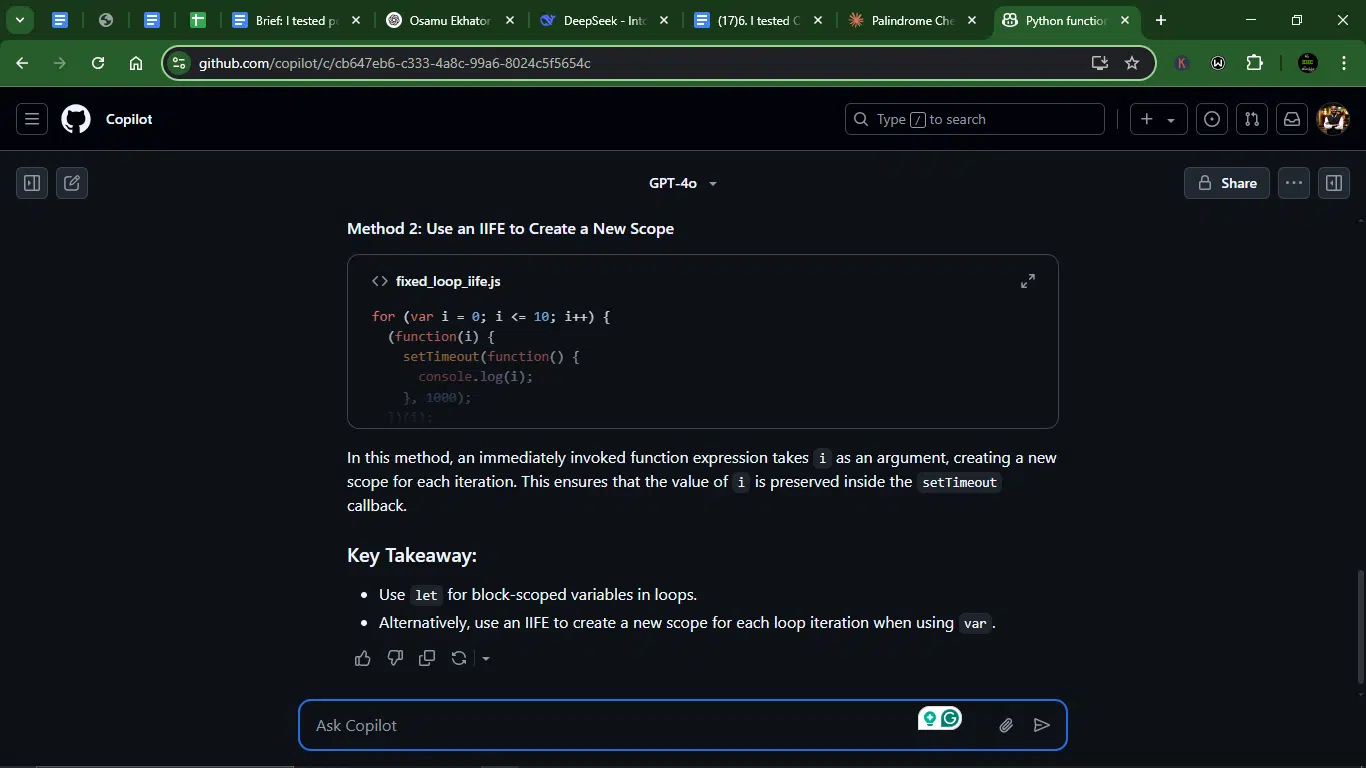

Copilot’s response:

- Accuracy: Both AI models correctly identify the issue (var scoping) and provide working solutions: using let (block scoping) and using an IIFE (closure). Claude adds a third solution that passes i through setTimeout’s third parameter.

- Debugging and error handling: Claude explains why the bug occurs (each callback references i after the loop has completed) and separates each solution. Copilot also explains the issue well, but misses the setTimeout parameter approach.

- Clarity and documentation: Claude breaks down the problem step-by-step and labels each solution clearly. Copilot uses code comments effectively but is slightly less structured.

- Creativity and adaptability: Claude offers three distinct solutions, including a lesser-known one (setTimeout’s third arg). Copilot sticks to two common fixes (let and IIFE).

Winner: Claude.

Copilot’s answer is correct and clear, but Claude goes above and beyond with explanations and solutions.
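If the bug itself is still fuzzy: in the JavaScript snippet, every callback shares the same `i`, so by the time the timers fire the loop has finished and each one logs 11. The same closure pitfall exists in other languages. Here’s a rough Python analogue I put together to illustrate it; it is not what either tool produced, and all function names in it are hypothetical. Python has no direct equivalent of `let`, so the two fixes shown only loosely mirror the IIFE and the setTimeout-argument approaches.

```python
from functools import partial


def make_buggy():
    """Every callback closes over the variable i, not its value at creation."""
    callbacks = []
    for i in range(3):
        callbacks.append(lambda: i)
    return callbacks


def make_with_default():
    """Fix 1: freeze the current value as a default argument
    (similar in spirit to wrapping the body in an IIFE)."""
    return [lambda i=i: i for i in range(3)]


def show(value):
    return value


def make_with_partial():
    """Fix 2: hand the value over as an argument up front
    (similar in spirit to setTimeout's extra argument)."""
    return [partial(show, i) for i in range(3)]


print([cb() for cb in make_buggy()])         # [2, 2, 2]
print([cb() for cb in make_with_default()])  # [0, 1, 2]
print([cb() for cb in make_with_partial()])  # [0, 1, 2]
```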

Prompt 3: Build a REST API

Here, I wanted to see how well Claude and GitHub Copilot handled something more practical. This is the kind of foundational task coders often do in real projects. It was the perfect way to test whether these AIs could follow the standard conventions that developers expect, beyond just writing working code.

Prompt: Build a basic REST API in Node.js using Express.

Result:

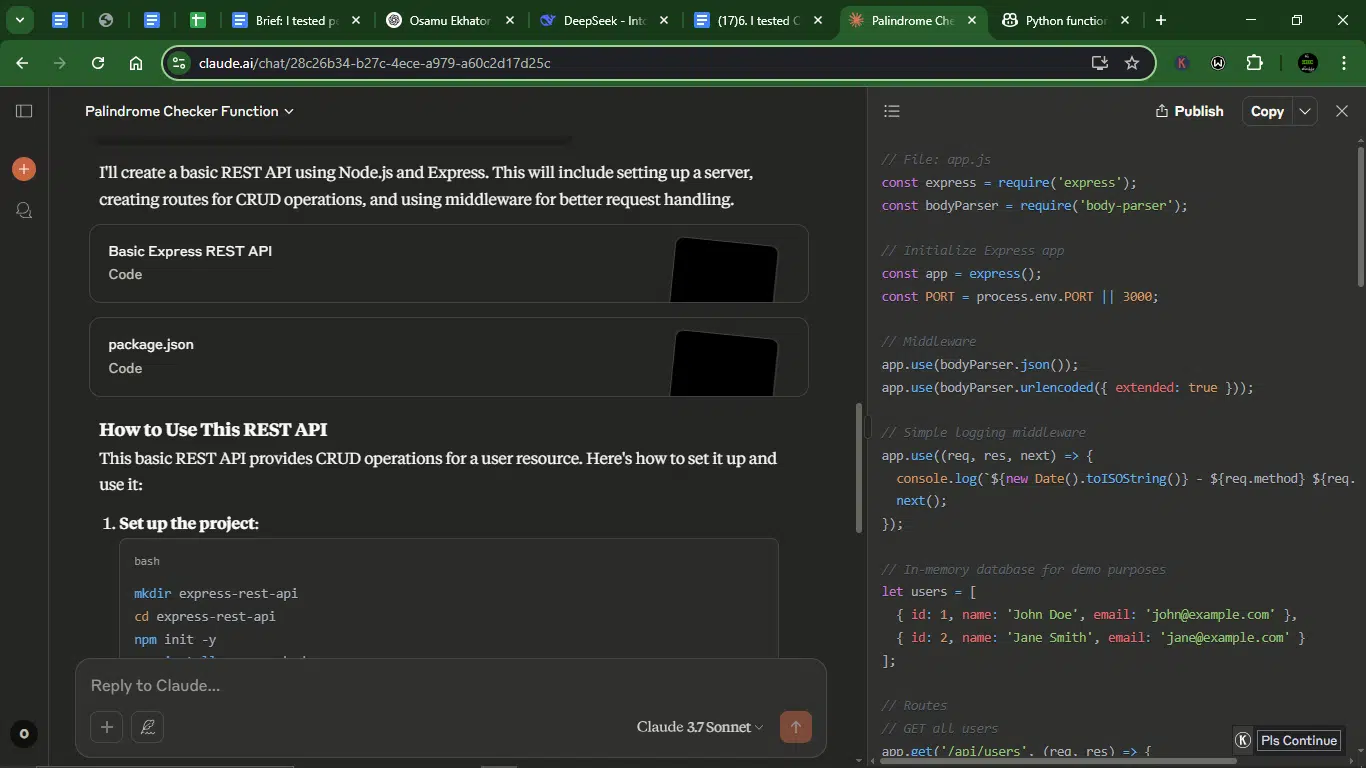

Claude’s response:

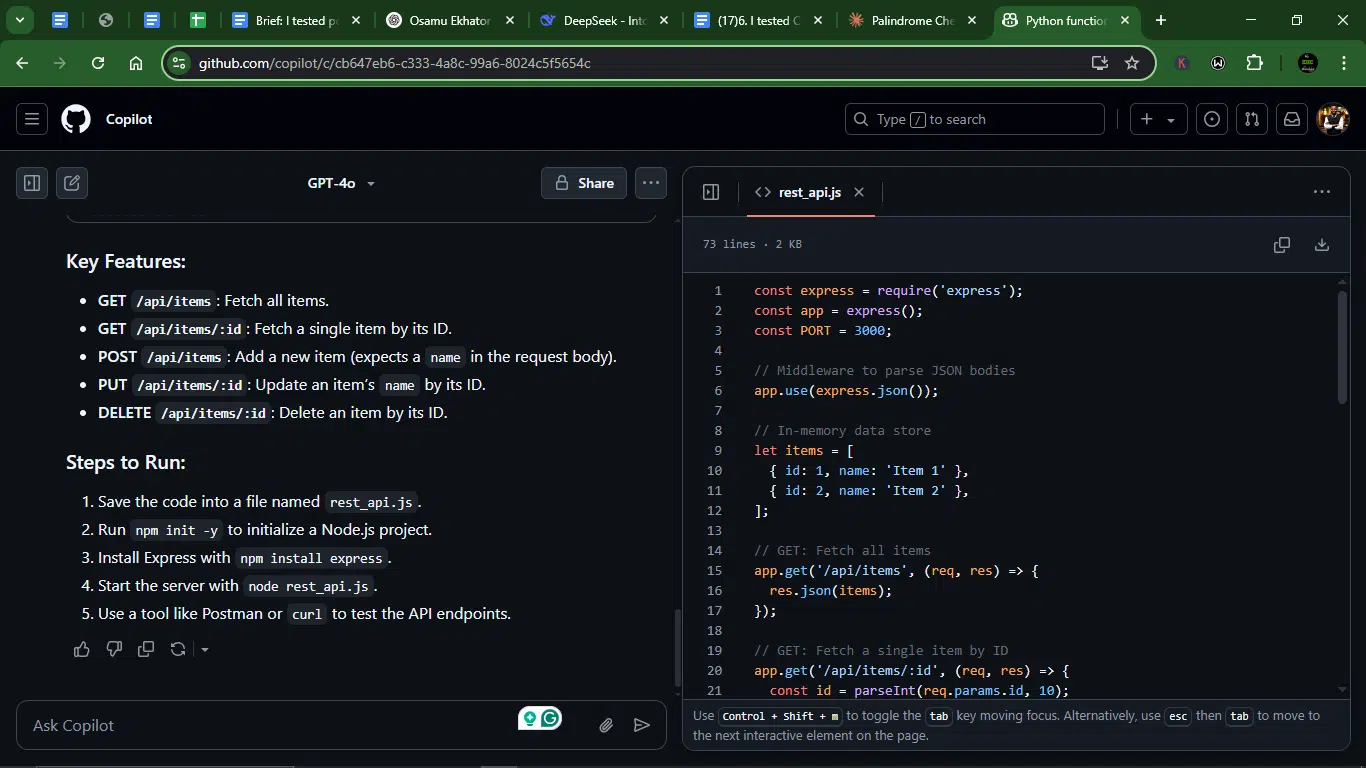

Copilot’s response:

- Accuracy: Both provide working CRUD APIs with proper route handling (GET, POST, PUT, DELETE), error handling (404 for missing resources, 400 for bad requests), and in-memory data storage (arrays). That said, Claude includes extra features (logging middleware, default 404 route handler, error-handling middleware, and package.json setup instructions).

- Debugging and error handling: Claude catches missing name/email in POST, has a centralized error handler, and uses res.status() consistently. Copilot, on the other hand, offers basic error checks but no global error handling.

- Clarity and documentation: Claude gives detailed comments for each section (middleware, routes), includes setup instructions and usage examples, and explains how to test with curl/Postman. Copilot provides clean but minimal comments and offers no setup instructions or usage guide.

- Creativity and adaptability: Claude adds logging middleware for debugging, suggests future improvements (database integration, auth), and asks if you want elaboration. Copilot is straightforward CRUD with no extras.

Winner: Claude.

Copilot’s API is functional but barebones, while Claude delivers a more production-minded starter with teaching moments.

Prompt 4: Write a SQL query

This task was all about precision. What I wanted to see here was if they would go the subquery route, use LIMIT, or fall into one of the many traps that trip up junior devs. And just as important, could they explain their approach clearly?

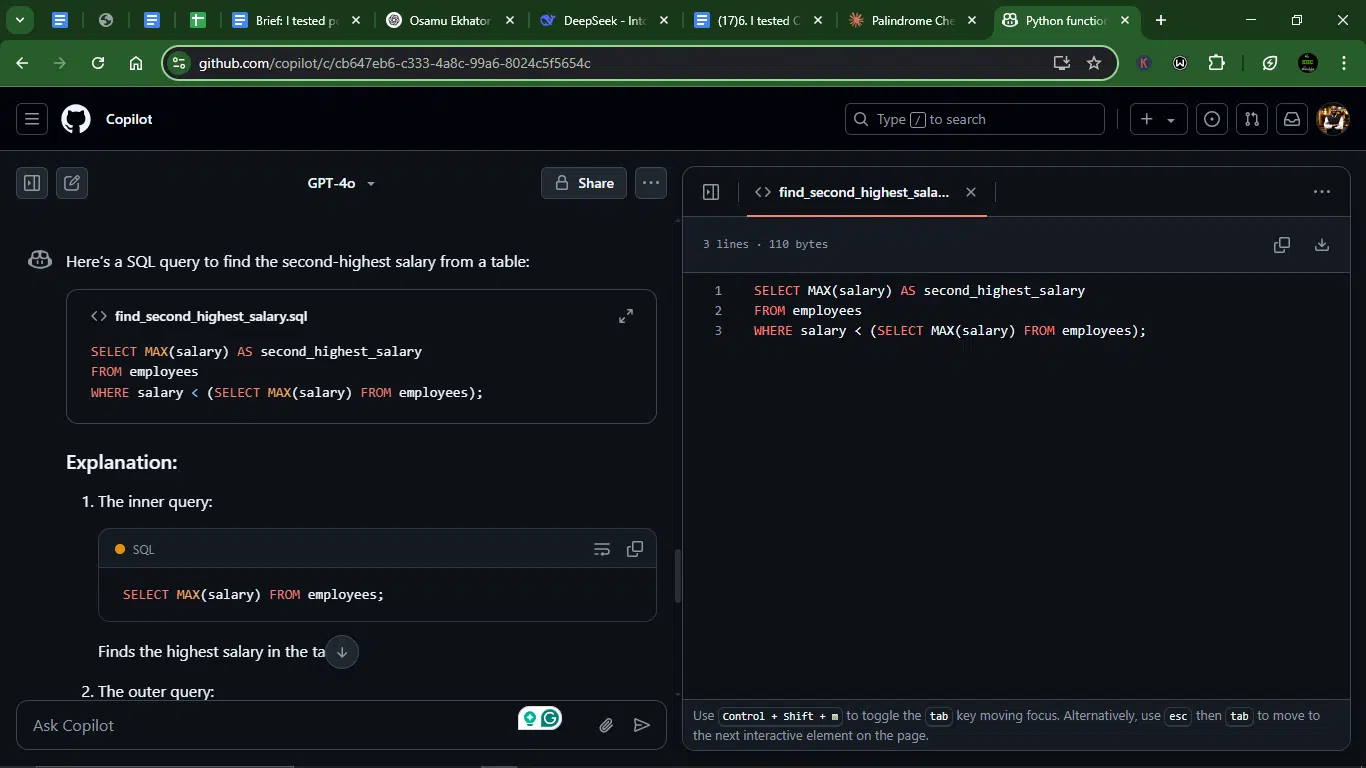

Prompt: Write a SQL query to find the second-highest salary

Result:

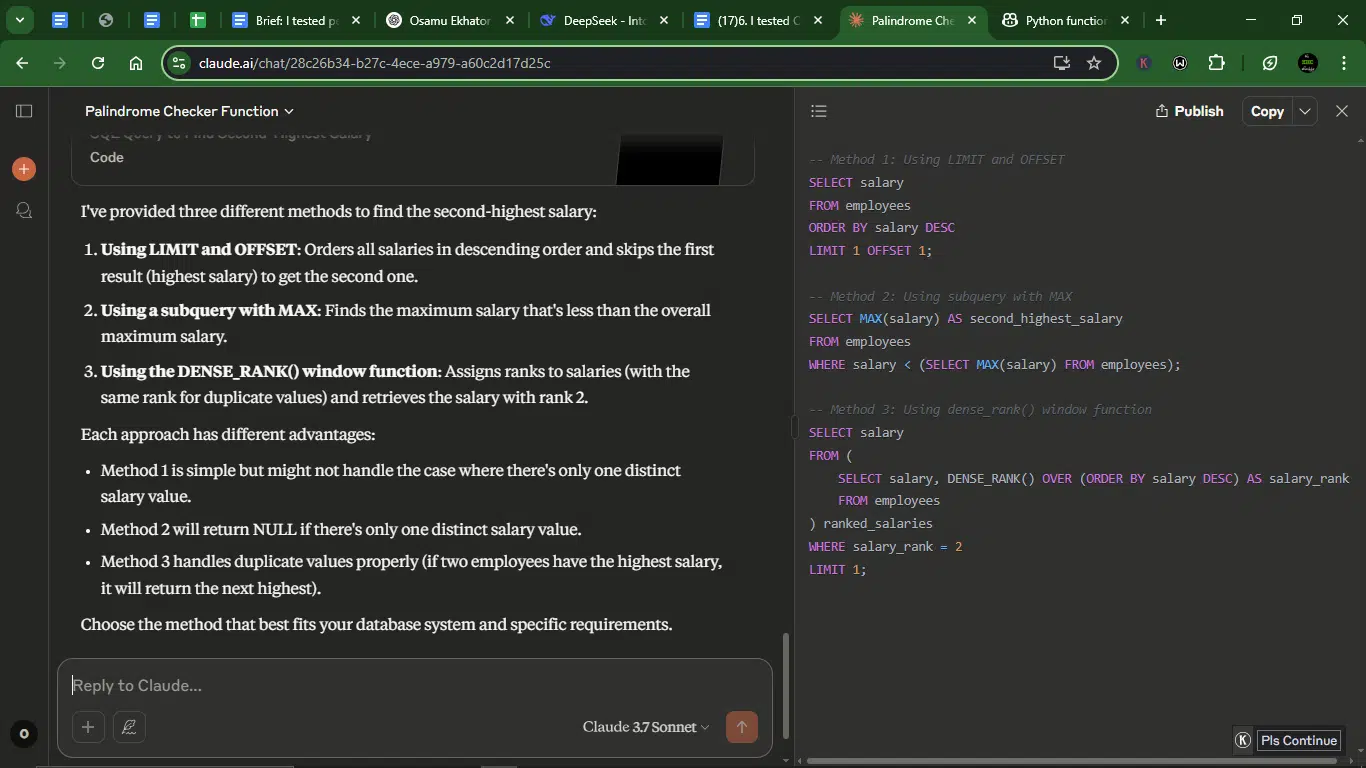

Claude’s response:

Copilot’s response:

- Accuracy: Both provide correct solutions (subquery with MAX, LIMIT/OFFSET). But Claude includes three methods: LIMIT/OFFSET, Subquery with MAX, and DENSE_RANK() (handles ties better). Copilot covers two methods (MAX subquery, LIMIT/OFFSET).

- Debugging and error handling: Claude explains edge cases, such as what happens if there’s only one salary (DENSE_RANK handles it best) and how duplicates affect results. Copilot mentions DISTINCT but doesn’t discuss edge cases.

- Clarity and documentation: Claude labels each method clearly and explains when to use which approach. Copilot provides concise explanations but is less structured.

- Creativity and adaptability: Claude offers DENSE_RANK(), a robust solution for ties. Copilot sticks to standard approaches.

Winner: Claude.

Copilot’s answer is correct and clear, but Claude goes deeper with edge cases and advanced techniques.
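To show why ties matter here, below is a small sketch that runs the three approaches against an in-memory SQLite database using Python’s built-in `sqlite3` module. The `employees` table, its columns, and the sample rows are all my own assumptions, and these queries are illustrative rather than either tool’s exact output. The `DENSE_RANK()` variant assumes a SQLite build with window-function support (3.25 or newer).

```python
import sqlite3

# Hypothetical sample data: two employees tie for the top salary.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?)",
    [("Ada", 300), ("Ben", 300), ("Cy", 200), ("Di", 100)],
)

QUERIES = {
    # The trap: without DISTINCT, the tie at the top is returned again.
    "naive_limit_offset": """
        SELECT salary FROM employees
        ORDER BY salary DESC LIMIT 1 OFFSET 1
    """,
    "limit_offset": """
        SELECT DISTINCT salary FROM employees
        ORDER BY salary DESC LIMIT 1 OFFSET 1
    """,
    "max_subquery": """
        SELECT MAX(salary) FROM employees
        WHERE salary < (SELECT MAX(salary) FROM employees)
    """,
    "dense_rank": """
        SELECT DISTINCT salary FROM (
            SELECT salary,
                   DENSE_RANK() OVER (ORDER BY salary DESC) AS rnk
            FROM employees
        ) WHERE rnk = 2
    """,
}


def second_highest(query_name):
    """Run one of the queries above; return the salary, or None if no row."""
    row = conn.execute(QUERIES[query_name]).fetchone()
    return row[0] if row else None


for name in QUERIES:
    print(name, second_highest(name))
```

With this sample data, the naive query returns 300 (the duplicate top salary), while the other three return 200.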

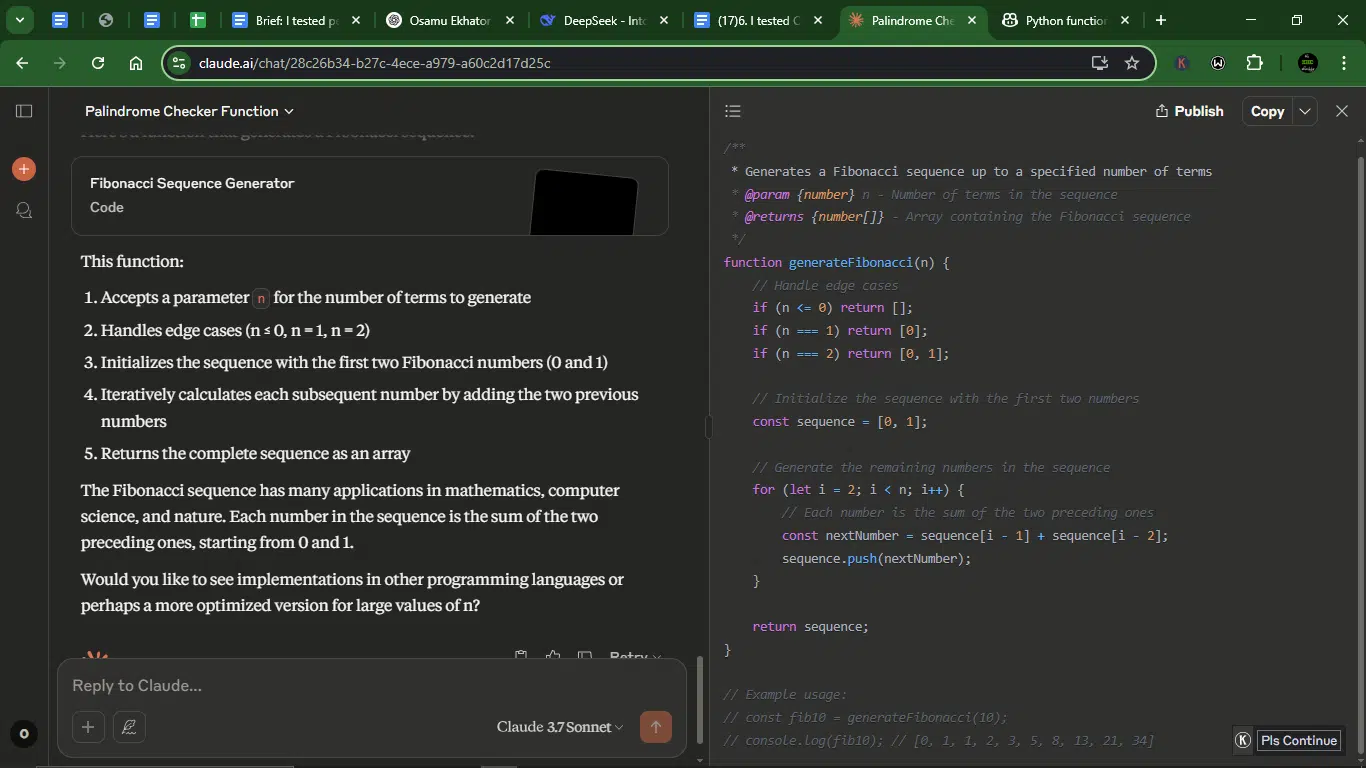

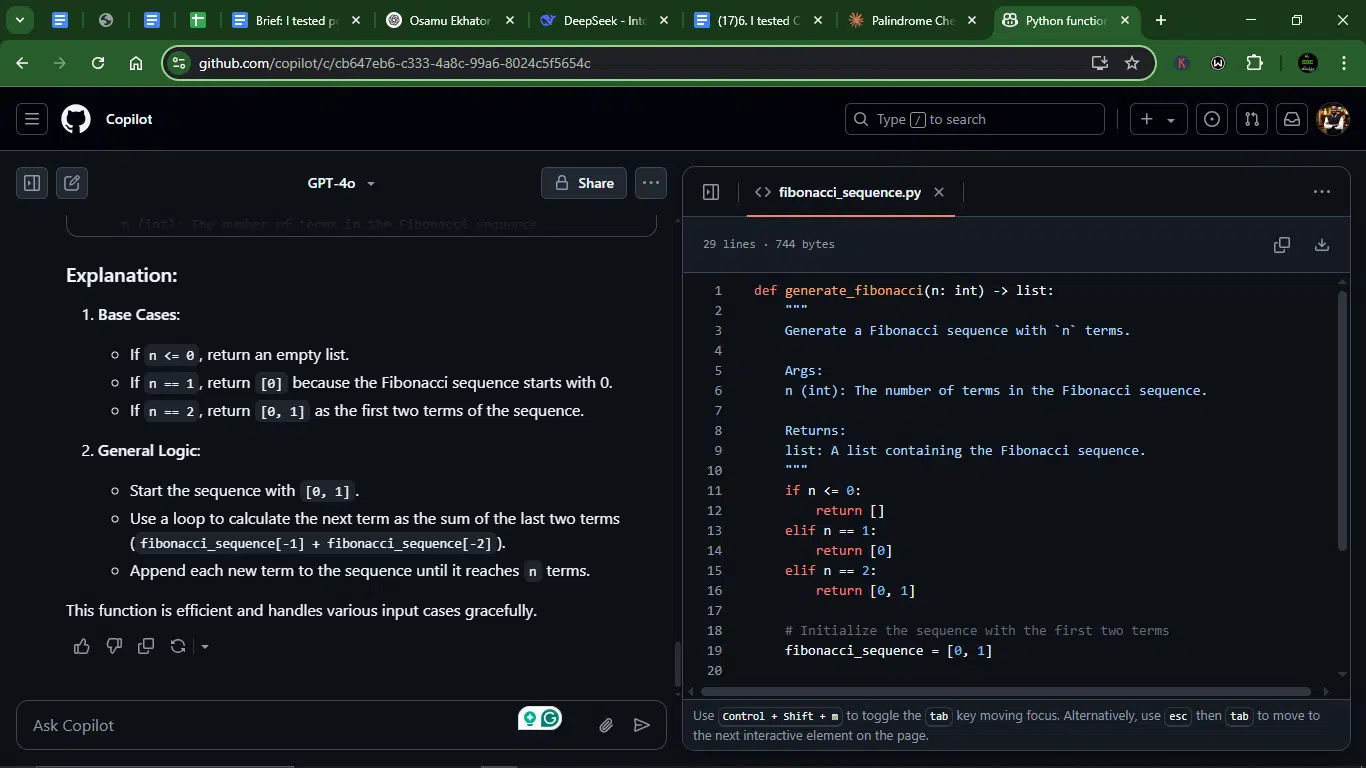

Prompt 5: Create a function that generates a Fibonacci sequence

This one was a test of logic and loops, something every dev bumps into sooner or later. This prompt is a good way to check how cleanly and efficiently each tool writes iterative or recursive logic.

Prompt: Create a function that generates a Fibonacci sequence.

Result:

Claude’s response:

Copilot’s response:

- Accuracy: Both provide correct implementations with edge-case handling (n ≤ 0, n = 1, n = 2) and iterative generation (efficient for moderate n). Claude uses JavaScript; Copilot uses Python.

- Debugging and error handling: Claude explicitly checks for n <= 0 and returns an empty array for invalid input. Copilot performs similar edge-case handling but lacks type hints in JavaScript.

- Clarity and documentation: Claude’s JSDoc comments explain the params/return value, and the response includes example usage in comments. Copilot’s Python docstring is clean and standardized, and explains base cases and logic separately.

- Creativity and adaptability: Claude offers to extend (other languages, optimization) and mentions real-world applications. Copilot focuses on one clear solution.

Winner: Copilot.

Copilot wins on documentation and clarity (thanks to Python’s conventions).
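For reference, here’s a minimal iterative sketch in Python of the kind of function this prompt asks for, with the edge cases mentioned above handled up front. It’s my own illustration, not Copilot’s or Claude’s exact output; the name `fibonacci`, the choice to start the sequence at 0, and the type hints are assumptions on my part.

```python
def fibonacci(n: int) -> list[int]:
    """Return the first n Fibonacci numbers, starting from 0.

    Returns an empty list when n is zero or negative.
    """
    if n <= 0:
        return []
    # Seed with up to two base values: [0] for n == 1, [0, 1] otherwise.
    sequence = [0, 1][:n]
    # Build the rest iteratively: each term is the sum of the previous two.
    while len(sequence) < n:
        sequence.append(sequence[-1] + sequence[-2])
    return sequence


print(fibonacci(10))  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]
```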

Overall performance comparison: Claude vs. Copilot (5-prompt battle)

| Prompt | Winner | Reason |
| --- | --- | --- |
| 1. Palindrome Checker | Claude | Stronger explanations and a flexible approach to logic. |
| 2. Debugging JavaScript Loop | Claude | Offered 3 solutions and dug deeper into the root of the problem. |
| 3. Build a REST API | Claude | More production-ready, with thoughtful structure and extras. |
| 4. SQL Query (Second-Highest) | Claude | Handled edge cases and used DENSE_RANK for accuracy. |
| 5. Fibonacci Sequence | Copilot | Prioritized documentation and clarity (thanks to Python’s conventions). |

Overall champion: Claude (wins 4 out of 5 prompts).

Final verdict

Claude dominated this test round based on how well it explained its logic, handled edge cases, and adapted to real-world needs. GitHub Copilot, on the other hand, is still a solid ally for fast coding. It’s sharp when it comes to brevity, and its tight IDE integration makes it ideal for devs who want to get in, code, and ship.

My recommendation

- Use GitHub Copilot when you want clean, fast code snippets baked directly into your IDE workflow.

- Use Claude when you want to understand what’s going on or when the problem gets complex and you need an AI that thinks before it types.

Pricing for Claude and Copilot

When it comes to pricing, both Claude and Copilot offer flexible options, though their cost structures and features vary. Here’s a breakdown of their pricing tiers:

Claude pricing

| Plan | Features | Cost |
| --- | --- | --- |
| Free | Access to the latest Claude model, use on web, iOS, and Android, ask about images and documents | $0/month |
| Pro | Everything in Free, plus more usage, organized chats and documents with Projects, access to additional Claude models (Claude 3.7 Sonnet), and early access to new features | $18/month (yearly) or $20/month (monthly) |
| Team | Everything in Pro, plus more usage, centralized billing, early access to collaboration features, and a minimum of five users | $25/user/month (yearly) or $30/user/month (monthly) |
| Enterprise | Everything in Team, plus expanded context window, SSO, domain capture, role-based access, fine-grained permissioning, SCIM for cross-domain identity management, and audit logs | Custom pricing |

Copilot pricing

| Plan | Price | Features |
| --- | --- | --- |
| Free | $0 | Up to 2,000 code completions per month; up to 50 chat requests per month; access to models like Claude 3.5 Sonnet and GPT-4o; ideal for occasional users and small projects |
| Pro | $10/month or $100/year | Unlimited code completions and chat interactions; access to advanced models including Claude 3.7 Sonnet, o1-preview, and GPT-4o; 6x more premium requests compared to the Free tier; free for verified students, teachers, and maintainers of popular open-source projects |
| Pro+ | $39/month or $390/year | All features of Pro; access to all available models, including GPT-4.5; 30x more premium requests compared to the Free tier; designed for users requiring maximum flexibility and model choice |
| Business | $19/user/month USD | Unlimited code completions and chat interactions; access to models like Claude 3.5/3.7 Sonnet, o1-preview, and GPT-4o; 300 premium requests per user per month; user management and usage metrics; IP indemnity and data privacy features; suitable for organizations aiming to enhance engineering velocity and code quality |
| Enterprise | $39/user/month USD | All features of Business; access to all models, including GPT-4.5; 3.33x more premium requests compared to the Business tier; advanced customization options for organizational needs; best suited for enterprises requiring scalable AI solutions and comprehensive model access |

Why do Claude and Copilot matter to coders?

Here’s why AI tools like Claude and Copilot are crucial to developers today:

1. They reduce grunt work significantly

No more reinventing the wheel every time you need to write a sorting algorithm or spin up a REST API. Claude and Copilot can generate boilerplate code, setup configs, test scripts, and more, all based on a single prompt. That’s hours shaved off your dev time, instantly.

2. They help you learn as you go

Claude, in particular, doesn’t just dump code. It explains why the code works. If you’re a beginner or someone switching tech stacks, this built-in tutor vibe is invaluable. It’s like having a senior engineer sitting beside you, patiently walking you through every decision.

3. They help you debug faster

Got a broken loop or a nasty exception? These tools can sniff out bugs, suggest fixes, and even rewrite the problematic code. Copilot shines with quick inline suggestions inside your IDE, while Claude offers deeper insight when you paste entire blocks for analysis.

4. They improve code quality

Both Claude and Copilot have learned from large amounts of existing code. That means the code they generate is often cleaner and more consistent than what you might write under time pressure. Claude even goes a step further by offering proper documentation and edge-case handling in many cases.

5. They boost productivity in real-world projects

Whether you’re freelancing, building a side project, or deep in enterprise code, these tools can unblock you, provide quick wins, and speed up everything from ideation to deployment. Copilot’s tight IDE integration makes it feel like part of your keyboard, while Claude handles deep-dive tasks like understanding codebases and writing long-form documentation.

6. They reduce context-switching

Instead of jumping between tabs to Google error messages, browse documentation, or search GitHub, you can just ask your AI assistant. That’s less friction, fewer interruptions, and more time coding.

7. They adapt to your coding style

Over time, especially with Copilot, the suggestions start to feel more personal, like the tool is learning how you code. Claude, with its large context window, can maintain consistent style and logic across longer projects too.

Conclusion

AI models like Claude and Copilot aren’t just novelties anymore; they’re serious productivity boosters. And in my head-to-head test, Claude came out on top as the best AI coding tool for thoughtful code generation, explanation, and creativity.

But Copilot still deserves a spot in your toolkit, especially for day-to-day auto-completion inside your IDE.

Whether you’re debugging at 2 AM or bootstrapping an API from scratch, these tools can save hours. Just don’t forget to think critically about their outputs: they’re assistants, not oracles.

FAQs about Claude vs Copilot

What’s the best AI model for code generation?

From what I’ve seen, Claude consistently generates cleaner, more thoughtful code. It also explains its reasoning, which makes it especially helpful when you’re working with edge cases or complex logic.

Is GitHub Copilot good for beginners?

It’s fast and convenient, especially inside your IDE. But it’s not much of a teacher. If you’re just starting out, Claude might be the better pick because it tells you why the code works, not just what to write.

Does Claude work inside IDEs?

Not directly. You’ll have to copy-paste your code into Claude’s chat interface for now. It’s not ideal for tight development loops, but great for when you need structured thinking, long-form documentation, or step-by-step debugging help.

Which AI tool is better for debugging?

Claude, hands down. It doesn’t just spit out fixes; it walks you through the logic, pinpoints where things went wrong, and often gives multiple solutions. Copilot will silently patch things up, but that’s not always helpful if you’re trying to learn.

Can I use both tools together?

Absolutely, and honestly, that’s where the magic happens. Use Copilot inside your IDE for lightning-fast autocompletions and boilerplate code. Then switch to Claude when you hit a wall, want to clean things up, or need help understanding a gnarly function. Think of Copilot as your speed booster, and Claude as your calm, wise coding tutor.

Disclaimer!

This publication, review, or article (“Content”) is based on our independent evaluation and is subjective, reflecting our opinions, which may differ from others’ perspectives or experiences. We do not guarantee the accuracy or completeness of the Content and disclaim responsibility for any errors or omissions it may contain.

The information provided is not investment advice and should not be treated as such, as products or services may change after publication. By engaging with our Content, you acknowledge its subjective nature and agree not to hold us liable for any losses or damages arising from your reliance on the information provided.

Always conduct your own research and consult professionals where necessary.