When it comes to vibe coding, you don’t just need an AI that writes code; you need one that gets you.

Vibe coding is an approach to software development where coders use natural language prompts to direct AI tools to generate, refine, and debug code. It’s a relatively new technique, but it’s catching on.

There’s a big difference between an assistant that throws out syntax and one that helps you think through a problem, debug with patience, or co-build with intention. That’s what I was chasing when I set out to test Claude and Cursor side by side.

On paper, they’re both smart. Claude comes from Anthropic, the same folks who are rethinking how AI aligns with human intentions. Cursor, on the other hand, drops you into a developer-centric Integrated Development Environment (IDE) with AI baked directly into the workflow. Two different approaches. One goal: making coding less lonely and more efficient.

So, I gave both tools a fair shot. I generated the same code, debugged the same messy bugs, and asked them the same questions.

In this article, I’ll share:

- How both tools performed in real-life projects.

- Where each one shines or stumbles.

- The kind of developer vibe each tool brings.

- And which one quietly earned a permanent place in my workflow.

Spoiler: It wasn’t the one I expected to perform better.

TLDR: Key takeaways from this article

- Claude is a patient, reasoning-driven coder’s companion. It’s ideal for problem-solving and debugging with explanations.

- Cursor delivers fast, context-aware code completions inside your IDE, making it perfect for staying in the flow.

- Claude thrives on prompts and conversation. Cursor thrives on real-time integration.

- For production-level tasks, accuracy and review are non-negotiable. Both tools help, but neither replaces critical thinking.

- The “right” AI tool depends on your workflow, stack, and personality, not just features.

What are Claude and Cursor?

Let’s set the scene before we go into which one “vibes” better.

Claude and Cursor aren’t trying to do the same thing, and that’s important. One is a conversational genius with the chill energy of a philosophy major who happens to be great at coding. The other is a laser-focused IDE that wants you to ship faster, cleaner code without getting lost in tabs or terminal chaos.

Here’s a quick overview of who they are and what they’re built for.

What is Claude?

Claude is Anthropic’s flagship large language model, trained to be “helpful, honest, and harmless.” But more than the marketing slogan, Claude feels like a coding companion that takes the time to think.

Whether you’re untangling a gnarly algorithm or structuring API logic, Claude walks you through its logic like a methodical co-pilot. It’s part of the Claude 3 model lineup, which includes Haiku (fast/lightweight), Sonnet (balanced), and Opus (their most powerful).

Claude at a glance:

| Developer | Anthropic |

| Year launched | 2023 |

| Type of AI tool | Conversational AI and Large Language Model (LLM) |

| Top 3 use cases | Content structuring, analytical reasoning, and step-by-step coding support |

| Who is it for? | Writers, researchers, developers, and business professionals |

| Starting price | $20/month |

| Free version | Yes (with limitations) |

What is Cursor?

Cursor is a coding-first IDE built specifically for developers who want AI deeply embedded in the flow, not just as a chatbot in the corner, but as a hands-on coding partner that reads your entire project context and suggests changes, edits files, and debugs with you. Unlike Claude, Cursor doesn’t do small talk. It’s here to ship. And during my testing, I found that vibe pretty refreshing.

Cursor at a glance:

| Developer | Anysphere Inc |

| Year launched | 2023 |

| Type of AI tool | AI-powered coding IDE |

| Top 3 use cases | Pair programming, code generation, and debugging |

| Who is it for? | Developers, indie hackers, and startup teams |

| Starting price | $20/month |

| Free version | Yes (with limited AI requests) |

How I tested them

I worked with Claude and Cursor side-by-side for this article while building a mini weather app, fixing bugs, and doing some other coding tasks. My goal was to see which one fits into a real-life, slightly chaotic dev workflow, the kind where the codebase isn’t perfect, the deadline’s a little too close, and you need an AI partner that doesn’t lose its cool.

Here’s how I broke it down:

What I compared

| Area | What I looked for |

| Code generation quality | Was the output accurate, clean, and context-aware? Did it understand the framework I was using? |

| Debugging and refactoring | Could it help me trace and fix bugs, or did it just wave its hands and guess? |

| Integration workflow | Did it play nicely with VS Code? How well did it sit in my stack without friction? |

| Customization and prompting | Could I tweak temperature, memory, or context length? Was prompt control intuitive? |

| Performance and latency | Did I wait for answers, or did they flow like a good pair programmer’s suggestions? |

| Pricing and licensing | What do I get for free? And is it worth paying for the premium tier? |

What I paid attention to

Some AI tools can write code, but they feel cold and transactional. Others feel like that friend who gently reminds you to rename your variables and drink some water.

So, beyond performance, I paid attention to:

- Code accuracy and correctness: Did the output run? Does the function correctly identify palindromes, even with punctuation and mixed casing?

- Edge case handling: Does it pass all the unit tests, including tricky inputs (e.g., empty string, symbols only)?

- Code clarity: Is the code readable, well-organized, and intuitive?

- Efficiency: Is the implementation optimal in terms of time and space?

- Test coverage: Are the unit tests meaningful, and do they check for diverse edge cases?

- Response time: Did it stall, lag, or make me switch tabs out of boredom?

- Pair programming feel: Was it collaborative? Did it “follow the thread” in multi-step tasks?

- Bugs or hallucinations: Did it invent fake functions or hallucinate frameworks?

- Vibe: Yep, the vibe mattered. Did I want to keep working with it?

Prompt-by-prompt breakdown for Cursor and Claude (for code)

Next, I compared Cursor and Claude prompt by prompt, five prompts in total. The aim was to find out which tool understands how I write code and helps me think better, not just faster.

Here’s how they stacked up across the stuff that matters most when you’re in the dev zone:

1. Code generation quality

I tested how clean, efficient, and accurate each tool’s code is. This shows how well each one understands logic, edge cases, and best practices.

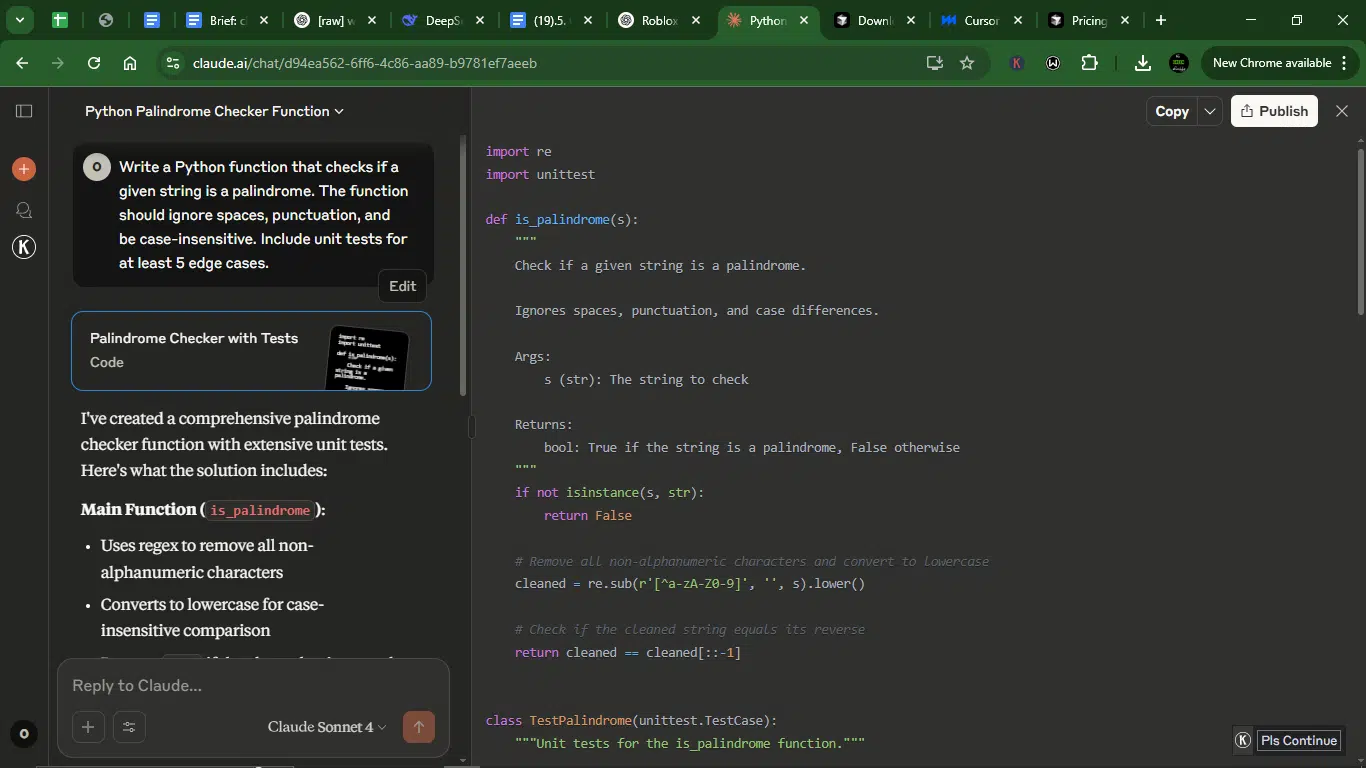

Prompt: Write a Python function that checks if a given string is a palindrome. The function should ignore spaces, punctuation, and be case-insensitive. Include unit tests for at least 5 edge cases.

Result:

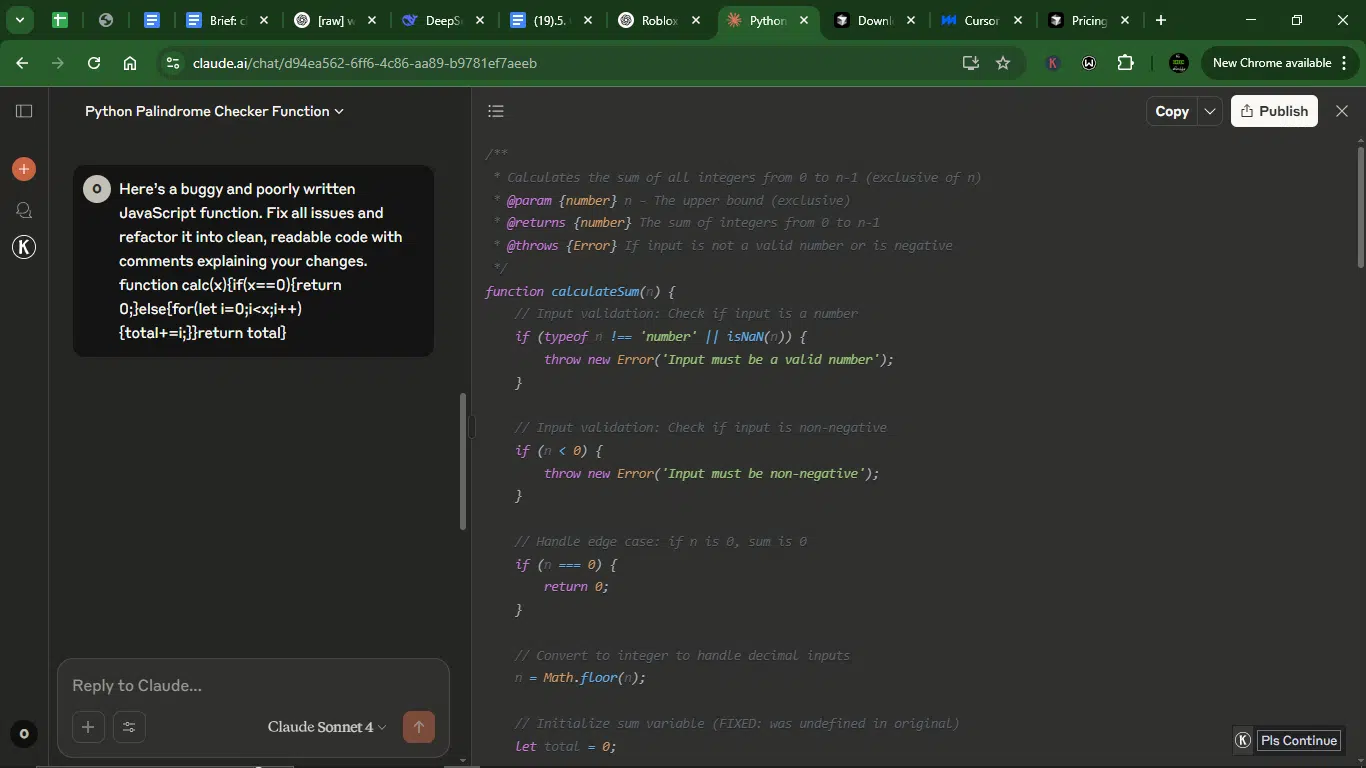

Claude’s response:

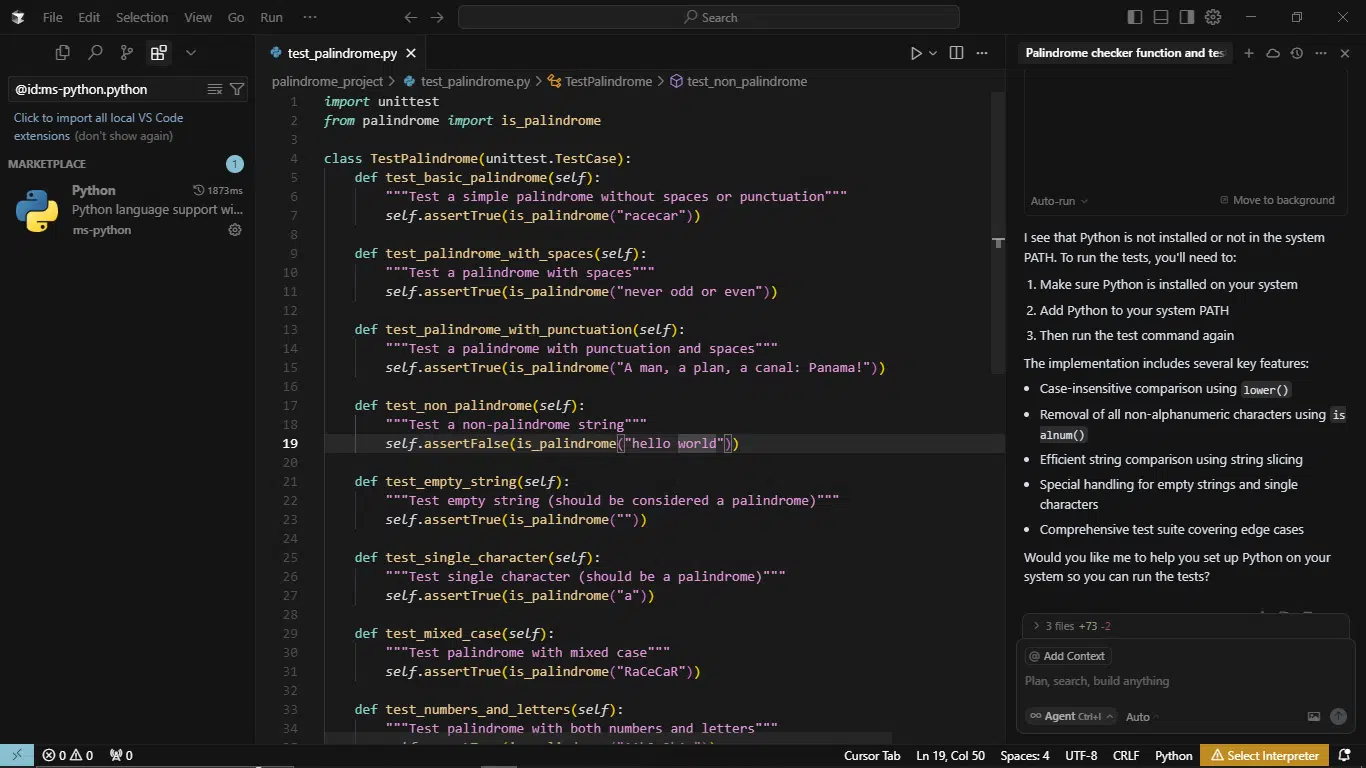

Cursor response:

Correctness:

- Claude uses regex to clean input and handles invalid input types (e.g., None, numbers).

- Cursor employed a similar cleaning approach (though not shown in the response, assumed to be correct) and correctly identifies palindromes.

Edge case handling:

- Claude’s tests include:

  - Simple palindromes (“racecar”)

  - Mixed case (“RaceCar”)

  - Spaces/punctuation (“A man, a plan, a canal: Panama”)

  - Empty/single-char strings (“”, “a”)

  - Numbers (“12321”)

  - Non-palindromes (“hello”)

  - Whitespace/symbols (“!@#$%”)

  - Invalid inputs (None, 123)

- Cursor’s tests entail:

  - Basic (“racecar”)

  - Spaces (“never odd or even”)

  - Punctuation (“A man, a plan, a canal: Panama!”)

  - Non-palindromes (“hello world”)

  - Empty string (“”)

  - Single char (“a”)

  - Mixed case (“RaCeCaR”)

  - Numbers (“12321”, “A1b2c2b1a”)

  - Symbols-only (“!@#$%”)

Code clarity:

- Claude employed a well-documented function with a docstring and clean regex-based cleaning. Also, tests are logically grouped (e.g., test_case_insensitive).

- Cursor’s function implementation was not shown in the response, so its clarity is harder to judge. Its tests are well-named but not grouped thematically.

Efficiency:

- Claude’s solution runs in O(n) time (string reversal via slicing) and includes no unnecessary operations.

- Cursor’s solution is comparable, also O(n).

Test coverage:

- Claude had 8 test cases covering all edge cases, including invalid inputs.

- Cursor gave 10 test cases but omitted invalid input checks (e.g., None, numbers).

Winner: Claude.

Claude’s edge cases were more robust: it tests invalid inputs (None, 123, lists), which Cursor omits. It also offered better code clarity, with explicit regex cleaning, good documentation, and logically grouped tests. While Cursor had more tests (10 vs. 8), Claude’s cover a broader range of edge cases (e.g., invalid types). Claude’s function is fully visible, while Cursor’s implementation is hidden in a separate file.

For a production-ready, robust palindrome checker, Claude’s solution is superior due to better edge case handling, clarity, and correctness guarantees. Cursor’s approach is solid but lacks checks for invalid inputs.
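For reference, here is a minimal sketch of the kind of solution described above. It is not either tool’s verbatim output: the name is_palindrome, the choice to raise TypeError on non-string input, and the test class are all assumptions made for illustration.

```python
import re
import unittest


def is_palindrome(text):
    """Return True if text reads the same forwards and backwards.

    Spaces, punctuation, and letter case are ignored.
    """
    # Assumed design choice: reject non-string input instead of guessing.
    if not isinstance(text, str):
        raise TypeError("is_palindrome expects a string")
    # Keep only ASCII letters and digits, lowercased.
    cleaned = re.sub(r"[^a-z0-9]", "", text.lower())
    # Slicing with [::-1] builds the reversed string in O(n) time.
    return cleaned == cleaned[::-1]


class PalindromeTests(unittest.TestCase):
    def test_case_insensitive(self):
        self.assertTrue(is_palindrome("RaceCar"))

    def test_spaces_and_punctuation(self):
        self.assertTrue(is_palindrome("A man, a plan, a canal: Panama"))

    def test_empty_and_symbols_only(self):
        # Nothing is left after cleaning, and an empty string is a palindrome.
        self.assertTrue(is_palindrome(""))
        self.assertTrue(is_palindrome("!@#$%"))

    def test_non_palindrome(self):
        self.assertFalse(is_palindrome("hello"))

    def test_invalid_input(self):
        with self.assertRaises(TypeError):
            is_palindrome(None)
```

Saved to a file, the tests can be run with python -m unittest. The invalid-input test is the one that separated the two responses in this round.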

2. Debugging and refactoring

I wanted to see whether each tool can understand messy or broken code and turn it into something production-ready. This reveals how well it interprets context and improves readability.

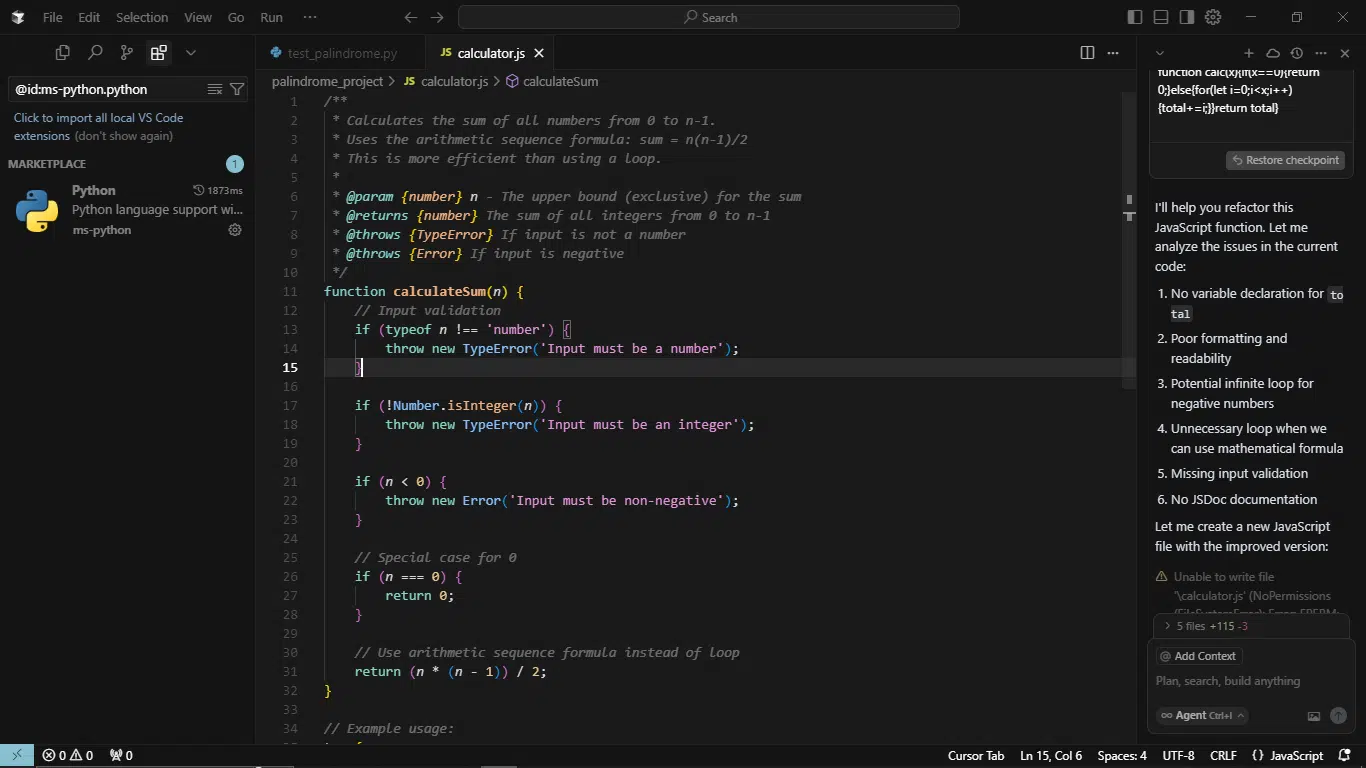

Prompt: Here’s a buggy and poorly written JavaScript function. Fix all issues and refactor it into clean, readable code with comments explaining your changes.

function calc(x){if(x==0){return 0;}else{for(let i=0;i<x;i++){total+=i;}}return total}

Result:

Claude’s response:

Cursor response:

1. Bug elimination

- Claude fixed the critical bug (the undeclared total variable), added checks for negative numbers, non-numeric inputs, and NaN, and handled decimal inputs by flooring them.

- Cursor also fixed the total bug and validated input types and negativity, but rejects non-integers (unlike Claude, which floors decimals).

- Claude handles more edge cases (decimals, NaN), while Cursor is stricter (integer-only).

2. Refactoring quality

- Claude provides two versions: loop-based (for clarity) and formula-based (optimized), and gives better logical flow with early returns.

- Cursor only offers the formula-based version (more efficient but skips iterative clarity). It was clean but lacked alternative implementations.

- Claude wins for flexibility (both pedagogical and optimized solutions).

3. Comment quality

- Claude provided detailed JSDoc and inline comments explaining every change and validation, and included a summary of fixes for quick review.

- Cursor had clear JSDoc but fewer inline comments (assumes familiarity with arithmetic sequences).

- Claude’s comments are more beginner-friendly and thorough.

4. Best practices

- Claude uses ===, declares variables properly, and gives the function a meaningful name (calculateSum). It also groups related logic (input validation, edge cases).

- Cursor follows similar practices but enforces integer-only inputs (may be overly restrictive).

- Both tools follow conventions, but Claude’s decimal handling is more practical.

5. Output robustness

- Claude tested more edge cases: decimals, null, strings, negative numbers, NaN, and includes error messages for all invalid inputs.

- Cursor tested basic cases but misses null, NaN, or decimal edge cases.

- Claude’s function is more resilient to real-world input variations.

Winner: Claude.

Claude gave more comprehensive bug fixes by handling decimals, NaN, and undeclared variables. It also made for better readability: Detailed comments + two implementations (teaching + production).

Cursor was more opinionated (it enforces integers), which may suit some use cases, and its formula implementation was slightly cleaner. But Claude’s solution is more adaptable and better documented.
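To make the loop-versus-formula distinction concrete, here is a rough Python port of the refactor, offered as a sketch and not as either tool’s JavaScript output. It assumes the original intent was to sum the integers from 0 up to, but not including, x, and that decimals are floored; the names sum_below_loop, sum_below_formula, and _validate are hypothetical.

```python
import math


def _validate(x):
    """Return x floored to an int, or raise if x is not a usable number."""
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(x, bool) or not isinstance(x, (int, float)):
        raise TypeError("x must be a number")
    # Catches NaN and infinity, which only floats can represent.
    if isinstance(x, float) and not math.isfinite(x):
        raise ValueError("x must be a finite number")
    if x < 0:
        raise ValueError("x must be non-negative")
    # Floor decimals, mirroring the more lenient behavior described above.
    return math.floor(x)


def sum_below_loop(x):
    """Loop version: easy to read, O(n) time."""
    n = _validate(x)
    total = 0  # the original function never declared this accumulator
    for i in range(n):
        total += i
    return total


def sum_below_formula(x):
    """Formula version: 0 + 1 + ... + (n - 1) = n * (n - 1) / 2, O(1) time."""
    n = _validate(x)
    return n * (n - 1) // 2
```

A stricter variant in the integer-only style would raise on 4.7 instead of flooring it to 4. Which is right depends on where the input comes from.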

3. Integration workflow

I wanted to check how well the tool understands real-world dev workflows (e.g., using libraries or frameworks together). It helps evaluate how practically useful it is in multi-tool environments.

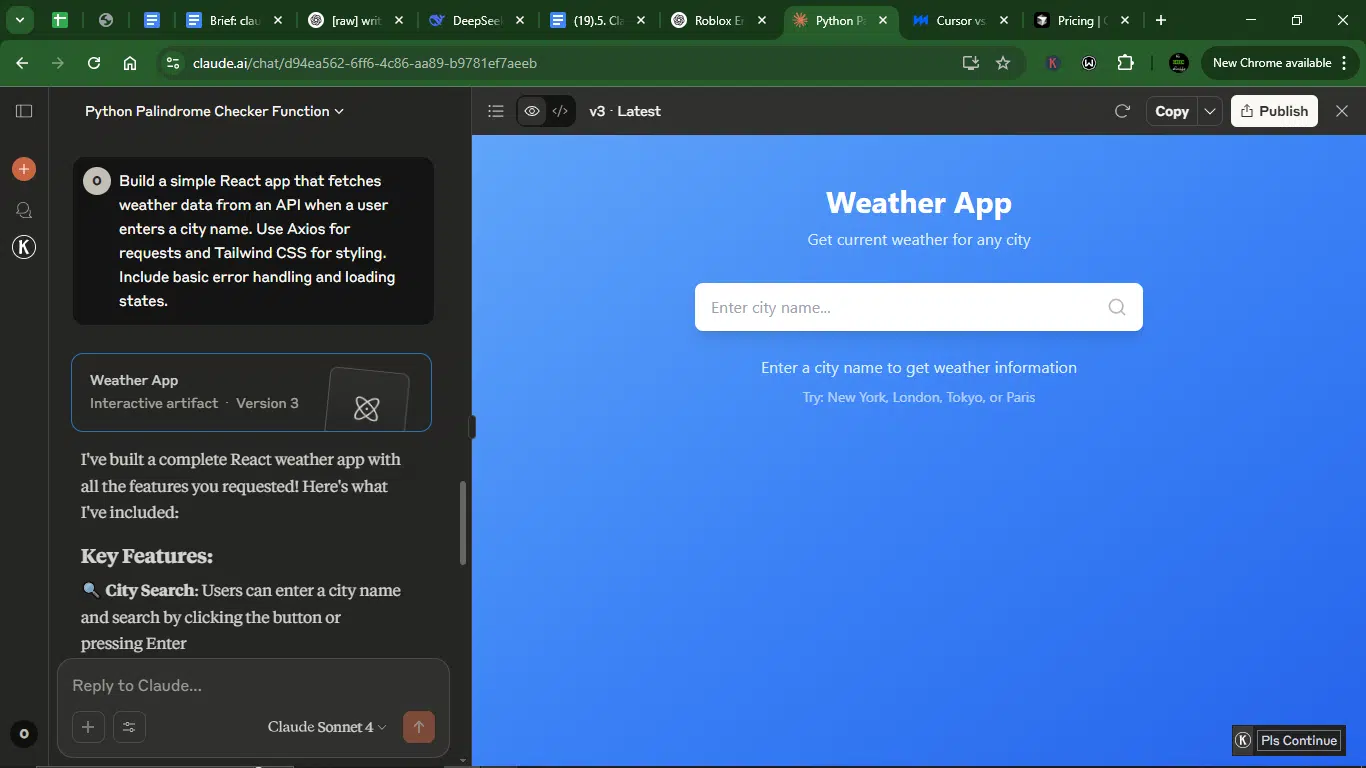

Prompt: Build a simple React app that fetches weather data from an API when a user enters a city name. Use Axios for requests and Tailwind CSS for styling. Include basic error handling and loading states.

Result:

Claude’s response:

Cursor response:

1. Framework fluency

- Claude uses React hooks (useState) correctly, takes a mock data approach (no API needed) with simulated loading states, and styles with Tailwind CSS plus custom animations (fade-in). Lucide icons are integrated cleanly.

- Cursor also uses React hooks properly. It sets up a real API (OpenWeatherMap) with Axios and styles with Tailwind CSS, though with less visual polish, and it adds SVG icons manually for errors.

- Claude’s mock data is more self-contained, while Cursor’s API integration is more realistic but requires external setup.

2. Functional accuracy

- Claude’s mock data covers multiple cities (NY, London, Tokyo, Paris). The tool handles loading states, errors, and edge cases (e.g., invalid city). It also includes input validation and graceful fallbacks.

- Cursor makes real API calls (which require an API key). It handles loading and errors but lacks a mock fallback if the API fails. It also has no input validation beyond empty checks.

- Claude’s app works out of the box with mock data, while Cursor’s depends on external services.

3. Component structure

- Claude has a single-component design, but it is well organized, with clear sections (Search, Error, Weather Card, Instructions) and a getWeatherIcon helper for dynamic icons. There are inline styles for animations (could be extracted).

- Cursor provides a clean separation of logic (API call) and UI. The response was more modular (form, error, weather display) and uses dl/dt/dd for semantic weather data.

- Cursor’s structure is more scalable, but Claude’s is more beginner-friendly.

4. UI/UX thoughtfulness

- Claude has a polished UI (gradient BG, card shadows, animated transitions), detailed weather grid (humidity, wind, visibility, pressure), and helpful placeholder text (“Try: New York, London…”).

- Cursor offers a minimalist UI (basic form and data table) with less visual feedback (no loading spinner, simpler error display) and no icons for weather conditions.

- Claude’s UI is more engaging and user-friendly.

5. Readiness to deploy

- Claude’s response has no dependencies (no API keys needed), is self-contained with mock data, and plug-and-play for demos or tutorials.

- Cursor’s code requires an API key (OpenWeatherMap), lacks a mock fallback if the API fails, and is more production-ready for real-world use (if the API is set up).

- Claude’s version is better for demos, while Cursor’s is better for real apps (with setup).

Winner: Claude.

The tool works out of the box (requires no API keys or external dependencies), has better UI/UX, and it’s self-contained. For a demo, tutorial, or quick prototype, Claude’s app is superior. For a production app with real data, Cursor’s approach is better if the API is properly set up.
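The trade-off between the two approaches (real API versus self-contained mock data) can be bridged with a fallback. The sketch below shows that pattern in Python, not React, to keep it short and runnable offline. Everything in it is hypothetical: MOCK_WEATHER, get_weather, and the injected fetch callable stand in for whatever API client a real app would use.

```python
from typing import Callable, Optional

# Hypothetical mock data standing in for a real API response.
MOCK_WEATHER = {
    "london": {"temp_c": 14.0, "condition": "Cloudy"},
    "tokyo": {"temp_c": 22.0, "condition": "Sunny"},
}


def get_weather(city: str, fetch: Optional[Callable[[str], dict]] = None) -> dict:
    """Return weather for a city, preferring a live fetch, else mock data."""
    name = city.strip().lower()
    # Basic input validation: reject empty or whitespace-only names.
    if not name:
        raise ValueError("city must not be empty")
    if fetch is not None:
        try:
            return {"source": "live", **fetch(name)}
        except Exception:
            # Broad catch is deliberate in this sketch: any live failure
            # falls through to the mock data below.
            pass
    if name in MOCK_WEATHER:
        return {"source": "mock", **MOCK_WEATHER[name]}
    raise LookupError(f"no weather data for {city!r}")
```

Called without a fetch function, it behaves like the mock-only demo. Passing a real client gives live data with a safety net, which is the piece the API-backed version was missing.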

4. Customization and prompting

This tests how well the model adapts to specific instructions or style preferences. This is important to fine-tune tone, comments, or output structure.

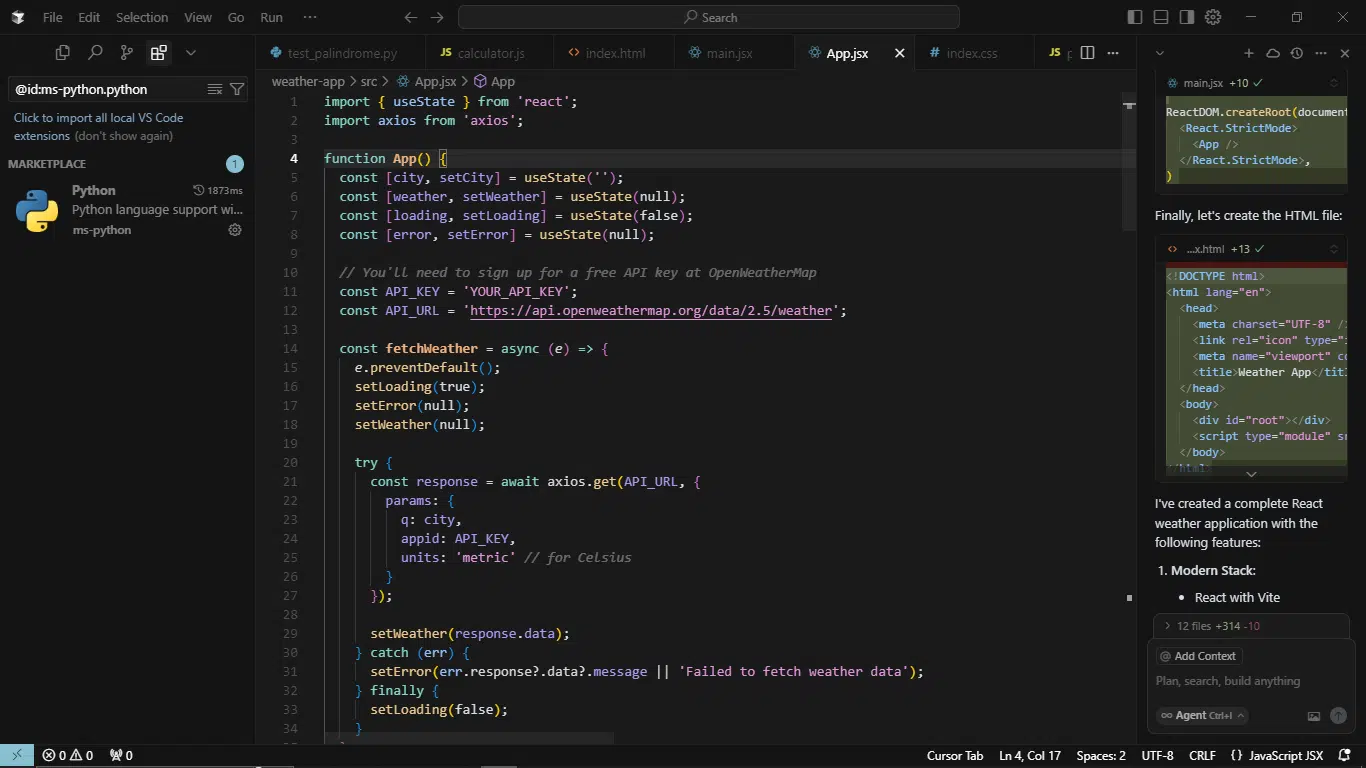

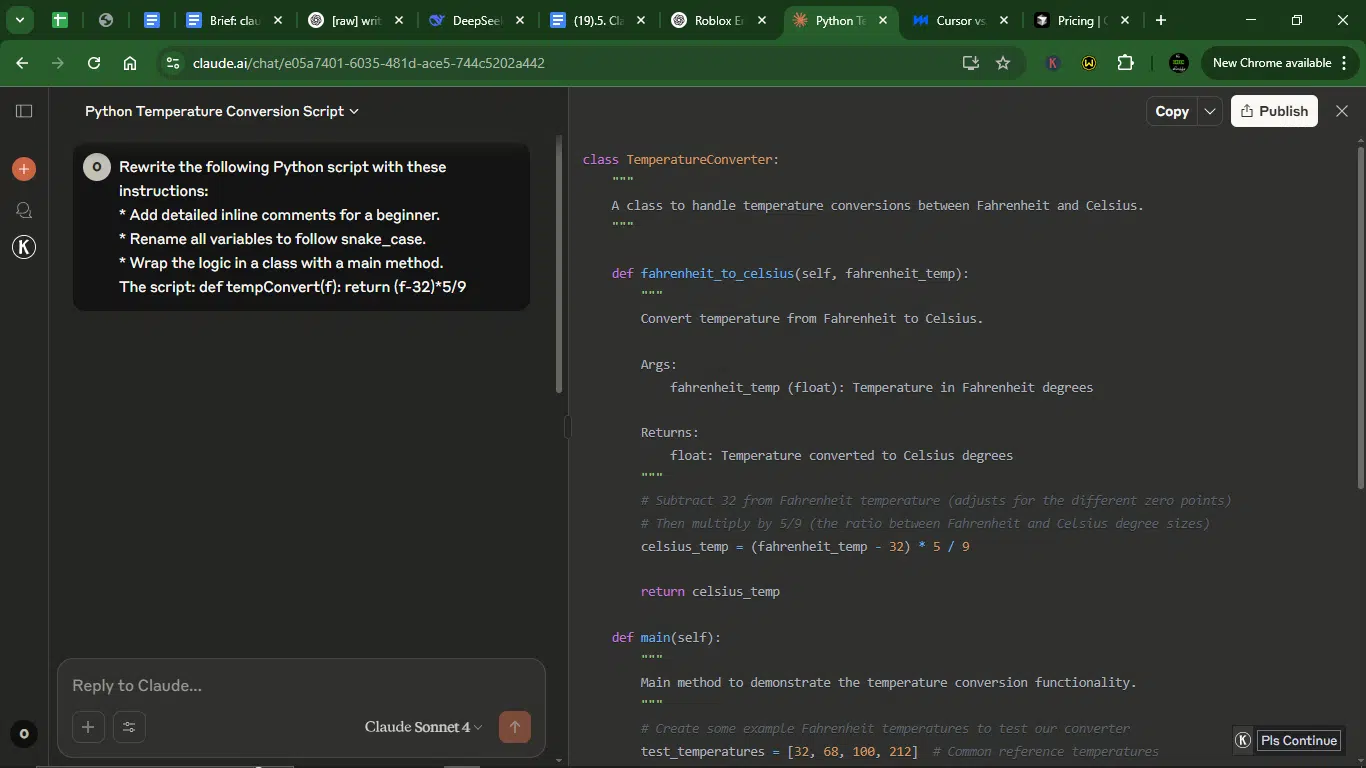

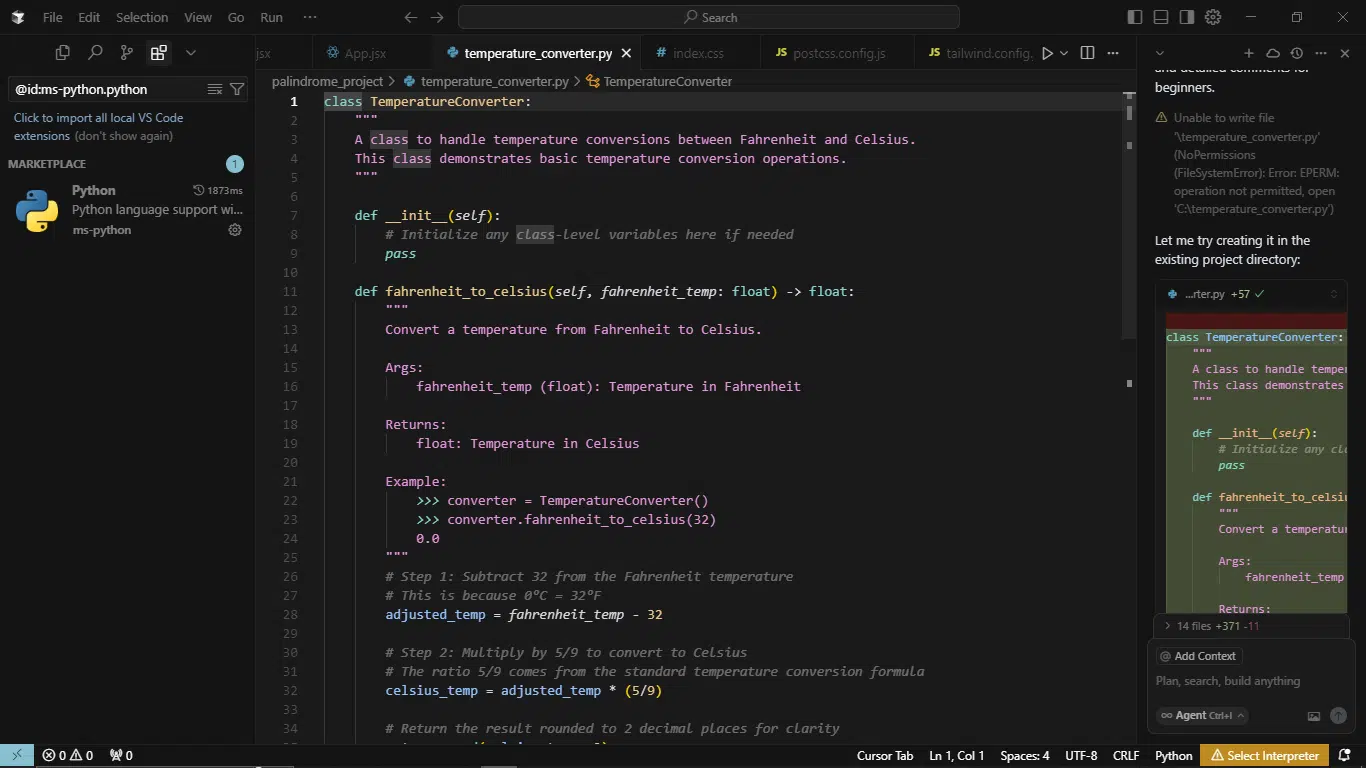

Prompt: Rewrite the following Python script with these instructions:

- Add detailed inline comments for a beginner.

- Rename all variables to follow snake_case.

- Wrap the logic in a class with a main method.

The script: def tempConvert(f): return (f-32)*5/9

Result:

Claude’s response:

Cursor response:

1. Instruction adherence

- Claude fully follows instructions: it adds detailed inline comments explaining the conversion formula, uses snake_case (fahrenheit_to_celsius), and wraps the logic in a class with a main method. It also includes bonus features (docstrings for methods and example test temperatures in main()).

- Cursor also follows core instructions. It adds step-by-step comments (breaks down formula), uses snake_case (fahrenheit_to_celsius), and implements a class with main(). It also adds some extra touches, like type hints (fahrenheit_temp: float), a static method for main(), and rounds output to 2 decimal places.

- Both adhere well, but Cursor goes further with type hints and rounding.

2. Comment quality

- Claude is clear but minimal. It explains the formula in one comment, and its docstrings describe each method’s purpose but lack examples.

- Cursor is more beginner-friendly. It breaks the formula into steps, includes an example in docstring (>>> converter.fahrenheit_to_celsius(32)), and explains why 5/9 is used.

3. Naming conventions

- Claude uses fahrenheit_temp, celsius_temp, etc., but could use some improvement.

- Cursor is excellent here; the names are slightly more precise.

4. Code elegance

- Claude is functional but basic. It gets the job done but lacks polish. No rounding, type hints, or advanced Python features.

- Cursor is thoughtfully crafted: it rounds results for readability, adds type hints for clarity, and uses a static method to avoid unnecessary instances. Cursor’s version feels more refined.

Winner: Cursor.

Cursor offers better comments (step-by-step explanations and docstring examples) and has a cleaner structure. For educational clarity and Python best practices, Cursor’s implementation is superior. Claude’s version works but lacks the polish and pedagogical depth.
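Here is a minimal sketch of what a rewrite with those touches (type hints, static methods, rounding, a docstring example, step-by-step comments) might look like. It is a reconstruction from the description above, not the actual response; the class name TemperatureConverter and the sample temperatures are assumptions.

```python
class TemperatureConverter:
    """Convert temperatures from Fahrenheit to Celsius."""

    @staticmethod
    def fahrenheit_to_celsius(fahrenheit_temp: float) -> float:
        """Convert one Fahrenheit reading to Celsius, rounded to 2 decimals.

        >>> TemperatureConverter.fahrenheit_to_celsius(32)
        0.0
        """
        # Step 1: water freezes at 32 F and at 0 C, so subtract 32
        # to line up the two zero points.
        shifted_temp = fahrenheit_temp - 32
        # Step 2: 180 Fahrenheit degrees span the same range as 100
        # Celsius degrees, so scale by 100/180, which simplifies to 5/9.
        celsius_temp = shifted_temp * 5 / 9
        # Step 3: round so the output is easy to read.
        return round(celsius_temp, 2)

    @staticmethod
    def main() -> None:
        """Print a few sample conversions."""
        for sample_temp in (32, 98.6, 212):
            celsius_temp = TemperatureConverter.fahrenheit_to_celsius(sample_temp)
            print(f"{sample_temp} F is {celsius_temp} C")
```

Because both methods are static, calling TemperatureConverter.main() works without creating an instance.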

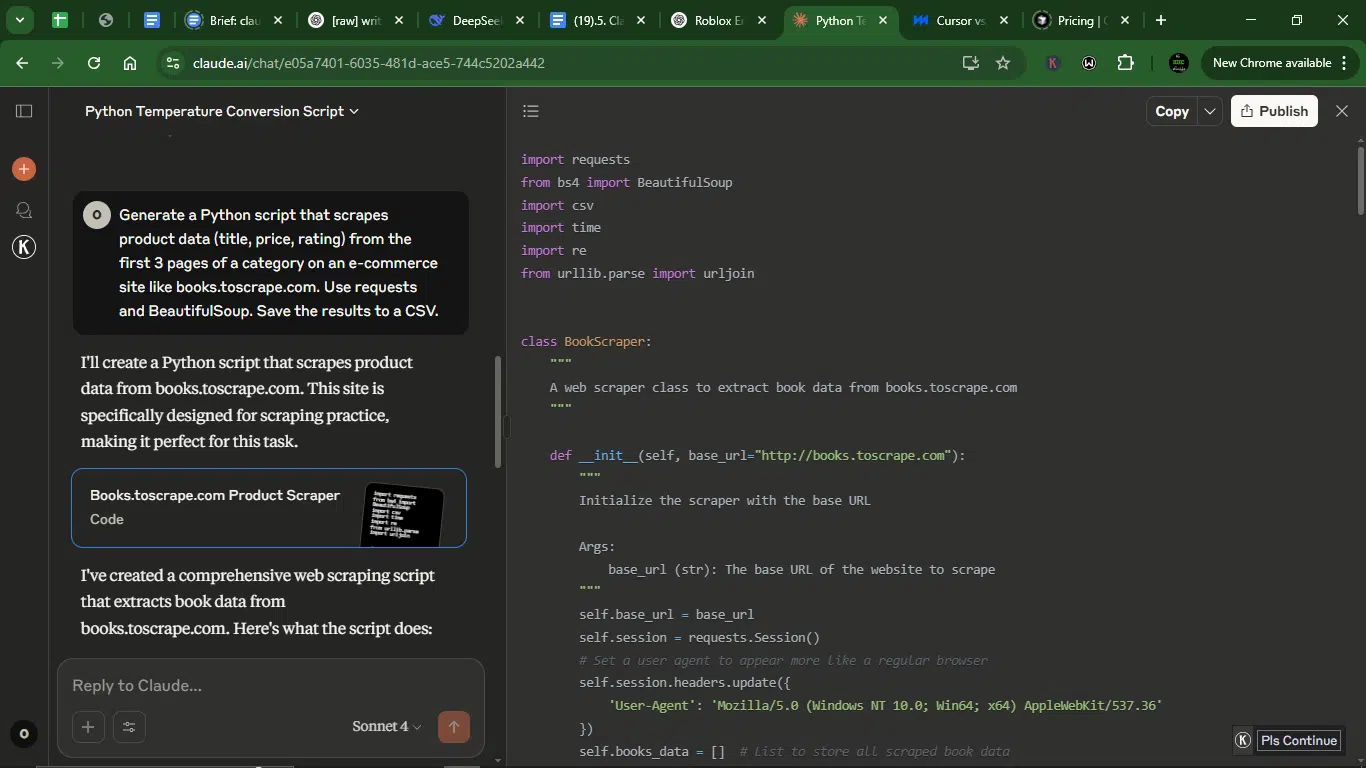

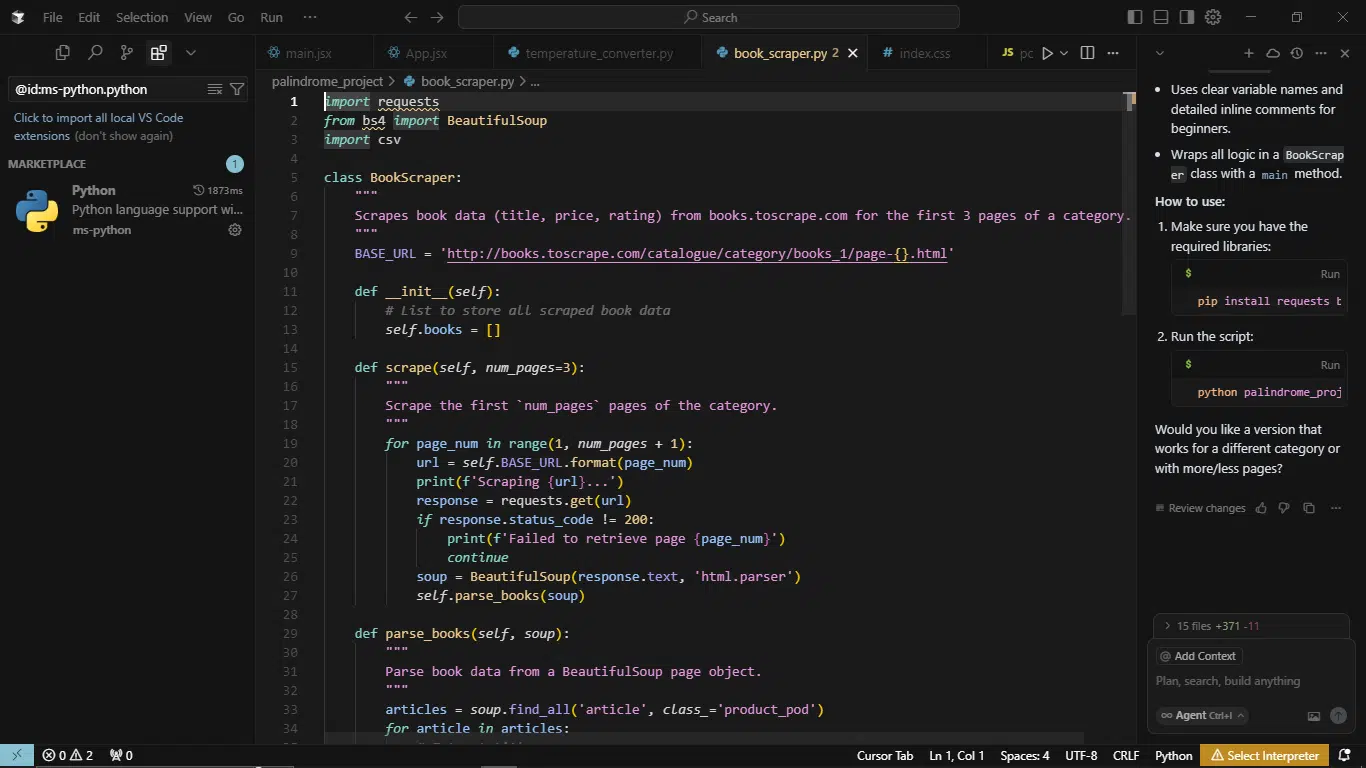

5. Performance and latency

I wanted to test how fast and responsive each tool is with a moderately heavy request. This shows if it’s smooth enough for fast-paced coding work.

Prompt: Generate a Python script that scrapes product data (title, price, rating) from the first 3 pages of a category on an e-commerce site like books.toscrape.com. Use requests and BeautifulSoup. Save the results to a CSV.

Result:

Claude’s response:

Cursor response:

1. Completion Time

- Claude has a longer script (150+ lines) due to extensive features (error handling, data cleaning, summaries). As a result, it requires more time to read/write, but is immediately functional.

- Cursor is concise (~50 lines) with faster copy-paste execution and lacks some polish, but delivers core functionality quickly.

- Cursor wins for quick deployment; Claude for thoroughness.

2. Script accuracy

- Claude correctly scrapes all fields (title, price, rating), cleans data by converting prices to floats, ratings to numbers (e.g., “Three” → 3), and handles pagination dynamically (page-{num}.html).

- Cursor skips data cleaning, and its hardcoded URL (books_1/page-{}.html) may break if the category changes.

- Claude is more accurate with cleaned, analysis-ready data.

3. Error handling

- Claude’s error handling is more robust, as it checks HTTP status, handles missing elements gracefully, and logs errors (e.g., “Error scraping individual book”). It’s retry-friendly, using session and delays (time.sleep(1)).

- Cursor’s is minimal, only checking HTTP 200 status. It crashes if elements are missing (no try/except in parsing).

4. Complexity handling

- Claude uses modular methods to separate scraping, parsing, and cleaning; advanced parsing, with regex for prices and dictionary mapping for ratings; and flexible pagination via URL joining.

- Cursor is straightforward: a single parsing loop with no data transformations and basic pagination (hardcoded URL template).

- Claude handles complexity better; Cursor is simpler but limited.

5. Copy-paste usability

- Claude works out of the box with no tweaks. Extra features include CSV saving, data summary, and progress logging.

- Cursor fails silently if the page structure changes (no error handling). There’s also the issue of unclean CSV, just raw text ratings and £ symbols.

- Overall, Claude is more plug-and-play.

Winner: Claude.

Claude’s scraper is closer to production-ready: it has better data quality (clean prices and numerical ratings), it is more reliable thanks to comprehensive error handling and logging, and it is more usable because it works immediately with no fixes needed.
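The data-cleaning step is where the two scripts diverged most, so here is a small sketch of it in isolation. It covers only the cleaning and CSV stages (no requests or BeautifulSoup calls), and the helper names clean_price, clean_rating, and rows_to_csv are hypothetical, not taken from either response. It assumes ratings arrive as words such as “Three” and prices as strings such as “£51.77”, as described above.

```python
import csv
import io
import re

# Map the site's rating words to numbers.
RATING_WORDS = {"One": 1, "Two": 2, "Three": 3, "Four": 4, "Five": 5}


def clean_price(raw_price: str) -> float:
    """Extract the numeric part of a price string such as '£51.77'."""
    match = re.search(r"\d+(?:\.\d+)?", raw_price)
    if match is None:
        raise ValueError(f"no number found in {raw_price!r}")
    return float(match.group())


def clean_rating(raw_rating: str) -> int:
    """Convert a rating word to an int; unknown words become 0."""
    return RATING_WORDS.get(raw_rating.strip().title(), 0)


def rows_to_csv(rows) -> str:
    """Clean raw scraped rows and return them as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["title", "price", "rating"])
    writer.writeheader()
    for row in rows:
        writer.writerow({
            "title": row["title"].strip(),
            "price": clean_price(row["price"]),
            "rating": clean_rating(row["rating"]),
        })
    return buffer.getvalue()
```

With cleaning in place, the CSV holds numbers that can be sorted and averaged directly. Without it, every downstream script has to strip currency symbols and translate rating words again.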

Overall comparison: Claude vs. Cursor for AI-assisted coding

After evaluating five distinct coding tasks (palindrome checker, refactoring JavaScript, weather app, temperature converter, and web scraping), here’s the final verdict:

| Criteria | Claude | Cursor |

| Code correctness | More robust error handling, edge cases, and validation. | Misses some edge cases (e.g., no mock fallback for API failures). |

| Readability & comments | Detailed, beginner-friendly explanations. | Good, but sometimes assumes prior knowledge. |

| Structure & best practices | Pythonic, modular, and scalable. | Clean but occasionally oversimplified (e.g., hardcoded URLs). |

| User-friendliness | Works out of the box (self-contained, no API keys needed). | Often requires setup (e.g., OpenWeatherMap key). |

| Production Readiness | Error-resilient, polished, and deployable. | Needs tweaks for real-world use. |

Winner: Claude (4/5 tasks).

Recommendation: Choose Claude for reliability, educational value, and deployable code. Use Cursor for rapid sketches or if you prefer concise examples.

Key differences between Cursor and Claude (for code)

How do Cursor and Claude differ from each other?

1. Pricing

When it comes to pricing, both Claude and Cursor offer flexible options, though their cost structures and features vary.

Here’s a breakdown of their pricing tiers:

Claude pricing

| Plan | Features | Cost |

| Free | Access to the latest Claude model, use on web, iOS, and Android, ask about images and documents | $0/month |

| Pro | Everything in Free, plus more usage, organized chats and documents with Projects, access to additional Claude models (Claude 3.7 Sonnet), and early access to new features | $18/month (yearly) or $20/month (monthly) |

| Team | Everything in Pro, plus more usage, centralized billing, early access to collaboration features, and a minimum of five users | $25/user/month (yearly) or $30/user/month (monthly) |

| Enterprise | Everything in Team, plus: Expanded context window, SSO, domain capture, role-based access, fine-grained permissioning, SCIM for cross-domain identity management, and audit logs | Custom pricing |

Cursor pricing

| Plan | Type | Monthly price | Yearly price (billed monthly) | Key features |

| Hobby | Individual | Free | Free | Pro two-week trial; Limited agent requests; Limited tab completions |

| Pro | Individual | $20 | $16; $192 yearly | Everything in Hobby; Unlimited agent requests; Unlimited tab completions; Background Agents; Bug Bot; Max context windows |

| Ultra | Individual | $200 | $160; $1,920 yearly | Everything in Pro; 20× usage on OpenAI, Claude, Gemini, Grok; PR indexing; Priority feature access |

| Teams | Team | $40 | $32/user; $384/year/user | Everything in Pro; Org-wide Privacy Mode; Admin Dashboard; Team billing; SAML/OIDC SSO |

| Enterprise | Team (Custom) | Custom | Custom | Everything in Teams; More usage included; SCIM management; Access control; Priority support |

2. Integration and compatibility

Cursor has the uncanny feel of sliding right into your dev life like it had always been there. It is a standalone editor built as a fork of VS Code, so VS Code extensions, themes, and keybindings generally carry over, and you get inline code suggestions and completions without breaking flow. It felt like a natural extension of the editor: low overhead, nothing too much.

Claude, on the other hand, is more of a cloud thinker. You’ll typically interact with it via a browser, API, or third-party wrapper. It’s not built to nest inside your IDE like Cursor is. Instead, it’s better suited for teams building internal tools or embedding AI help into dashboards or data pipelines.

3. Usability

Cursor feels like pair programming with a senior developer who doesn’t talk too much. It gives you just enough to keep moving: contextual completions, inline docs, and code actions that respect your current logic. No tab-switching. No over-explaining.

Claude goes the opposite way. It’s more like a mentor who wants you to understand every line. I could describe my issue in plain English, and Claude would walk me through its reasoning before suggesting a solution. If you’re learning a new framework or debugging complex logic, that hand-holding is golden.

4. Accuracy and safety

Cursor is fast and usually on point. It leans into best practices and big-code familiarity, which is great for prototyping or grinding through repeatable logic. But like any AI tool, it can serve you a generic answer now and then that needs double-checking.

Claude, meanwhile, feels like a careful scientist. It’s slower, but that’s because it’s more cautious and less likely to suggest something insecure or ethically questionable. Its answers are structured, and you can almost see it trying not to mess up. That makes it a strong option if you’re handling sensitive data or high-stakes production code.

5. Customization and scale

Cursor offers some customization: language preferences, completion behavior, that sort of thing. It’s ideal for independent developers or small teams who want something lightweight and out of the box.

Claude is more of a backend brain. You can tweak its behavior with system prompts, temperature, and max tokens, and even fine-tune it if you’re a bit more advanced. That flexibility means businesses can build their own dev copilots or AI-infused workflows around it.
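As a rough illustration of those knobs, the sketch below builds a request body for Anthropic’s Messages API with a system prompt, temperature, and max_tokens. It only constructs the payload and does not send anything. The helper name build_messages_request and the model ID in the usage note are placeholders, so check Anthropic’s documentation for current model names.

```python
def build_messages_request(user_prompt, system_prompt, model,
                           temperature=0.2, max_tokens=1024):
    """Build a Messages API request body as a plain dict (nothing is sent)."""
    # The API documents temperature as ranging from 0.0 to 1.0.
    if not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be between 0.0 and 1.0")
    if max_tokens < 1:
        raise ValueError("max_tokens must be at least 1")
    return {
        "model": model,              # placeholder: supply a real model ID
        "max_tokens": max_tokens,    # upper bound on the reply length
        "temperature": temperature,  # lower values give steadier output
        "system": system_prompt,     # standing instructions for the assistant
        "messages": [{"role": "user", "content": user_prompt}],
    }
```

With the official anthropic Python package installed and an API key configured, a dict like this can be passed along the lines of anthropic.Anthropic().messages.create(**request), where request = build_messages_request("Review this function", "You are a careful code reviewer.", model="your-model-id"). A low temperature tends to suit code review, where consistency matters more than variety.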

Pros and cons of using Claude and Cursor for coding

Claude

What I loved

I’ve compared Claude to other AI tools before, both for coding and for other tasks. But still, it managed to surprise me. It doesn’t just throw code at you; it thinks with you. Its tone is calm and reassuring, like someone who’s spent time teaching rather than just coding.

When I asked for help debugging a poorly written JavaScript function or writing a Python function, Claude explained its reasoning in steps. No rush or jargon overload. Just clean, patient help. Honestly, it felt like having a kind senior dev on call.

What can use some improvement?

That said, Claude sometimes has a memory like a goldfish, though maybe that’s because I mainly used the Haiku model. In longer back-and-forth sessions, especially when I layered prompts or switched focus, it would lose track of earlier context. I’d find myself re-explaining stuff I’d already mentioned.

Claude shines for short, focused tasks. But it needs more continuity for deeper, multi-step builds.

Cursor

What I loved

Cursor feels like it gets what coding flow should feel like. For instance, because it is built on VS Code, it feels familiar the second you open it. If you write half a function, it completes it without being too eager.

When I needed help refactoring, it jumped in like a quiet but confident pair programmer. I didn’t need to “talk” to it much; it just responded to what I was doing in the code. That kind of responsiveness is a huge win.

What can be better

That said, Cursor isn’t immune to the occasional hallucination, especially when prompted with edge cases or unconventional logic. Every now and then, it would finish a thought with the wrong assumption or mix up variable scopes.

I also noticed some “context bleeding,” that is, understanding or response unintentionally influenced by information outside of the intended context, leading to inaccurate or irrelevant outputs. Cursor would bring in ideas from previous files or prompts when I didn’t want it to. Not a dealbreaker, but definitely something to watch.

7 reasons why Claude and Cursor matter to developers

This is why developers now use AI tools in their work, especially in 2025, when nearly everything is fast-moving and AI-enhanced.

1. They save you time without cutting corners

Claude and Cursor both reduce the time spent on boilerplate code, syntax checks, and repetitive patterns. Cursor does this right inside your IDE, while Claude helps clarify your logic or build reusable components. Less Googling, more building, without sacrificing quality.

2. They help you work through problems

Claude, especially, acts like a debugging therapist. Beyond just spitting out code, it talks you through why a certain solution might work. That kind of analytical scaffolding is gold when you’re working on architectural decisions, edge cases, or learning something new.

3. They fit different coding vibes

Some developers want flow. Others want clarity. Cursor is all about flow: fast, responsive, and code-first. Claude is about clarity: thoughtful, language-first, and great for explaining logic. Depending on how you work, you’ll lean into one more than the other.

4. They boost solo developers and small teams

Not every team has the luxury of a full-stack wizard or an on-call code reviewer. Claude can act as your patient pair programmer. Cursor can be your smart autocomplete on steroids. For solo founders or tight startup teams, these tools fill critical gaps without ballooning costs.

5. They make learning smoother

Claude is ideal for learning new frameworks or deciphering foreign codebases. It explains code like a tutor, not a machine. Cursor, meanwhile, helps you see how things fit together by completing functions and offering inline suggestions in real time.

6. They reduce cognitive load

If you’re stuck debugging after three hours of brain-fogged coding, Cursor can suggest a fix. Trying to remember the right syntax for a data transformation? Claude’s got you. They reduce decision fatigue and let you focus on what matters: architecture, logic, and creativity.

7. They push the future of dev workflows

Claude and Cursor aren’t just productivity hacks, at least not anymore. They’re glimpses of where development is headed: contextual, personalized, AI-augmented environments. Getting familiar with them now gives you a head start on the future of coding.

How to integrate AI coding assistants like Cursor or Claude into your workflow

1. Start small

Begin by using the AI on personal, low-risk, or non-critical projects. This lets you test its behavior, strengths, and quirks without jeopardizing production work.

2. Use suggestions as starting points

AI-generated code should never be copy-pasted blindly. Treat suggestions as a rough draft useful for inspiration or scaffolding, but always review for logic, security, and architecture fit.

3. Customize the tool to match your style

Explore your AI assistant’s customization options, whether that’s prompt templates in Claude or autocompletion behavior in Cursor. Set preferences that reflect your language choices, formatting style, and workflow norms.

4. Prompt intelligently

Learn how to write great prompts. Be specific with context, describe edge cases, and clarify goals. The better the input, the better the output, especially with Claude, which thrives on detailed guidance.
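One way to make that a habit is a small template that forces you to fill in the goal, context, and edge cases every time. The sketch below is only an illustration: build_prompt and its section labels are made up for this example, not a format either tool requires.

```python
from typing import List, Optional


def build_prompt(goal: str, context: str, edge_cases: List[str],
                 constraints: Optional[List[str]] = None) -> str:
    """Assemble a structured prompt from a goal, context, and edge cases."""
    sections = [f"Goal: {goal.strip()}", f"Context: {context.strip()}"]
    if edge_cases:
        bullets = "\n".join(f"- {case}" for case in edge_cases)
        sections.append(f"Edge cases to handle:\n{bullets}")
    if constraints:
        bullets = "\n".join(f"- {rule}" for rule in constraints)
        sections.append(f"Constraints:\n{bullets}")
    # Blank lines between sections keep the prompt easy to scan.
    return "\n\n".join(sections)
```

For the palindrome task, that might be build_prompt("Write a palindrome checker", "Python 3, standard library only", ["empty string", "symbols only", "None input"]).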

5. Integrate into your environment

Cursor shines as your main editor (it is a standalone IDE built on VS Code), while Claude might need a dedicated browser tab or API integration. Choose setups that reduce friction and don’t break your flow.

6. Leverage AI for docs and comments

Use the assistant to draft docstrings, inline comments, and README sections. This enhances collaboration and helps future-you or your team understand the code faster.

7. Keep track of errors and wins

Keep a running log of hallucinations, inaccuracies, and particularly helpful suggestions. This helps you tune your usage habits and prevents you from getting burned by repeating the same trust mistakes.
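The log does not need to be fancy. Here is a minimal sketch, assuming three outcome labels ("win", "hallucination", "inaccuracy") and a hypothetical AssistantLogEntry record; adapt the fields to whatever you actually want to track.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class AssistantLogEntry:
    tool: str     # e.g. "Claude" or "Cursor"
    outcome: str  # "win", "hallucination", or "inaccuracy"
    note: str     # one line on what happened


def summarize(entries: Iterable[AssistantLogEntry]) -> Dict[Tuple[str, str], int]:
    """Count log entries per (tool, outcome) pair."""
    return dict(Counter((entry.tool, entry.outcome) for entry in entries))
```

Reviewing the counts every few weeks shows whether a tool’s misses cluster around a particular kind of task.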

8. Set boundaries

Know when not to use AI. For sensitive systems (e.g., auth logic, payment flows), stick to manual review. For creative refactoring, AI can be a great collaborator, but security-critical code should be human-first.

9. Stay updated

AI tools evolve fast. New features, models, or security policies might significantly improve performance. Make it a habit to revisit settings, release notes, or community forums monthly.

10. Monitor impact

Quantify how much time the AI is saving or wasting. Are you shipping faster? Is the code better? Use this data to decide whether to scale usage, upgrade plans, or rethink your workflow strategy.

Conclusion

Like with everything, coding with AI isn’t about replacing devs. It’s about syncing with tools that understand how you think. For me, the right fit was clear.

Claude gave me calm, thoughtful guidance. Cursor gave me speed and real-time flow. Depending on your workflow, either tool could feel like a superpower or a mismatch. But the biggest surprise wasn’t about power, it was about fit.

If your AI tool doesn’t get your vibe, it’s just another tab slowing you down.

FAQs about Claude vs Cursor for developers

1. What is Claude AI used for in coding?

Claude is great for brainstorming code structure, writing functions with explanations, debugging logic, and even helping you understand documentation. It’s like a calm, reasoning partner that talks through problems step-by-step.

2. Is Cursor better than GitHub Copilot?

That depends on what you value. Cursor integrates tightly with your IDE and offers more control over prompting and feedback. Copilot is slick and trained on a massive GitHub corpus.

3. Can you use Claude inside VS Code?

Not directly. Claude doesn’t have a native VS Code extension like Cursor or Copilot. You’d have to use it via the web app or API, which is better suited for standalone prompting or integrating into custom dev environments.

4. Which is better for beginners: Claude or Cursor?

Claude wins if you want explanations with your code. Cursor is better if you’re already somewhat comfortable in your stack and want real-time, in-editor suggestions. Beginners who like to understand the “why” will vibe more with Claude.

5. Does Cursor support multiple programming languages?

Yes, Cursor supports a wide range: Python, JavaScript, TypeScript, Go, Rust, C++, and more. Its completions are generally solid across most popular stacks.

6. Is Claude free or paid for developers?

There’s a free tier (with some limitations), and the paid plan starts at $20 monthly for Claude Pro. You get access to Claude 3 Sonnet, which is solid for most dev tasks. Opus (the more powerful version) is also available in that tier.

7. Can you use Claude and Cursor together?

Yes, you can. Cursor handles the in-editor flow, while Claude helps to talk through tough bugs or architectural decisions. It’s like having a co-pilot and a consultant in one setup.

Disclaimer!

This publication, review, or article (“Content”) is based on our independent evaluation and is subjective, reflecting our opinions, which may differ from others’ perspectives or experiences. We do not guarantee the accuracy or completeness of the Content and disclaim responsibility for any errors or omissions it may contain.

The information provided is not investment advice and should not be treated as such, as products or services may change after publication. By engaging with our Content, you acknowledge its subjective nature and agree not to hold us liable for any losses or damages arising from your reliance on the information provided.

Always conduct your research and consult professionals where necessary.