- YouTube has introduced a tool that requires creators to disclose AI-generated content to ensure transparency and curb misinformation on the platform.

- Consequently, creators must inform viewers when they use altered or synthetic media, such as generative AI, to make realistic content that could be mistaken for real footage.

- This news follows the announcement in November 2023 that music partners can request the removal of AI-generated music content that mimics an artist’s distinct singing or rapping voice.

AI-generated videos have already been used to spread misinformation in Africa. In one example, a video appeared to show Chinonso Egemba, popularly known as “Aproko Doctor,” endorsing the effectiveness of a pain relief cream. The video also featured a TVC reporter vouching for the product.

However, the video was later found to be a compilation of old recordings, with the audio and mouth movements altered to make it appear that both people were vouching for the product.

In a similar case, scammers manipulated videos of Kayode Okikiolu, a Channels TV reporter, to make him appear to endorse various products and deceive viewers. The clips were reportedly taken from legitimate news reports and altered to suggest that he was promoting health products or video games.

YouTube's new tool could reduce such instances of manipulated videos and support the fight against misinformation. It is also expected to promote trust and transparency between creators and viewers.

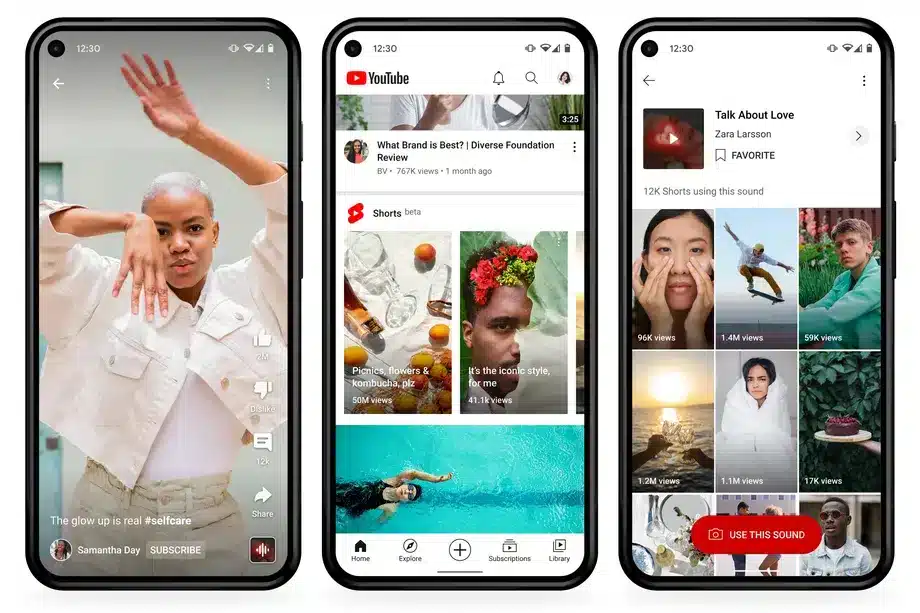

According to the Google-owned platform, creators uploading content will be given new options to indicate whether it contains realistic altered or synthetic material.

The disclosure requirement covers practices such as “digitally altering content to replace the face of one individual with another’s or synthetically generating a person’s voice to narrate a video, manipulating footage of real events or places, and generating realistic scenes.”

However, YouTube said creators will not need to disclose their use of generative AI for productivity purposes, such as generating scripts, brainstorming content ideas, or creating automatic captions.

Disclosure is also not required when synthetic media is clearly unrealistic or the changes are insignificant, as with colour adjustments or lighting filters, special effects like background blur or vintage effects, beauty filters, and other visual enhancements.

Other big tech companies have also taken steps to address the growing problem of AI-generated misinformation. In January 2024, Meta announced that it was “working with industry partners on common technical standards for identifying AI content, including video and audio,” so that it can label images users post to Facebook, Instagram, and Threads when it detects industry-standard indicators that they are AI-generated.