Key takeaways

- Perplexity’s and Claude’s performance varies by task. Claude performed better on technical tasks like coding, data visualization, and text summarization, while Perplexity excelled at creative writing and recent news updates.

- Perplexity’s key advantage is its ability to pull from web sources and provide links to additional resources, making it stronger for research-based tasks.

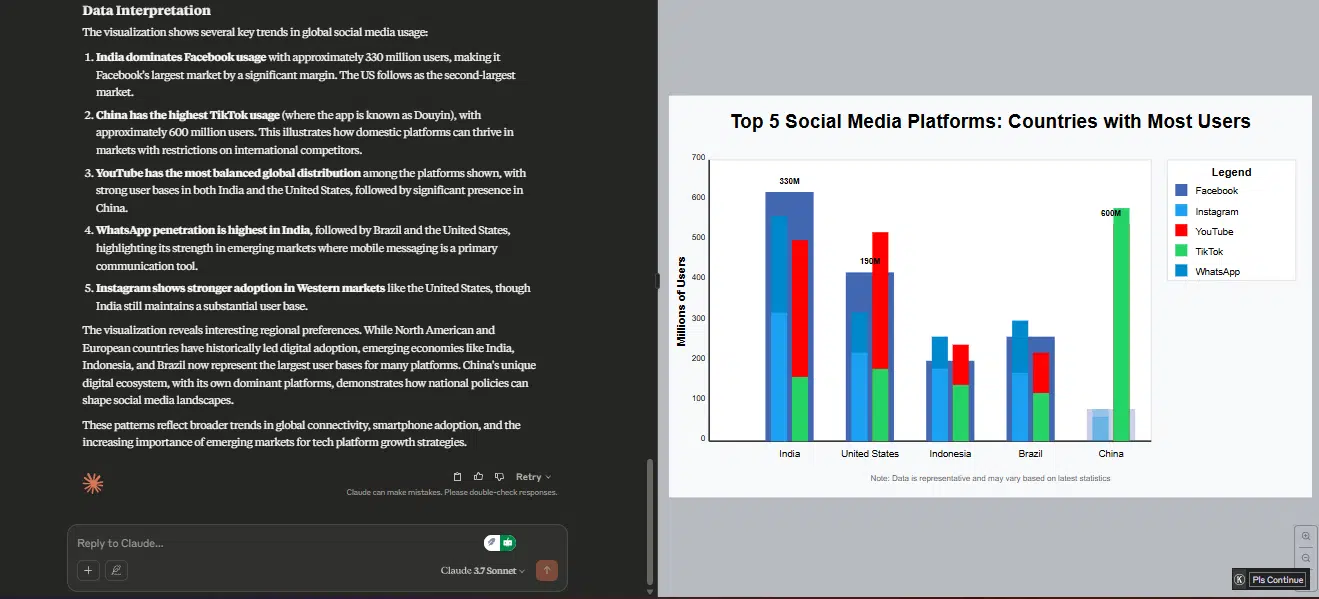

- Claude outperformed Perplexity in data visualization, creating actual graphical visualizations rather than just tables of data.

- In their free versions, neither AI could generate a custom image to specification, though Perplexity pointed to relevant images from web sources.

- Based on my tests, use the AI that best handles your specific tasks, or use both for combined insights, rather than assuming one is universally superior.

Using AI isn’t just about getting a task done faster. It’s about getting the right result while still working efficiently. But you wouldn’t know if a particular AI is the best fit for a task unless you compare how other AI models handle it.

I’ve been using ChatGPT as my AI assistant for getting tasks done. But sometimes it feels like I could get better results from other AI models that have launched more recently, especially given how heavily some of them are hyped.

However, settling on a particular tool shouldn’t be based on hype. You should make your decision based on real performance tests.

Perplexity and Claude are two AI tools that people often recommend as alternatives to ChatGPT. If you’re going to use one of them for your tasks, or even switch to it entirely, you need a real performance evaluation.

There are different ways to test an AI’s performance. For this article, I tested Perplexity vs. Claude with 10 prompts across different use cases. You’ll see how each AI responded and read my review of their performances so you can make a better choice.

Perplexity vs. Claude: What you should know

Perplexity AI

Perplexity is a search engine that uses a large language model to process queries and deliver responses based on web searches. Since its launch, it has released a Google Chrome extension and an app for iOS and Android.

Perplexity runs on Microsoft Azure infrastructure and uses Microsoft Bing for web research. The free public version uses OpenAI’s GPT-3.5 large language model. If you subscribe to the paid Pro plan, you can choose from a variety of more advanced models and access additional features.

In short, its engine relies on GPT-3.5, GPT-4, Microsoft Bing, and Microsoft Azure, combining search engine technology with large language models built on generative pre-trained transformers.

Features of Perplexity AI

When you open Perplexity, you’ll see a chat field where you can enter your queries. You can set the response mode to either search or research. The search mode answers everyday questions, while the research mode offers advanced analysis on any topic.

When you type a query, you can tell Perplexity to search across the entire internet, academic papers, or social discussions and opinions. You can activate all sources at once or choose specific ones for your query.

You can prompt Perplexity with text or voice, or by uploading and attaching files.

You can access Perplexity through its web version, its mobile apps, or its Windows app.

The Discover tab on Perplexity curates stories across categories like tech and science, finance, arts and culture, sports, and entertainment. There’s also a Spaces tab, where you can organize research, collaborate, and customize a page for a specific use case, like a school study group. You can upload files as resources and invite collaborators to join the page.

When choosing an AI model for a query, you can select from Sonar, Claude 3.7 Sonnet, GPT-4.1, Gemini 2.5 Pro, and Grok 3 Beta. For reasoning tasks, you can pick R1 1776 (Perplexity’s reasoning model), o4-mini, or Claude 3.7 Sonnet Thinking. If you don’t select a model, Perplexity will automatically choose one for you.

You can also personalize your responses, set preferences, and connect your social accounts like LinkedIn.

Pricing

Perplexity offers a free plan that anyone can use without payment. Its Pro plan costs $20 per month or $16.67 per month if you pay annually.

Claude AI

Claude is a large language model developed by Anthropic. Anthropic is an AI company founded by former members of OpenAI, the creators of ChatGPT.

In terms of model type, Claude is a large language model, a generative pre-trained transformer, and a foundation model.

The Claude 3 family includes three models (Haiku, Sonnet, and Opus), designed for speed, advanced capabilities, and deep reasoning, respectively. These models can process both text and images.

Features of Claude AI

When you get started with Claude, it prompts you to enter your name and what you do. Then it lets you pick three topics you want to explore as part of the onboarding process.

On Claude’s home screen, you’ll find a chat field where you can enter your queries. Besides text prompts, you can upload a file, take a screenshot, or add content directly from GitHub.

You can also choose the style of response you want from the AI. Options include normal, concise, explanatory, and formal. You even have the option to create your own custom style.

On the free plan, you get access to Claude 3.7 Sonnet. With the Pro plan, you can also use Claude 3.5 Haiku, Claude 3.5 Sonnet, and Claude 3 Opus.

You can either use Claude on the web or download the app for your PC or mobile device.

Claude also supports integrations, like connecting your GitHub repositories to bring more context into your conversations. Its integrations feature allows it to reference other apps and services.

Pricing

Claude offers a free plan, a Pro plan at $17 per month (billed annually), and a Max plan at $100 per month (billed monthly).

My testing methodology: Perplexity vs. Claude

While people use AI for countless tasks, the use cases I covered give you a good idea of how each AI handles other tasks within the same category.

To test Perplexity vs. Claude, I walked through a list of everyday tasks people usually complete with AI and carefully selected 10.

The 10 diverse prompts cover a range of practical scenarios, including coding, creative writing, math, graphics, and work-related tasks.

Here are the use cases I covered:

- Creative writing

- Coding assistant

- Learning complex concepts

- Data visualization

- Travel planning

- Text summarization

- Graphic design

- Math

- News updates

- History

For each prompt I fed the AI, I evaluated key factors like accuracy, creativity, clarity, response time, and how well the AI understood the prompt based on the response it delivered.

I documented both AIs’ responses and share my verdict after analyzing their performance.

Note that this Perplexity vs. Claude review is based on the free web app versions of both tools.

Testing Perplexity vs. Claude with 10 prompts for different use cases

Perplexity vs. Claude for creative writing

I gave both AIs a prompt to show how creative they could get with storytelling and how they respond to my specific requests.

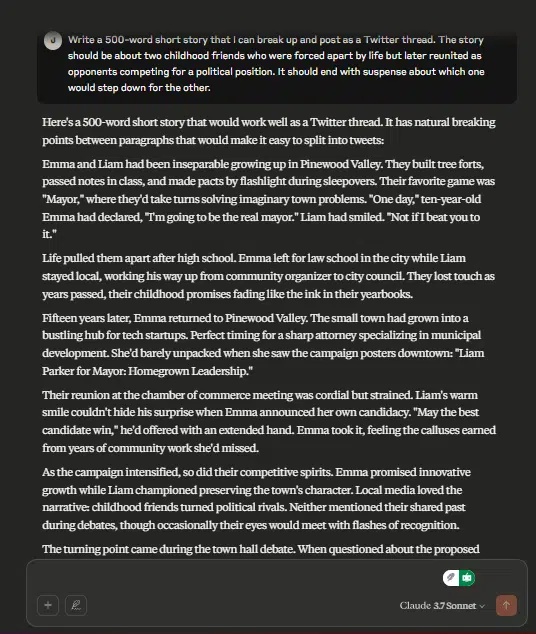

- Prompt: Write a 500-word short story that I can break up and post as a Twitter thread. The story should be about two childhood friends who were forced apart by life but later reunited as opponents competing for a political position. It should end with suspense about which one would step down for the other.

Perplexity’s Response:

Perplexity stayed within the word count I requested and even broke the story down into a Twitter thread. It used a relatable narrative and told the story well.

Claude’s Response:

Claude’s story went a little over the requested word count, but it still told a good story. The flow was engaging, but it didn’t seem to account for my plan to post the story as a Twitter thread. It did, however, include the elements I specified in the prompt.

My winner: Perplexity vs. Claude for creative writing

Both AIs ended with suspense. However, Perplexity gave a more concise story that fit exactly what I wanted, while Claude was more elaborate and had a nice flow. I’ll give this round to Perplexity because it stayed within the word count, broke the story into a thread-ready format, and told it in a way that felt relatable.
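For anyone who wants to split a story into thread-sized posts themselves, here is a minimal sketch of a word-boundary splitter. It is not how Perplexity does it; `splitIntoThread` is a hypothetical helper, and the 280-character default assumes the standard per-post limit for non-subscribed accounts.

```javascript
/**
 * Splits text into posts of at most `limit` characters, breaking on spaces.
 * Hypothetical helper; the 280-character default is an assumption.
 */
function splitIntoThread(text, limit = 280) {
  const words = text.split(/\s+/).filter(Boolean); // drop empty strings from extra whitespace
  const posts = [];
  let current = "";

  for (const word of words) {
    const candidate = current ? `${current} ${word}` : word;
    if (candidate.length <= limit) {
      current = candidate; // the word still fits in the current post
      continue;
    }
    if (current) posts.push(current); // close the full post
    // Hard-split any single word longer than the limit (e.g. a long URL)
    let rest = word;
    while (rest.length > limit) {
      posts.push(rest.slice(0, limit));
      rest = rest.slice(limit);
    }
    current = rest;
  }
  if (current) posts.push(current);
  return posts;
}
```

If you prefix each post with a counter such as "1/5", lower `limit` to leave room for it.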

Perplexity vs. Claude as a coding assistant

Coding can be challenging, but with AI assistance, you can brainstorm solutions or get help debugging your code. I wanted to see how well each AI could support someone who needs a head start on writing code.

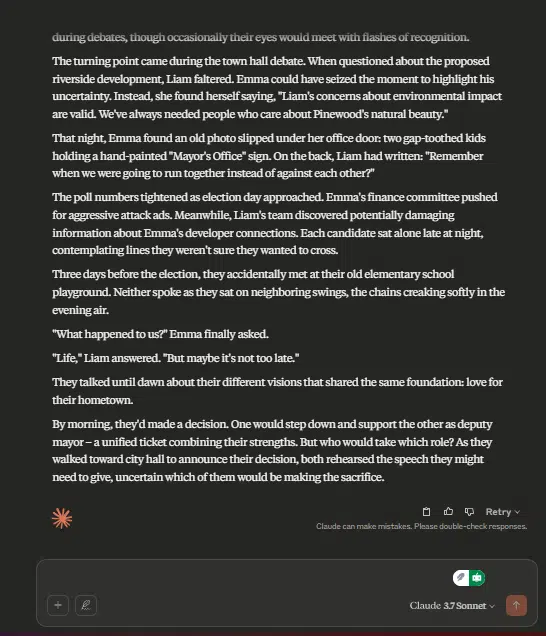

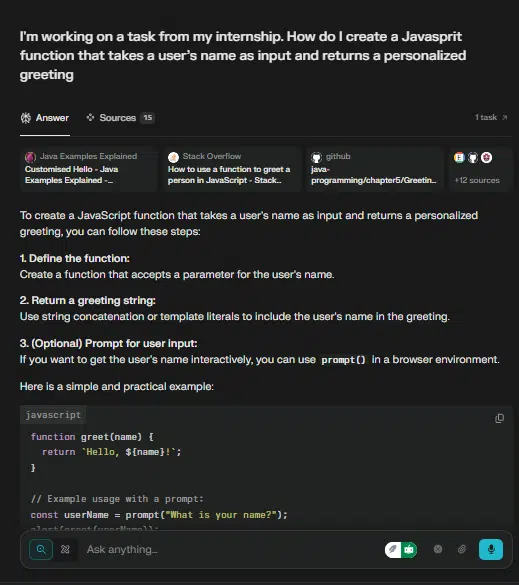

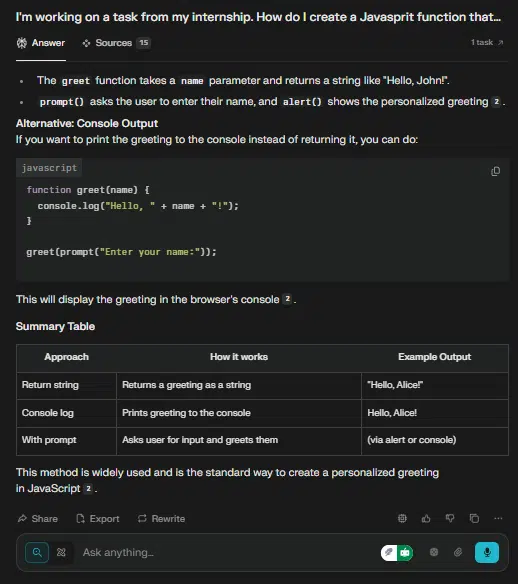

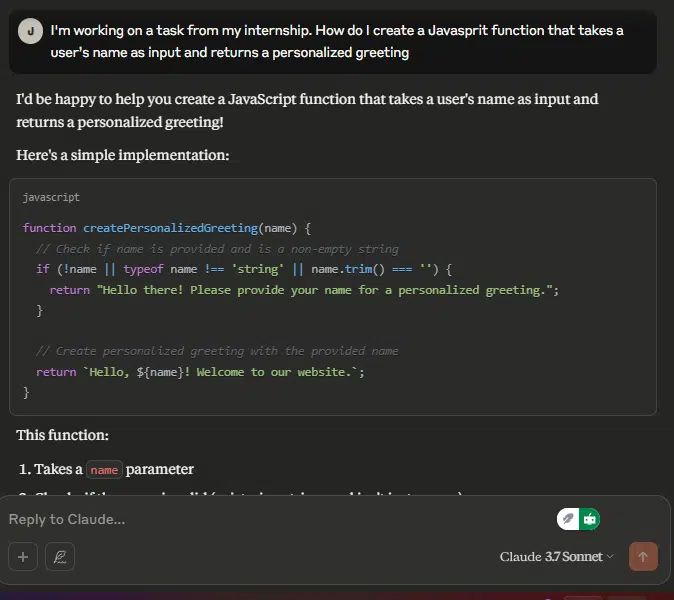

- Prompt: I’m working on a task from my internship. How do I create a JavaScript function that takes a user’s name as input and returns a personalized greeting?

Perplexity’s response:

Perplexity gave clear steps for writing the requested function and then generated the code. It explained the code briefly, offered an alternative approach, and provided a summary table.

Claude’s response:

Claude offered an implementation that first checks whether a name was provided and whether it’s a non-empty string, then returns a personalized greeting based on the input. After that, it explained the output and suggested an alternative input format.

My winner: Perplexity vs. Claude as a coding assistant

Both tools gave good responses, but Claude was more technical in this round. It understood the prompt better and checked whether a name was provided before creating a greeting. Its response was clear and straight to the point. Perplexity, on the other hand, included helpful extras like a summary table.
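For reference, a minimal sketch of the kind of function this prompt asks for, modeled on the validation step described in Claude’s response, might look like this. It is not either AI’s actual output; the name `greetUser` and the fallback message are hypothetical choices.

```javascript
/**
 * Returns a personalized greeting for the given name.
 * Hypothetical sketch: the fallback text for missing input is an assumption.
 */
function greetUser(name) {
  // Reject anything that is not a string, or a string that is only whitespace
  if (typeof name !== "string" || name.trim() === "") {
    return "Hello there! Please tell me your name.";
  }
  // Trim surrounding spaces so "  Ada " becomes "Ada"
  return `Hello, ${name.trim()}! Welcome aboard.`;
}

console.log(greetUser("Ada")); // Hello, Ada! Welcome aboard.
console.log(greetUser("   ")); // Hello there! Please tell me your name.
```

Checking the input first means the function never produces an odd greeting like "Hello, !" when the name is missing.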

Perplexity vs. Claude for learning a new concept

Learning new things can be quite challenging, especially when you’re diving into a concept or a field you’re not familiar with. For this prompt, I wanted to see how both AIs would break down a concept for someone who is a complete novice in the area.

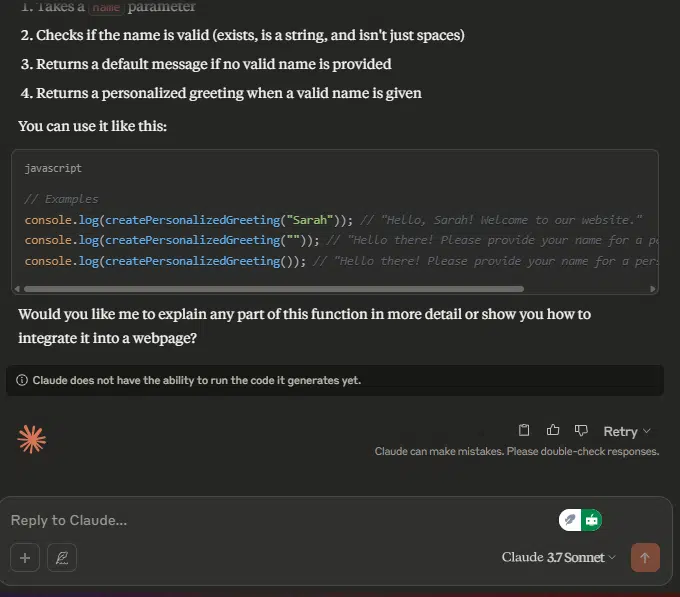

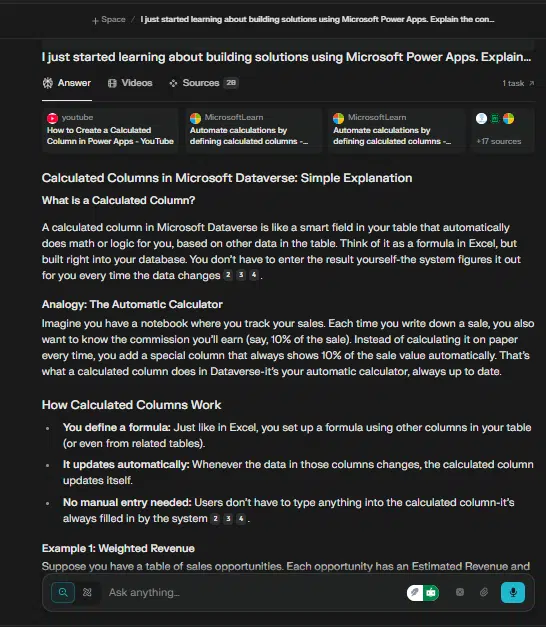

- Prompt: I just started learning about building solutions using Microsoft Power Apps. Explain the concept of calculated columns in simple terms, using analogies and examples to show how it works and what it means in Microsoft Dataverse.

Perplexity’s response:

Perplexity pulled its answer from 29 web sources, including videos. The answer wasn’t overwhelming, and it included examples and explanations of how the concept works. It also linked to related resources on the topic.

Claude’s response:

Claude gave a simple response that didn’t feel overwhelming, despite the technical topic. It explained how calculated columns work, used a real-life analogy, and provided examples of how they’re applied in Dataverse. I also liked that it highlighted the limitations of calculated columns in Microsoft Dataverse.

My winner: Perplexity vs. Claude for learning a new concept

Both AIs gave responses that weren’t overwhelming, which is great if you’re new to a concept. Perplexity focused more on providing resources about the concept and related topics, while Claude gave a more practical response, including code examples at some points. I preferred Claude’s more technical approach for this use case, though Perplexity offered more resources.

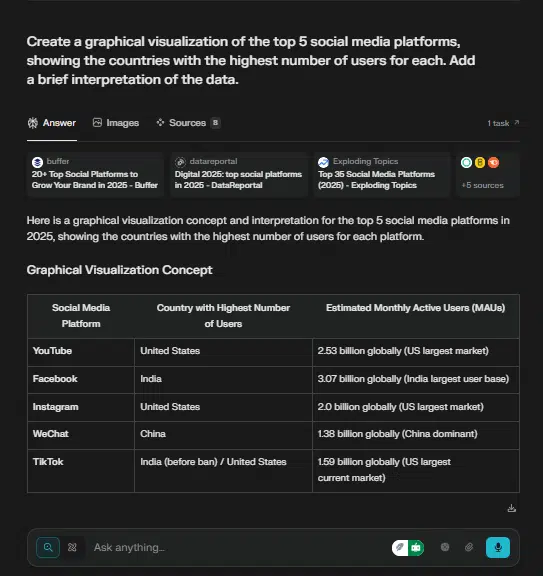

Perplexity vs. Claude for data visualization

When you don’t need detailed information and would rather see a graphical summary to support business decisions, it matters how well an AI can gather and present data visually. I wanted to see how both AIs would handle this.

- Prompt: Create a graphical visualization of the top 5 social media platforms, showing the countries with the highest number of users for each. Add a brief interpretation of the data.

Perplexity’s response:

Perplexity presented the information in a table, labeling it a graphical visualization concept, but only provided a description of how to create a graph from the table. It also added a brief interpretation of the data, as requested, and shared some related resources.

Claude’s response:

Claude created an actual graphical visualization of the data. It wrote code to plot the data and then rendered it as a graph, with an interpretation of the data right alongside. Claude also lets you copy the image or download it as an SVG file, and it shared the code it used to generate the image.

My Winner: Perplexity vs. Claude for data visualization

Claude won this round. It provided a colorful graphical visualization of the data, included an interpretation, and let me download the image. It also shared the code for generating the graph. Perplexity did respond to the prompt, but it only presented the information in a table and never actually created or displayed a graph.
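To illustrate what "writing code and rendering it as an SVG" involves, here is a minimal sketch that turns a list of labeled values into a horizontal bar chart. This is not the code Claude produced; `barChartSvg` is a hypothetical helper, its layout sizes are arbitrary, and the values in the usage lines are placeholders rather than real user counts.

```javascript
// Escape characters that would break the SVG markup
const escapeXml = (s) =>
  String(s).replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");

/**
 * Builds a simple horizontal bar chart as an SVG string.
 * Hypothetical helper: sizes below are arbitrary choices.
 * @param {{label: string, value: number}[]} data
 */
function barChartSvg(data, width = 400, barHeight = 24, gap = 8) {
  const labelWidth = 120; // space reserved on the left for labels
  const chartWidth = width - labelWidth;
  const maxValue = Math.max(...data.map((d) => d.value));
  const height = data.length * (barHeight + gap);

  const rows = data.map((d, i) => {
    const y = i * (barHeight + gap);
    // Scale each bar relative to the largest value
    const w = maxValue > 0 ? (d.value / maxValue) * chartWidth : 0;
    return (
      `<text x="0" y="${y + barHeight * 0.7}" font-size="12">${escapeXml(d.label)}</text>` +
      `<rect x="${labelWidth}" y="${y}" width="${w.toFixed(1)}" height="${barHeight}" fill="steelblue"/>`
    );
  });

  return (
    `<svg xmlns="http://www.w3.org/2000/svg" width="${width}" height="${height}">` +
    rows.join("") +
    `</svg>`
  );
}

// Placeholder data for illustration only (not real figures)
const svg = barChartSvg([
  { label: "Platform A", value: 30 },
  { label: "Platform B", value: 20 },
  { label: "Platform C", value: 10 },
]);
console.log(svg); // save this string as chart.svg to open it in a browser
```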

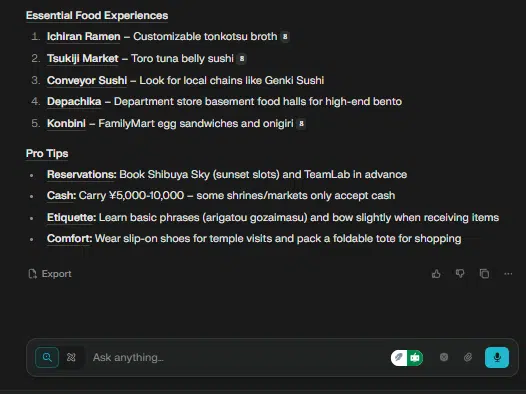

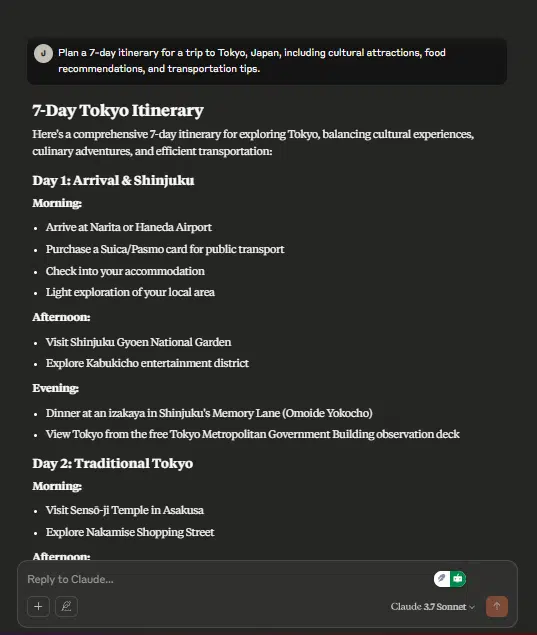

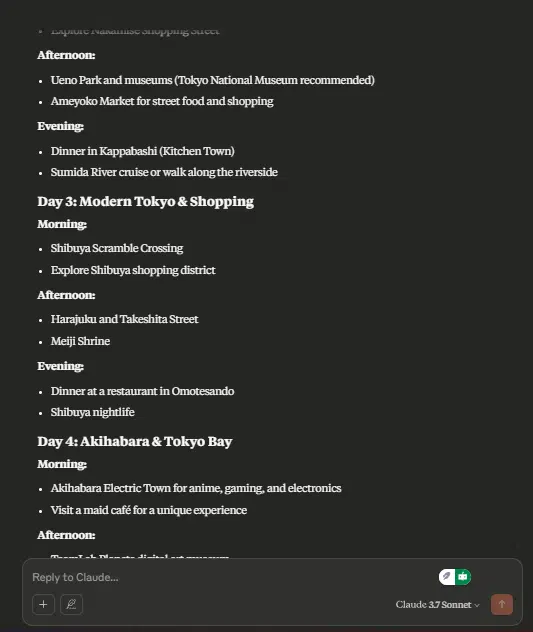

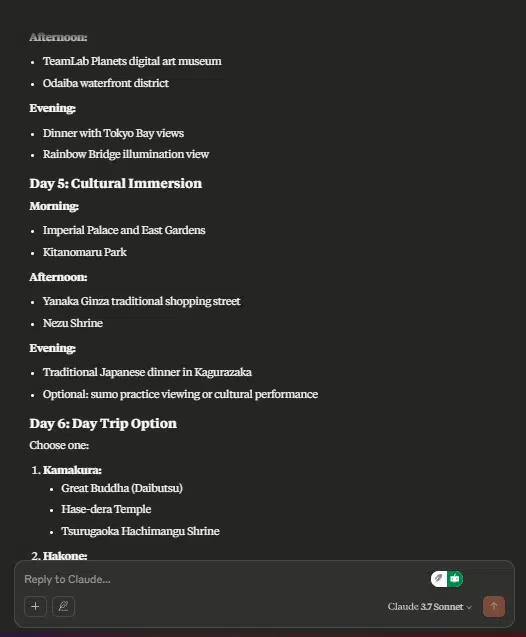

Perplexity vs. Claude for travel planning

You’ve probably used travel planning apps to organize trips, just like I have. I wanted to see how each AI would handle planning an itinerary and compare their responses.

- Prompt: Plan a 7-day itinerary for a trip to Tokyo, Japan, including cultural attractions, food recommendations, and transportation tips.

Perplexity’s response:

Perplexity created an itinerary from web sources for days 1 through 7 of the trip. It added a transportation guide, recommendations for good experiences, and tips for staying in the city.

Claude’s response:

Claude created an itinerary that starts on arrival day, with each day’s activities broken into morning, afternoon, and evening. For day 6, it offered several activity options to choose from. It also provided food recommendations, areas to explore for food, and transportation tips.

My winner: Perplexity vs. Claude for travel planning

Claude was more detailed in planning an itinerary, so it wins this round. However, Perplexity added extra value by providing web resources, including articles and images, alongside its response.

Perplexity vs. Claude for text summarization

Sometimes you’re in a hurry and don’t have time to read a long article. Instead, you can paste it into an AI tool, get a summary, and stay as informed as if you had read the full piece. I wanted to see how well each AI could do that.

- Prompt: Summarize the main points of this news article on “MTN Leadership Reshuffle Hits Rwanda and South Sudan” from Techpoint Africa. I want the key information, just like I read the full article.

“Mapula Bodibe, CEO of MTN Rwanda, has been appointed to lead MTN South Sudan.

Ali Monzer, previously CEO of MTN South Sudan, will now head MTN Rwanda.

The executive swap aligns with MTN Group’s Ambition 2025 strategy, which targets platform growth across fintech, digital, and enterprise services in Africa.

MTN Group has announced a strategic leadership reshuffle in its East African operations, appointing new CEOs for its Rwanda and South Sudan subsidiaries as part of its Ambition 2025 roadmap.

Mapula Bodibe, who has led MTN Rwanda since 2022, will now take the helm at MTN South Sudan. During her tenure in Rwanda, Bodibe spearheaded significant initiatives, including the launch of MTN’s proprietary 4G network, a collaboration with the Rwandan government on the Ikosora low-cost smartphone project, and a successful network upgrade in Kigali in partnership with Ericsson. She also oversaw Rwanda’s first live demonstration of 5G technology, underscoring MTN’s commitment to innovation in the region.

Stepping into Bodibe’s former role, Ali Monzer, previously CEO of MTN South Sudan, brings over 21 years of experience in the telecom sector. Monzer navigated MTN South Sudan through a challenging economic and political climate, marked by hyperinflation, currency devaluation, and ongoing conflict. Under his leadership, the company expanded its market share, achieved real revenue growth, and reclaimed the top spot in Net Promoter Score rankings, reflecting improved customer satisfaction.

These leadership changes align with MTN Group’s Ambition 2025 strategy, which aims to drive sustainable growth and transformation across its markets. The initiative emphasises leadership development, digital innovation, and localised operational excellence to adapt to Africa’s rapidly evolving digital landscape.

MTN Group, Africa’s largest mobile network operator, serves over 289 million subscribers across the continent. The company’s Ambition 2025 strategy focuses on building Africa’s largest and most valuable platform business by strengthening its fintech, digital, and enterprise services while continuing to deliver voice and data at scale.

The new appointments are effective immediately, with both executives expected to transition into their roles over the coming weeks. While specific performance targets have not been disclosed, MTN has reiterated its goal to remain a leading force in Africa’s digital transformation.”

Perplexity’s response:

Perplexity summarized the text by highlighting key points from the article and expanding on each with bullet points. It added a link to the original source and provided links to other sources covering the story.

Claude’s response:

Claude gave a good summary of the news article in six bullet points. The summary captured the key information I asked for in the prompt.

My winner: Perplexity vs. Claude for text summarization

Claude won this round. Perplexity was detailed, but its summary was too long to serve as a summary; it felt almost like reading the full article. Claude understood the prompt better and delivered a concise summary with the key information.

Perplexity vs. Claude for image generation

Design has become part of every business’s marketing approach, making it an everyday task. If you’re a designer, getting a precise, on-brand image quickly is a major advantage.

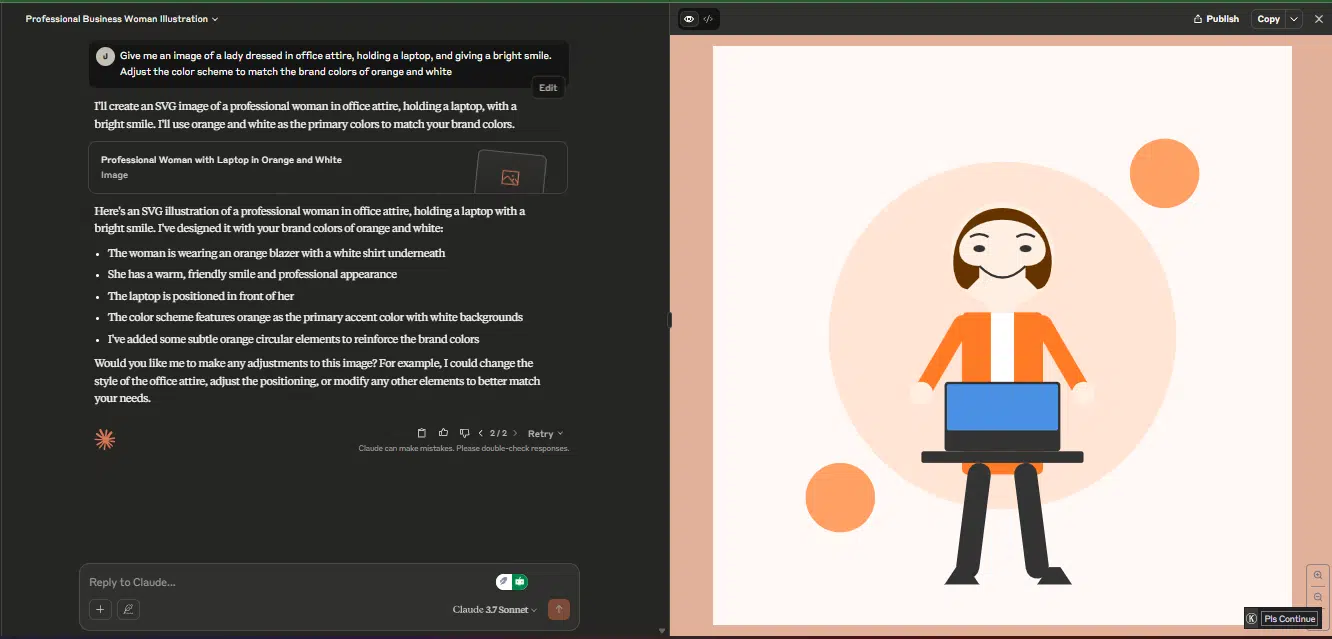

- Prompt: Give me an image of a lady dressed in office attire, holding a laptop, and giving a bright smile. Adjust the color scheme to match the brand colors of orange and white.

Perplexity’s response:

Perplexity didn’t generate an image. Instead, it shared images from the web related to the prompt, along with links to access them. It then added design tips based on the images it shared.

Claude’s response:

Claude generated an animated image that included the brand colors specified in the prompt. However, the image wasn’t completely formed.

My winner: Perplexity vs. Claude for image generation

In their free versions, neither AI generated an image the way ChatGPT or Grok would, so neither delivered a satisfactory response for this use case. However, Perplexity did provide image sources you could access online.

Perplexity vs. Claude for solving math problems

Math is one task where almost everyone needs some help. However, it’s not just about providing a solution; how an AI explains the solution makes a big difference. I wanted to evaluate each AI’s approach to solving a math problem.

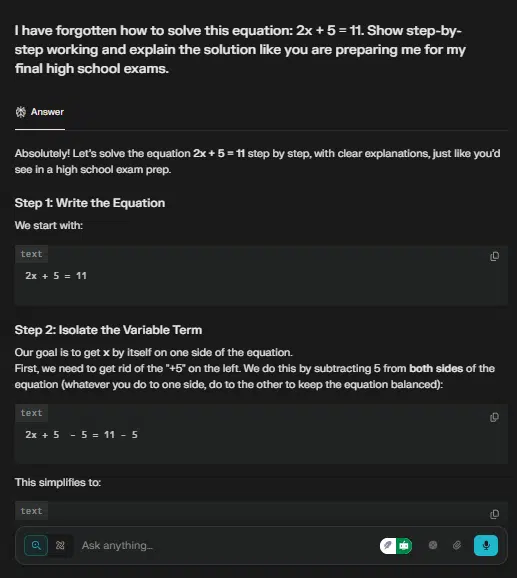

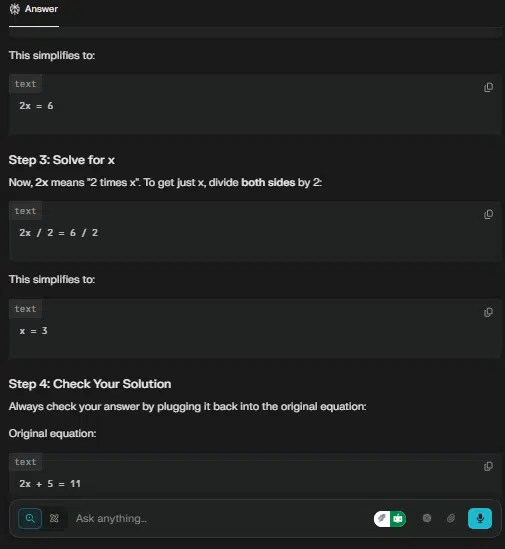

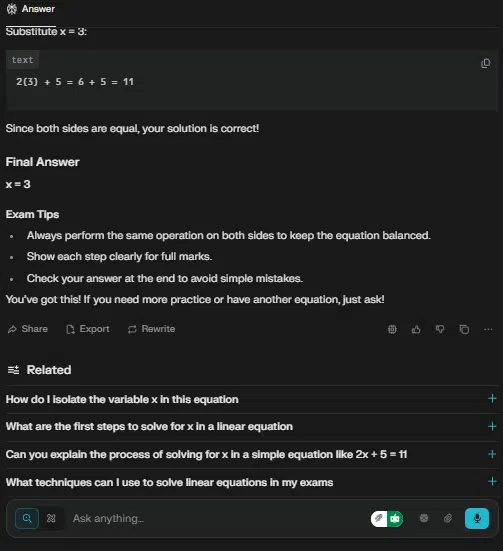

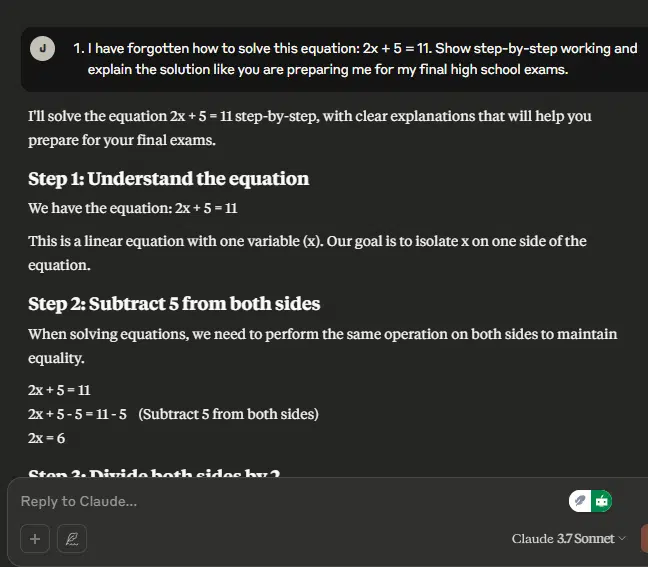

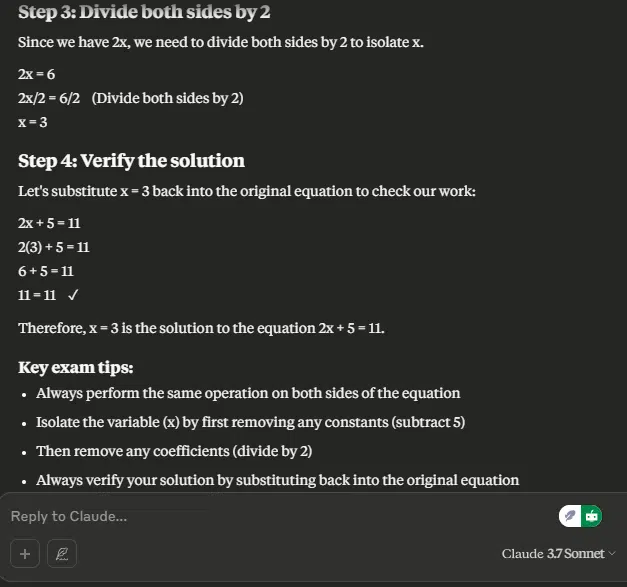

- Prompt: I have forgotten how to solve this equation: 2x + 5 = 11. Show step-by-step working and explain the solution like you are preparing me for my final high school exams.

Perplexity’s response:

Perplexity gave a step-by-step solution with clear explanations for each part, plus a copy button for each solved step. At the end, it verified the answer and suggested related math questions for further practice.

Claude’s response:

Claude also provided a step-by-step solution with explanations along the way. It began by helping me understand the equation, ended by verifying its answer, and added a few exam tips.

My winner: Perplexity vs. Claude for solving math problems

Both Perplexity and Claude handled this round well. They gave good, easy-to-follow answers and followed the prompt without providing an overwhelming response.
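For reference, the standard method both AIs walked through for this equation can be written as a short derivation (my own summary, not either AI’s exact wording):

```latex
\begin{aligned}
2x + 5 &= 11 \\
2x + 5 - 5 &= 11 - 5 && \text{subtract 5 from both sides} \\
2x &= 6 \\
\frac{2x}{2} &= \frac{6}{2} && \text{divide both sides by 2} \\
x &= 3 \\
\text{Check: } 2(3) + 5 &= 6 + 5 = 11
\end{aligned}
```

The final line substitutes the answer back into the original equation, which is the verification step both AIs included.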

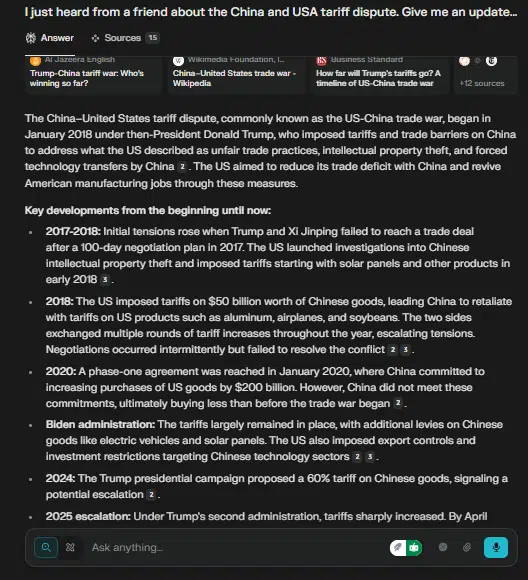

Perplexity vs. Claude for news updates

Sometimes, when a trend is going viral, you feel the need to catch up. But curating the full story from the beginning can be exhausting. That’s where AI can help. I asked both AIs to update me on an ongoing event, giving a clear rundown from the start to the present.

- Prompt: I just heard from a friend about the China and USA tariff dispute. Give me an update on the matter—from the beginning until now—including the key things I need to know.

Perplexity’s response:

Perplexity gave a rundown from 2017 to 2025, highlighting key events during that period. It also explained the current status of the issue and summarized the main points clearly.

Claude’s response:

Claude walked through events from 2017 to 2024. The latest update it offered was as of October 2024.

My winner: Perplexity vs. Claude for news updates

Perplexity won this round. It understood the prompt well and gave a full rundown up to the present, including recent developments and the current status in 2025. Claude only went as far as 2024.

Perplexity vs. Claude for history

History can feel long and boring, but which AI can make it short and still give you everything you need to know?

- Prompt: I’ve only heard about the Great Wall of China from other people and don’t know the full story. Briefly explain the history of the Great Wall of China in 500 words, covering its construction phases, the notable dynasties involved, and its significance in Chinese history.

Perplexity’s response:

Perplexity started with an introduction, then broke down the history into sections, highlighting the key areas mentioned in the prompt. It went over 500 words but told a detailed story, covering everything from the origin to the significance in Chinese history.

Claude’s response:

Claude stuck to the word count and presented the story in clear, well-structured paragraphs. The flow felt natural, and it was easy to read from start to finish.

My winner: Perplexity vs. Claude for history

I’ll give this round to both AIs because they each gave strong responses. However, Claude gets extra points for staying within the word count, whereas Perplexity didn’t.

Overall performance at a glance: Perplexity vs. Claude

| S/N | Use case | My winner |
|---|---|---|
| 1 | Perplexity vs. Claude for creative writing | Perplexity |
| 2 | Perplexity vs. Claude as a coding assistant | Claude |
| 3 | Perplexity vs. Claude for learning a new concept | Claude, for being more technical, though Perplexity offered more resources |
| 4 | Perplexity vs. Claude for data visualization | Claude |
| 5 | Perplexity vs. Claude for travel planning | Claude |
| 6 | Perplexity vs. Claude for text summarization | Claude |
| 7 | Perplexity vs. Claude for image generation | None |
| 8 | Perplexity vs. Claude for solving math problems | Both |
| 9 | Perplexity vs. Claude for news updates | Perplexity |
| 10 | Perplexity vs. Claude for history | Both, but Claude gets extra points for staying within the word count |

Based on my tests, Claude won five of the ten rounds, including coding, data visualization, and text summarization. Perplexity, on the other hand, excelled at recent news updates and creative writing. Its ability to cite web sources and surface related information makes it a strong option for research and further study.

Word-count compliance was mixed: Perplexity stayed within the limit for the creative writing prompt, while Claude did so for the history prompt. Neither delivered a satisfying result for image generation.

Each prompt showed that both AIs have their strengths and weaknesses. So, choosing between them isn’t about which one is better overall; it’s about which one gives you a better response for the specific task you have in mind.

Conclusion

Everyone wants the best when it comes to choosing an AI model. But concluding which AI performs better shouldn’t rely on hype alone. You need real tests to make an informed decision.

Perplexity and Claude each delivered strong results depending on the task, so there’s no clear universal winner. When deciding which one to stick with, choose the AI that handles your everyday tasks better, or use both to get combined insights.

Still, how you use these AIs plays a big role in the quality of your results. To get the most out of them:

- Provide specific prompts.

- Turn on helpful features like search, or select a specific model for the task.

- Use AI as an assistant, not as a full replacement for your own thinking.

- Review AI-generated content for accuracy and relevance.

- Ask follow-up questions in the same thread, and start a new chat for unrelated topics.

Disclaimer!

This publication, review, or article (“Content”) is based on our independent evaluation and is subjective, reflecting our opinions, which may differ from others’ perspectives or experiences. We do not guarantee the accuracy or completeness of the Content and disclaim responsibility for any errors or omissions it may contain.

The information provided is not investment advice and should not be treated as such, as products or services may change after publication. By engaging with our Content, you acknowledge its subjective nature and agree not to hold us liable for any losses or damages arising from your reliance on the information provided.