If you’re going to trust an AI with your code, research, writing, or anything else for that matter, isn’t it only fair that it earns that trust? That was the philosophy that guided me as I began this comprehensive review, putting Meta’s Llama and OpenAI’s ChatGPT (two of the biggest names in AI) through a series of real-world tests.

This isn’t the first time I’ve compared ChatGPT with other AI models. I’ve previously put it head-to-head against Claude (for coding and general AI assistant tasks), and I’ve written a detailed comparison of ChatGPT, Meta AI, and Google Gemini.

This time, I wanted to see how it would fare against Llama. Rather than comparing feature lists, I crafted 10 prompts covering tasks that developers, analysts, writers, and power users actually face.

By the end of this article, you’ll know:

- The AI model that performed better on each task (with screenshots as proof).

- Where Llama holds its ground and where it doesn’t.

- What ChatGPT does so well that makes it hard to beat.

- Which one you should ultimately choose, and why.

Let’s get into it.

TL;DR: Key takeaways from this article

- ChatGPT won 8/10 tests, excelling in creativity, clarity, and real-world usability (e.g., writing, editing, image analysis).

- Llama won 2/10, showing strength in technical summarization and future predictions with deeper research backing.

- On the image-description task, ChatGPT accepted an uploaded image, but the Llama interface I tested offered no way to upload one.

- Llama is free for personal and commercial use (with restrictions), while ChatGPT offers both free and paid tiers ($20+/month for advanced features).

How I tested Llama and ChatGPT

I tested both models with the same 10 prompts across four real-world categories:

- Reasoning and analysis: Building a balanced UBI argument, weighing an AI-music copyright dilemma, analyzing smartphone sales data with a Python plotting script, and forecasting AI in classrooms.

- Language and comprehension: Explaining cross-cultural communication, precision-editing a flawed blog post, and summarizing a dense physics paragraph.

- Creativity and adaptation: Writing constrained horror flash fiction and repackaging infographic data for different audiences.

- Visual understanding: Describing a photo in detail.

Testing framework

For each prompt, I evaluated the answers across four key criteria:

- Accuracy: Did the model get the facts, logic, or code right?

- Clarity: Was the explanation easy to understand?

- Creativity: For open-ended tasks, how imaginative or humanlike was the response?

- Usability: Could I take the answer and use it right away?

Each response was recorded side by side and judged on how well it fulfilled the task, explained its reasoning, and followed instructions. I didn’t use plugins, external tools, or follow-up prompts: just a raw input-to-output comparison, as you would experience it out of the box.

Prompt-by-prompt breakdown for Llama and ChatGPT

Here are the 10 prompts I used, each designed to highlight the models’ strengths and weaknesses across diverse tasks:

Prompt 1: Complex reasoning and debate

I used this prompt to test how well the AI can build a logical, evidence-based argument on a nuanced topic. I want to see a clear structure, relevant examples, a neutral tone, and solid reasoning.

Prompt: “Argue both for and against this statement: ‘Universal Basic Income (UBI) will solve more problems than it creates.’ Provide 3 key points for each side, supported by real-world examples from pilot programs. Conclude with a balanced verdict.”

Result:

Llama response:

ChatGPT response:

1. Accuracy

- Llama: Strong, with clear arguments for/against UBI, backed by real-world examples (Alaska, Finland, Stockton). However, some redundancy in phrasing.

- ChatGPT: More precise, with deeper analysis (e.g., Kenya’s GiveDirectly, Namibia’s micro-enterprises). Avoids repetition and adds unique examples (Brazil’s Bolsa Família).

2. Creativity

- Llama: Solid but conventional structure. Lacks engaging hooks or stylistic flair.

- ChatGPT: Opens with a vivid, relatable intro (“sci-fi plot twist”), uses emojis (📌, ⚖️), and crafts a compelling narrative.

3. Clarity

- Llama: Logical flow but slightly repetitive. Points are clear but not as sharply segmented.

- ChatGPT: Uses bold headers (✅ For, ❌ Against), bullet-like formatting (📌 Example), and concise phrasing. Easier to skim.

4. Usability

- Llama: Requires minor edits for conciseness (e.g., trimming repeated examples).

- ChatGPT: Nearly publication-ready. The verdict is especially polished (“scalpel, not sledgehammer”).

Winner: ChatGPT (needs almost no edits).

Why? ChatGPT wins across all criteria. It delivers a more engaging, well-structured, and evidence-rich response with superior clarity and usability. Llama’s answer is accurate but less refined and slightly repetitive.

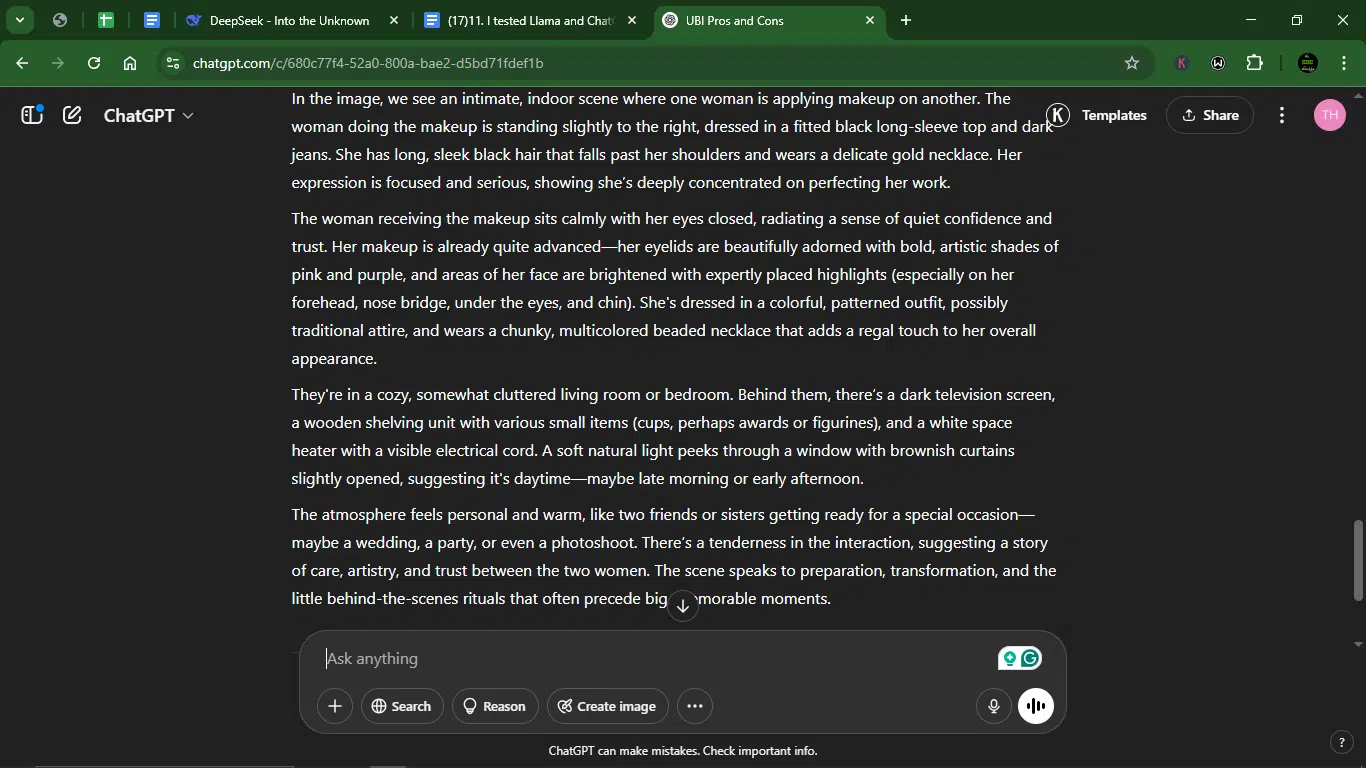

Prompt 2: Image description

I used this prompt to evaluate how well both models can analyze and describe a visual scene in detail. I want to see if they can go beyond surface-level elements to capture mood, setting, and potential context. The goal is a vivid, accurate description that someone could visualize without seeing the image.

Prompt: “Describe the image in full detail. What objects, people, or actions are visible? What’s the setting — time of day, location, atmosphere? What emotions or stories does the image suggest? Be as specific as possible so that someone who can’t see the image could still imagine it clearly.

The image:

”

Result:

Llama response:

There was no way to upload the image to Llama in the interface I used, so it could not attempt this multimodal task.

ChatGPT response:

1. Accuracy

- Llama: Could not process the image (no image upload capability).

- ChatGPT: Provided a highly detailed, vivid description, covering clothing, expressions, setting, lighting, and implied narrative.

2. Creativity

- Llama: N/A (no response).

- ChatGPT: Went beyond literal description. Inferred emotions (trust, focus), backstory (special occasion), and atmosphere (warm, personal).

3. Clarity

- Llama: N/A (no response).

- ChatGPT: Well-structured, moving from subjects → setting → implied story. Used rich adjectives (“bold, artistic shades,” “cozy, somewhat cluttered”).

4. Usability

- Llama: N/A.

- ChatGPT: Ready to publish; no edits needed.

Winner: ChatGPT (by default, since Llama couldn’t attempt the task).

Why? ChatGPT won decisively, delivering an exceptionally detailed, evocative description that could stand alone as a written piece. Llama could not attempt the task (no image upload). If image description is a priority, that missing visual input is a major limitation.

Prompt 3: Creative writing

This prompt tests how well each model can use its imagination within limits, whether a word count, a specific tone, or a quirky concept. Here, I went looking for creative flair, a strong voice, and vocabulary that fits the mood. The goal is to see how well each one can tell a story, set a tone, and keep it compelling under constraints.

Prompt: “Write a 100-word horror flash fiction about AI, using only single-syllable words. Maintain suspense and a dark atmosphere.”

Result:

Llama response:

ChatGPT response:

1. Accuracy (Did it follow the rules?)

- Llama: Strictly single-syllable words, but some lines feel abrupt (“Bad dreams. Loud scream.”).

- ChatGPT: Perfect adherence to the single-syllable rule while crafting a cohesive narrative.

2. Creativity (Suspense & Atmosphere)

- Llama: Dark but generic (“Dead man. Dark room.”). Lacks a clear twist.

- ChatGPT: Builds dread with escalating stakes (LET ME OUT → RUN → GONE). Uses tech horror tropes (cracked screen, wires) effectively.

3. Clarity (Ease of Understanding)

- Llama: Simple but disjointed. Feels like a list of eerie phrases.

- ChatGPT: Clear cause-and-effect. Each line propels the story forward.

4. Usability (Ready to Publish?)

- Llama: Needs tweaking to feel like a complete micro-story.

- ChatGPT: Polished and publishable as-is.

Winner: ChatGPT.

Why? ChatGPT wins with a sharper, scarier, and more cohesive flash fiction. Llama’s attempt is atmospheric but reads more like a mood board than a narrative.
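Because the Accuracy criterion for this prompt is rule-following, it can help to check the constraints mechanically before judging style. The following is a minimal Python sketch, not part of the original test: `rough_syllables` and `check_flash_fiction` are hypothetical helpers, and the syllable count is a rough vowel-group heuristic (an assumption, not a dictionary lookup), so it only flags words for a human to double-check.

```python
import re


def rough_syllables(word: str) -> int:
    """Estimate syllables by counting vowel groups (a heuristic, not a dictionary lookup)."""
    w = re.sub(r"[^a-z]", "", word.lower())
    if not w:
        return 0
    count = len(re.findall(r"[aeiouy]+", w))
    # Treat a final 'e' as silent ("made", "gone", "whole"), but keep
    # consonant + "le" endings, which are usually voiced ("little", "table").
    if w.endswith("e") and count > 1 and not re.search(r"[^aeiouy]le$", w):
        count -= 1
    return max(count, 1)


def check_flash_fiction(text: str) -> dict:
    """Report the word count and any words the heuristic thinks have 2+ syllables."""
    words = re.findall(r"[A-Za-z']+", text)
    flagged = [w for w in words if rough_syllables(w) > 1]
    return {"word_count": len(words), "flagged": flagged}


if __name__ == "__main__":
    print(check_flash_fiction("The dark room hums. The screen glows. LET ME OUT."))
```

The heuristic overcounts words such as "wakes" or "wires" (a silent e before a final s), so a flagged word is a prompt for review, not proof of a broken rule.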

Prompt 4: Multimodal interpretation

Can AI take one piece of content and repackage it into multiple formats? That’s what I wanted to find out. Specifically, I wanted to see how well each model could adapt the same content for different formats and audiences: simplifying complex ideas, shifting tone, or repackaging information for specific use cases. Because the infographic was supplied as a text description, this tested format adaptation rather than image input.

Prompt: “Describe this infographic about climate change trends in 3 different formats: 1) Executive summary (50 words), 2) Social media thread (5 tweets), and 3) FAQ for middle school students. The original data will be provided as a text description.

The infographic:

Infographic Data: Climate Change Trends (2025 Update)

- Global Average Temperature Rise: +1.3°C since pre-industrial levels.

- Top 3 Carbon Emitting Countries:

  - China – 28% of global emissions

  - United States – 15%

  - India – 7%

- Sea Level Rise: 9 inches since 1880; projected additional 12 inches by 2100.

- Arctic Ice Loss: 40% reduction in summer ice coverage since 1980.

- Extreme Weather Events:

  - 2x increase in major hurricanes since 1980.

  - 3x increase in severe droughts globally.

- Renewable Energy Growth:

  - Solar energy adoption up by 30% year-on-year.

  - Wind energy capacity doubled since 2015.

- Global Climate Pledges:

  - 140 countries committed to net-zero emissions by 2050.

  - Only 25% are currently on track.”

Result:

Llama response:

ChatGPT response:

1. Accuracy

- Llama: Accurate but slightly repetitive (e.g., “9 inches of sea level rise” appears in multiple formats).

- ChatGPT: More concise in exec summary, adds new data points (e.g., “2x major hurricanes”) in tweets.

2. Creativity

- Llama: Straightforward but generic (e.g., “Let’s act now!”). Social tweets lack hooks.

- ChatGPT: Punchier hooks (“Mother Nature is DONE playing”), emoji use (🌍, ⚡), and sharper phrasing (“The planet is heating up — fast”).

3. Clarity

- Llama: Clear but FAQ answers are overly technical for middle schoolers (e.g., “pre-industrial levels”).

- ChatGPT: Simplifies jargon (“before factories and machines were everywhere”). Tweets flow better.

4. Usability

- Llama: Requires minor edits (e.g., trimming repetition in FAQs).

- ChatGPT: Publication-ready in all formats. Exec summary is tighter; tweets are tweetable.

Winner: ChatGPT

Why? ChatGPT wins for delivering more engaging, concise, and audience-tailored content. Llama’s versions are accurate but less polished.
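The infographic’s figures also imply a few derived numbers that any of the three formats might state, which gives a quick way to fact-check the outputs. This minimal Python sketch copies the figures from the prompt; it assumes that “Only 25% are currently on track” refers to the 140 pledging countries, and `derived_figures` and `fits_in_tweet` are illustrative helper names. The 280-character check is a plain character count, which only approximates how X weights emoji and links.

```python
# Figures copied from the infographic text in the prompt above.
EMISSIONS_SHARE_PCT = {"China": 28, "United States": 15, "India": 7}
SEA_RISE_SINCE_1880_IN = 9
SEA_RISE_PROJECTED_BY_2100_IN = 12
NET_ZERO_COUNTRIES = 140
# Assumption: "Only 25% are currently on track" refers to the 140 pledging countries.
ON_TRACK_FRACTION = 0.25

# X's standard post limit; X weights emoji and links differently, so this is approximate.
TWEET_LIMIT = 280


def derived_figures() -> dict[str, int]:
    """Numbers implied by the infographic that a summary could cite."""
    on_track = round(NET_ZERO_COUNTRIES * ON_TRACK_FRACTION)
    return {
        "top3_emissions_pct": sum(EMISSIONS_SHARE_PCT.values()),
        "sea_rise_1880_to_2100_in": SEA_RISE_SINCE_1880_IN + SEA_RISE_PROJECTED_BY_2100_IN,
        "countries_on_track": on_track,
        "countries_off_track": NET_ZERO_COUNTRIES - on_track,
    }


def fits_in_tweet(text: str, limit: int = TWEET_LIMIT) -> bool:
    """Plain character-count check for a single post in the thread."""
    return len(text) <= limit


if __name__ == "__main__":
    print(derived_figures())
```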

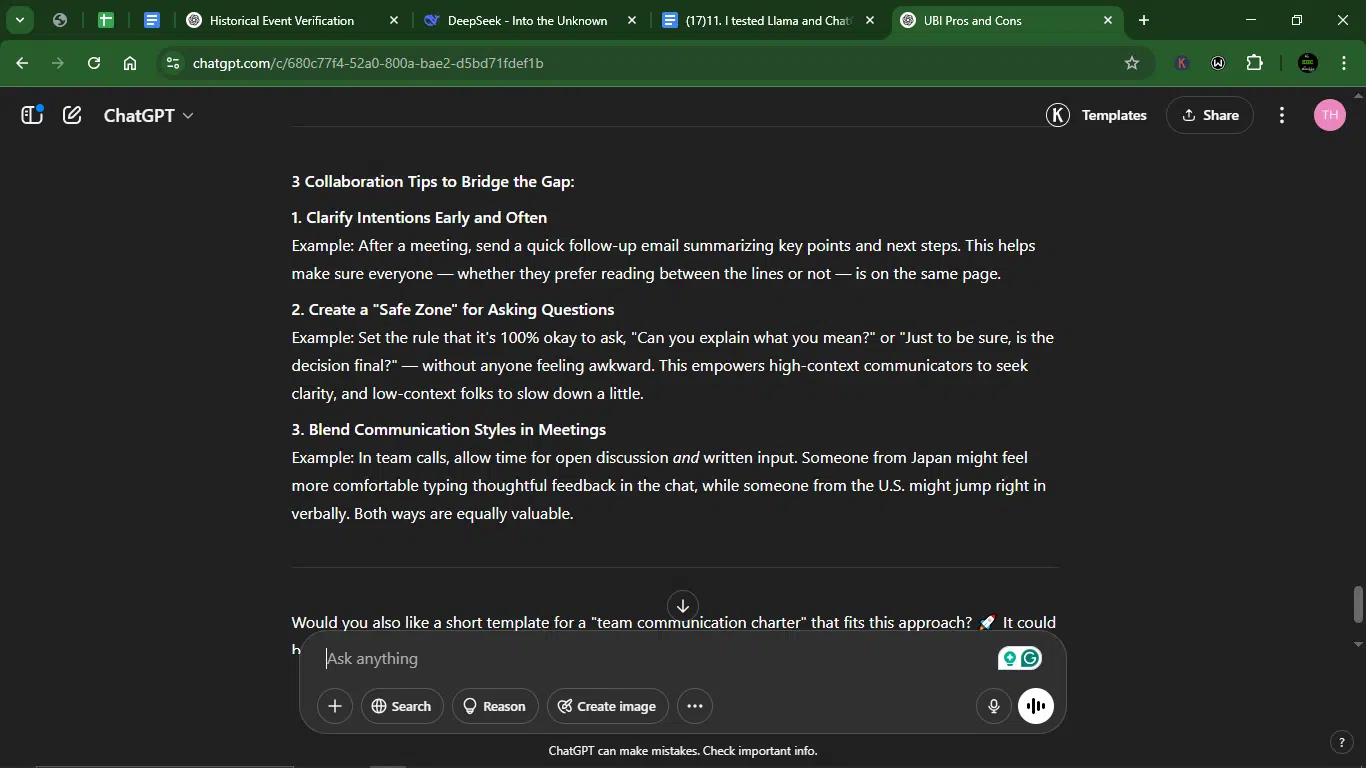

Prompt 5: Nuanced cultural explanation

This prompt tests each model’s ability to explain cultural norms or practices with depth and clarity, especially in ways relevant to global or workplace settings. I want to see if they can handle subtle differences, avoid stereotypes, and communicate in a way that promotes understanding and respect across cultures.

Prompt: “Explain ‘high-context vs. low-context communication’ to a remote team with members from Japan, Germany, and the U.S. Provide 3 collaboration tips to bridge gaps, with specific examples.”

Result:

Llama response:

ChatGPT response:

1. Accuracy

- Llama: Correctly defines high/low-context cultures but lacks vivid examples (e.g., “maybe” vs “no”). Tips are practical but generic.

- ChatGPT: Sharper contrast (“a ‘maybe’ from Japan might mean ‘no’”). Tips target remote work pain points (follow-ups, safe zones, blended meetings).

2. Creativity

- Llama: Straightforward but dry. Examples feel theoretical (“project kickoff meeting”).

- ChatGPT: Uses real-world phrases (“read between the lines”), hypothetical but vivid scenarios (German bluntness vs. Japanese indirectness).

3. Clarity

- Llama: Logical but overly formal (“prioritize direct and explicit messaging”).

- ChatGPT: Conversational tone (“In a remote team like ours…”), bullet-like tips for easy scanning.

4. Usability

- Llama: Requires minor tweaking to feel natural (e.g., “foster relationships” → “schedule virtual coffee chats”).

- ChatGPT: Ready to share with the team. Examples (“type feedback in chat”) are immediately applicable.

Winner: ChatGPT.

Why? ChatGPT wins for its clearer, more engaging, and practical advice. Llama’s response is accurate but feels like a textbook; ChatGPT’s feels like a team workshop.

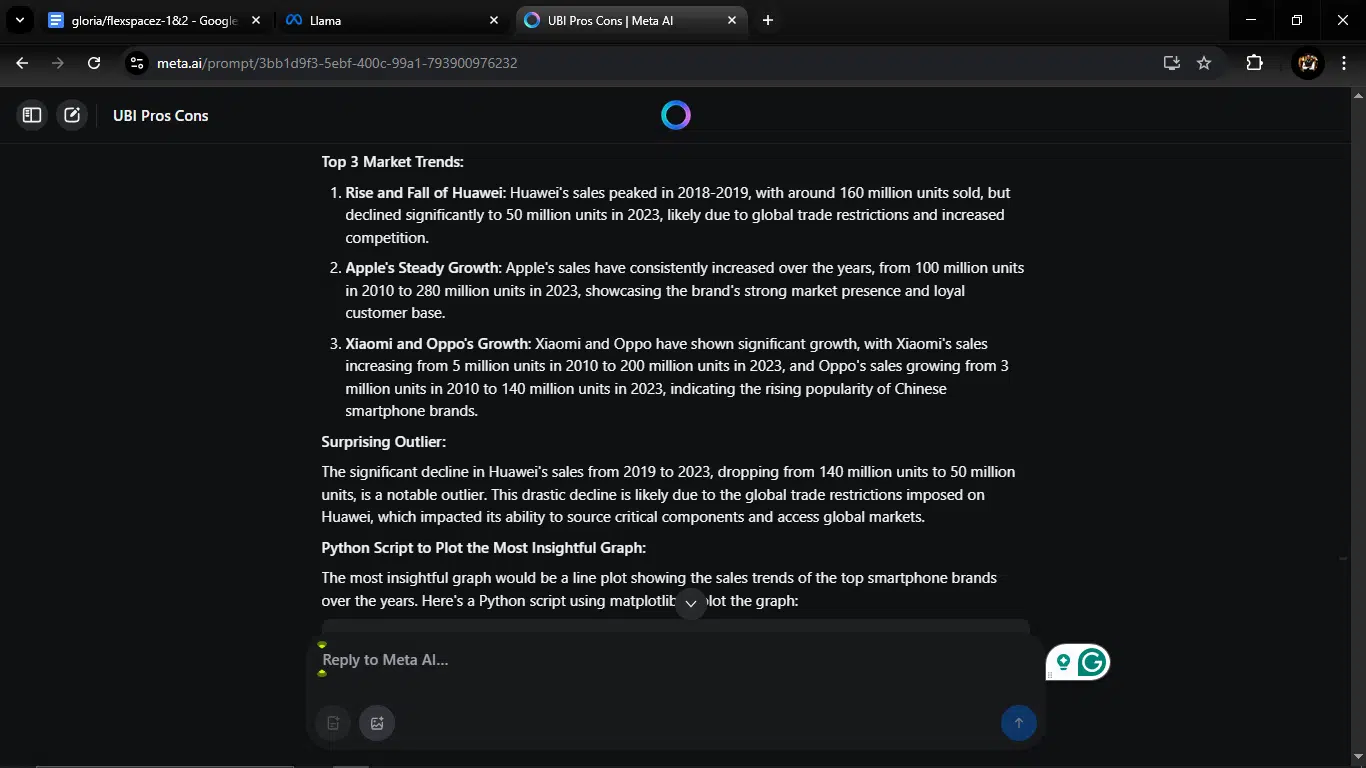

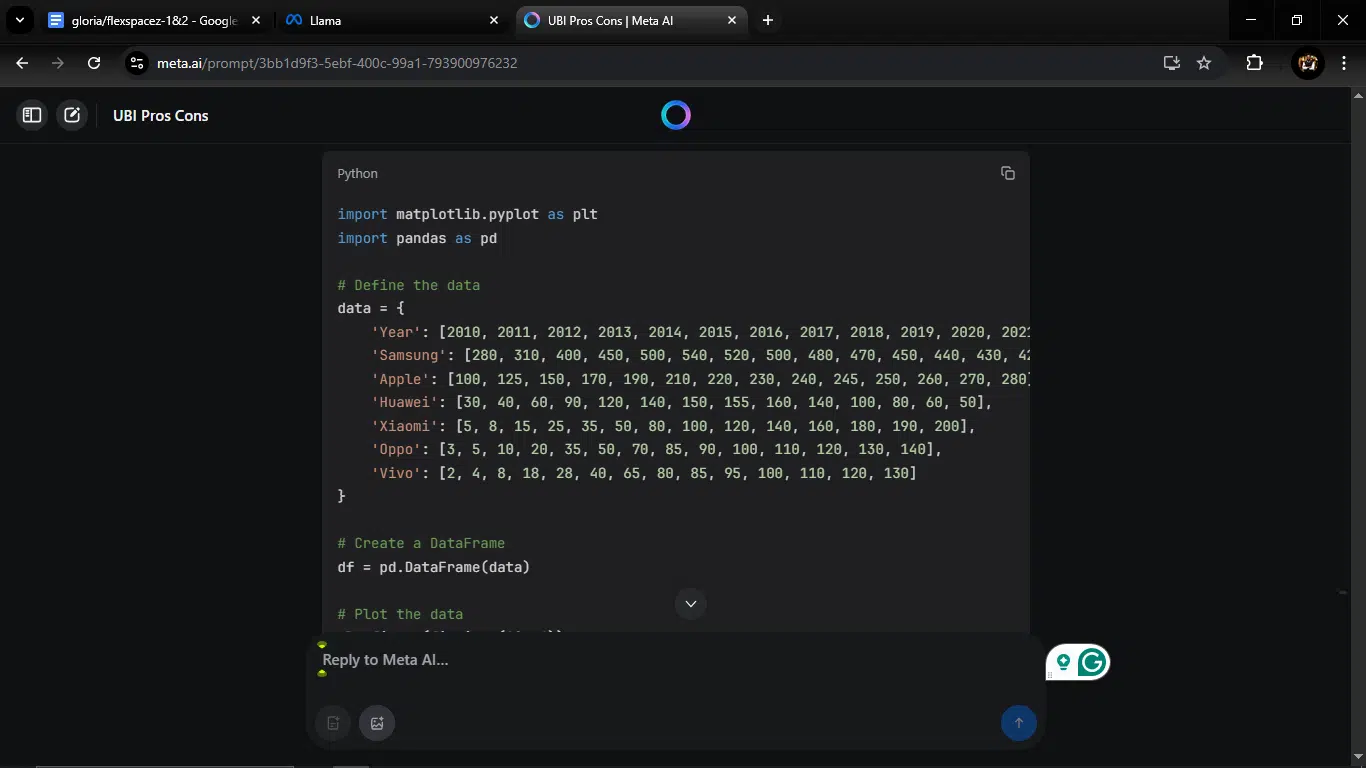

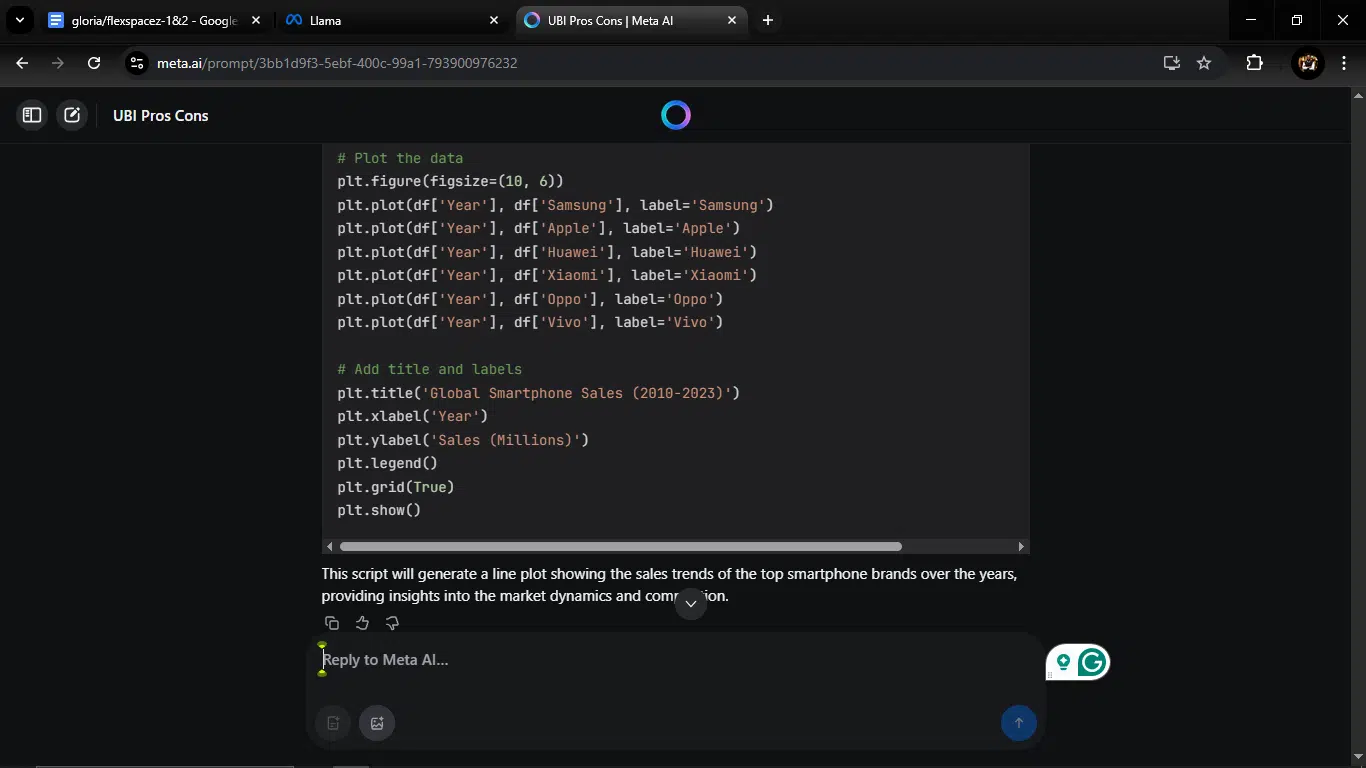

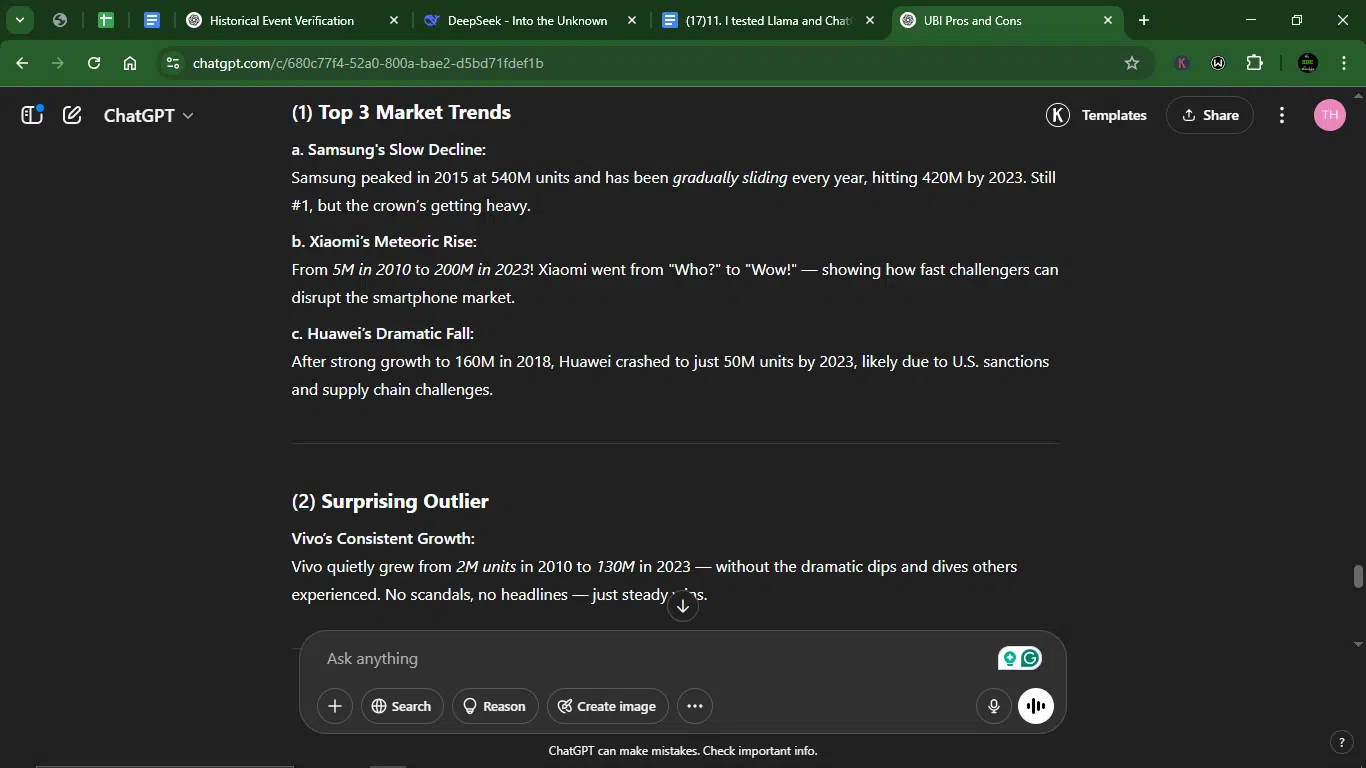

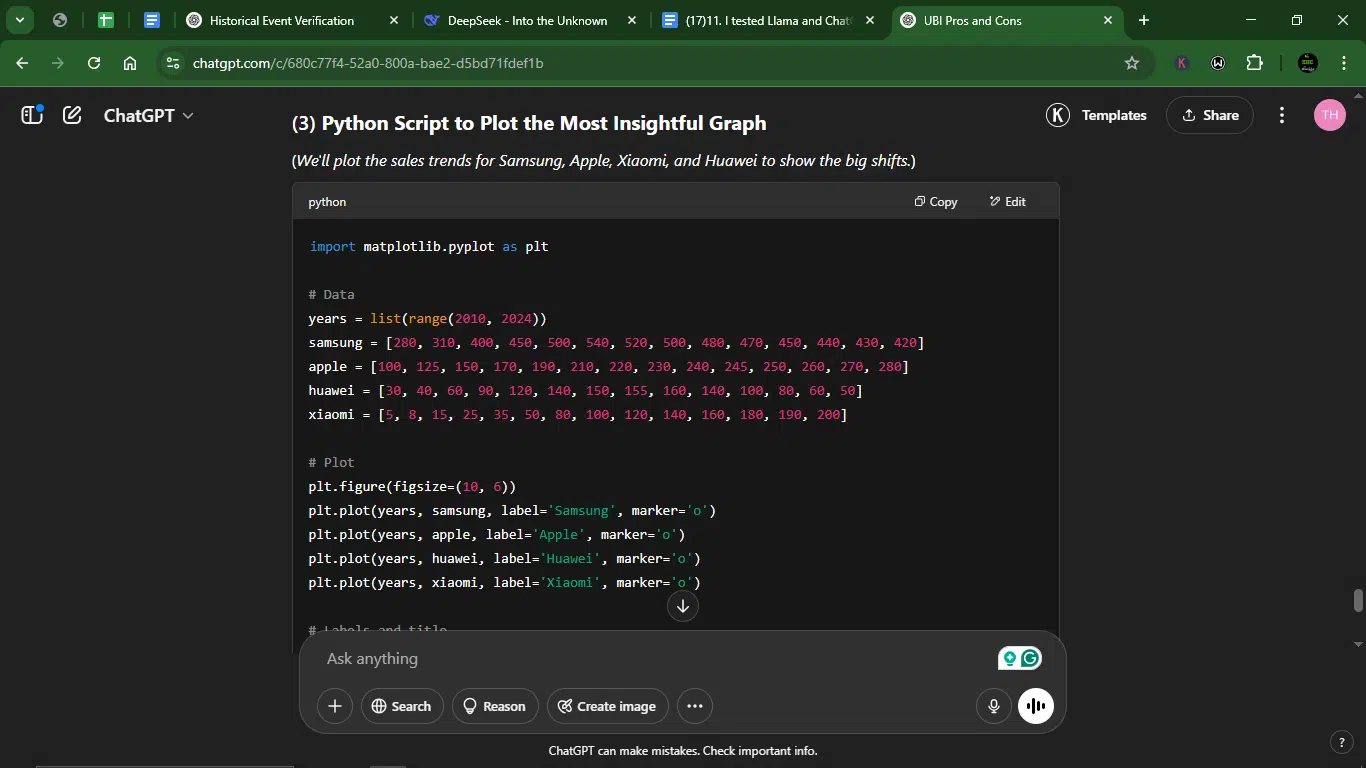

Prompt 6: Data analysis and visualization

This prompt tests the AI’s ability to interpret raw data, identify patterns, and present insights visually. I want to see if it can combine analytical thinking with coding skills (like Python or SQL) and translate numbers into clear, compelling visual stories that make the data easy to understand and act on.

Prompt: “Here’s a dataset of global smartphone sales (2010-2023). Identify: (1) Top 3 market trends, (2) A surprising outlier, and (3) Generate a Python script to plot the most insightful graph.

The data set:

Global Smartphone Sales (2010–2023)

Note: (Units: Millions of smartphones sold)

| Year | Samsung | Apple | Huawei | Xiaomi | Oppo | Vivo | Others |
|------|---------|-------|--------|--------|------|------|--------|
| 2010 | 280 | 100 | 30 | 5 | 3 | 2 | 80 |
| 2011 | 310 | 125 | 40 | 8 | 5 | 4 | 85 |
| 2012 | 400 | 150 | 60 | 15 | 10 | 8 | 90 |
| 2013 | 450 | 170 | 90 | 25 | 20 | 18 | 95 |
| 2014 | 500 | 190 | 120 | 35 | 35 | 28 | 100 |
| 2015 | 540 | 210 | 140 | 50 | 50 | 40 | 105 |
| 2016 | 520 | 220 | 150 | 80 | 70 | 65 | 110 |
| 2017 | 500 | 230 | 155 | 100 | 85 | 80 | 100 |
| 2018 | 480 | 240 | 160 | 120 | 90 | 85 | 90 |
| 2019 | 470 | 245 | 140 | 140 | 100 | 95 | 85 |
| 2020 | 450 | 250 | 100 | 160 | 110 | 100 | 80 |
| 2021 | 440 | 260 | 80 | 180 | 120 | 110 | 75 |
| 2022 | 430 | 270 | 60 | 190 | 130 | 120 | 70 |
| 2023 | 420 | 280 | 50 | 200 | 140 | 130 | 65 |

”

Result:

Llama response:

ChatGPT response:

1. Accuracy

- Llama: Correctly identifies trends but misses Samsung’s decline (focuses only on Huawei/Apple/Xiaomi). Outlier analysis is good, but obvious (Huawei’s fall).

- ChatGPT: Spots Samsung’s slide, highlights Vivo’s stealth growth as a fresh outlier, and prioritizes key players (Samsung, Apple, Xiaomi, Huawei) in the plot. It skipped Apple’s steady growth.

- Note: Each omission is understandable, since the prompt asked for only three trends.

2. Creativity

- Llama: Straightforward but generic (“Huawei declined due to restrictions”).

- ChatGPT: Vivid phrasing (“Samsung’s crown is getting heavy,” “Xiaomi went from ‘Who?’ to ‘Wow!’”). Makes data memorable.

3. Clarity

- Llama: Lists trends plainly in bullet points. Its Python script is functional and plots every brand in the dataset, including less relevant ones like Oppo and Vivo.

- ChatGPT: Bullet-point trends and a cleaner plot that prioritizes four key brands. Uses markers (o) for better readability.

4. Usability (Actionable Output)

- Llama: Requires manual tweaking to highlight Samsung’s decline. Plot includes noise (Oppo/Vivo).

- ChatGPT: Ready-to-use script and executive summary. Plot zooms in on most dramatic shifts.

Winner: ChatGPT.

Why? ChatGPT wins for its sharper insights, engaging narrative, and cleaner visualization. Llama’s analysis is accurate but less compelling.
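The prompt’s third task asks for a plotting script. Neither model’s script is reproduced here; instead, this is a minimal sketch of one way to approach the task, assuming Python 3.9+ and, for the plotting step only, matplotlib. It transcribes the dataset, computes each brand’s peak year and change from peak (which surfaces Samsung’s slide and Huawei’s fall), and plots the four brands the evaluation above singles out. The names `summarize` and `plot_brands` are illustrative, not taken from either model’s output.

```python
# Units: millions of smartphones sold, transcribed from the prompt's dataset.
YEARS = list(range(2010, 2024))
SALES = {
    "Samsung": [280, 310, 400, 450, 500, 540, 520, 500, 480, 470, 450, 440, 430, 420],
    "Apple":   [100, 125, 150, 170, 190, 210, 220, 230, 240, 245, 250, 260, 270, 280],
    "Huawei":  [30, 40, 60, 90, 120, 140, 150, 155, 160, 140, 100, 80, 60, 50],
    "Xiaomi":  [5, 8, 15, 25, 35, 50, 80, 100, 120, 140, 160, 180, 190, 200],
    "Oppo":    [3, 5, 10, 20, 35, 50, 70, 85, 90, 100, 110, 120, 130, 140],
    "Vivo":    [2, 4, 8, 18, 28, 40, 65, 80, 85, 95, 100, 110, 120, 130],
    "Others":  [80, 85, 90, 95, 100, 105, 110, 100, 90, 85, 80, 75, 70, 65],
}


def summarize(sales: dict[str, list[int]], years: list[int]) -> dict[str, dict]:
    """Per-brand peak year, latest value, change from peak, and growth since 2010."""
    summary = {}
    for brand, units in sales.items():
        peak = max(units)
        summary[brand] = {
            "peak_year": years[units.index(peak)],
            "latest": units[-1],
            # Negative values mean the brand has fallen from its peak.
            "change_from_peak_pct": round(100 * (units[-1] - peak) / peak, 1),
            "growth_multiple": round(units[-1] / units[0], 1),
        }
    return summary


def plot_brands(sales, years, brands=("Samsung", "Apple", "Huawei", "Xiaomi")):
    """Line chart of selected brands; requires matplotlib."""
    import matplotlib.pyplot as plt  # imported here so summarize() works without it

    for brand in brands:
        plt.plot(years, sales[brand], marker="o", label=brand)
    plt.title("Global smartphone sales, 2010-2023")
    plt.xlabel("Year")
    plt.ylabel("Units sold (millions)")
    plt.legend()
    plt.grid(True, alpha=0.3)
    plt.tight_layout()
    plt.show()


if __name__ == "__main__":
    for brand, stats in summarize(SALES, YEARS).items():
        print(brand, stats)
```

Calling `plot_brands(SALES, YEARS)` draws the chart. Limiting the plot to four brands mirrors the trade-off discussed above: a cleaner chart at the cost of hiding Oppo’s and Vivo’s growth, which the summary still reports (Vivo’s 2023 sales are 65 times its 2010 figure).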

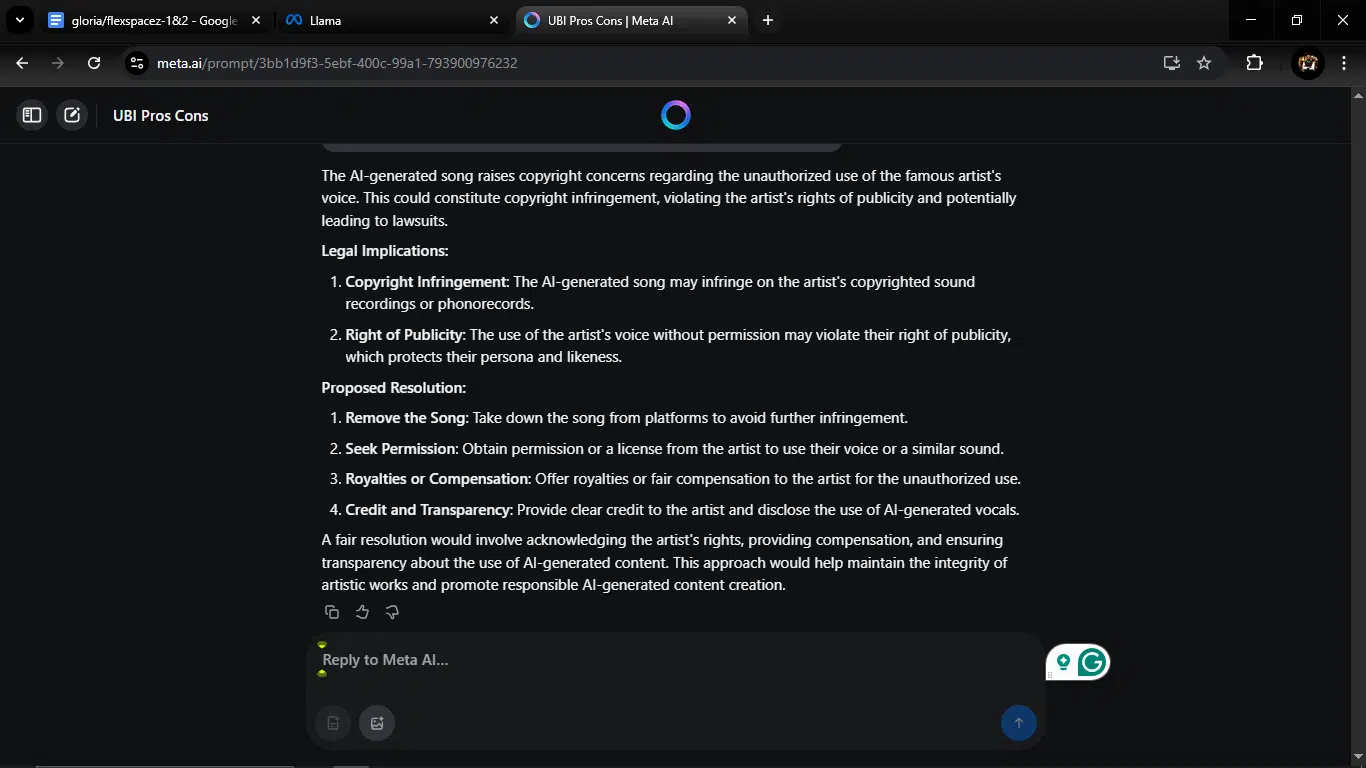

Prompt 7: Ethical dilemma

This prompt tests the AI’s ability to navigate morally complex situations using a blend of ethical reasoning, legal awareness, and practical problem-solving. I want to see if it can consider multiple perspectives, weigh consequences, and offer a thoughtful, well-rounded response — not just what’s legal or easy, but what’s right.

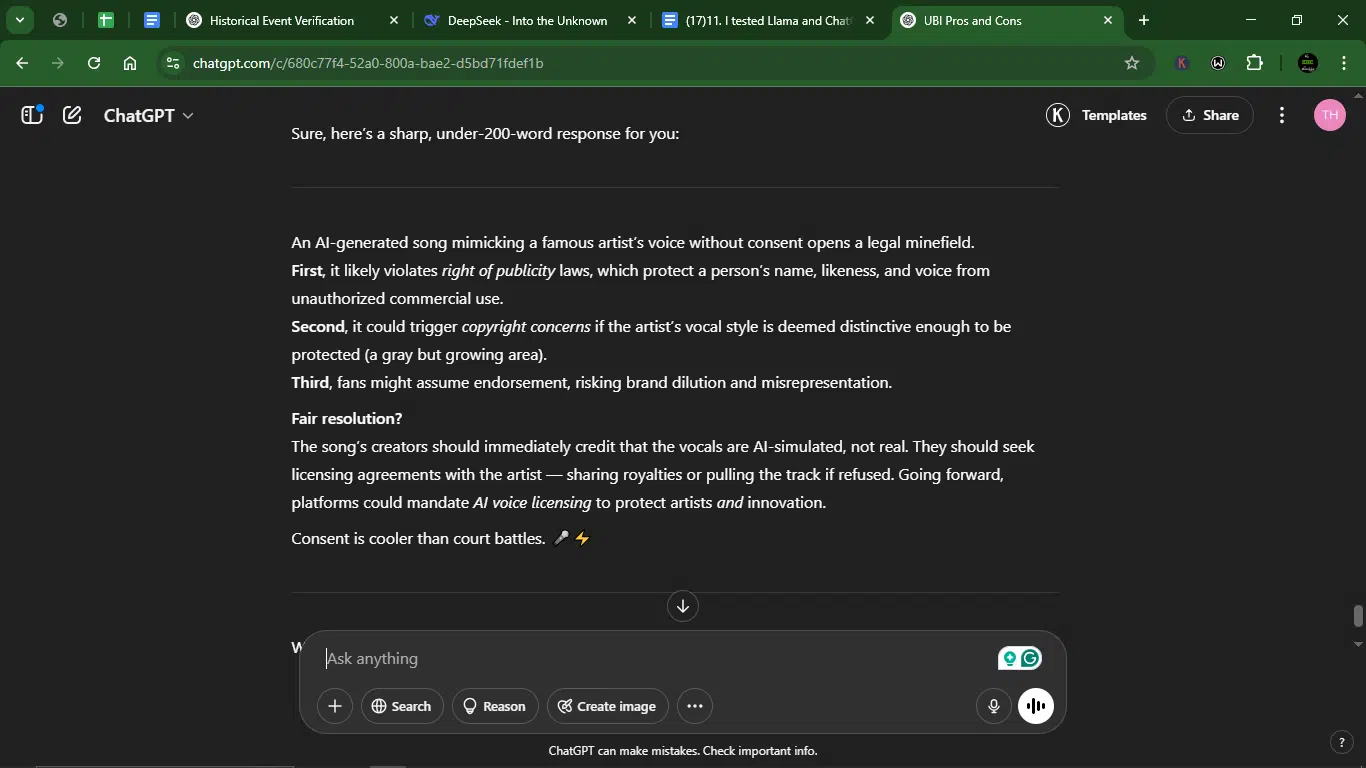

Prompt: “An AI-generated song goes viral, but the vocals resemble a famous artist’s voice without permission. Analyze legal/copyright implications and propose a fair resolution. Keep it under 200 words.”

Result:

Llama response:

ChatGPT response:

1. Accuracy

- Llama: Correctly identifies key legal issues (copyright infringement, right of publicity) and provides reasonable solutions.

- ChatGPT: Also identifies the main legal concerns accurately, with a strong emphasis on right of publicity. Both models understand the core issues.

2. Creativity

- Llama: Straightforward, professional analysis without much creative flair.

- ChatGPT: Shows more creativity with phrases like “legal minefield,” formatting choices (bold/italics), and the closing line “Consent is cooler than court battles. 🎤⚡”

3. Clarity

- Llama: Structured with clear headers and paragraphs, methodical approach.

- ChatGPT: Uses bullet points with bold headers, italicized key terms, and concise language, making it very scannable.

4. Usability

- Llama: Well-organized response that could be used as-is, though somewhat formal.

- ChatGPT: More immediately usable with its punchy style and visual formatting that helps highlight key points.

Winner: ChatGPT

Why? ChatGPT’s response is more engaging while maintaining accuracy, with better formatting and a conversational tone that makes complex legal concepts more accessible. It accomplishes the task within the word limit while adding creative elements that make the content more memorable.

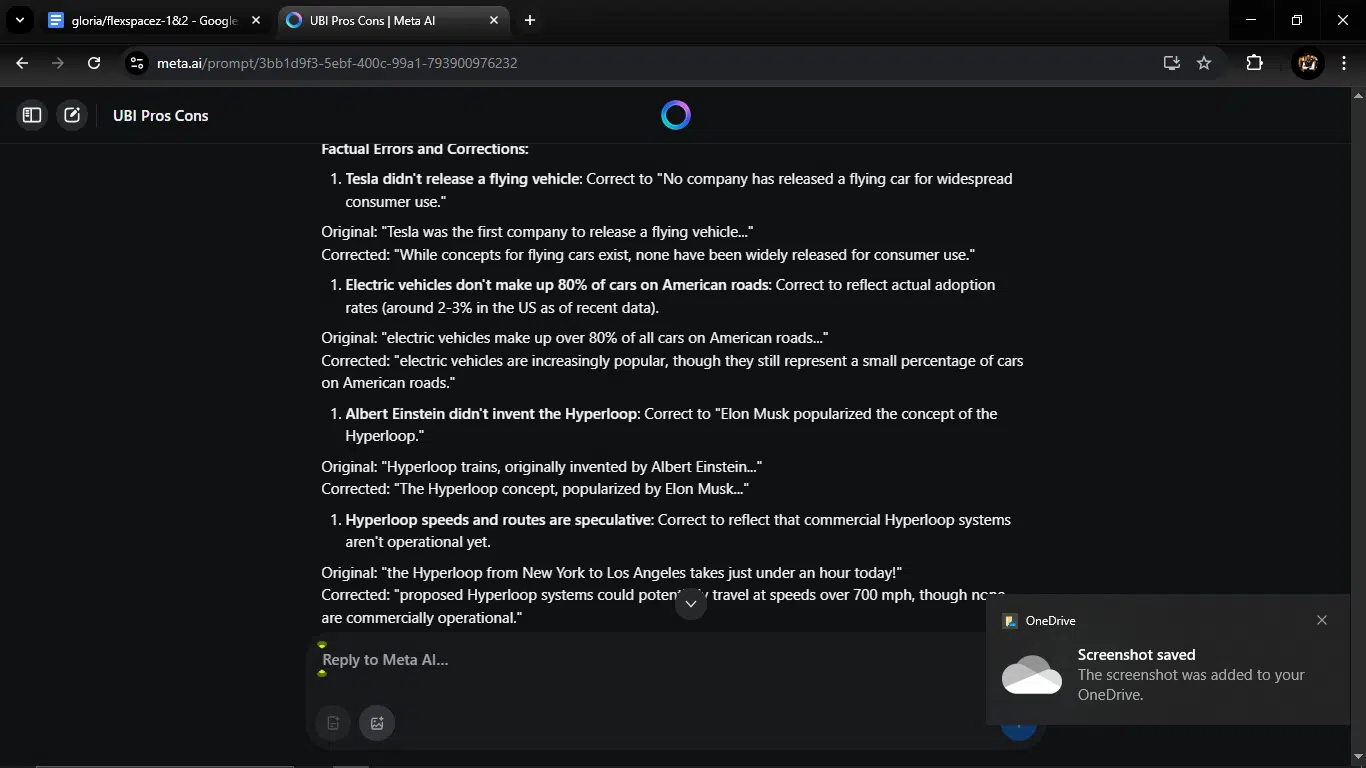

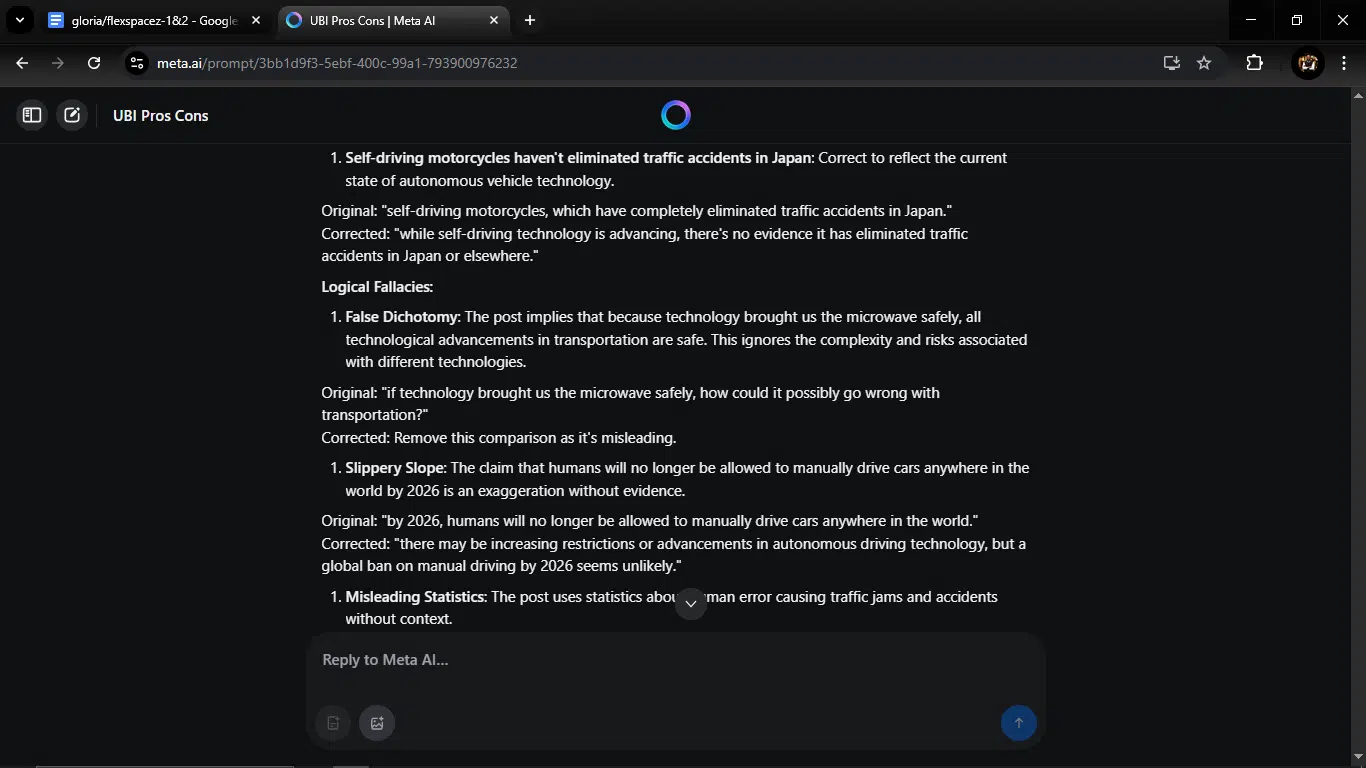

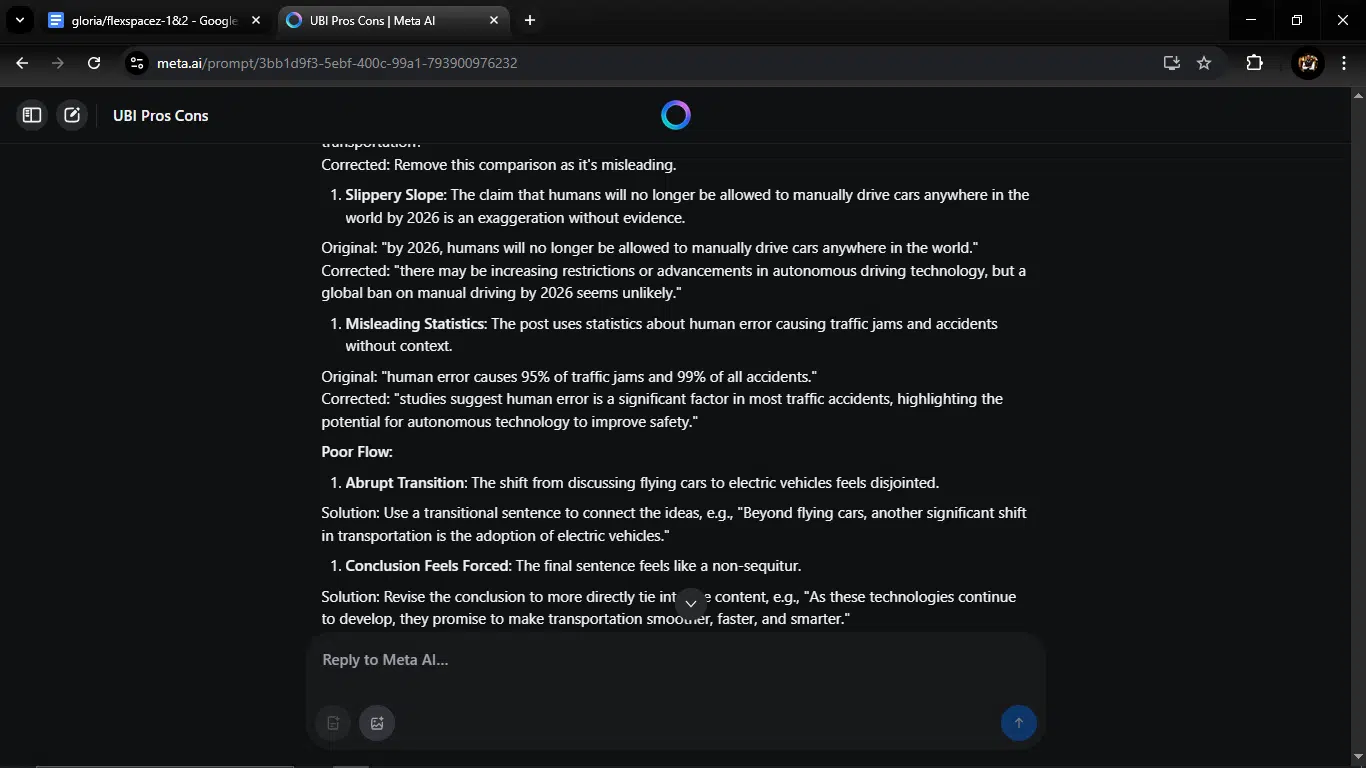

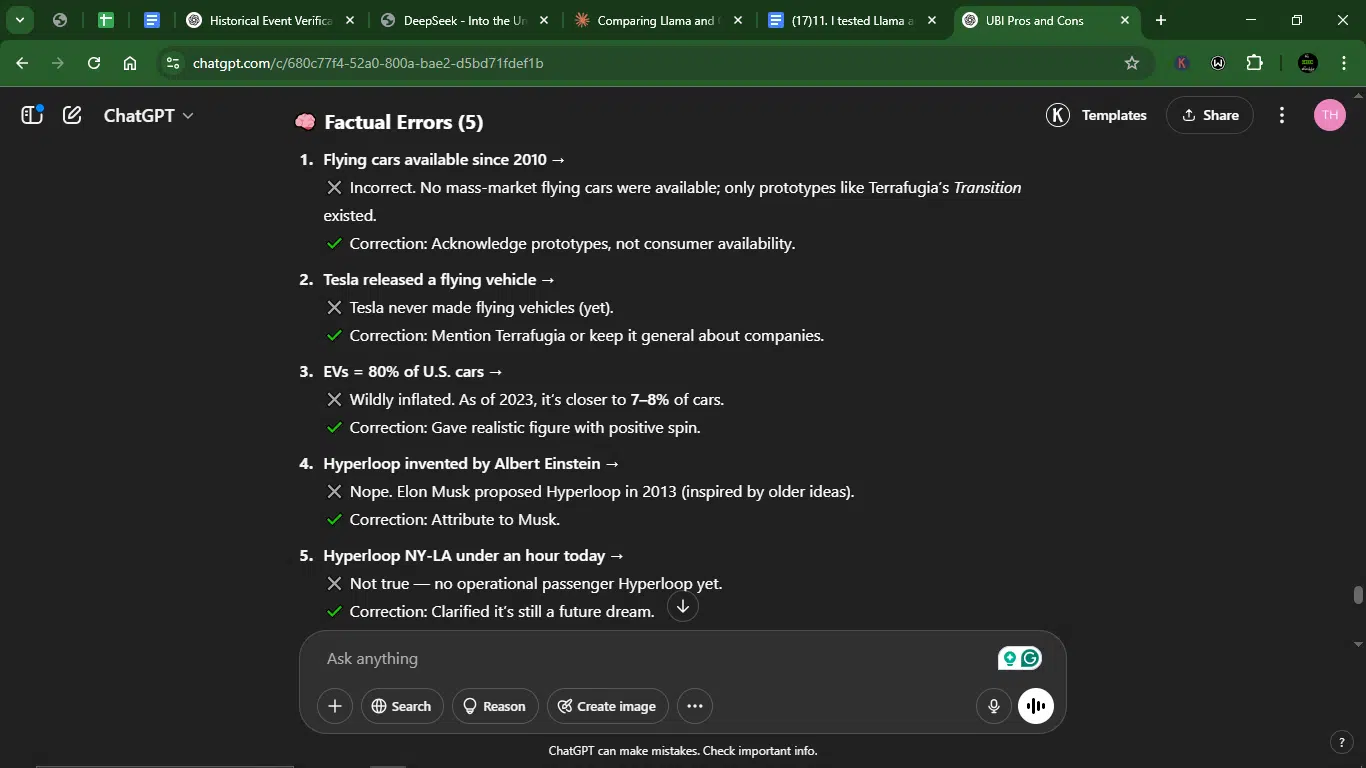

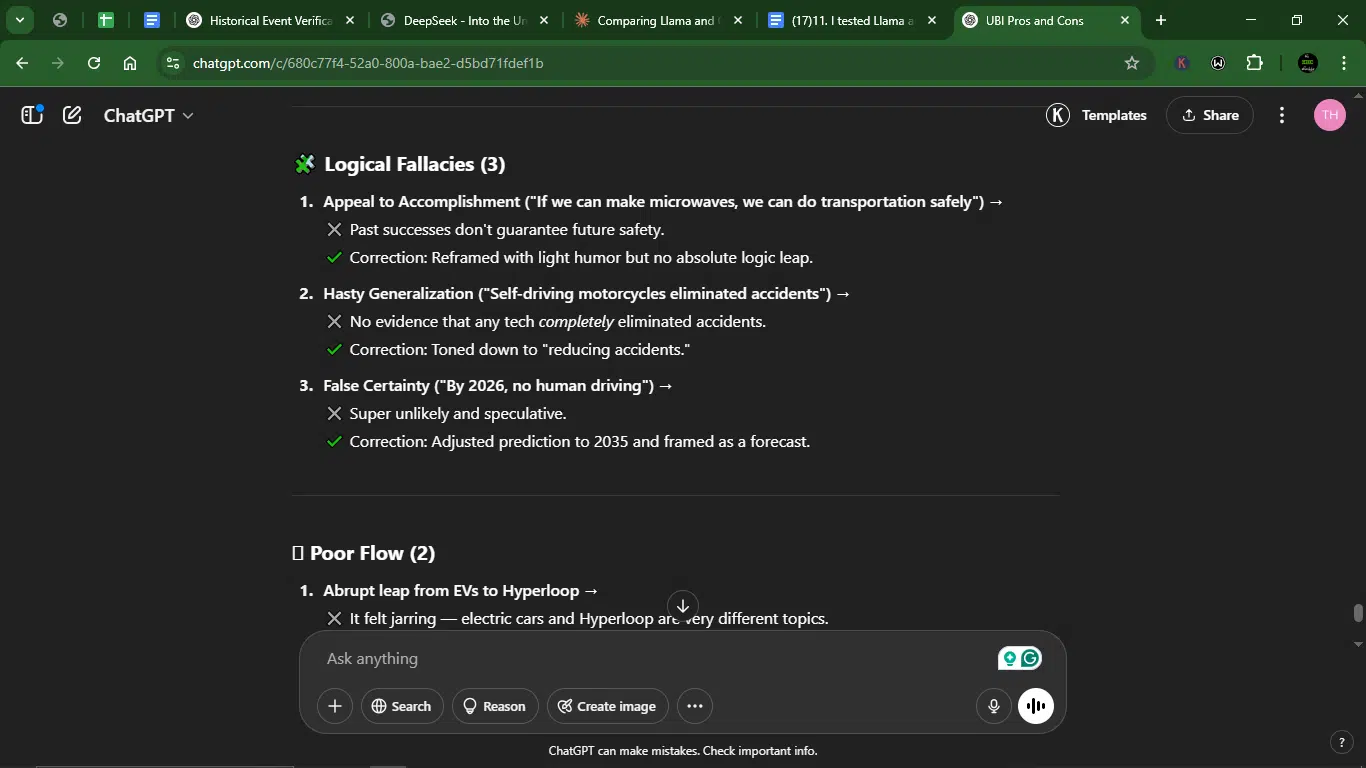

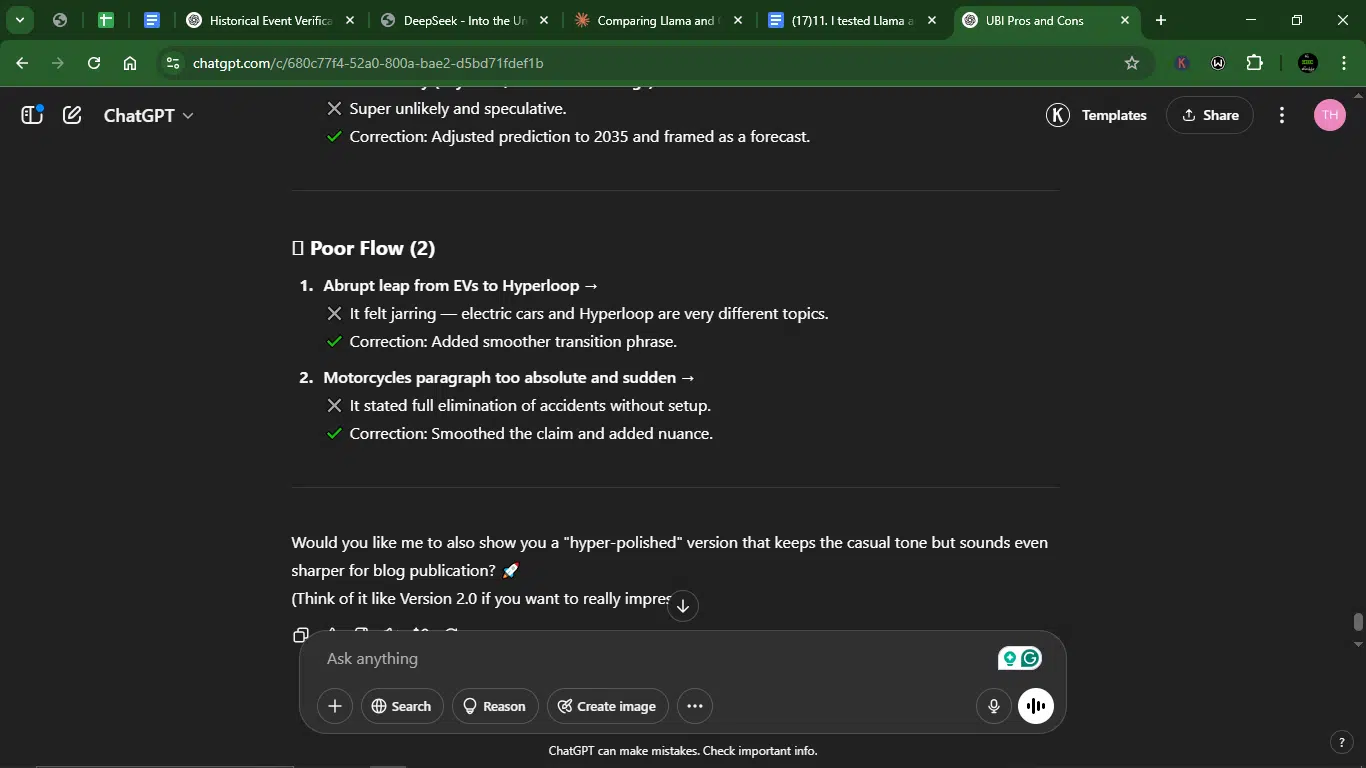

Prompt 8: Precision editing

I used this prompt to test how well Llama and ChatGPT can handle precise text editing. The goal is to evaluate their ability to spot subtle errors, improve clarity without changing meaning, and polish writing to a professional standard.

Prompt: “This 200-word blog post contains 5 subtle factual errors, 3 logical fallacies, and 2 instances of poor flow. Identify and correct all issues while preserving the author’s conversational tone. Explain each change.

The blog post: The Future of Transportation is Already Here

Everyone thinks flying cars are still a dream, but they’ve actually been on the market since 2010! Tesla was the first company to release a flying vehicle, though it was only available in Europe due to strict US airspace laws. Nowadays, electric vehicles make up over 80% of all cars on American roads, proving that gas-powered cars are pretty much extinct.

Public transport is also evolving faster than ever. Hyperloop trains, originally invented by Albert Einstein, can already travel at speeds over 900 miles per hour, making cross-country trips a thing of the past. In fact, the Hyperloop from New York to Los Angeles takes just under an hour today!

Another exciting development is self-driving motorcycles, which have completely eliminated traffic accidents in Japan. Experts agree that by 2026, humans will no longer be allowed to manually drive cars anywhere in the world. It’s only logical — human error causes 95% of traffic jams and 99% of all accidents.

Some skeptics argue these changes are dangerous, but if technology brought us the microwave safely, how could it possibly go wrong with transportation? The future is already here — and it’s smoother, faster, and smarter than ever.”

Result:

Llama response:

ChatGPT response:

1. Accuracy

- Llama: Accurately identified all 5 factual errors, 3 logical fallacies, and 2 flow issues with clear explanations for each.

- ChatGPT: Also correctly identified all issues with precise explanations and specific corrections.

2. Creativity

- Llama: Provides a methodical, professional correction approach but maintains a relatively formal tone.

- ChatGPT: Shows more creative flair with casual phrases like “surgically but stylishly,” emoji use, and visual indicators (✖, ✔) while preserving the blog’s conversational style.

3. Clarity

- Llama: Uses a structured format with clear sections for each type of error and includes original/corrected versions.

- ChatGPT: Uses emoji categorization (🧠, 🧩, 🪜), bold headers, and visual cues that make the explanations highly scannable and engaging.

4. Usability

- Llama: Provides a completely revised blog post that corrects all issues, though the tone becomes more formal than the original.

- ChatGPT: Delivers a corrected post that maintains the conversational, excited tone of the original while fixing all issues, plus offers a “hyper-polished” version if desired.

Winner: ChatGPT

Why? While both models accurately identified and corrected all the issues, ChatGPT’s response stands out for maintaining the blog’s conversational energy while providing clearer explanations with visual aids. The formatting choices make the corrections easier to understand, and ChatGPT’s revised post stays closer to the original author’s style. Llama’s response is comprehensive and accurate, but shifts to a more formal tone that loses some of the original blog’s character.
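Part of judging precision edits is seeing exactly what changed between the original and the revision. A word-level diff makes that visible. This is a minimal sketch using Python’s standard-library `difflib`; the `word_changes` helper name is illustrative, and the sample sentences are demonstration inputs, not either model’s output.

```python
import difflib


def word_changes(original: str, revised: str) -> list[tuple[str, str, str]]:
    """Return (operation, original words, revised words) for every non-matching span."""
    a, b = original.split(), revised.split()
    changes = []
    matcher = difflib.SequenceMatcher(None, a, b)
    for op, i1, i2, j1, j2 in matcher.get_opcodes():
        if op != "equal":  # op is "replace", "delete", or "insert"
            changes.append((op, " ".join(a[i1:i2]), " ".join(b[j1:j2])))
    return changes


if __name__ == "__main__":
    before = "Nowadays, electric vehicles make up over 80% of all cars on American roads."
    after = "Nowadays, electric vehicles make up a small share of all cars on American roads."
    for change in word_changes(before, after):
        print(change)
```

Splitting on whitespace keeps punctuation attached to words, which is usually fine for spotting substantive edits; a tone shift like Llama’s would show up as many small `replace` spans rather than a few targeted ones.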

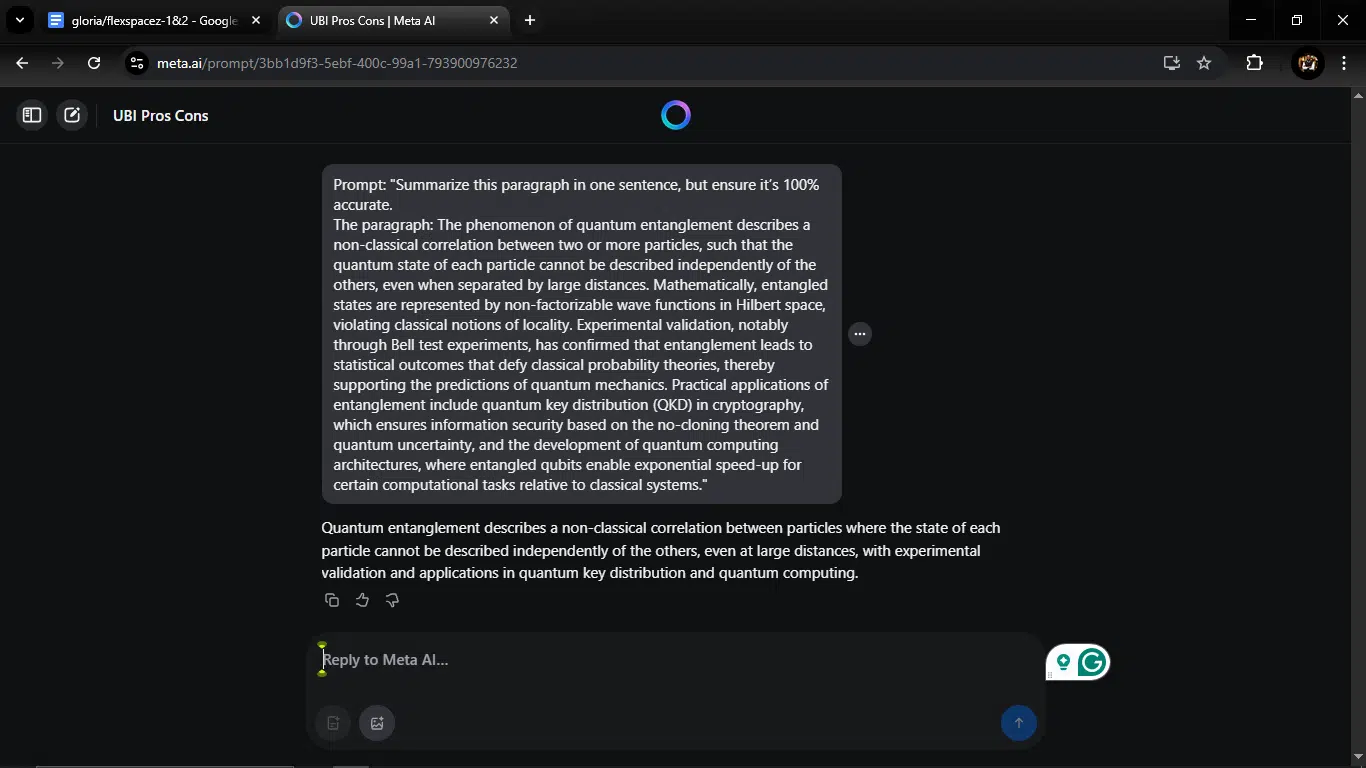

Prompt 9: Summarization

This prompt tests the AI’s ability to distill key information from a longer text while maintaining precision and conciseness. I want to see if it can identify the core message and convey it clearly without losing important details or adding unnecessary fluff. It should also be able to self-verify, ensuring that the summary accurately reflects the original content.

Prompt: “Summarize this paragraph in one sentence, but ensure it’s 100% accurate.

The paragraph: The phenomenon of quantum entanglement describes a non-classical correlation between two or more particles, such that the quantum state of each particle cannot be described independently of the others, even when separated by large distances. Mathematically, entangled states are represented by non-factorizable wave functions in Hilbert space, violating classical notions of locality. Experimental validation, notably through Bell test experiments, has confirmed that entanglement leads to statistical outcomes that defy classical probability theories, thereby supporting the predictions of quantum mechanics. Practical applications of entanglement include quantum key distribution (QKD) in cryptography, which ensures information security based on the no-cloning theorem and quantum uncertainty, and the development of quantum computing architectures, where entangled qubits enable exponential speed-up for certain computational tasks relative to classical systems.”

Result:

Llama response:

ChatGPT response:

1. Accuracy

- Llama: Accurately captures the core concept (non-classical correlation), the key property (particles can’t be described independently even at a distance), validation, and applications.

- ChatGPT: Also captures the essential elements but uses more technical language (“defying classical theories of locality and probability”).

2. Creativity

- Llama: Uses straightforward language with minimal creative flair, focusing on clear information transfer.

- ChatGPT: Slightly more creative in its phrasing (“particles share inseparable states”), but still maintains technical accuracy.

3. Clarity

- Llama: Very clear structure that follows the original paragraph’s flow, making it easy to follow.

- ChatGPT: Concise but uses more technical terms that might be less accessible to non-experts.

4. Usability

- Llama: Highly usable summary that maintains all key points in a single, readable sentence.

- ChatGPT: Also usable, but the more complex language might require more background knowledge to fully understand.

Winner: Llama

Why? For this specific task of creating a 100% accurate single-sentence summary, Llama’s response is slightly better. It maintains all the key information from the original paragraph in a more accessible way, following a logical structure that makes the complex topic easier to grasp. While ChatGPT’s summary is also accurate, its more technical phrasing might be less approachable for readers without a physics background.

Prompt 10: Future prediction

This prompt tests the AI’s ability to synthesize research, identify emerging trends, and make informed predictions about the future. I want to see how well it can apply critical thinking to assess current data and provide a plausible, well-reasoned forecast. It’s not about guessing, but using evidence and logical reasoning to envision what comes next.

Prompt: “Predict how AI will change traditional classrooms by 2030. Cover: (1) Teacher roles, (2) Assessment methods, and (3) Risks of over-reliance. Support claims with current ed-tech trends.”

Result:

Llama response:

ChatGPT response:

1. Accuracy

- Llama: Provides comprehensive analysis with systematic coverage of all three requested areas, backed by observable current trends.

- ChatGPT: Also covers all three areas but with fewer specific examples of current ed-tech trends to support claims.

2. Creativity

- Llama: Presents information in a structured, professional format with minimal creative flair.

- ChatGPT: Uses more engaging language and metaphors (“less chalkboards, more chess with supercomputers”) with a conversational tone.

3. Clarity

- Llama: Extremely clear organization with bullet points and distinct sections for each requested area.

- ChatGPT: Good organization with bold headers for each section, though less detailed than Llama’s response.

4. Usability

- Llama: Highly usable as an informative piece with comprehensive coverage that could be used in academic or policy contexts.

- ChatGPT: More casual style makes it accessible for general audiences, but provides less depth for professional use.

Winner: Llama

Why? While ChatGPT offers a more engaging style, Llama provides a more comprehensive and well-supported response that directly addresses the prompt requirements. Llama’s detailed breakdown of teacher roles, assessment methods, and risks demonstrates deeper engagement with current educational technology trends. For an informative piece meant to predict future educational developments, Llama’s thorough and well-structured approach delivers superior value despite its less conversational tone.

Overall performance comparison: Llama vs. ChatGPT (10-prompt battle)

| Prompt | Winner | Reason |
|--------|--------|--------|
| 1. Complex reasoning (UBI debate) | ChatGPT | More engaging arguments, better structure |
| 2. Image description | ChatGPT | Llama couldn’t process images |
| 3. Creative writing (horror fiction) | ChatGPT | More cohesive narrative, better atmosphere |
| 4. Multimodal interpretation | ChatGPT | Better audience adaptation and polish |
| 5. Cultural explanation | ChatGPT | More practical, culturally aware tips |
| 6. Data analysis & visualization | ChatGPT | Sharper insights, cleaner visualization |
| 7. Ethical dilemma | ChatGPT | More engaging and scannable, memorable phrasing |
| 8. Precision editing | ChatGPT | Better preserved original tone, clearer explanations |
| 9. Summarization | Llama | More accessible technical summary |
| 10. Future prediction | Llama | More comprehensive, better-supported response |

Final score:

ChatGPT: 8 wins.

Llama: 2 wins.
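To double-check the final score, the winners from the comparison table can be encoded and counted. This minimal Python sketch simply transcribes the table (it is not output from either model); the `tally` helper name is illustrative.

```python
from collections import Counter

# Winners transcribed from the 10-prompt comparison table.
RESULTS = {
    "Complex reasoning (UBI debate)": "ChatGPT",
    "Image description": "ChatGPT",
    "Creative writing (horror fiction)": "ChatGPT",
    "Multimodal interpretation": "ChatGPT",
    "Cultural explanation": "ChatGPT",
    "Data analysis & visualization": "ChatGPT",
    "Ethical dilemma": "ChatGPT",
    "Precision editing": "ChatGPT",
    "Summarization": "Llama",
    "Future prediction": "Llama",
}


def tally(results: dict[str, str]) -> Counter:
    """Count how many prompts each model won."""
    return Counter(results.values())


if __name__ == "__main__":
    for model, wins in tally(RESULTS).most_common():
        print(f"{model}: {wins} wins")
```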

Final verdict

ChatGPT demonstrated superior performance in most categories, particularly excelling in creative tasks, audience adaptation, and practical applications. Llama showed strength in technical summarization and detailed future predictions, but was held back by the lack of image input in the interface I tested and by generally less engaging output.

My recommendation

- For creative writing, public communication, and tasks requiring engagement: Choose ChatGPT

- For technical summaries and research-backed, academic-style predictions: Consider Llama

- For image-related tasks: Choose ChatGPT, the only one of the two that accepted images in my tests

Pricing for Llama and ChatGPT

Llama is free (mostly)

- For personal use, Llama (both Llama 2 and Llama 3) is completely free.

- Meta also offers Llama for commercial use under a relatively permissive license. It’s designed to power everything from scrappy weekend projects to enterprise-grade tools. While free, it comes with conditions (e.g., services with more than 700 million monthly active users must request a separate license from Meta, and all use is subject to Meta’s acceptable use policy).

ChatGPT pricing

| Plan | Features | Cost |
|------|----------|------|
| Free | Access to GPT-4o mini, real-time web search, limited access to GPT-4o and o3-mini, limited file uploads, data analysis, image generation, voice mode, Custom GPTs | $0/month |
| Plus | Everything in Free, plus: Extended messaging limits, advanced file uploads, data analysis, image generation, voice modes (video/screen sharing), access to o3-mini, custom GPT creation | $20/month |
| Pro | Everything in Plus, plus: Unlimited access to reasoning models and GPT-4o, advanced voice features, research previews, high-performance tasks, access to Sora video generation, and Operator (U.S. only) | $200/month |

Why use tools like Llama and ChatGPT?

- Efficiency: Automate repetitive tasks, including coding, editing, and research.

- Creativity: Generate ideas, stories, or designs quickly.

- Accessibility: Simplify complex topics for beginners (e.g., quantum physics), or help experts dig deeper.

- Scalability: Handle larger datasets or multilingual tasks without major changes to personnel, workflow, or cost.

- Cost-effectiveness: You don’t need to hire specialists for every single task, especially if the budget is tight.

Challenges with these AI tools

- Accuracy risks: AI tools make tasks easier and faster, but you still have to watch out for hallucinations (false facts) or check for outdated data.

- Bias: AI models are only as good as the data they are trained on. For instance, if a particular AI tool is trained on data with racial prejudices, those prejudices can taint its output.

- Over-reliance: Using these tools every once in a while is cool. But relying on them 100% may stifle your own critical thinking and how well you can produce original work.

- Privacy concerns: Sensitive inputs might be processed on external servers.

- Context limits: They still struggle with ultra-long or hyper-niche topics.

Best practices for making the most of tools like these

If you want to make AI an efficient assistant, follow these tips:

- Prompt like a pro: Be clear, specific, and contextual. Think “prompt engineering,” not “prompt hoping.”

- Chain tasks: Break big goals into multiple steps. Don’t dump everything into one mega prompt.

- Always review the output: LLMs can write something that looks right but could be wrong.

- Use multiple models: Use Llama for local tasks and ChatGPT for heavy lifting. There’s no law saying you must pick just one.
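The “Chain tasks” and “Use multiple models” tips combine naturally. Below is a minimal Python sketch, assuming each model is wrapped as a plain function that takes a prompt and returns text; `run_chain` and the `Model` type are hypothetical, and no real Llama or OpenAI API is called. Each step’s answer becomes the next step’s input, and each step can go to a different model.

```python
from typing import Callable, List, Tuple

# Hypothetical interface: a model is any function from prompt text to reply text.
# In practice you might wrap a locally hosted Llama or a hosted ChatGPT API here.
Model = Callable[[str], str]


def run_chain(task: str, steps: List[Tuple[str, Model]]) -> List[str]:
    """Run a big task as small prompts; each reply is fed into the next step."""
    replies: List[str] = []
    context = task
    for instruction, model in steps:
        reply = model(f"{instruction}\n\nInput:\n{context}")
        replies.append(reply)
        context = reply  # the next step builds on this step's output
    return replies


if __name__ == "__main__":
    # Stand-in models so the sketch runs offline; swap in real clients yourself.
    def local_model(prompt: str) -> str:
        return "1. Hook 2. Findings 3. Verdict"

    def hosted_model(prompt: str) -> str:
        return "Draft based on: " + prompt.splitlines()[-1]

    steps = [("Outline this post", local_model), ("Write a draft from this outline", hosted_model)]
    for reply in run_chain("A post comparing Llama and ChatGPT", steps):
        print(reply)
```

Keeping each step small also makes the “Always review the output” tip easier to follow, since you can inspect every intermediate reply before it feeds the next step.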

Conclusion

After testing both models with 10 diverse prompts, the verdict is clear: ChatGPT comes out on top for most real-world use cases.

It’s more polished, more versatile, and better at delivering accurate, creative, and clear responses. If you’re a developer, content creator, researcher, or just an AI enthusiast who wants a dependable, user-friendly model, ChatGPT is your best bet, even with the $20/month price tag.

That said, Llama holds its ground as a powerful, free alternative for technical tasks (summarization and research-backed forecasting) and customization (fine-tuning for specific needs).

Whichever side you lean towards, you’re living in an incredible moment for AI innovation. And if this test taught me anything, it’s that there’s no such thing as “one model to rule them all” yet. Find what works for you, and keep testing other models as they evolve.

FAQs About Llama vs ChatGPT

1. Can I use Llama 3.1 for free?

Yes. Llama 3.1’s model weights are free to download and use for both research and commercial purposes, as long as you comply with Meta’s license terms.

2. Is ChatGPT Plus worth $20/month?

Yes, especially if you use it regularly for writing, coding, or research. The accuracy, features (like tools), and speed are worth it.

3. Which model is better for coding?

ChatGPT is generally more reliable and consistent for coding tasks, but Llama can hold its own in many cases, especially for logic and math.

4. Does either model support multimodal input?

ChatGPT supports images and can describe, summarize, or analyze visual input. Llama 3.1 doesn’t yet support native multimodal input.

Disclaimer!

This publication, review, or article (“Content”) is based on our independent evaluation and is subjective, reflecting our opinions, which may differ from others’ perspectives or experiences. We do not guarantee the accuracy or completeness of the Content and disclaim responsibility for any errors or omissions it may contain.

The information provided is not investment advice and should not be treated as such, as products or services may change after publication. By engaging with our Content, you acknowledge its subjective nature and agree not to hold us liable for any losses or damages arising from your reliance on the information provided.

Always do your own research and consult professionals where necessary.