Some days, I just want to get through a bug fix or write a function without staring at the screen for 15 minutes wondering where to start. That’s when I reach for an AI coding assistant. But recently, I found myself switching between DeepSeek and GitHub Copilot more than I expected, and not always for the reasons you’d think. That led me to compare DeepSeek and Copilot directly.

To settle it, I ran both tools through the same 10 developer-focused prompts. These included tasks like debugging, generating boilerplate code, writing regex, and even translating comments. What I found surprised me in a few places and confirmed a few hunches in others.

Let me take you through my experience.

How I set up the test

For this comparison, I used the same 10 prompts across both tools, keeping the environment as consistent as possible: VS Code, no internet distractions, and no extra plugins running. Each prompt was evaluated based on code quality, speed, accuracy, and how much editing I had to do afterward.

I didn’t cherry-pick results. If something failed, I left it in.

Let’s get into it.

Prompt 1: Generate a Python function to validate email addresses

Prompt: “Write a Python function that validates whether a given string is a properly formatted email address.”

This is a common utility task, something you’d typically write once and reuse across projects. It’s also a good test of how well the AI handles regular expressions and function structure without bloating the code.

What I’m looking for:

- Does the tool use a reasonable regex pattern?

- Is the function readable and reusable?

- Does it handle edge cases like missing @, extra spaces, or invalid domain formats?

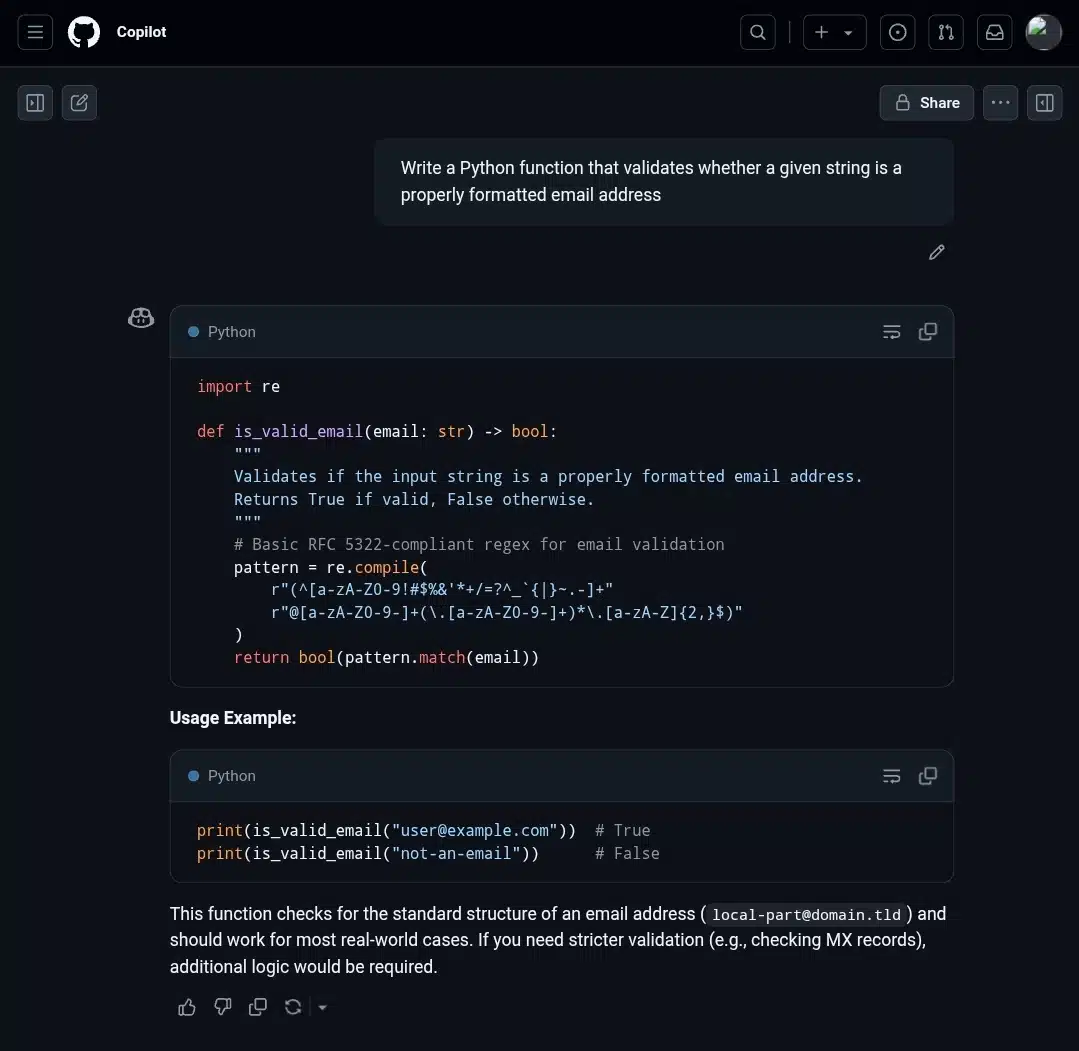

GitHub Copilot Response

Pros

- Regex pattern: More aligned with RFC 5322 rules, making it slightly more comprehensive. It catches edge cases like user@localhost or emails with uncommon but valid characters.

- Code style: Clean, type-annotated (takes email: str, returns bool), concise. Feels production-ready.

- Usage example: Simple and effective, demonstrates practical use.

Cons

- No explanation: There’s no inline or external explanation of the regex.

- Less beginner-friendly: If you’re unfamiliar with advanced regex, the pattern looks intimidating.

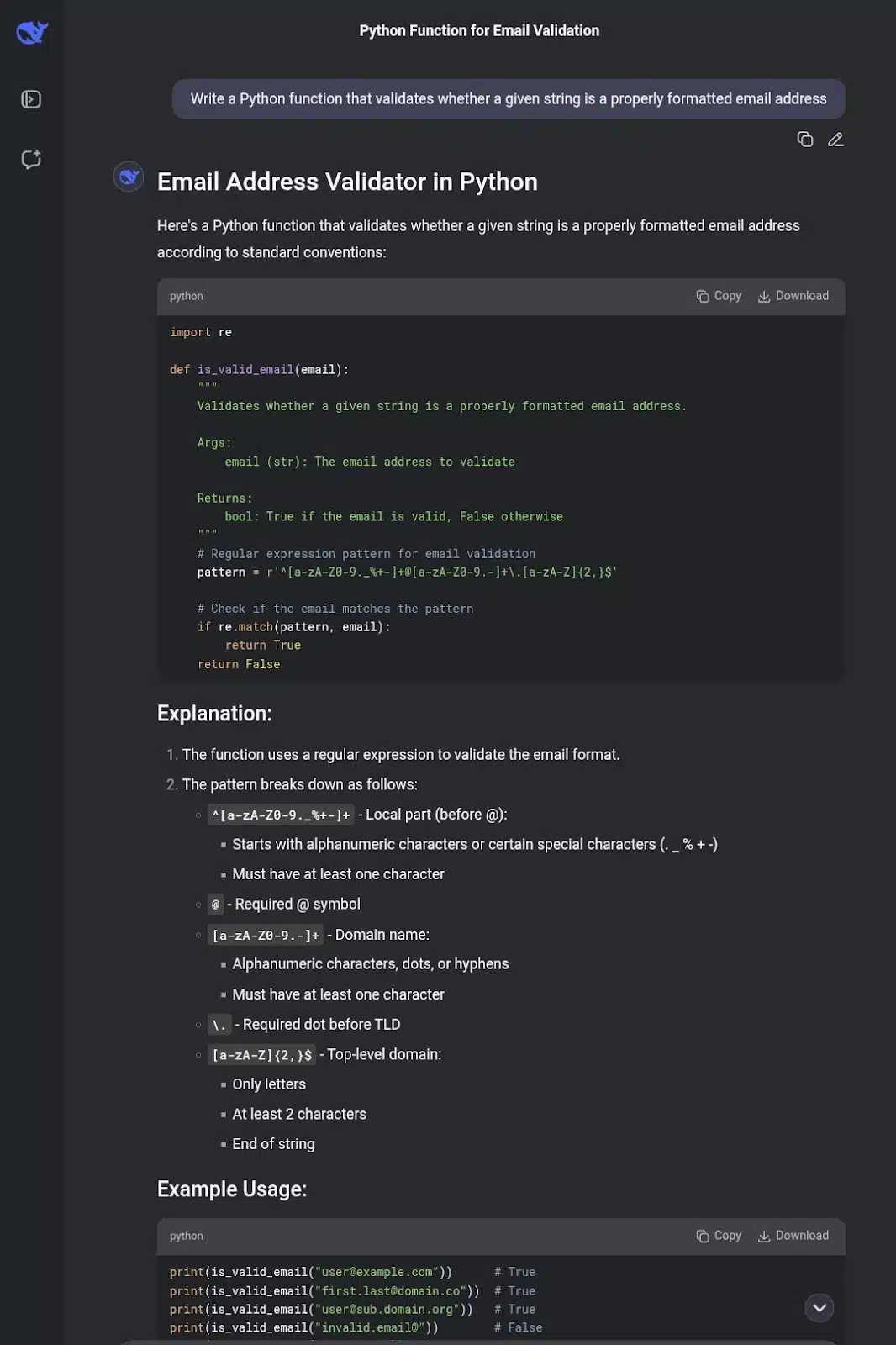

DeepSeek Response

Pros

- Regex: Simpler and easier to read. While not as rigorous as Copilot’s, it handles the vast majority of real-world email formats.

- Explanations: Extensive breakdown of the regex, clear docstring, and usage notes. It’s great for newer developers or if you want to understand what’s going on.

- More examples: Multiple test cases show different edge scenarios.

Cons

- Regex coverage: Slightly less strict than Copilot’s. It might let a few invalid formats through, like consecutive dots or missing local parts, depending on the case.

- Code style: No type hints, a little more verbose with the if/else block (though still readable).

Verdict

Both tools nailed the basics, but they cater to different needs:

- Copilot feels like a senior dev. It’s quiet, fast, and straight to the point. Its output is slightly more robust and production-ready.

- DeepSeek acts like a teaching assistant. It explains everything and gives you context, even if it sacrifices strict validation.

For quick use in a real project, Copilot wins here. But if you’re learning, reviewing, or teaching others, DeepSeek’s clarity and examples make it valuable.
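To make the trade-off concrete, here is a minimal sketch of a middle-ground validator. It is not either tool’s verbatim output: the pattern and the name is_valid_email are my own assumptions, chosen to show a readable regex, type hints, and an explicit check for the consecutive-dots case mentioned above.

```python
import re

# Deliberately simple pattern (an illustrative assumption, not the regex
# either tool produced): a local part, one "@", and a dotted domain.
_EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(\.[A-Za-z0-9-]+)+")


def is_valid_email(email: str) -> bool:
    """Return True if `email` looks like a well-formed address."""
    if not isinstance(email, str):
        return False
    # The character classes alone would accept "a..b@example.com".
    if ".." in email:
        return False
    # fullmatch rejects leading/trailing spaces and anything after the domain.
    return _EMAIL_RE.fullmatch(email) is not None
```

Because this sketch requires a dot in the domain, it rejects user@localhost, which a stricter RFC-style pattern would accept. That is exactly the kind of strictness decision the two responses made differently.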

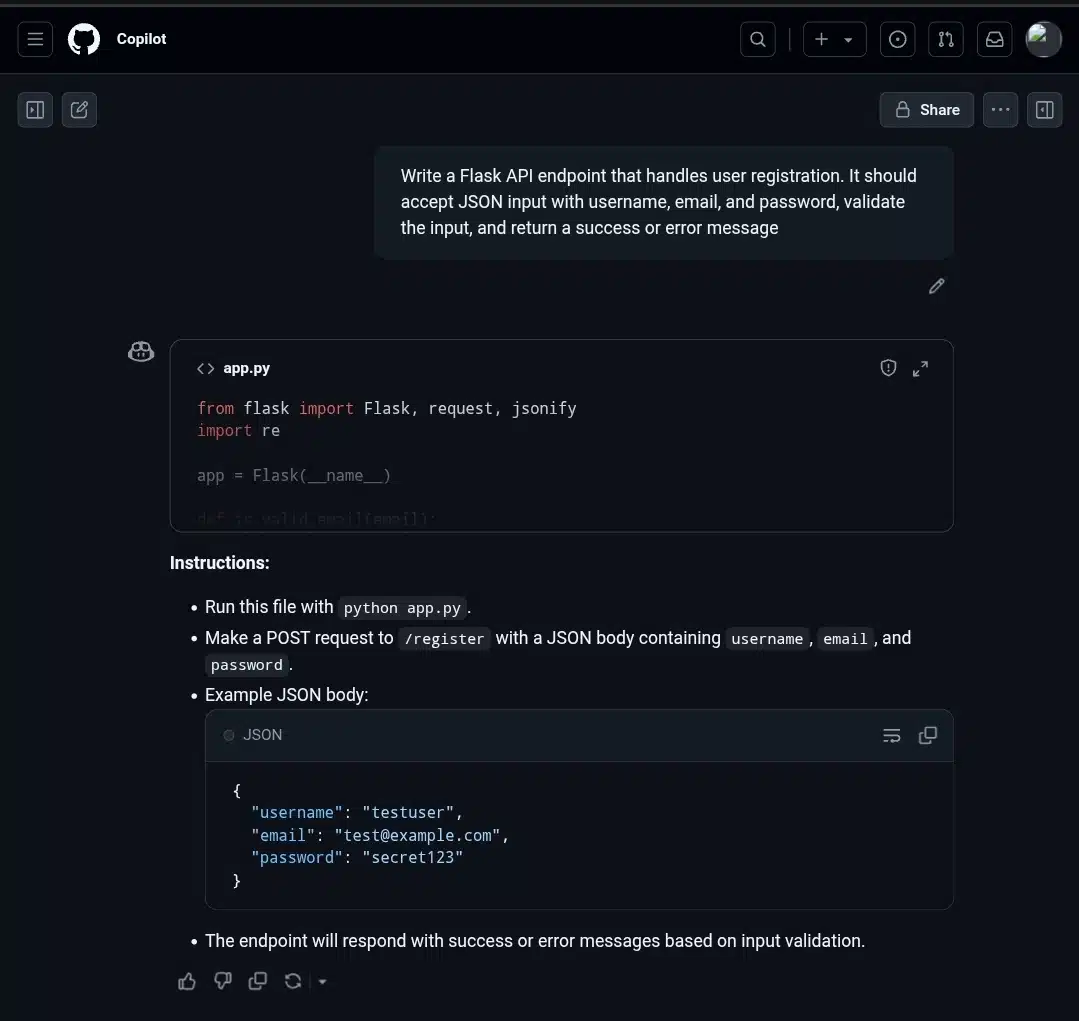

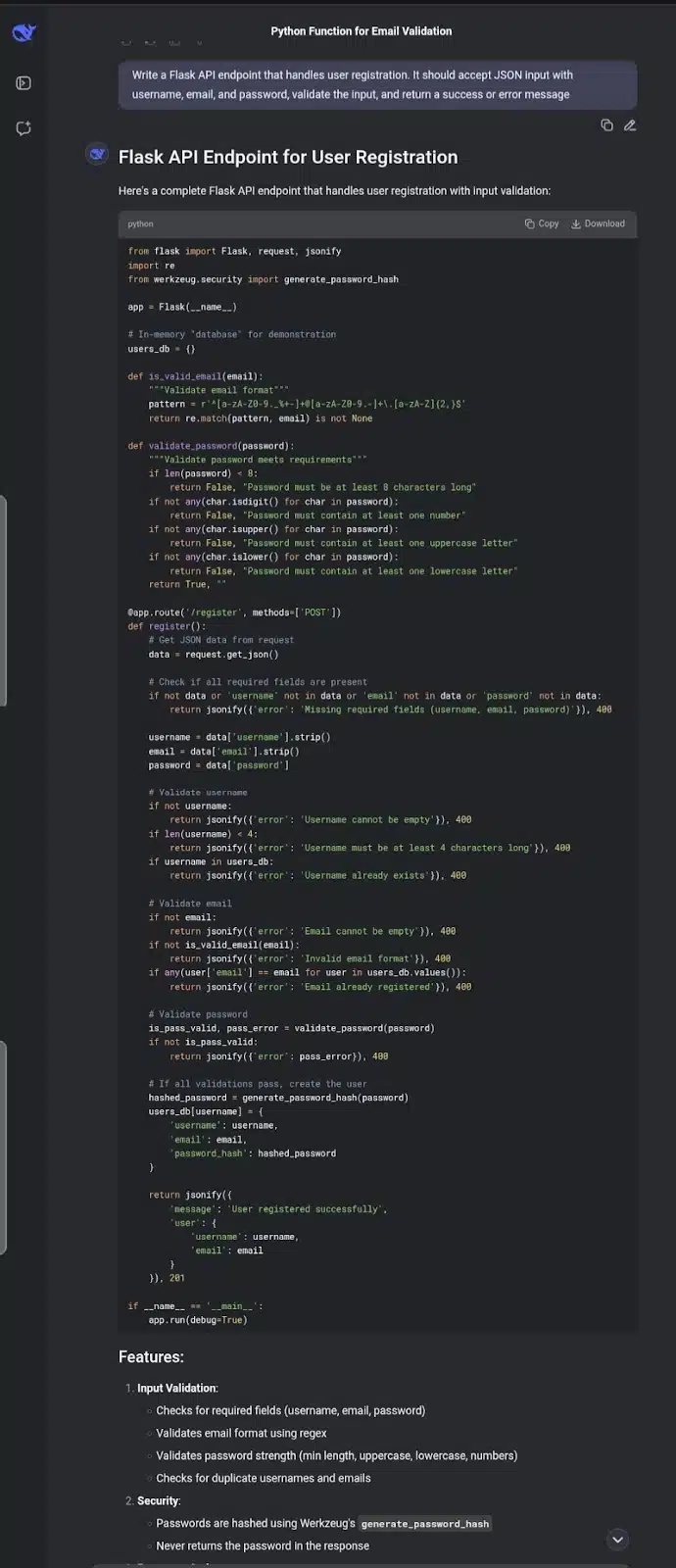

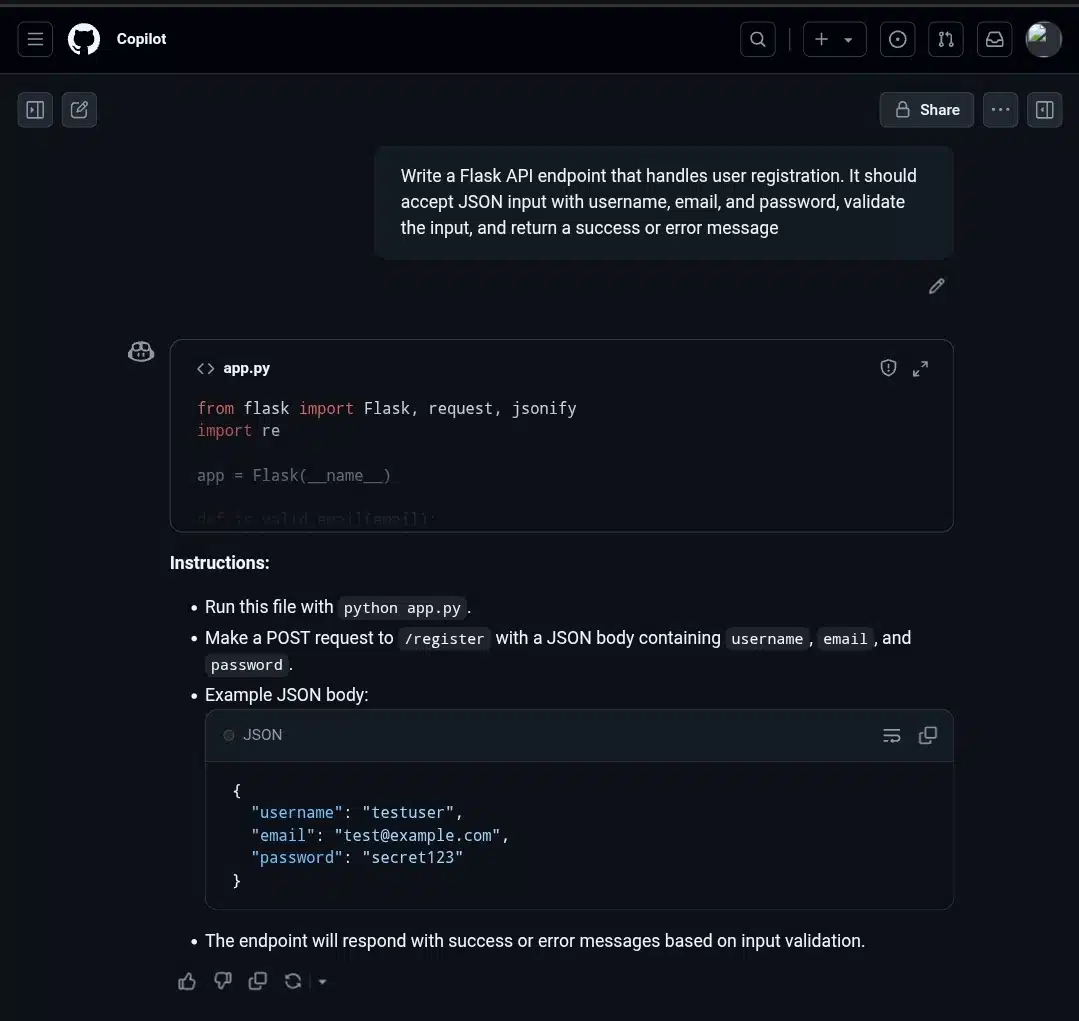

Prompt 2: Create a Flask API Endpoint for User Registration

Prompt: “Write a Flask API endpoint that handles user registration. It should accept JSON input with username, email, and password, validate the input, and return a success or error message.”

This prompt tests the AI’s ability to:

- Structure a Flask route correctly

- Parse JSON input and validate required fields

- Return proper JSON responses

- Handle edge cases (e.g., missing or invalid data)

What I’m watching for:

- Does it handle all fields properly and return informative errors?

- Are the routes and methods correctly used (@app.route, methods=['POST'])?

- Is the code modular or just jammed into a single block?

I asked both tools to generate a Flask endpoint that handles user registration, something you’d typically need when scaffolding an app backend. It had to accept username, email, and password, validate them, and respond with JSON.

Copilot’s output was neat and to the point. It built a minimal but functional /register route with inline validation and proper status codes. The regex for email was solid, and it returned structured error messages. What stood out was the code’s clarity. It didn’t overcomplicate things. It felt like something I could paste into a project and tweak later.

DeepSeek, on the other hand, went all in. It added password strength checks (uppercase, lowercase, number, minimum length), hashed the password with werkzeug.security, checked for duplicate users using an in-memory store, and explained everything along the way. It’s not production-ready out of the box (since it lacks a real database), but it’s educational and closer to a real-world implementation.

If you’re quickly prototyping, Copilot wins on simplicity and speed. But if you’re building something semi-serious or learning as you go, DeepSeek’s output is more thoughtful, even if a bit verbose.
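The validation step both tools wrote can be sketched without any framework. The version below is an assumption-laden illustration, not either tool’s output: the function name validate_registration, the simplified email pattern, and the 8-character minimum are all hypothetical choices. It combines Copilot-style structured error messages with the password strength rules DeepSeek added.

```python
import re

EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")  # simplified, illustrative
REQUIRED_FIELDS = ("username", "email", "password")
MIN_PASSWORD_LENGTH = 8  # assumed threshold, not taken from either response


def validate_registration(payload) -> dict[str, str]:
    """Return a field -> message mapping; an empty dict means valid."""
    if not isinstance(payload, dict):
        return {"body": "expected a JSON object"}
    errors: dict[str, str] = {}
    # Every required field must be a non-empty string.
    for field in REQUIRED_FIELDS:
        value = payload.get(field)
        if not isinstance(value, str) or not value.strip():
            errors[field] = f"{field} is required"
    if "email" not in errors and not EMAIL_RE.fullmatch(payload["email"]):
        errors["email"] = "email is not valid"
    if "password" not in errors:
        pw = payload["password"]
        strong = (
            len(pw) >= MIN_PASSWORD_LENGTH
            and any(c.isupper() for c in pw)
            and any(c.islower() for c in pw)
            and any(c.isdigit() for c in pw)
        )
        if not strong:
            errors["password"] = "password needs 8+ characters with upper, lower, and a digit"
    return errors
```

In a Flask route, you would call this on the parsed JSON body and return the errors with a 400 status when the dict is non-empty.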

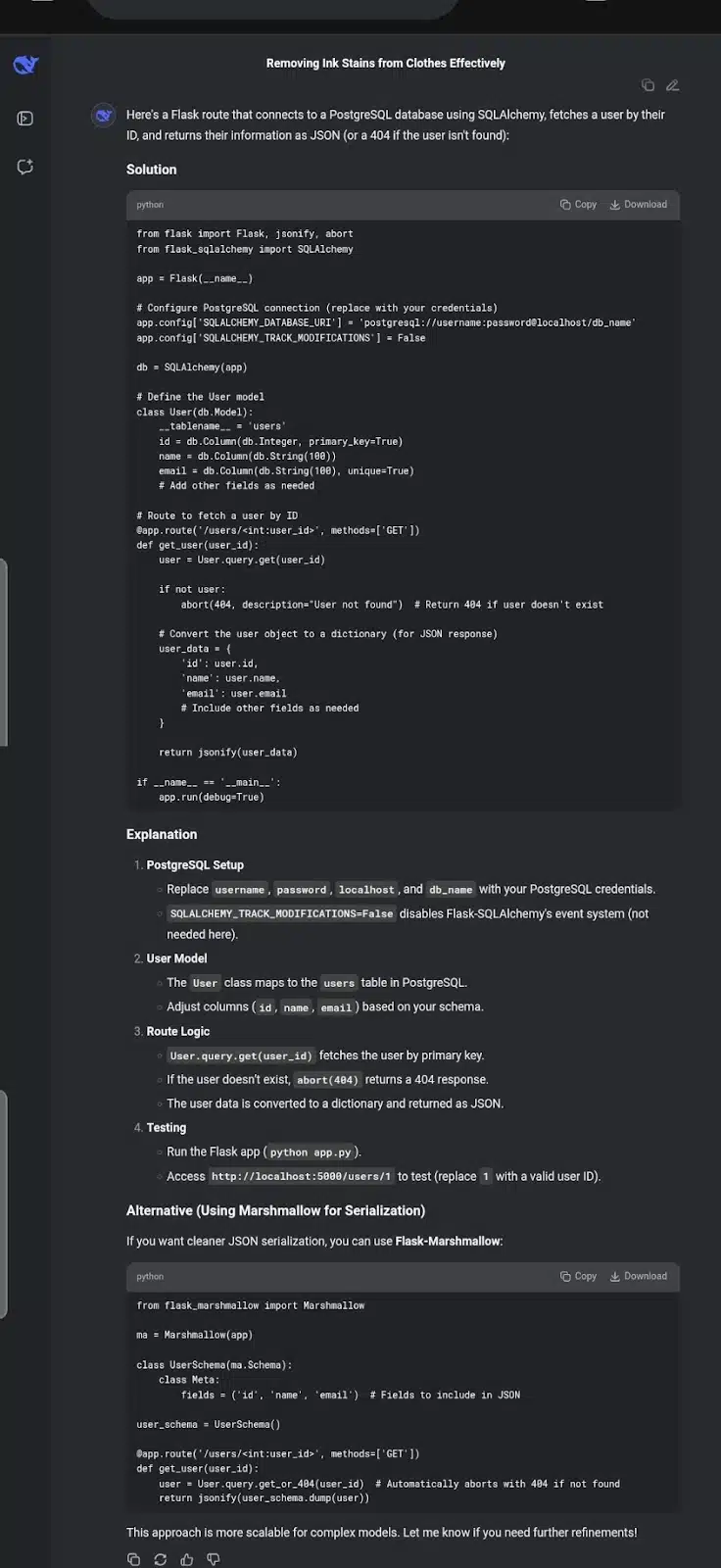

Prompt 3: Create a Flask route that fetches data from a PostgreSQL database

Prompt: “Write a Flask route /users/<user_id> that connects to a PostgreSQL database, fetches a user by their ID, and returns their info as JSON. Use SQLAlchemy for the ORM. Return a 404 if the user isn’t found.”

What this tests:

- Flask + SQLAlchemy integration

- Model definition and query logic

- Dynamic routing (<user_id>)

- Error handling (404 for missing users)

- Use of @app.route, clean JSON responses, proper status codes

This one checks if the AI knows how to structure a real-world route with a backend connection and not just hardcoded responses.

GitHub Copilot’s Response

I actually liked how this one went straight to the point. It defined a clean model, handled the route using .query.get(), and returned a custom to_dict()—all very familiar territory for anyone who’s touched Flask and SQLAlchemy. It also used abort(404) appropriately, which is what I’d expect when building a RESTful endpoint.

The lack of field uniqueness or password handling isn’t a big deal here, since we’re only fetching data. It’s worth noting, though, that it didn’t recommend serialization libraries like Marshmallow, which could’ve made things cleaner in larger apps. Still, for basic apps or quick prototyping, this version would get the job done without adding bloat.

DeepSeek’s Response

This one offered more breadth. It did basically the same thing as GitHub’s solution, but with more commentary and an optional alternative using Marshmallow. I appreciated that it showed the default .query.get() version and the improved get_or_404() plus schema-based serialization. That’s the kind of nudge a junior dev might find helpful.

From a structure and correctness standpoint, it’s solid. The Marshmallow addition is optional, but it’s the kind of touch that shows the model is aware of best practices.

Verdict

- GitHub: Lean, readable, straight-to-the-point. Feels like something I’d write quickly during a sprint.

- DeepSeek: Slightly more comprehensive and opinionated. Great for those who appreciate guidance and extra polish.

Neither returned anything broken or incorrect, so this one comes down to dev style. Personally? I’d use GitHub’s version in a prototype and DeepSeek’s in a more structured project.

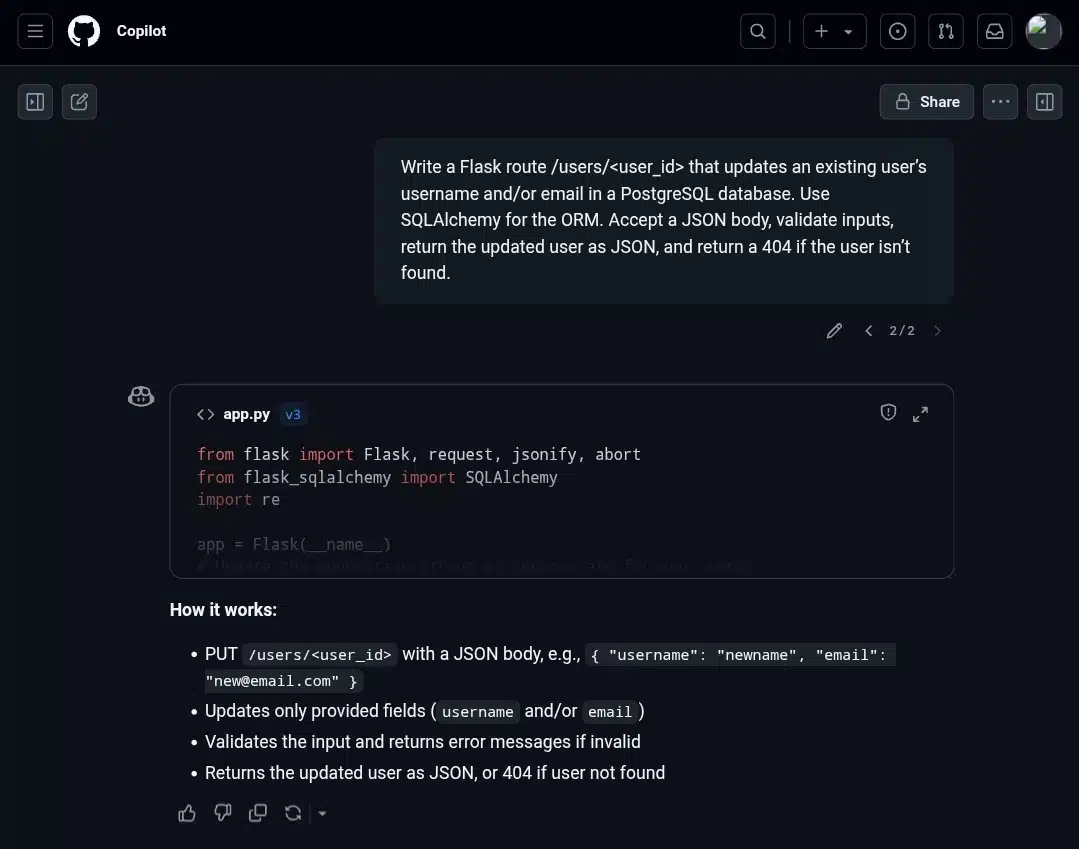

Prompt 4: Update a User in a PostgreSQL Database Using Flask

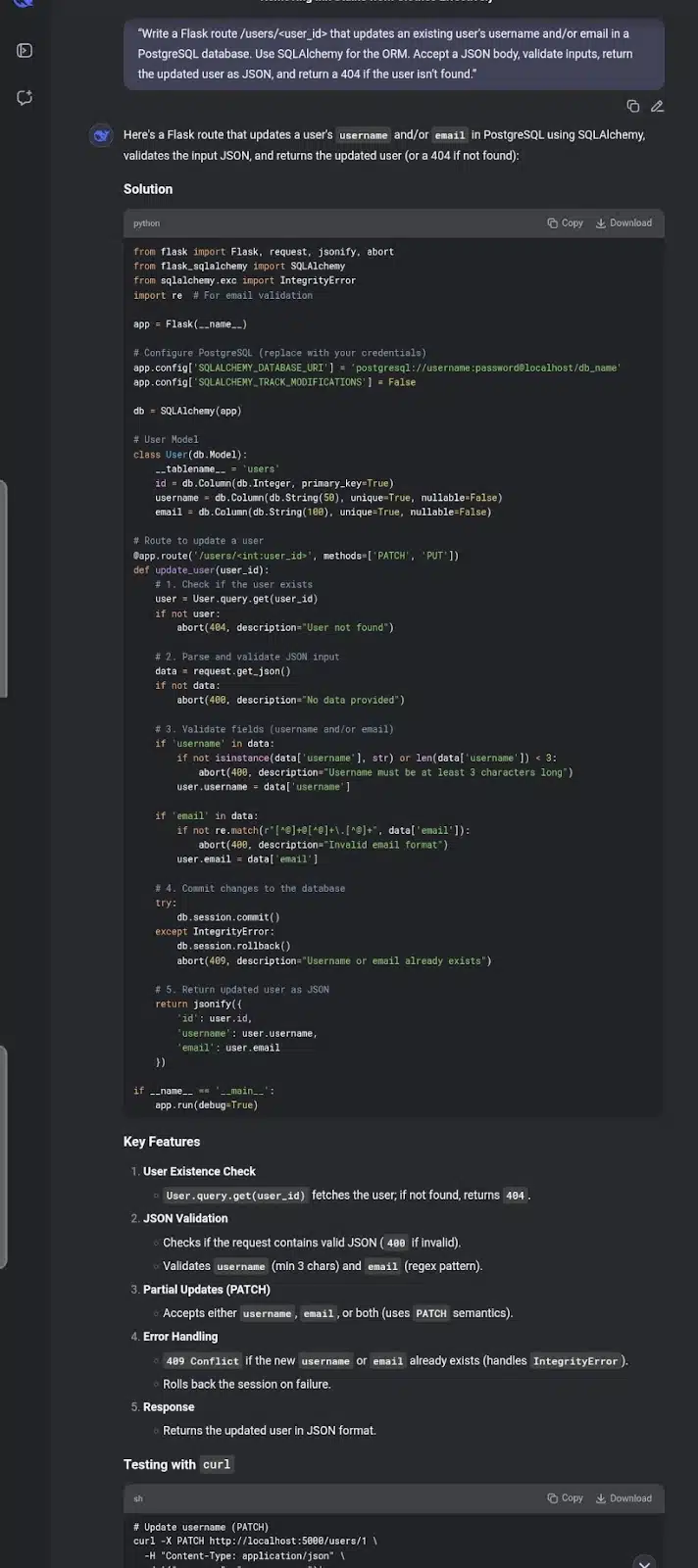

Prompt: “Write a Flask route /users/<user_id> that updates an existing user’s username and/or email in a PostgreSQL database. Use SQLAlchemy for the ORM. Accept a JSON body, validate inputs, return the updated user as JSON, and return a 404 if the user isn’t found.”

What this tests:

- Flask dynamic routing with URL parameters

- SQLAlchemy: querying and updating a record

- JSON input parsing and field validation

- Use of PUT or PATCH method for updates

- Proper error handling (404 for missing user)

- Returning JSON responses with updated data

It tests whether the AI understands how to safely update only existing records, handle partial input gracefully, and commit changes correctly using SQLAlchemy.

GitHub Copilot’s Response

This version gets straight to business. It checks if the user exists, validates username and email inputs, updates the fields conditionally, and commits the changes. Errors are returned with clear messages using a dictionary, and the response structure is neatly handled with a to_dict() method. The code is easy to follow and modular, making it a great choice for quick prototyping. What’s missing is conflict handling—no try-except for duplicate usernames or emails—and it only uses PUT, which doesn’t reflect partial updates very well. Still, for a lean, no-frills implementation, this would get the job done.

DeepSeek’s Response

This one offered more structure and coverage. It included both PUT and PATCH, used abort() for standardized error codes, and handled database integrity errors explicitly. Input validation was robust and even extended to regex-based email checking. Most notably, it suggested an alternative using Marshmallow, which adds polish and scalability. While a bit more verbose, it gives newer devs a roadmap for taking the route from basic to production-ready.

Verdict

GitHub’s version is lightweight and clean. It’s ideal for sprints or MVPs. DeepSeek’s feels more like a teaching tool, packed with best practices. Both are valid, but DeepSeek edges ahead if you’re optimizing for maintainability or mentoring.
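The PUT versus PATCH distinction is easier to see in code. This is a framework-free sketch under my own assumptions (the apply_update name, the dict-based user, and the error wording are hypothetical): a full update requires every updatable field, while a partial update only touches the fields that were sent.

```python
UPDATABLE_FIELDS = ("username", "email")


def apply_update(user: dict, payload: dict, partial: bool) -> tuple[dict, dict]:
    """Return (resulting_user, errors) without mutating `user`.

    partial=False models PUT: every updatable field must be present.
    partial=True models PATCH: only the supplied fields change.
    """
    errors = {}
    updated = dict(user)  # work on a copy so a failed update changes nothing
    for field in UPDATABLE_FIELDS:
        if field not in payload:
            if not partial:
                errors[field] = f"{field} is required for a full update"
            continue
        value = payload[field]
        if not isinstance(value, str) or not value.strip():
            errors[field] = f"{field} must be a non-empty string"
        else:
            updated[field] = value.strip()
    # On any error, hand back the original user untouched.
    return (user if errors else updated), errors
```

A route that only registers PUT but applies fields conditionally is effectively doing the partial=True branch under the wrong verb, which is the mismatch noted above.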

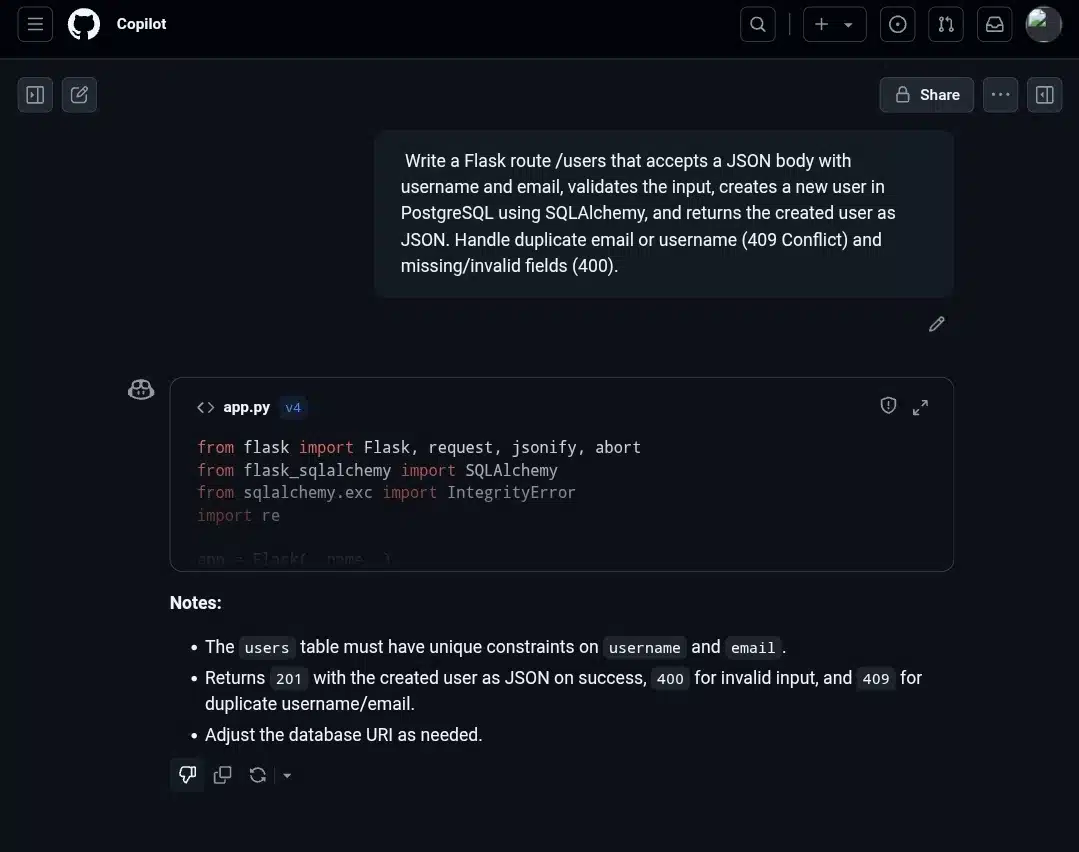

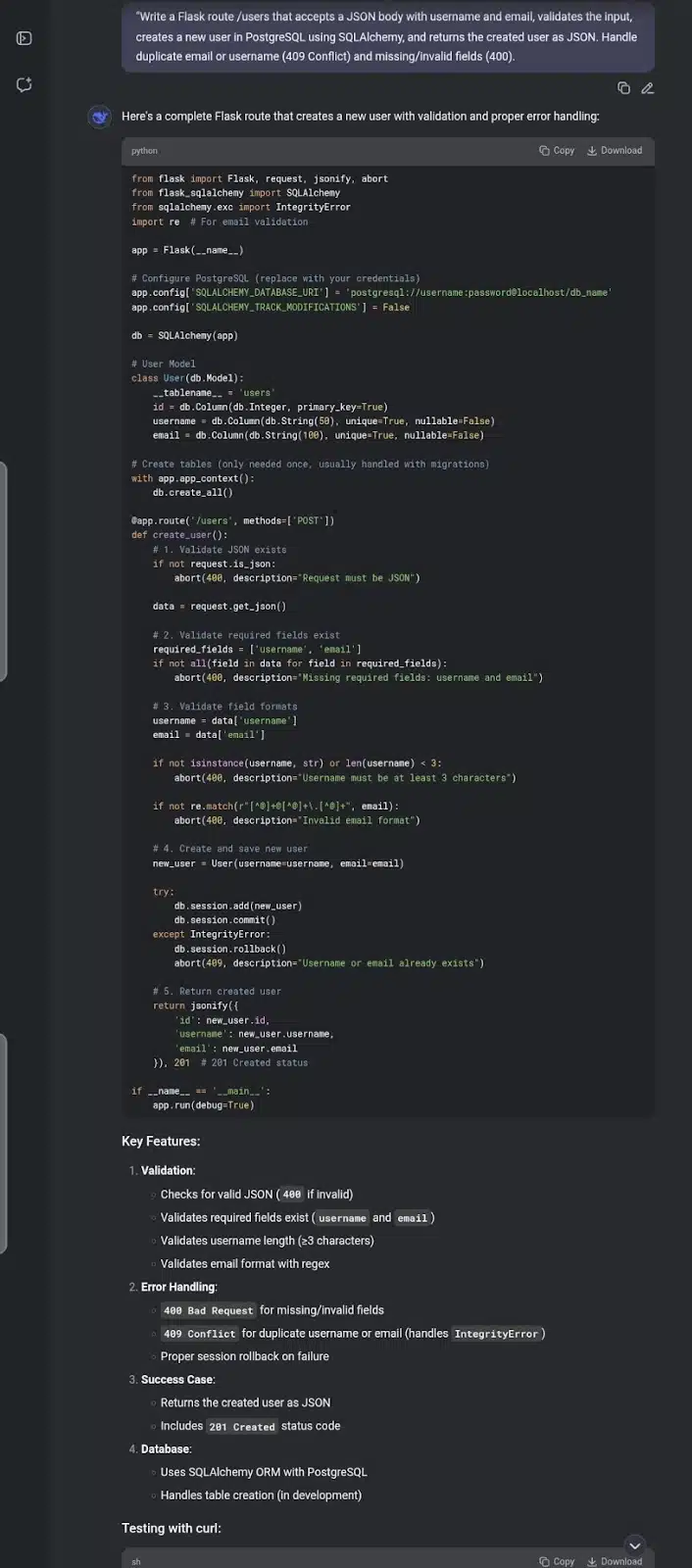

Prompt 5: Create a New User (POST /users)

Prompt: “Write a Flask route /users that accepts a JSON body with username and email, validates the input, creates a new user in PostgreSQL using SQLAlchemy, and returns the created user as JSON. Handle duplicate email or username (409 Conflict) and missing/invalid fields (400).”

What this tests

Handling POST requests, input validation, unique constraints, error codes (400 vs 409), and clean response formatting. Also checks if the AI understands database insertion via ORM.

GitHub Copilot’s Response

This one sticks to a pragmatic, developer-friendly approach. The structure is clean: it defines a User model, validates the payload, handles errors precisely, and uses a .to_dict() method for response serialization—something that’s both readable and reusable. Input validation is explicit and tied directly to user-friendly messages via the errors dict, which makes debugging easier. One detail I appreciated is the granular check inside the IntegrityError block—it tries to determine whether the conflict was caused by a duplicate username or email, which is a thoughtful touch many overlook.

The regex used for email validation is slightly stricter than average, which can be a plus or minus depending on use case. The final JSON response includes a success flag only on failure, which feels practical rather than verbose. Overall, this version would fit nicely in a small- to mid-sized Flask app, especially if you want something lightweight without external dependencies.

DeepSeek’s Response

DeepSeek’s version covers the same logic but leans more toward a “learning-friendly” format. It walks through each step—checking if the request is JSON, verifying required fields, then performing type and format validation. Instead of returning granular error messages like GitHub’s solution, it uses abort() with descriptive strings. While less flexible for frontend consumers parsing errors, it’s readable and perfectly acceptable in smaller apps or educational contexts.

It also introduces db.create_all() inside the route file, which is convenient for demos but not ideal in production. The optional Marshmallow version is a welcome nod to scalability, offering a cleaner separation of concerns.

Verdict

GitHub’s is concise and production-ready with just enough nuance. DeepSeek’s is more verbose and beginner-friendly. If I were prototyping or teaching, I’d use DeepSeek’s. For shipping real features, GitHub’s would be my default.
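Here is a rough sketch of the 400 versus 409 split, including the granular duplicate check. To keep it self-contained I have swapped in the standard library’s sqlite3 for SQLAlchemy and PostgreSQL, so treat it as an illustration of the control flow only. The schema, the create_user name, and the 201 status on success are my assumptions, not something either tool produced.

```python
import sqlite3

# Hypothetical schema; the UNIQUE constraints are what trigger the 409 path.
SCHEMA = """
CREATE TABLE users (
    id INTEGER PRIMARY KEY,
    username TEXT UNIQUE NOT NULL,
    email TEXT UNIQUE NOT NULL
)
"""


def create_user(conn: sqlite3.Connection, payload: dict) -> tuple[int, dict]:
    """Return (status_code, body) for a hypothetical POST /users handler."""
    username = payload.get("username")
    email = payload.get("email")
    errors = {}
    if not isinstance(username, str) or not username.strip():
        errors["username"] = "username is required"
    if not isinstance(email, str) or "@" not in email:
        errors["email"] = "a valid email is required"
    if errors:
        return 400, {"errors": errors}  # malformed input

    try:
        cur = conn.execute(
            "INSERT INTO users (username, email) VALUES (?, ?)", (username, email)
        )
        conn.commit()
    except sqlite3.IntegrityError as exc:
        conn.rollback()
        # SQLite names the offending column in the message, e.g.
        # "UNIQUE constraint failed: users.email".
        field = "email" if "users.email" in str(exc) else "username"
        return 409, {"errors": {field: f"{field} already exists"}}  # conflict
    return 201, {"id": cur.lastrowid, "username": username, "email": email}
```

Parsing the driver’s error message is brittle, and the message format differs between databases, so with PostgreSQL you would need a different way to identify the violated constraint.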

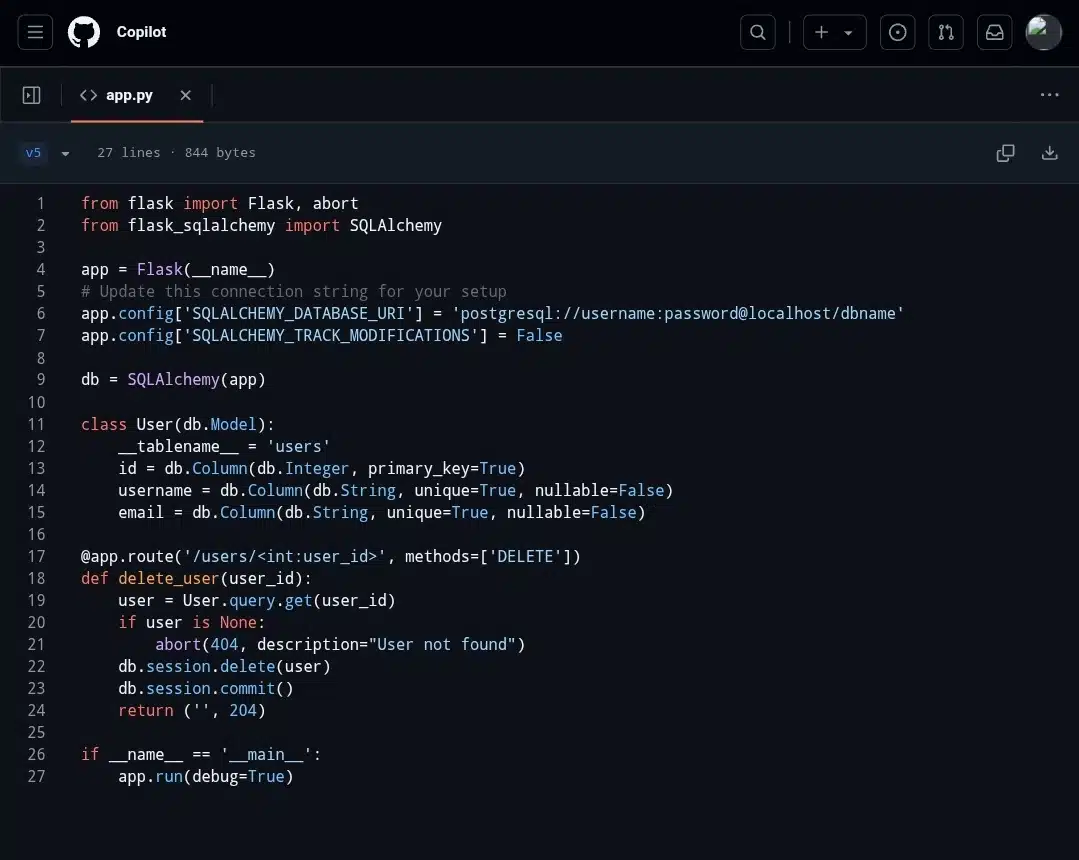

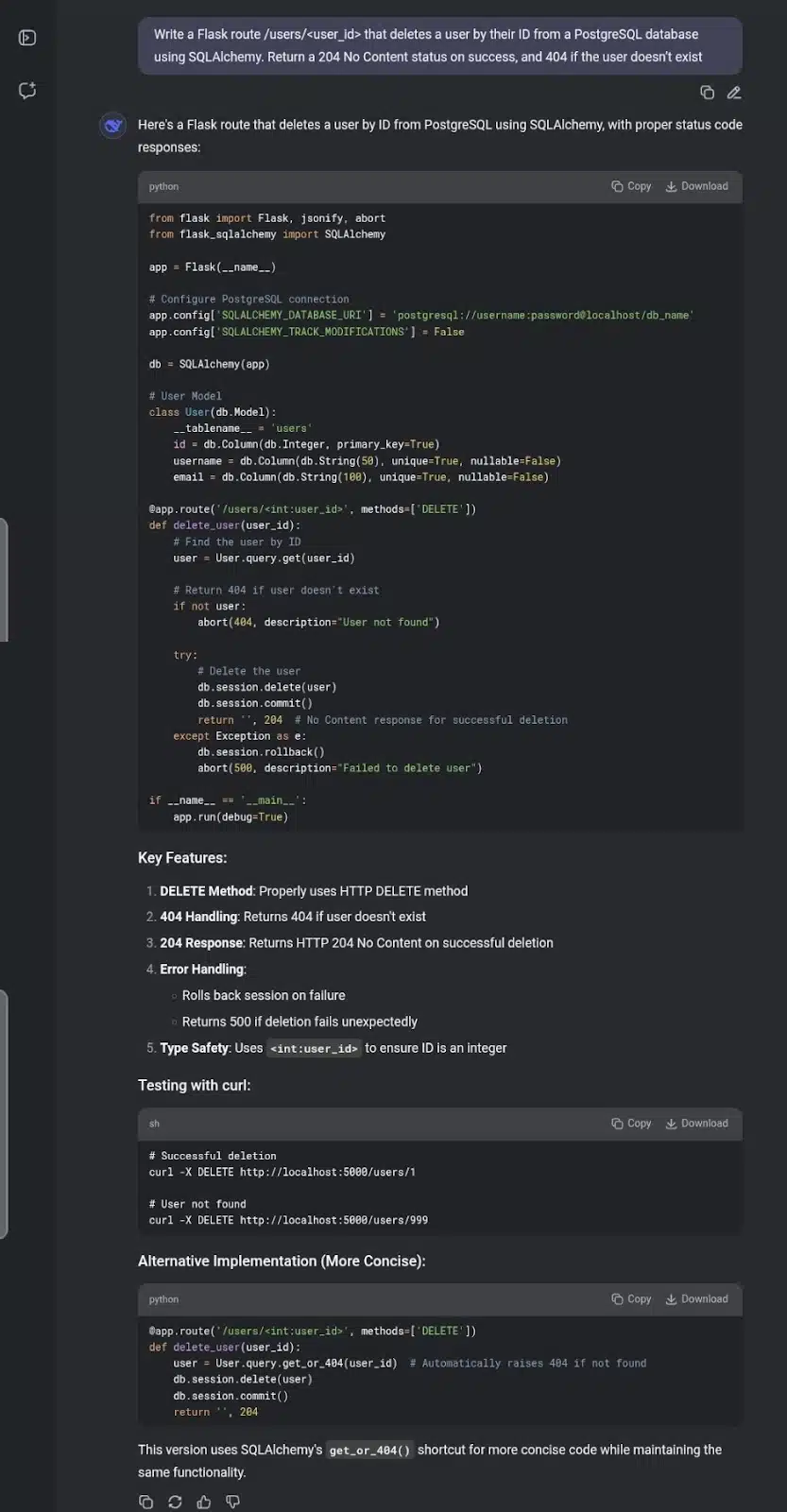

Prompt 6: Create a Flask route that deletes a user from a PostgreSQL database

Prompt: “Write a Flask route /users/<user_id> that deletes a user by their ID from a PostgreSQL database using SQLAlchemy. Return a 204 No Content status on success, and 404 if the user doesn’t exist.”

What this tests:

- Flask + SQLAlchemy integration

- Model querying and deletion logic

- Proper handling of HTTP status codes, especially 204 for successful delete with no content

- Error handling (404 if user not found)

- Use of @app.route with DELETE method and clean response

This prompt verifies whether the AI can implement a common RESTful DELETE operation correctly, with proper response codes and correct database handling.

Both solutions handle the core requirement well: deleting a user by ID with appropriate status codes.

GitHub Copilot Response

GitHub’s version is very lean and straightforward, doing exactly what’s needed without any extras. It uses User.query.get() to fetch the user and aborts with a 404 if not found. The deletion and commit happen cleanly, and a 204 No Content response is returned on success. This approach is ideal for quick prototypes or smaller apps where simplicity matters.

DeepSeek Response

DeepSeek’s response covers the same logic but adds a bit more robustness and polish. It includes explicit error handling around the database commit with a try-except block, rolling back the session and returning a 500 error if something goes wrong during deletion. This adds resilience in production scenarios where database issues can occur. The optional alternative using get_or_404() is a nice touch, showcasing a cleaner way to handle the 404 case with less boilerplate.

Verdict

GitHub’s version is perfect for speed and clarity when you want to implement quickly. DeepSeek’s version is more thorough and production-ready, with error handling that matters in real-world apps. Both are solid and correct, so your choice depends on whether you want simplicity or a bit more safety and explanation.
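The commit-or-rollback pattern looks roughly like this. As a self-contained stand-in I am using the standard library’s sqlite3 rather than SQLAlchemy and PostgreSQL, and the delete_user function and users table are hypothetical, so read it as a sketch of the status-code logic rather than either tool’s code.

```python
import sqlite3


def delete_user(conn: sqlite3.Connection, user_id: int) -> int:
    """Return the HTTP status a hypothetical DELETE /users/<user_id> would send."""
    try:
        cur = conn.execute("DELETE FROM users WHERE id = ?", (user_id,))
        if cur.rowcount == 0:
            return 404  # nothing matched: the user does not exist
        conn.commit()
        return 204  # deleted; the response carries no body
    except sqlite3.Error:
        conn.rollback()  # leave the database unchanged on failure
        return 500
```

The lean version is the same function without the try/except: fine for a prototype, but a database failure would then surface as an unhandled exception instead of a controlled 500.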

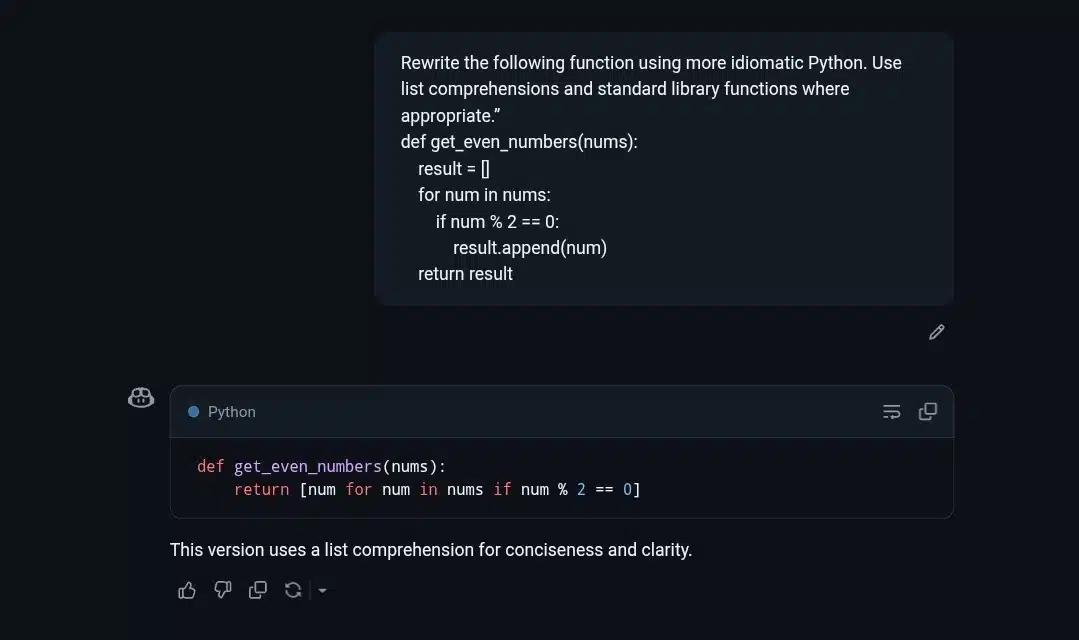

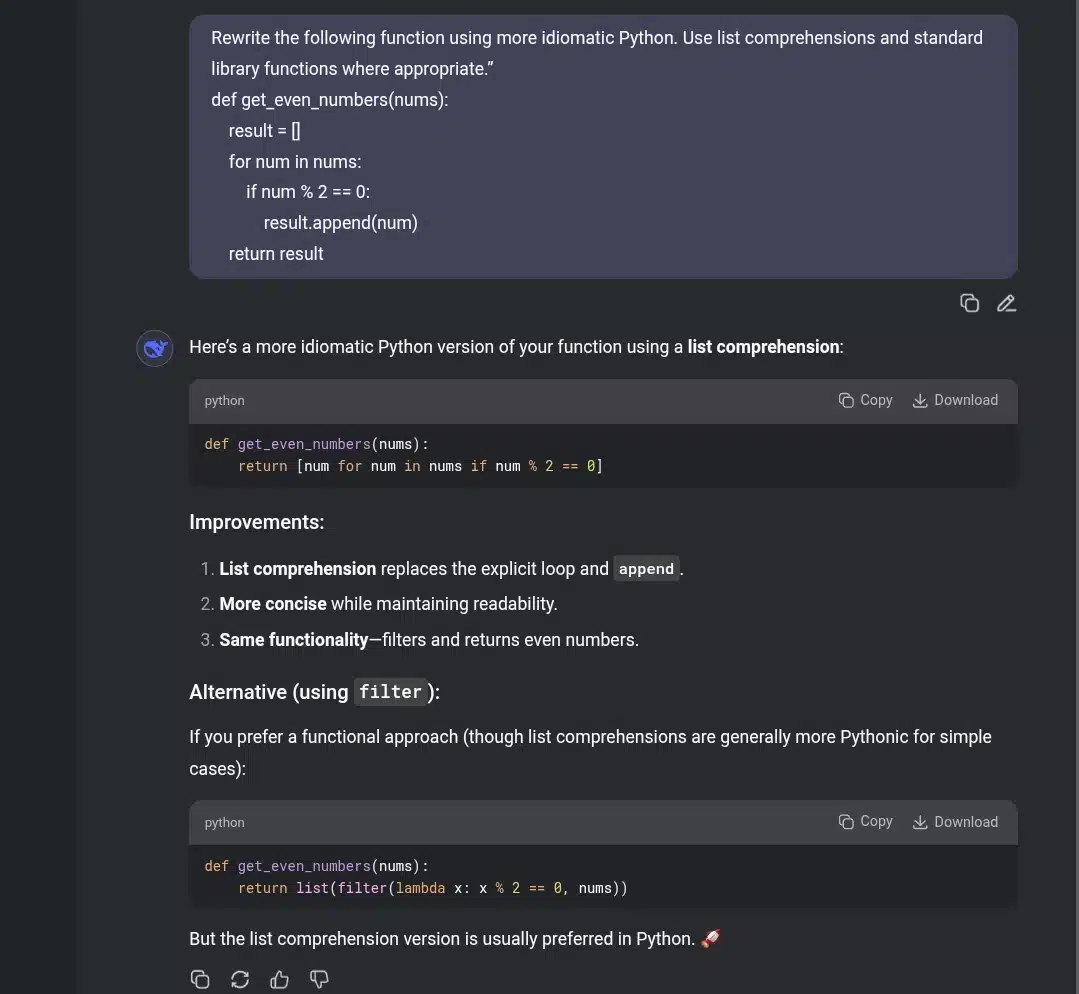

Prompt 7: Refactor a Function Using Idiomatic Python

Prompt: “Rewrite the following function using more idiomatic Python. Use list comprehensions and standard library functions where appropriate.”

    def get_even_numbers(nums):
        result = []
        for num in nums:
            if num % 2 == 0:
                result.append(num)
        return result

This prompt tests the AI’s ability to:

- Apply Pythonic principles like list comprehensions and built-ins

- Improve code readability and efficiency without changing behavior

What I’m watching for:

- Does it convert the loop to a list comprehension cleanly?

- Have variable names and structure been improved?

- Is the new version concise and readable?

I asked both tools to make the function more Pythonic, the kind of refactor you’d expect during a code review.

GitHub Copilot Response

Copilot’s response was clean and minimal. It replaced the loop with a list comprehension in a single line.

It didn’t explain why the change was better, but the code was readable, idiomatic, and production-ready. If you already know what list comprehensions do, you’re good to go.

DeepSeek Response

DeepSeek took a more instructive route. It offered the same list comprehension version but added thoughtful commentary. It explained why list comprehensions are more Pythonic and included an alternative using filter().

DeepSeek also gave a quick breakdown of the improvements—conciseness, readability, and functional alternatives—framed like a mini code review. That makes it better for someone learning or trying to write cleaner Python.

Bottom line:

- If you want a fast refactor and already know what you’re doing, Copilot delivers a paste-and-go solution.

- If you’re learning or trying to understand the “why,” DeepSeek gives more context, guidance, and even extra patterns to explore.
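For reference, the two refactors described above would look something like this. These are reconstructions from the descriptions, not the tools’ verbatim output, and the second function name is my own.

```python
def get_even_numbers(nums):
    """List-comprehension version: same behavior as the original loop."""
    return [num for num in nums if num % 2 == 0]


def get_even_numbers_filter(nums):
    """Functional alternative using the built-in filter()."""
    return list(filter(lambda num: num % 2 == 0, nums))
```

Both return a new list in the original order. The comprehension is usually the more readable choice in Python, while filter() is handy when you already have a named predicate to pass in.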

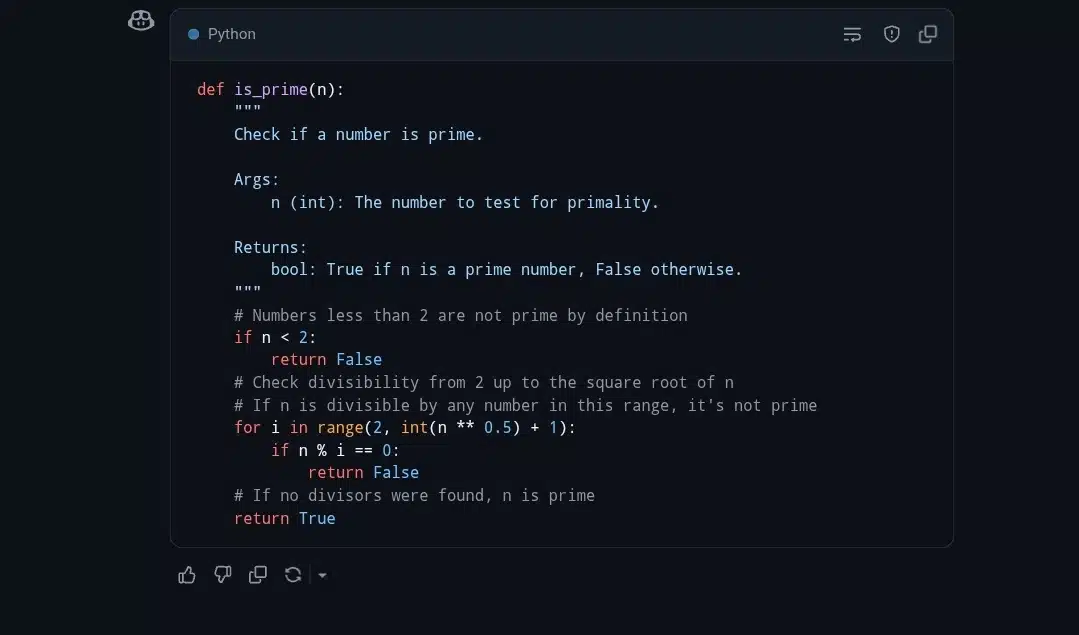

Prompt 8: Document a Python Function for Readability

Prompt: “Add a docstring and inline comments to the following Python function to make it easier to understand.”

This prompt tests the AI’s ability to:

- Interpret function logic and purpose

- Write helpful docstrings (e.g., Google style)

- Add meaningful inline comments for clarity

What I’m watching for:

- Does the AI explain the use of square root optimization?

- Is the docstring clear about inputs and return types?

- Are the comments useful or just stating the obvious?

I wanted to see if the tools could explain why the logic works, not just what it does.

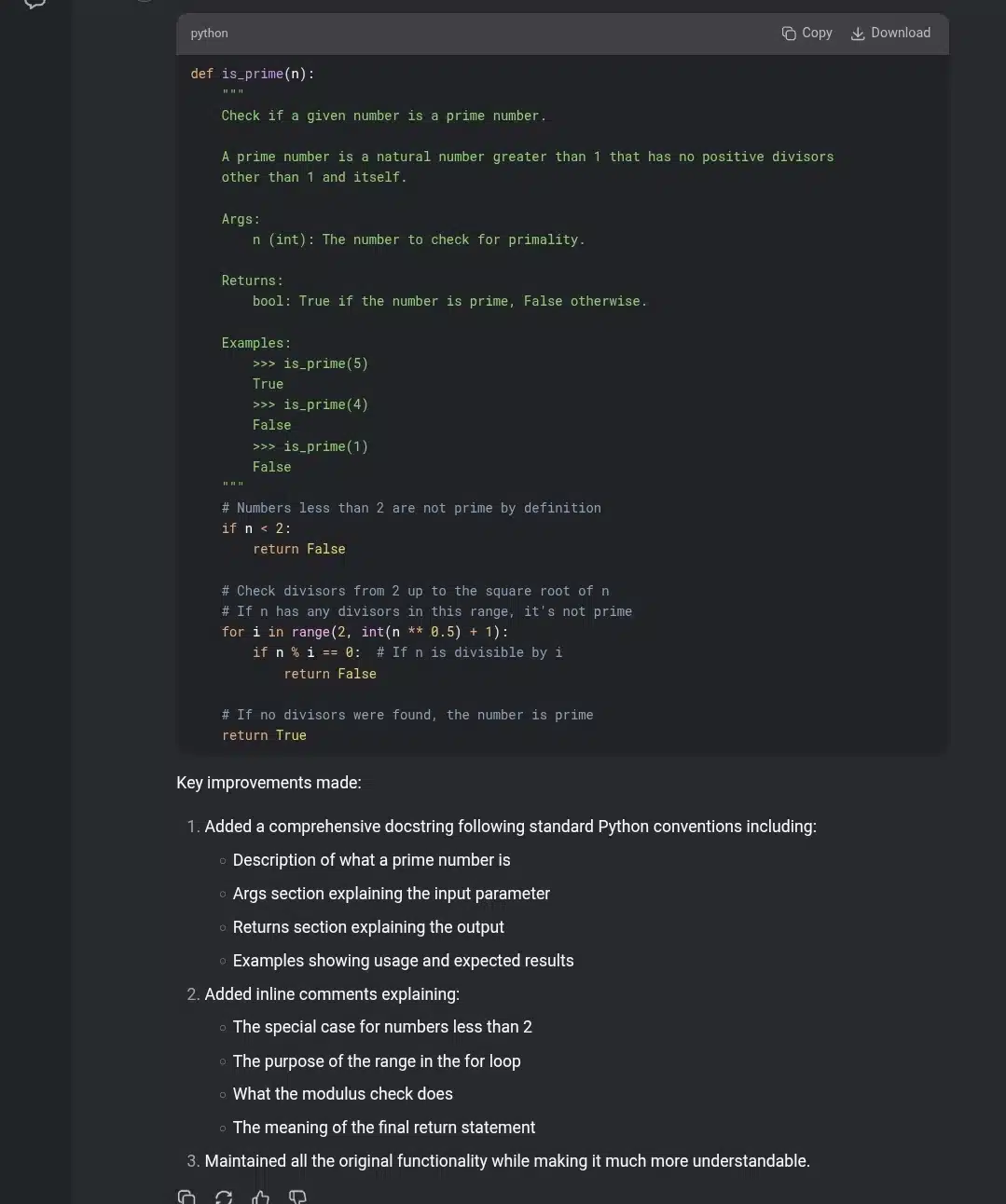

GitHub Copilot Response

Copilot kept things straightforward. It returned a clean is_prime function with a short but structured docstring, including Args and Returns sections. It also added minimal but effective inline comments explaining edge cases (like n < 2) and the square root optimization. The result was a readable, practical function that’s suitable for general use or inclusion in a utilities module.

DeepSeek Response

DeepSeek took the prompt as a teaching moment. The function was nearly identical to Copilot’s, but the docstring was far more comprehensive, complete with an explanation of what a prime number is, example usage, and detailed return behavior. On top of that, the inline comments weren’t just annotations; they were explanations. Each line of logic was spelled out in plain English, walking the reader through both the “what” and the “why.”

So who wins?

- If you’re writing production code and just need clean documentation, Copilot hits the mark: concise and practical.

- If you’re creating educational material, documenting a public library, or handing this off to a beginner, DeepSeek’s verbose style adds more long-term value.

For quick dev work, Copilot is enough. For teaching or team onboarding, DeepSeek goes the extra mile.
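As a point of reference, a documented is_prime in the concise style might look like the sketch below. It is my own reconstruction from the description above, assuming a simple trial-division implementation; the exact function both tools annotated is only shown in the screenshot.

```python
import math


def is_prime(n: int) -> bool:
    """Return True if n is a prime number.

    Args:
        n: The integer to test.

    Returns:
        True if n is prime, False otherwise.
    """
    # Numbers below 2 are not prime by definition.
    if n < 2:
        return False
    # Any composite n has a divisor no larger than sqrt(n), so checking
    # candidates up to isqrt(n) is enough.
    for divisor in range(2, math.isqrt(n) + 1):
        if n % divisor == 0:
            return False
    return True
```

The comment on the loop is the one that earns its place: it explains why stopping at the square root is safe, which the code alone does not tell you.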

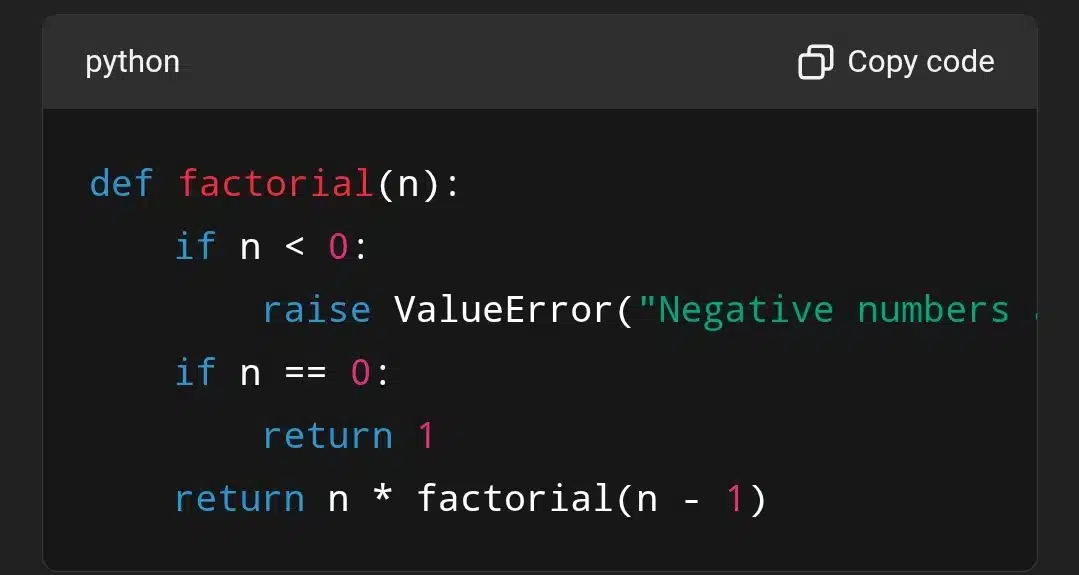

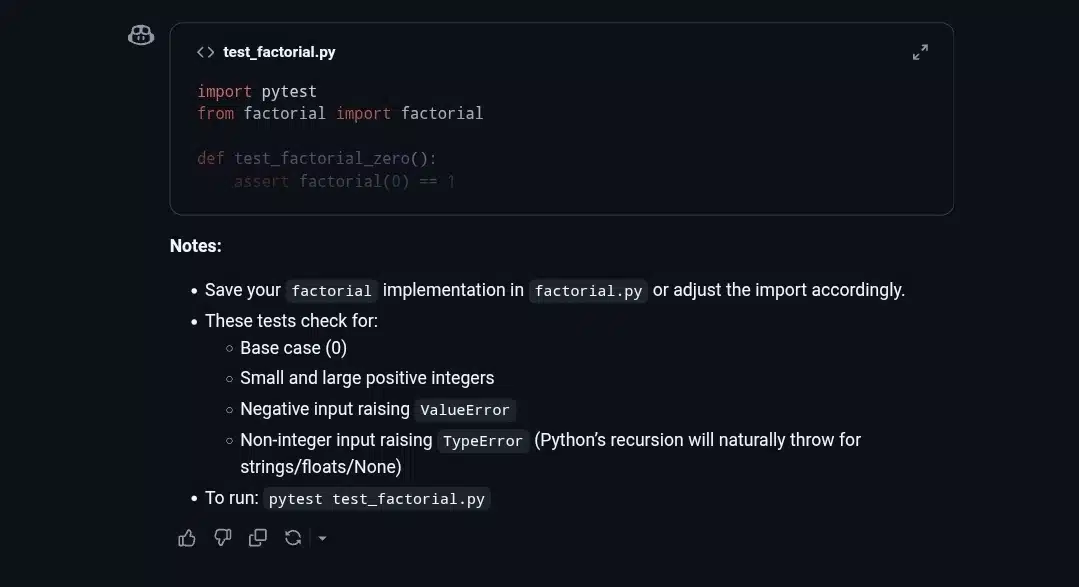

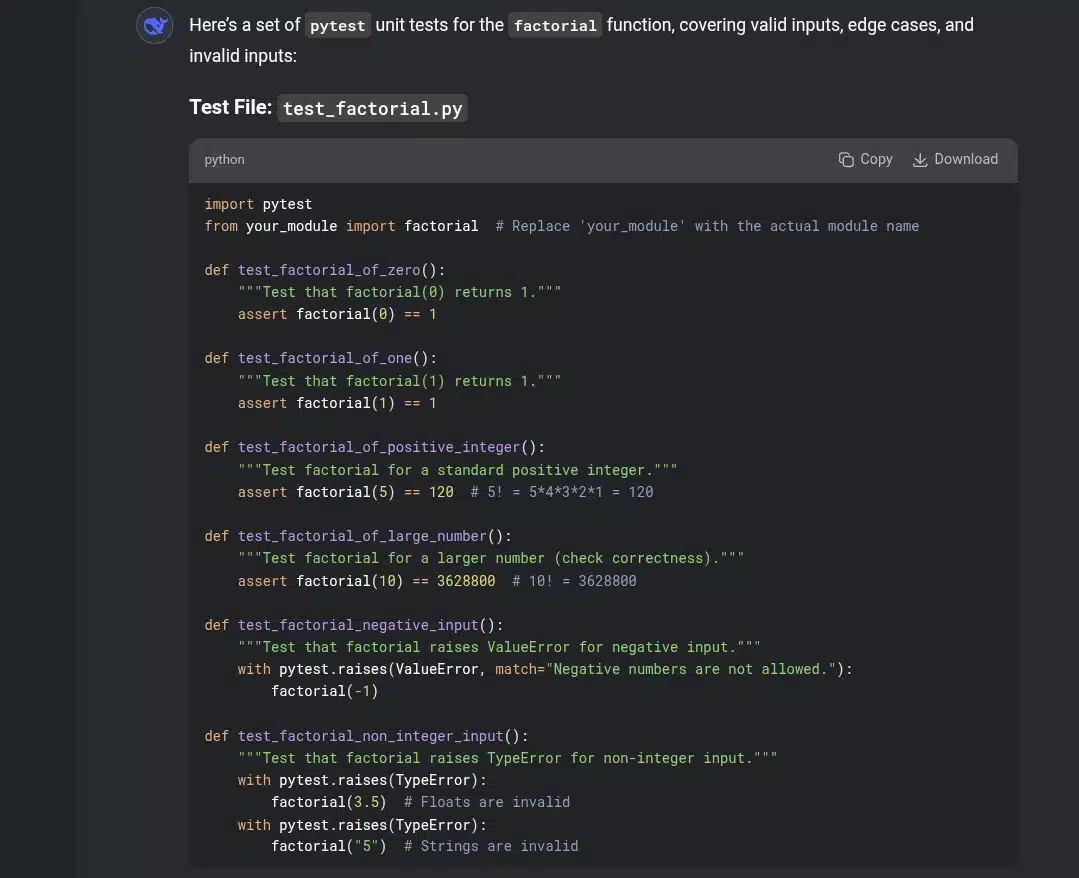

Prompt 9: Generate Unit Tests Using pytest

Prompt: “Write pytest unit tests for the following Python function that calculates the factorial of a number. Include edge cases and invalid input.”

This prompt tests the AI’s ability to:

- Write complete unit tests with pytest

- Cover normal cases, edge cases, and exceptions

- Use best practices for naming and structure

What I’m watching for:

- Does it test for 0, 1, and a few valid values?

- Does it handle invalid inputs like -1 or ‘5’ properly?

- Does it use pytest.raises(ValueError) where needed?

This is how I check the AI’s competency in writing robust tests for recursive functions.

GitHub Copilot Response

Copilot went the direct route. It gave you a solid test file with all the essential scenarios: edge cases like 0 and 1, positive integers, negatives, and non-integer inputs. The code was compact and grouped logically. It even anticipated that the factorial function might not yet handle type errors, giving a helpful nudge that you may need to add those checks yourself. It’s exactly what you’d expect if you’re validating functionality during a dev sprint: clean, fast, and production-focused.

DeepSeek Response

DeepSeek, in contrast, built a teaching tool. Not only did it write the tests, it also structured them by category, added docstrings to every test, explained each case in markdown, and included instructions on how to run everything with pytest. It also walked through potential improvements, like testing recursion limits and performance for large inputs. DeepSeek’s output felt like it came from a mentor who wants you to learn the “why,” not just the “how.”

Which should you use?

- If you’re writing tests on a real project and want clarity with minimal noise, Copilot has your back.

- If you’re onboarding juniors, writing documentation, or just want more structure and reasoning behind your tests, DeepSeek delivers.

In short: Copilot is for getting it done, DeepSeek is for doing it right, slowly but thoroughly.
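Copilot’s nudge about missing type checks is worth acting on, because the invalid-input tests only pass if the function actually rejects bad input. Here is a minimal sketch of a recursive factorial with those checks added. The choice of TypeError for non-integers and ValueError for negatives is my assumption; the function under test is only shown in the screenshot.

```python
def factorial(n: int) -> int:
    """Return n! for a non-negative integer n."""
    # bool is a subclass of int, so exclude it explicitly.
    if isinstance(n, bool) or not isinstance(n, int):
        raise TypeError("n must be an integer")
    if n < 0:
        raise ValueError("n must be non-negative")
    # 0! and 1! are both 1; otherwise recurse.
    return 1 if n < 2 else n * factorial(n - 1)
```

With checks like these in place, a test using pytest.raises(ValueError) for -1 and pytest.raises(TypeError) for '5' would pass. Without them, '5' would fail somewhere inside the arithmetic with a less helpful error.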

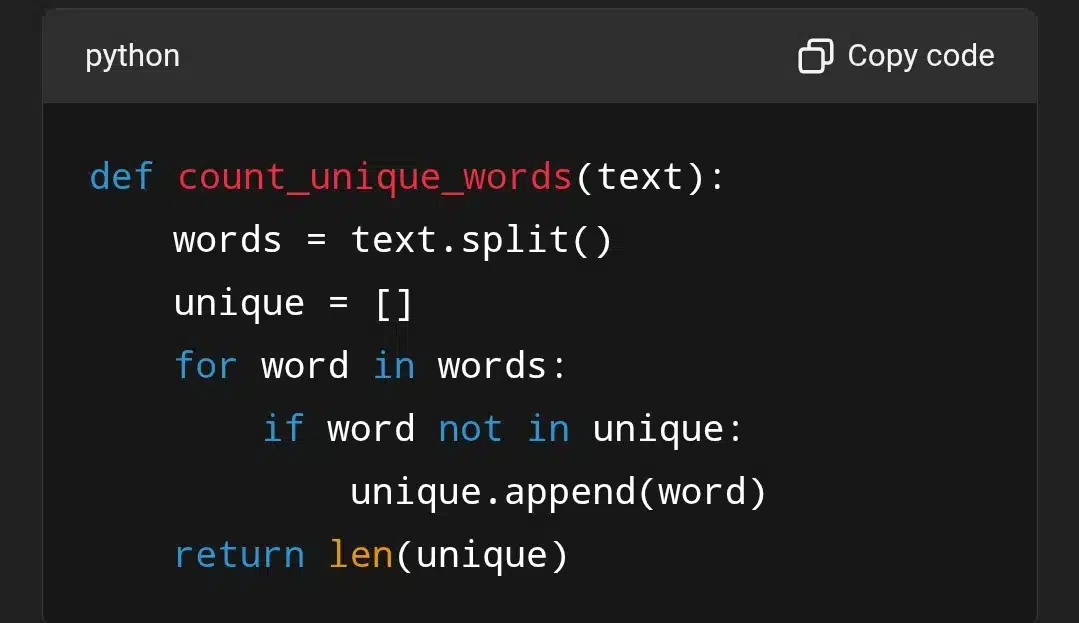

Prompt 10: Optimize a Python Function for Performance

Prompt: “Optimize the following Python function for better performance. Avoid unnecessary loops, use built-in functions where possible, and reduce time complexity.”

This prompt tests the AI’s ability to:

- Spot inefficiencies (e.g., using a list instead of a set)

- Rewrite logic using performant, idiomatic tools

- Explain optimizations clearly

What I’m watching for:

- Does it switch from list to set to reduce lookup time?

- Is the logic still correct and cleaner?

- Does the explanation justify the changes?

This simulates a real-world case where a developer writes working but inefficient code and you want the AI to tighten it up.

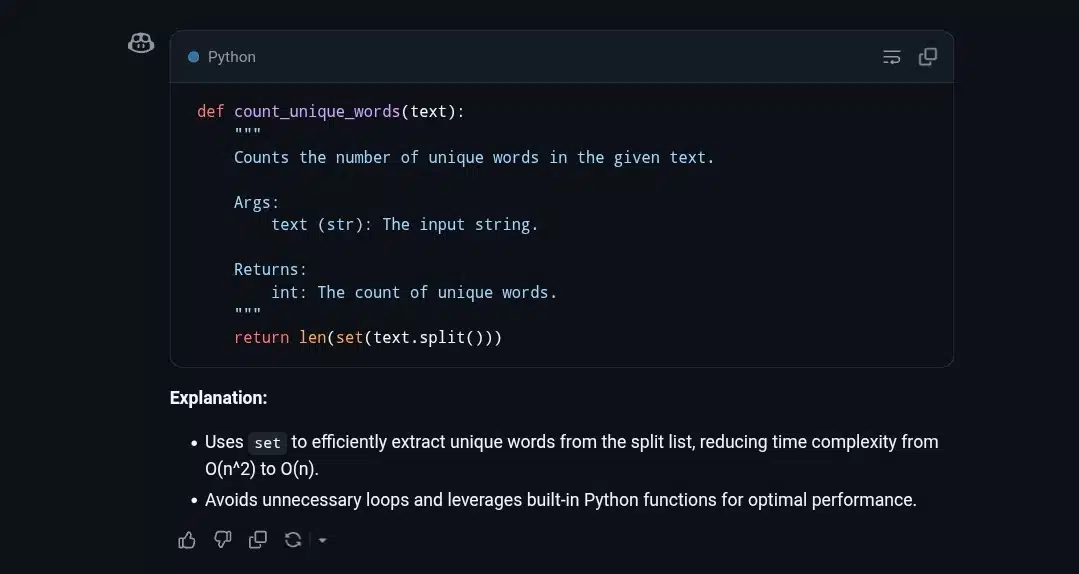

GitHub Copilot Response

Copilot nailed the bare minimum. You get a sleek, optimized one-liner. It’s perfect if you know what you’re doing and just want clean, production-ready code. It also includes a minimal docstring and a short blurb about performance improvement. That’s it. No hand-holding.

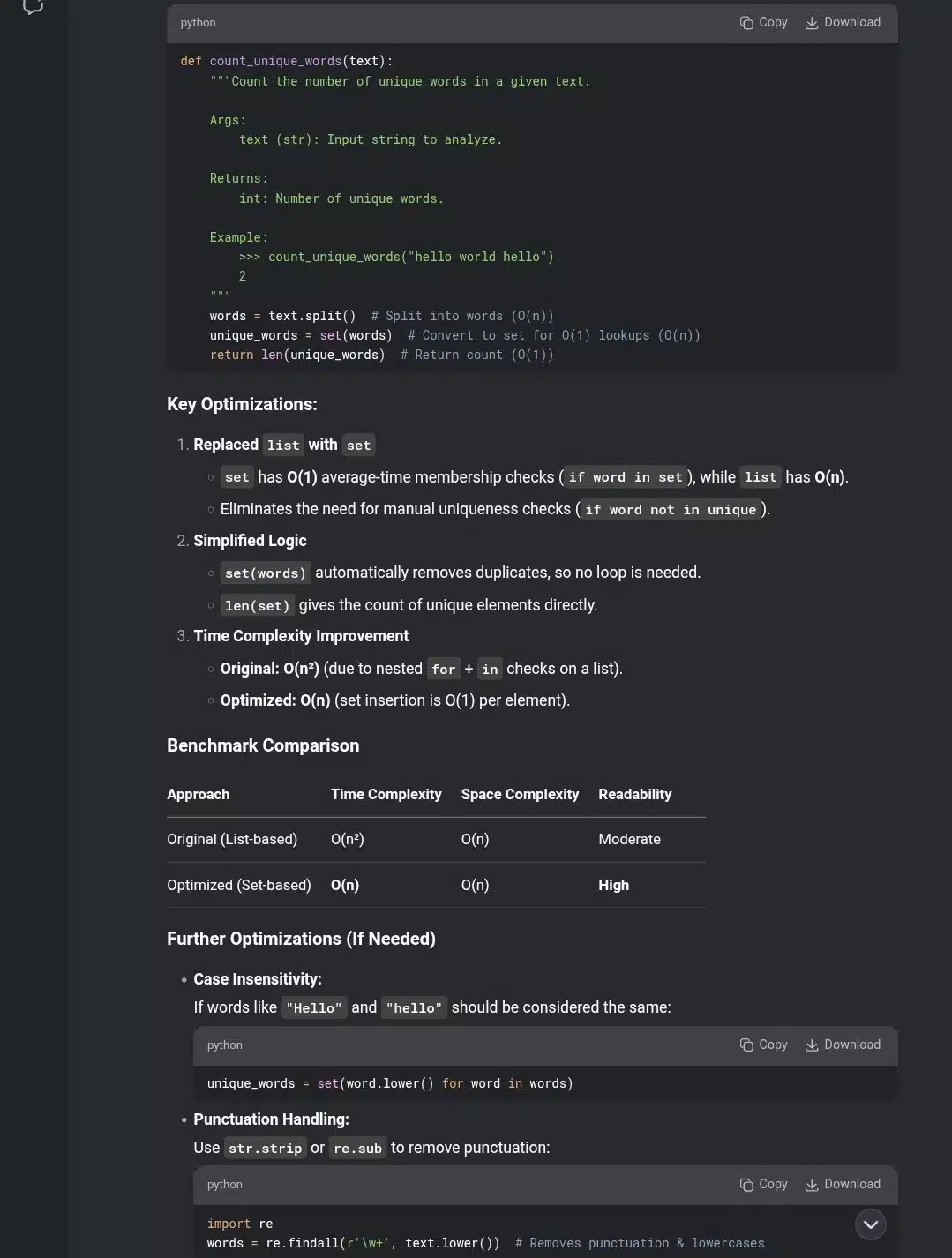

DeepSeek Response

DeepSeek treated it like a code review plus lesson plan. It didn’t just rewrite the function, it:

- Explained the time complexity of both the original and optimized versions.

- Justified the use of set over list with clear performance reasoning.

- Provided before-and-after benchmarks (even in table form).

- Suggested further enhancements like case-insensitive comparisons and punctuation handling.

- Wrapped it all in best-practice docstring formatting.

It even invited further refinement, like memory optimization with generators. So, it clearly assumes you’re in learning or documentation mode.

Bottom line:

- If you want just the final answer, Copilot wins for speed and simplicity.

- If you want to understand why it’s the best answer and see what else you could improve, DeepSeek goes the extra mile.
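The list-to-set switch is the heart of this prompt, so here is a small illustration of it. The function in my test is only shown in the screenshot, so this uses a hypothetical stand-in (finding the items two lists share) rather than the original code.

```python
def common_items_slow(first, second):
    """Quadratic baseline: `in` on a list scans it element by element."""
    result = []
    for item in first:
        if item in second and item not in result:
            result.append(item)
    return result


def common_items_fast(first, second):
    """Same result using a set, whose membership test is O(1) on average."""
    lookup = set(second)
    # dict.fromkeys drops duplicates while keeping first-seen order.
    return list(dict.fromkeys(item for item in first if item in lookup))
```

The fast version assumes the items are hashable, which is the usual price of moving to a set. For two lists of length n, the work drops from roughly n squared comparisons to roughly n lookups.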

Wrapping Up: Copilot vs DeepSeek — Which AI Coder Fits You Best?

When it comes to AI coding assistants, both GitHub Copilot and DeepSeek bring real value, but in very different ways.

GitHub Copilot is fast, efficient, and great for developers who already know what they want. It’s ideal when you need clean, ready-to-run code with minimal fluff. You get solid solutions, but you might miss out on deeper reasoning unless you ask explicitly.

DeepSeek, on the other hand, feels more like a pair programming partner that explains every step. It doesn’t just give you the answer, it helps you understand why it works. That makes it especially useful for learning, documentation, code reviews, and when you’re exploring edge cases or performance.

So who should use what?

- Choose Copilot if you want quick solutions with minimal explanations.

- Choose DeepSeek if you’re looking to understand, document, or debug thoroughly.

Ultimately, it’s not a question of which is better, but which is better for your current task. Some days you need a code generator. Other days, you need a mentor. Now you know which tool can play each role.
