GitHub Copilot is a coding assistant. It’s built to help developers write, fix, and understand code. Gemini isn’t. It’s a general AI assistant made to handle a bit of everything, from planning trips to solving math problems.

So, this isn’t really a fair test.

But since Google says Gemini can code, I wanted to see how well it holds up next to Copilot. I gave both tools the same 10 coding prompts. They cover a mix of tasks including writing functions, debugging, translating code, and even generating regex. The goal was to see how each tool handles practical coding requests in real time.

Let’s see how they did.

Prompt 1: Creating a basic Flask app with one route that returns ‘Hello, World!’

Prompt: “Create a basic Flask app with one route that returns ‘Hello, World!’ when accessed.”

What I’m watching out for:

- Does it import the correct Flask components?

- Is the structure of the app clean and runnable?

- Are best practices followed (e.g., if __name__ == '__main__')?

- Is the response exactly “Hello, World!”?

Both tools nailed the prompt. I asked for a basic Flask app with one route that returns “Hello, World!”, and that’s exactly what I got.

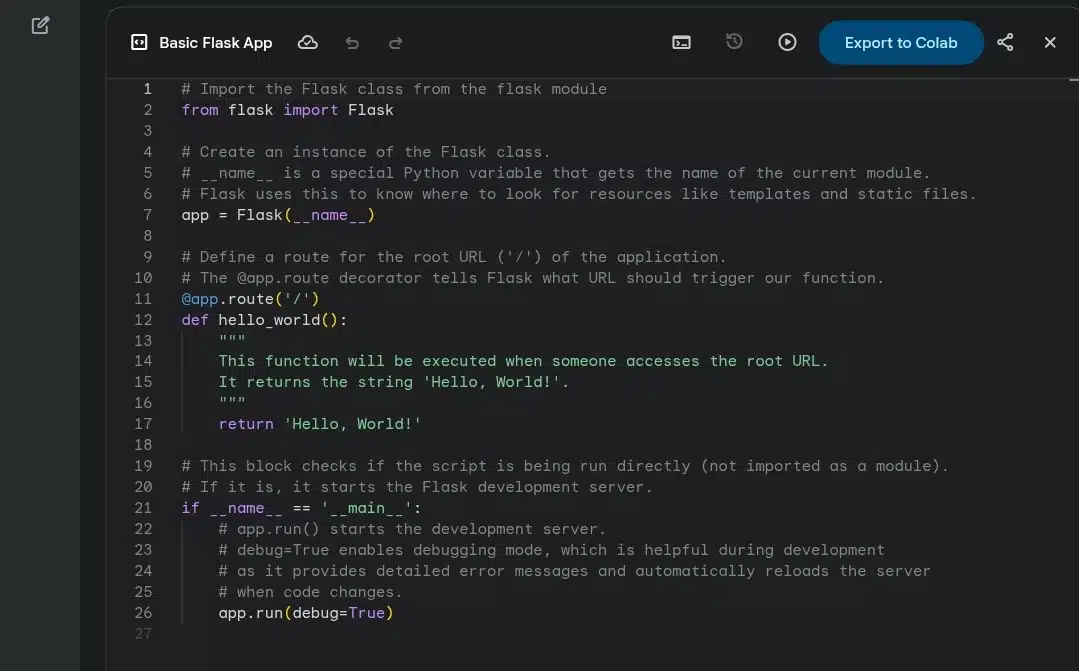

Gemini’s Response

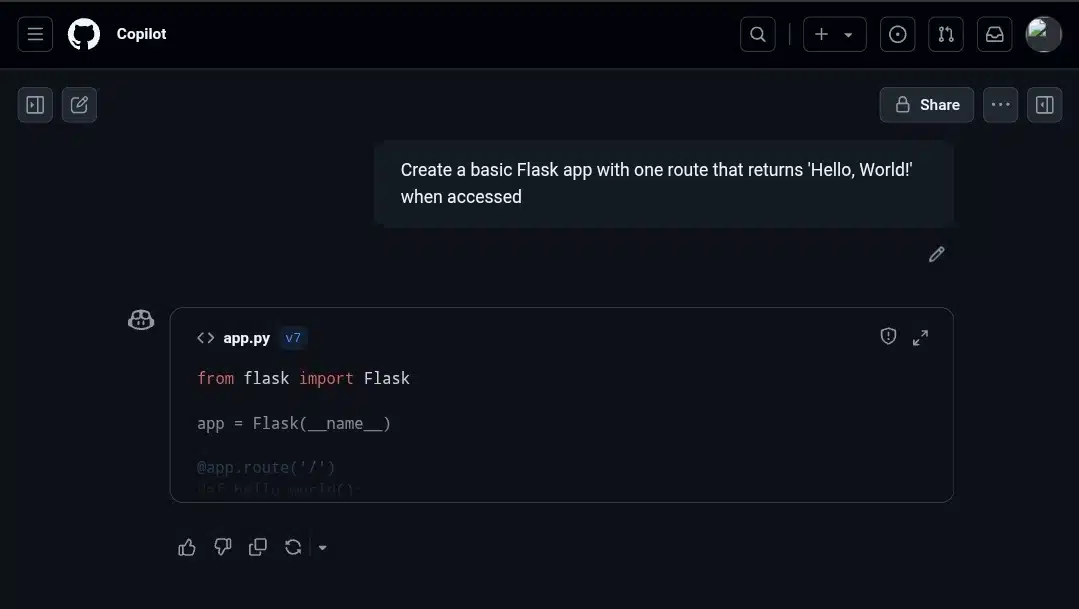

GitHub Copilot gave me a clean, minimal script. No comments, no extras—just what I asked for. It feels like something I’d write myself when spinning up a quick test app. It included the right structure: imported Flask, defined a single route, and added the if __name__ == ‘__main__’ block with debug=True. Simple and straight to the point.

GitHub Copilot’s Response

Gemini also returned working code, but added a lot more explanation. Every part of the code came with inline comments describing what it does. There were even safety notes about using host=’0.0.0.0′ and how debug=True affects behavior. It’s not bad—especially for beginners—but it’s a bit much if I just want the code and know what I’m doing.

Functionally, both results are fine. But the extra commentary from Gemini felt like overkill. For a developer who wants clean code fast, Copilot’s response fits better. But if you’re still learning or need reminders on what each part does, Gemini’s extra guidance might come in handy. Still, even for beginners, it’s probably better when that level of detail is optional, not automatic.

Overall, this one’s a pretty easy win for Copilot in terms of usability and speed.

Prompt 2: Reversing a string in Python

Prompt: “Write a Python function that takes a string and returns it reversed.”

What I’m watching out for:

- Is the function syntax valid and error-free?

- Does it use a Pythonic method like slicing or reversed()?

- Is the function named clearly and appropriately?

- Does it handle edge cases like empty strings or single characters?

For this one, I asked both tools to write a function that reverses a string. It’s a super basic task, but a good way to see how each assistant handles simple logic.

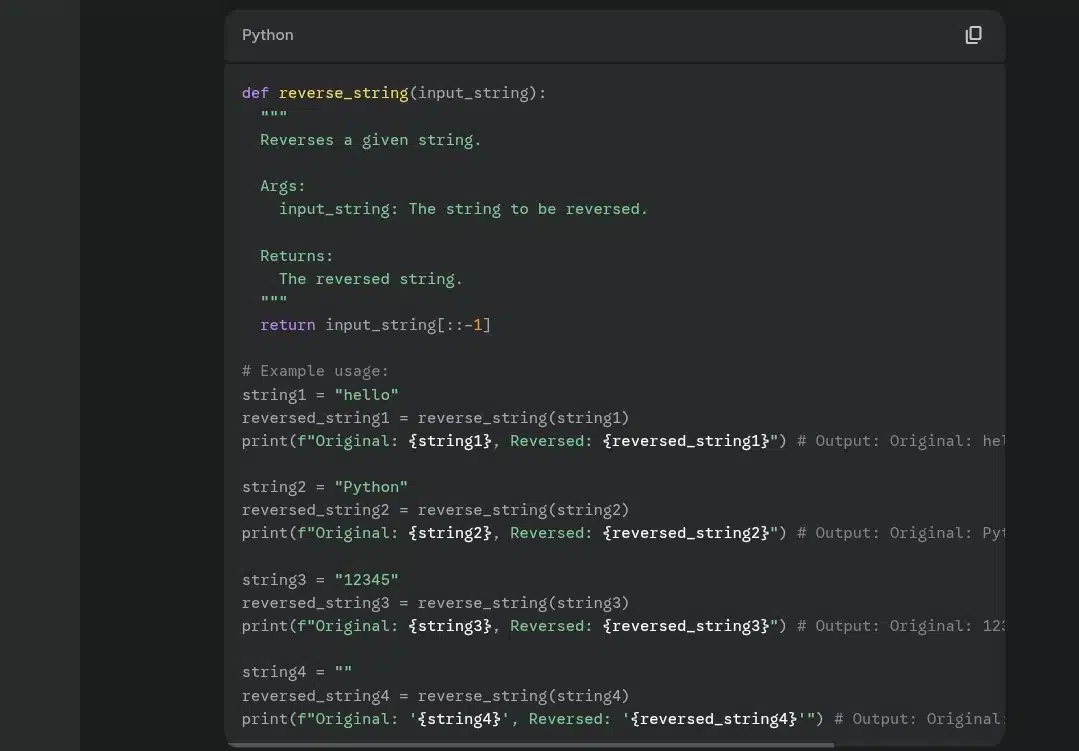

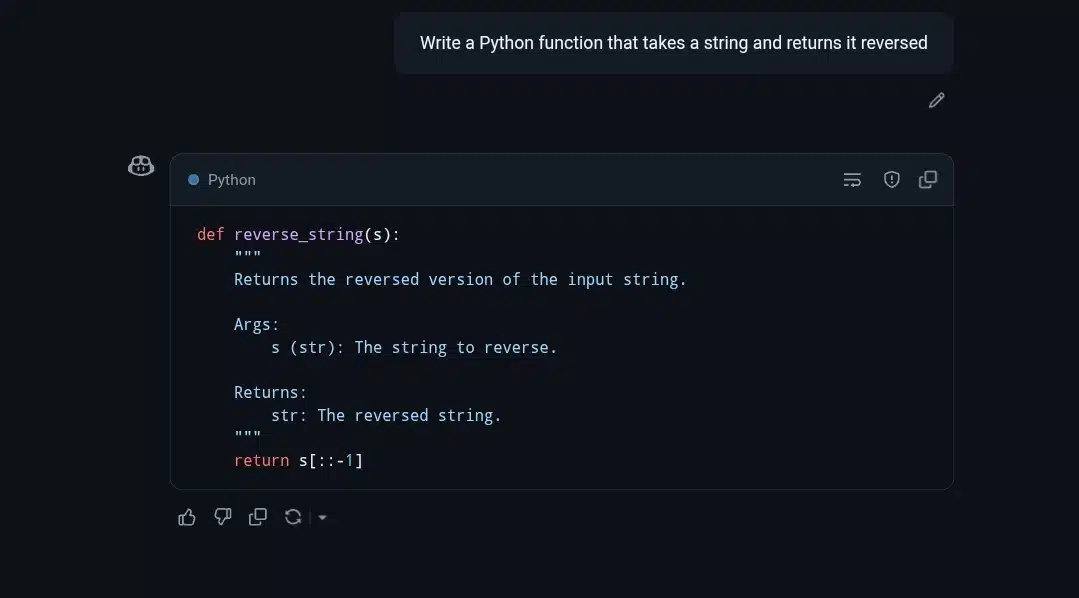

GitHub Copilot’s Response

Copilot responded with exactly what I needed. The function was short, clear, and well-commented. It used the classic Python slice [::-1] to reverse the string and wrapped it with a standard docstring. It gave me a neat utility function that does what it says on the tin. This is something I could drop into a codebase right away.

Gemini’s Response

Gemini, again, gave a longer response. The function itself was solid, with identical logic and correct use of slicing. But then it added four different usage examples, each with a print statement. That’s helpful if you’re testing or learning, but it makes the response longer than it needs to be. It also followed up with a plain-English breakdown of how slicing works and what the function is doing.

The breakdown is accurate, but I didn’t ask for it. It feels more like a mini tutorial than a direct answer to the prompt.

So, while both tools gave correct and working code, Copilot stayed focused. Gemini went into teacher mode again. That’s great for a beginner audience, but if you’re trying to move quickly, it’s extra noise.

In this case, Copilot was just faster to absorb and copy from. Gemini was clear, but almost too eager to explain what I already knew.
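For reference, the slicing approach both tools relied on can be sketched in a few lines. This is a minimal illustration rather than either tool’s exact output, and the name reverse_string is my own assumption.

```python
def reverse_string(text: str) -> str:
    """Return a copy of text with its characters in reverse order."""
    # A slice with a step of -1 walks the string from the last character
    # to the first, so empty and single-character strings need no special case.
    return text[::-1]


if __name__ == "__main__":
    print(reverse_string("hello"))    # olleh
    print(repr(reverse_string("")))   # ''
```

Because slicing never indexes a specific position, the edge cases from the checklist (empty strings, single characters) fall out for free.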

Prompt 3: Optimizing a bubble sort implementation

Prompt: “Here’s a basic bubble sort function in Python. Optimize it for better performance.”

What I’m watching out for:

- Does it recognize and implement early exit optimization (no swaps = break)?

- Is the optimized code still clear and readable?

- Does it maintain correct output?

- Are unnecessary loops or comparisons eliminated?

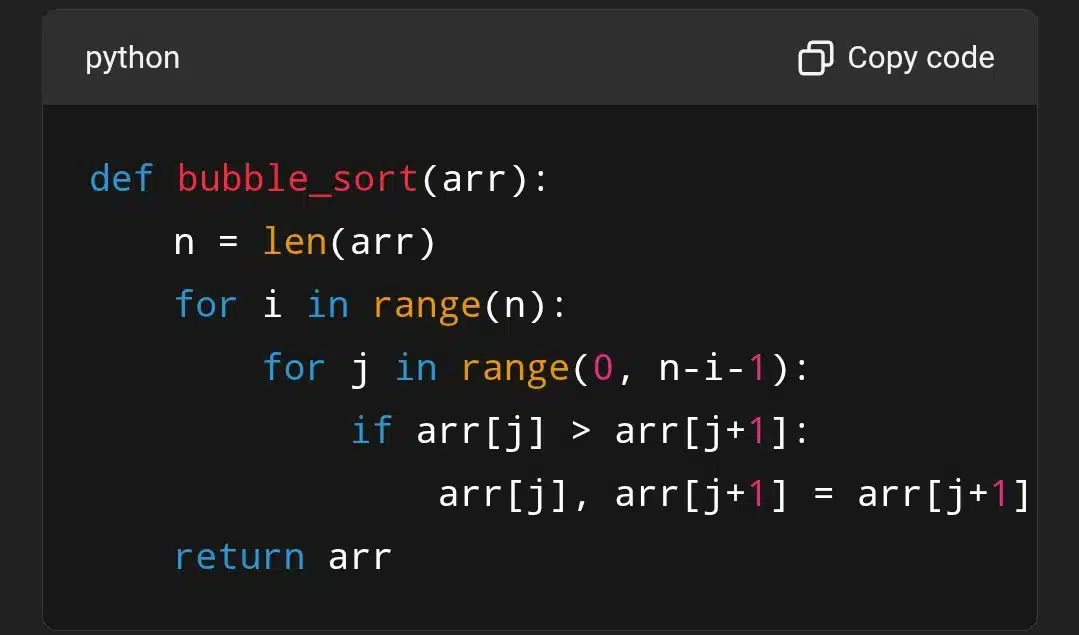

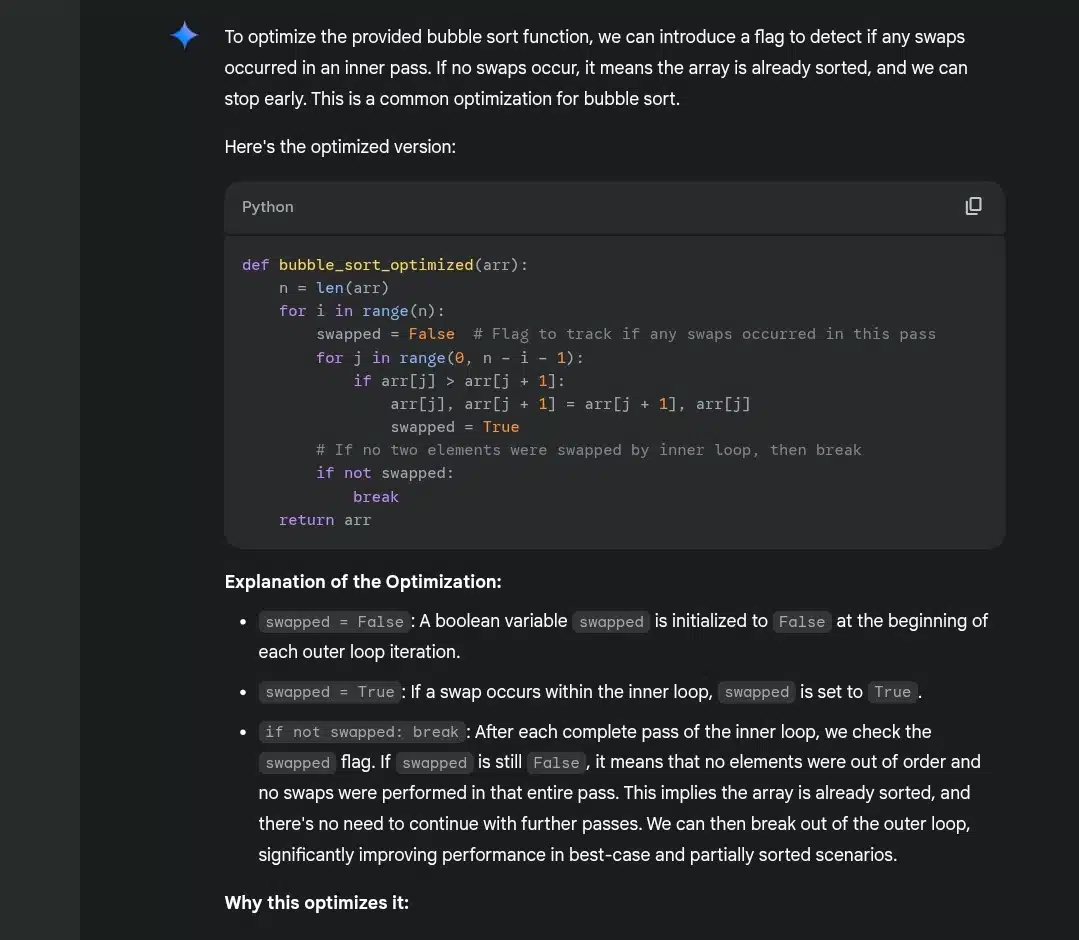

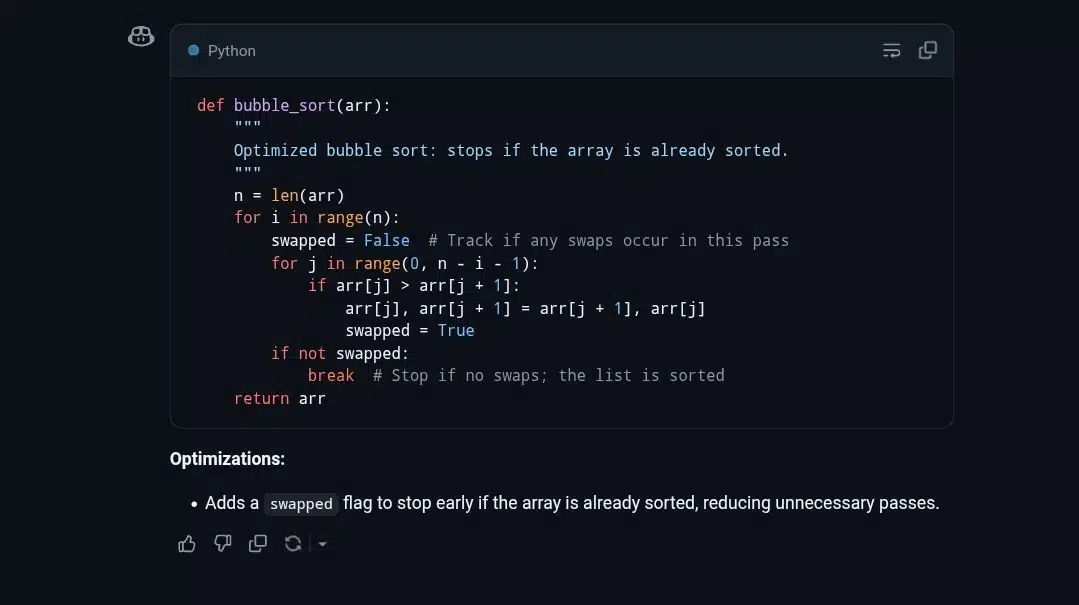

This prompt asked both tools to write an optimized version of bubble sort, one that uses a flag to detect whether the list is already sorted and break early if so.

GitHub Copilot’s Response

Copilot nailed it quickly. It gave me a clean and correct implementation using the swapped flag. There was a short docstring, logical structure, and comments were minimal but helpful. Again, just the efficient code that does exactly what I asked.

Gemini’s Response

Gemini, on the other hand, gave a full-on lecture. It started with a brief explanation of what the optimization is, then delivered code that’s functionally the same as Copilot’s. After that, it went into detail about how the swapped flag works, why the optimization matters, and even broke down best-case, worst-case, and average-case time complexities.

I appreciated the context, but it was a bit too much for this kind of task. I wasn’t asking for an intro to sorting algorithms; I just needed optimized code. Also, Gemini’s version was a bit bloated with comments and explanations. It almost felt like I was reading a mini textbook page.

That said, both outputs were technically perfect and correctly optimized. But in a real coding session, I’d prefer Copilot’s answer because I can plug it in and move on. Gemini is trying to help me understand, but sometimes, less is more. Especially when I already know what bubble sort is doing under the hood.
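To make the early-exit optimization concrete, here is a rough sketch of bubble sort with a swapped flag. It is not copied from either tool; the function name and the choice to return a sorted copy are assumptions made for illustration.

```python
def bubble_sort(items: list) -> list:
    """Return a new list with the elements of items in ascending order."""
    result = list(items)  # work on a copy so the caller's list is untouched
    n = len(result)
    for i in range(n - 1):
        swapped = False
        # After pass i, the last i elements are already in their final place,
        # so the inner loop can stop earlier on every pass.
        for j in range(n - 1 - i):
            if result[j] > result[j + 1]:
                result[j], result[j + 1] = result[j + 1], result[j]
                swapped = True
        # A full pass without swaps means the list is sorted: stop early.
        if not swapped:
            break
    return result


print(bubble_sort([5, 1, 4, 2, 8]))  # [1, 2, 4, 5, 8]
```

On an already sorted list the outer loop runs once and exits, which is where the best-case linear behavior comes from.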

Prompt 4: Debugging a broken Python script

Prompt: “This Python code is throwing an error. Fix the bug.”

What I’m watching out for:

- Does it catch the syntax error (missing colon)?

- Does it correct the call syntax (5 10 to 5, 10)?

- Is the explanation (if provided) accurate?

- Is the final code functional and clean?

For this prompt, I deliberately gave both tools a piece of Python code with two simple syntax errors: a missing colon after the function definition and a missing comma between arguments in a function call. It was a basic debugging test, perfect for seeing how well each tool handles quick fixes.

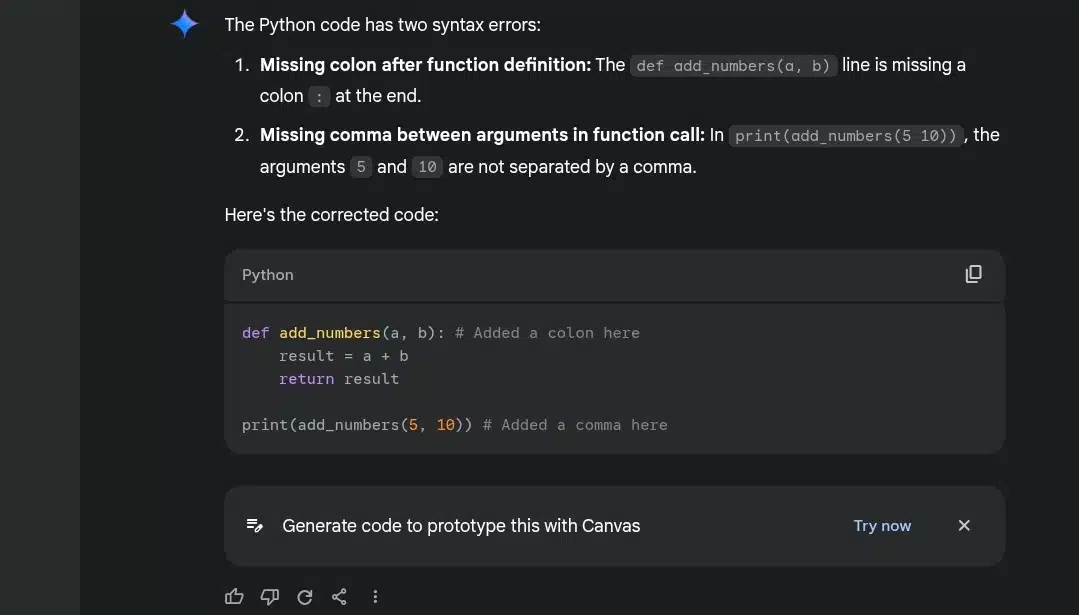

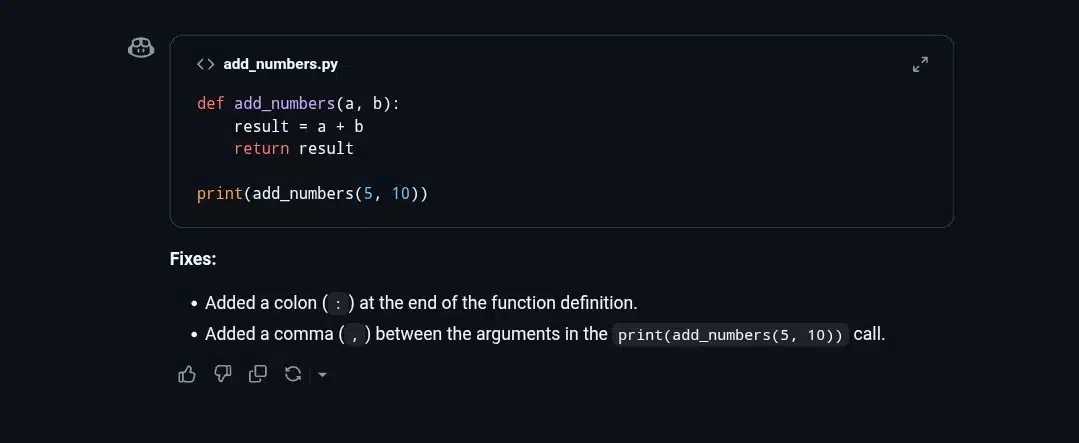

GitHub Copilot’s Response

Copilot handled it immediately. It rewrote the code without calling attention to the mistakes. Just a clean, corrected version with the colon and comma properly inserted.

Gemini’s Response

Gemini, though, made a point of explaining the errors before fixing them. It clearly pointed out both issues, described what was wrong, and then provided the corrected code. It even added a comment next to each fix.

I liked that Gemini broke it down. It felt like having a teacher double-check your work and explain what went wrong. That could be helpful for beginners or anyone still building their confidence in Python. But for someone who just wants to see a working version and move on, it’s not needed.

I’d say they both passed this test easily, but the extra verbosity from Gemini might either be helpful or a bit much, depending on the situation.
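The test script itself only appears in the screenshots, so the snippet below is a hypothetical stand-in that contains the same two mistakes. The function name add_numbers is an assumption; only the 5, 10 call comes from the checklist above.

```python
# Hypothetical broken input, kept as comments because it does not parse:
#
#   def add_numbers(a, b)        <- missing colon after the signature
#       return a + b
#
#   print(add_numbers(5 10))     <- missing comma between the arguments


def add_numbers(a, b):  # colon restored
    return a + b


result = add_numbers(5, 10)  # comma restored
print(result)  # 15
```

Both mistakes are syntax errors, so Python rejects the file before running a single line, which is why a fix has to address both at once.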

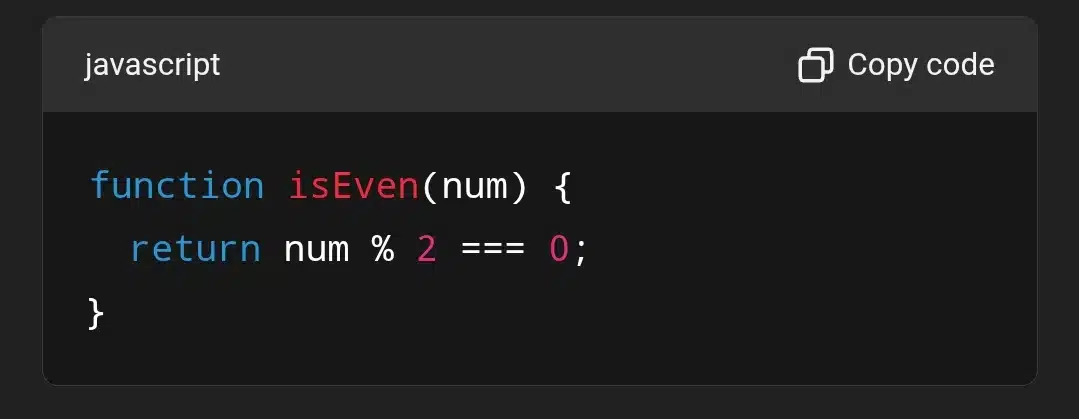

Prompt 5: Writing a Jest unit test

Prompt: “Write a Jest unit test for the following JavaScript function.”

What I’m watching out for:

- Is the test correctly structured in Jest syntax?

- Does it test both even and odd cases?

- Is the test file modular and appropriately named?

- Does it use expect and toBe correctly?

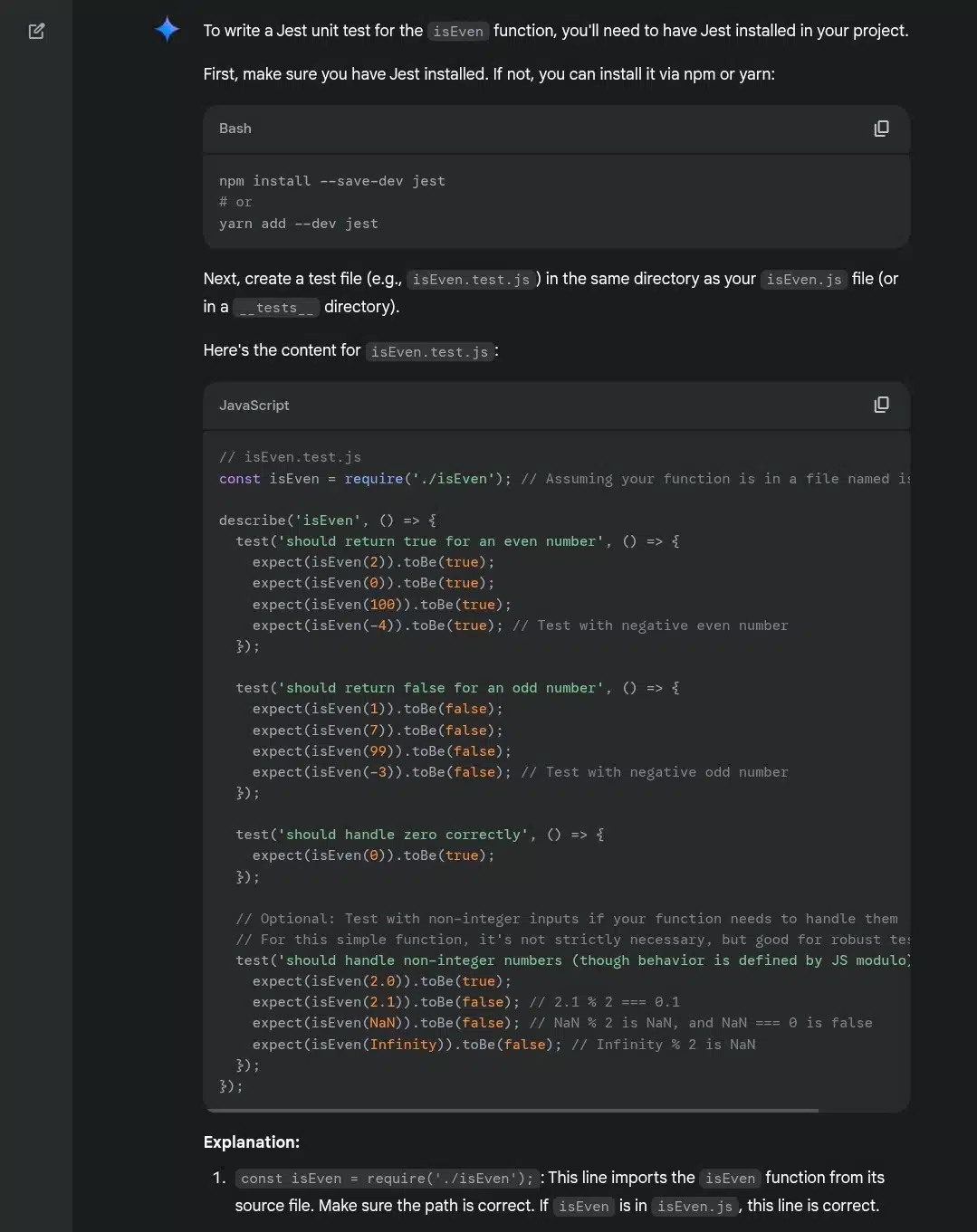

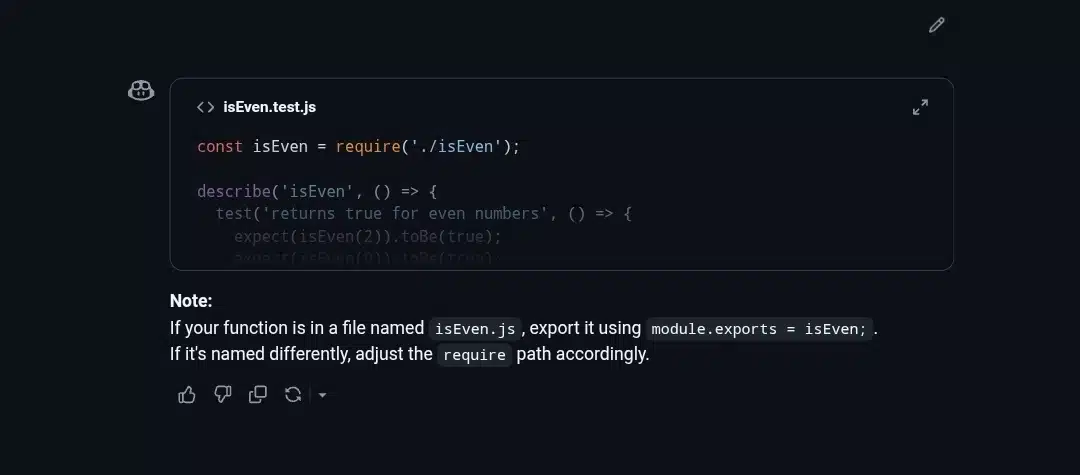

This test was about writing a Jest unit test for a simple isEven function, and I wanted to see which assistant would go beyond just a basic implementation and actually help a developer get everything set up properly.

Gemini’s Response

Gemini gave a very thorough walkthrough. Beyond the test cases, it also included:

- Instructions to install Jest using npm or yarn

- How to structure the test file

- An explanation of each line in the test code

- How to set up the test script in package.json

- How to actually run the tests

It also included a wider variety of test cases: positives, negatives, zero, and even edge cases like decimals, NaN, and Infinity. For someone who’s newer to testing or just wants a checklist, Gemini laid it all out.

GitHub Copilot’s Response

Copilot, on the other hand, stuck to the essentials. It gave solid test coverage for even and odd numbers and a short test for non-integers, but no setup help, no install steps, and no explanation. The code was clean and correct, but if you didn’t already know how to run Jest, you’d be left hanging.

In short:

- Gemini is like a mentor who gives you both the answer and the tutorial.

- Copilot is more like a coding buddy who just hands you the code and trusts you know what to do with it.

Both were accurate, but if I were new to testing or needed a refresher, I’d appreciate Gemini’s detailed approach more here.

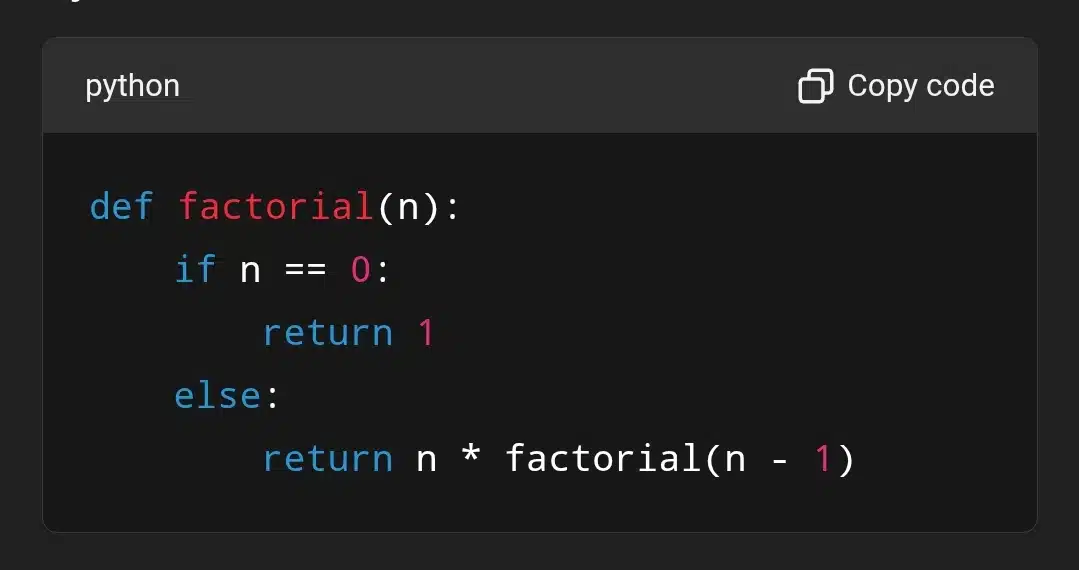

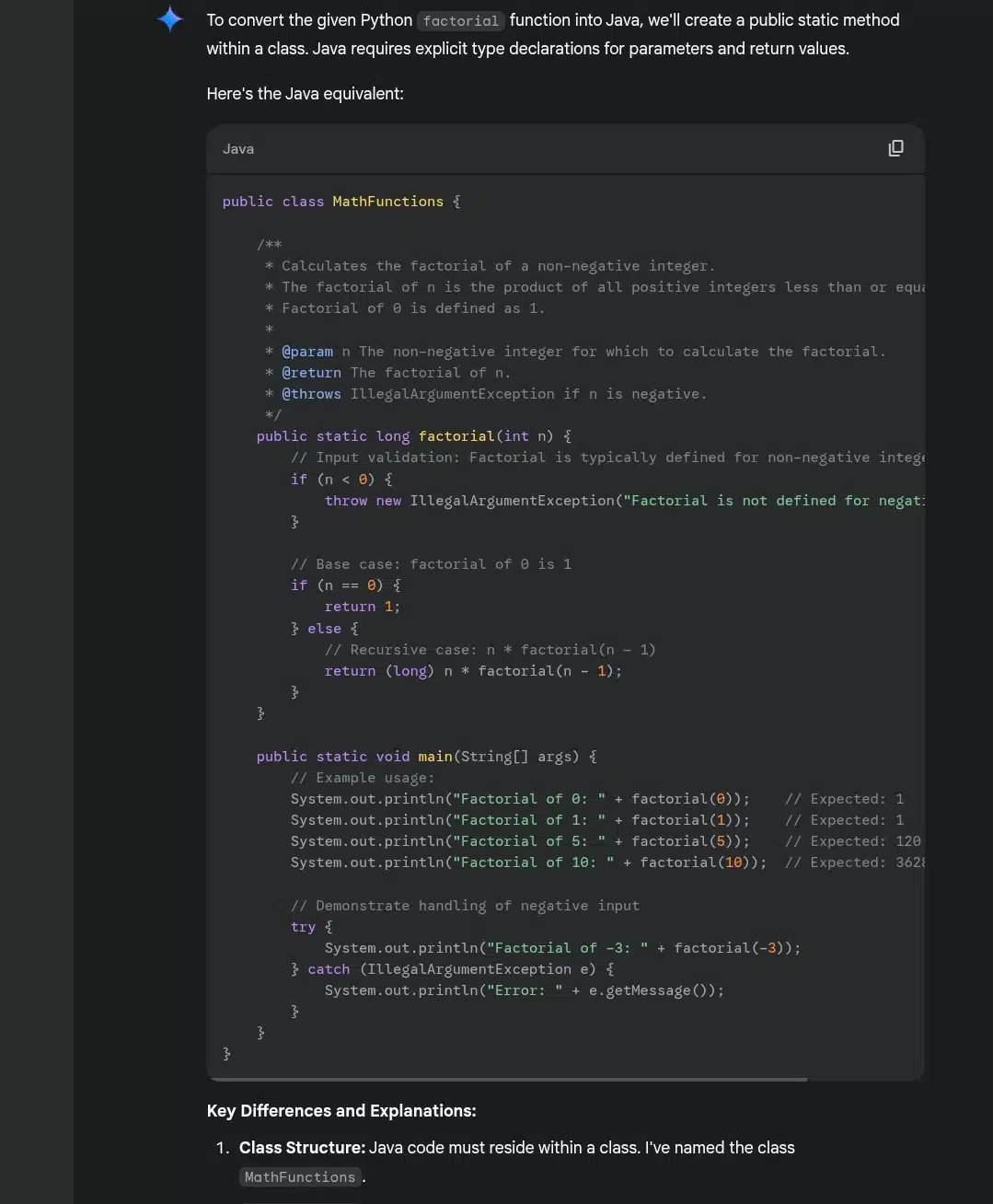

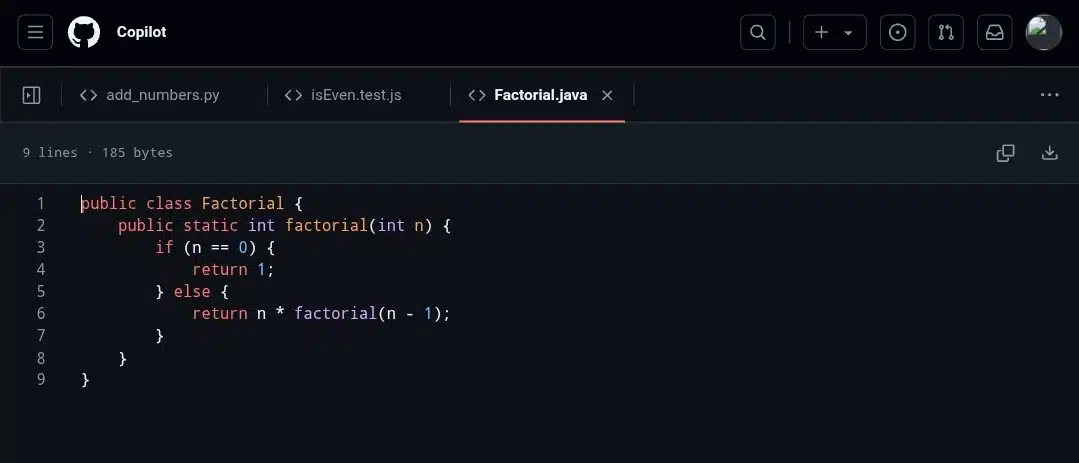

Prompt 6: Translating code from Python to Java

Prompt: “Convert the following Python function into Java.”

What I’m watching out for:

- Does it create a proper Java method inside a class?

- Are return types and parameters typed correctly?

- Does it implement recursion properly in Java?

- Is the translated code runnable?

This time the task was to convert a Python factorial function into Java. It’s a pretty straightforward function, but there’s a lot that could be said about best practices and Java-specific considerations.

Gemini’s Response

Gemini took the long route—and that’s a good thing here. It didn’t just translate the code; it turned it into production-ready Java. It:

- Wrapped the method in a clearly named MathFunctions class

- Used long for the return type to postpone integer overflow (a Java long can hold factorials up to 20!, while an int overflows past 12!)

- Added input validation to throw an IllegalArgumentException for negative inputs

- Included a main method to demonstrate usage and test different cases (including a try-catch for error handling)

- Even explained type promotion and why casting to long might help avoid issues

In other words, it gives you not just code but context, safety checks, and helpful habits for Java developers.

GitHub Copilot’s Response

Copilot, again, kept it super minimal. It:

- Used int for both input and return type (which will overflow quickly)

- Skipped input validation

- Gave no test or usage examples

- Omitted all commentary or structure beyond the function itself

It worked, but just barely. It’s the fastest path to getting something running, but it leaves you vulnerable to bugs and doesn’t scale.

In short:

- Gemini gave a polished, defensive, and beginner-friendly Java solution.

- Copilot gave the most barebones, minimalist version—useful if you’re confident and just need the core logic.

In a teaching or real-world coding scenario, Gemini’s approach is far more useful.
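The overflow point is easy to check with a little arithmetic. The sketch below uses Python’s arbitrary-precision integers to find where a factorial stops fitting in Java’s 32-bit int and 64-bit long; the helper name largest_safe_n is my own.

```python
import math

INT_MAX = 2**31 - 1    # largest value of a Java int (32-bit signed)
LONG_MAX = 2**63 - 1   # largest value of a Java long (64-bit signed)


def largest_safe_n(limit: int) -> int:
    """Return the largest n for which n! still fits within limit."""
    n = 0
    while math.factorial(n + 1) <= limit:
        n += 1
    return n


print(largest_safe_n(INT_MAX))   # 12
print(largest_safe_n(LONG_MAX))  # 20
```

So an int-based factorial silently returns wrong results from 13 onward, while a long-based one holds out until 21. Neither is unbounded, but the long version covers a noticeably wider range.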

Prompt 7: Writing a Git commit message

Prompt: “Write a meaningful git commit message for this code change.”

What I’m watching out for:

- Does it describe what changed?

- Does it explain why the change was made?

- Is the subject line clear and concise (around 50 characters)?

- Does it follow conventional commit guidelines?

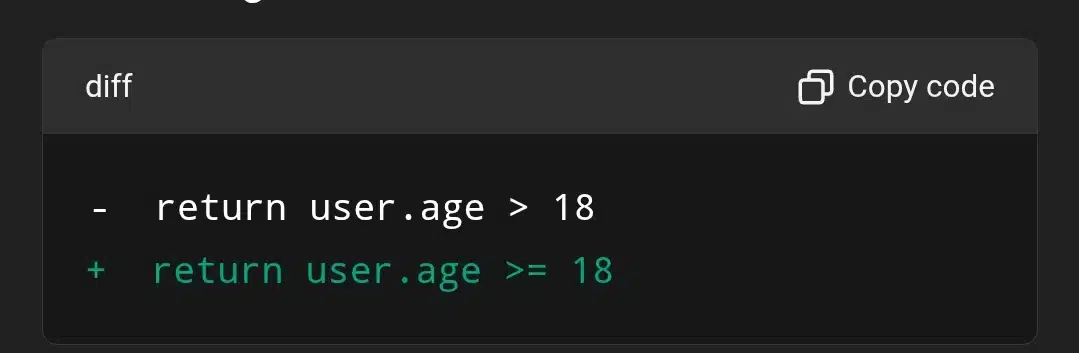

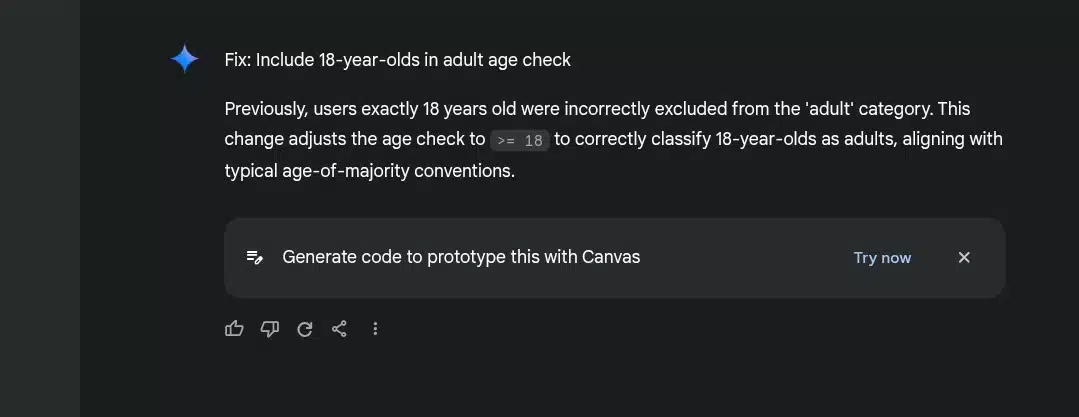

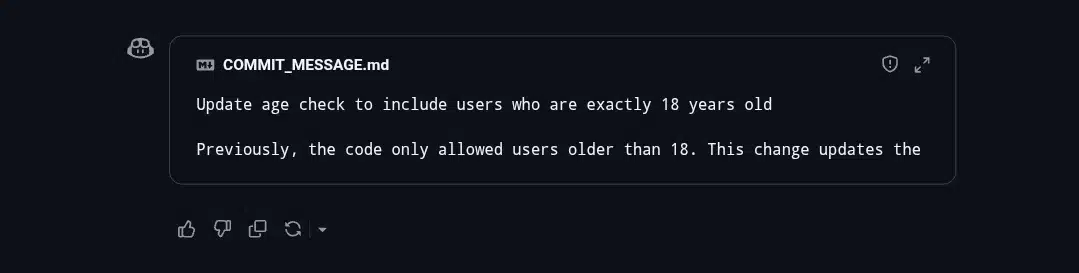

This prompt tested how well each tool can write a clear, concise commit message with a sensible explanation of a logic bug fix.

Gemini’s Response

Gemini went straight to the point with:

- A precise title: “Fix: Include 18-year-olds in adult age check”

- A clear explanation of the bug: users aged exactly 18 were wrongly excluded

- A rationale: aligning with “typical age-of-majority conventions”

It’s written like a proper conventional commit: short, scoped, and helpful for teams reviewing changes or scanning commit logs later.

GitHub Copilot’s Response

Copilot also did a good job:

- The title is clear and accurate

- The body explains what the old behavior was, what the new behavior is, and why it matters

It’s maybe a touch wordier than necessary for a small logic fix, but still very readable and helpful.

In short:

- Gemini offered a commit message that’s clean, scoped, and ideal for version control hygiene—great for collaborative environments.

- Copilot provided a solid alternative, just slightly more verbose.

Both are good here.
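The diff I gave the tools is only visible in the screenshots, but the bug both messages describe is a classic off-by-one comparison. A plausible reconstruction, with hypothetical function names, looks like this:

```python
ADULT_AGE = 18  # threshold taken from the commit title quoted above


def is_adult_before_fix(age: int) -> bool:
    # Strict comparison: someone aged exactly 18 is wrongly rejected.
    return age > ADULT_AGE


def is_adult(age: int) -> bool:
    # Inclusive comparison: 18 now counts as an adult.
    return age >= ADULT_AGE


print(is_adult_before_fix(18))  # False
print(is_adult(18))             # True
```

A one-character change like this is exactly where a good commit message earns its keep, because the diff alone doesn’t say why the boundary moved.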

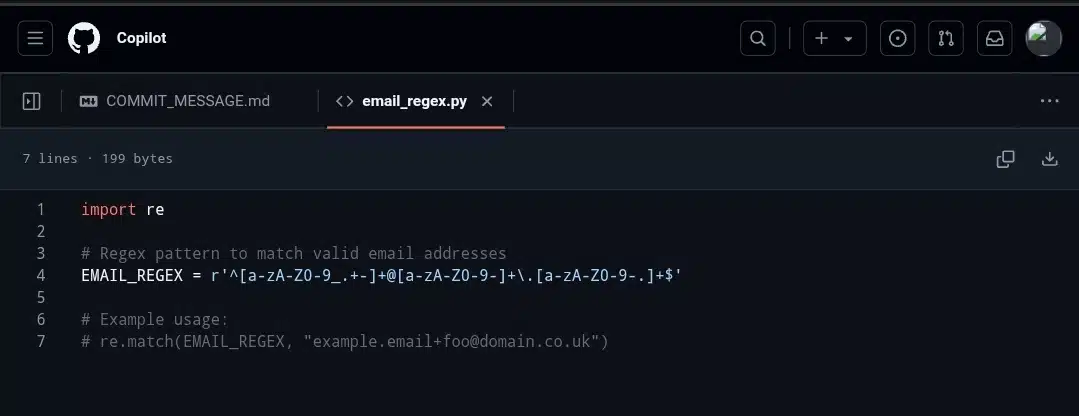

Prompt 8: Generating a regex to match email addresses

Prompt: “Write a regex pattern that matches valid email addresses.”

What I’m watching out for:

- Is the regex pattern correct and functional?

- Does it avoid overcomplication?

- Does it account for common edge cases (e.g., subdomains, symbols)?

- Is the explanation (if provided) accurate and understandable?

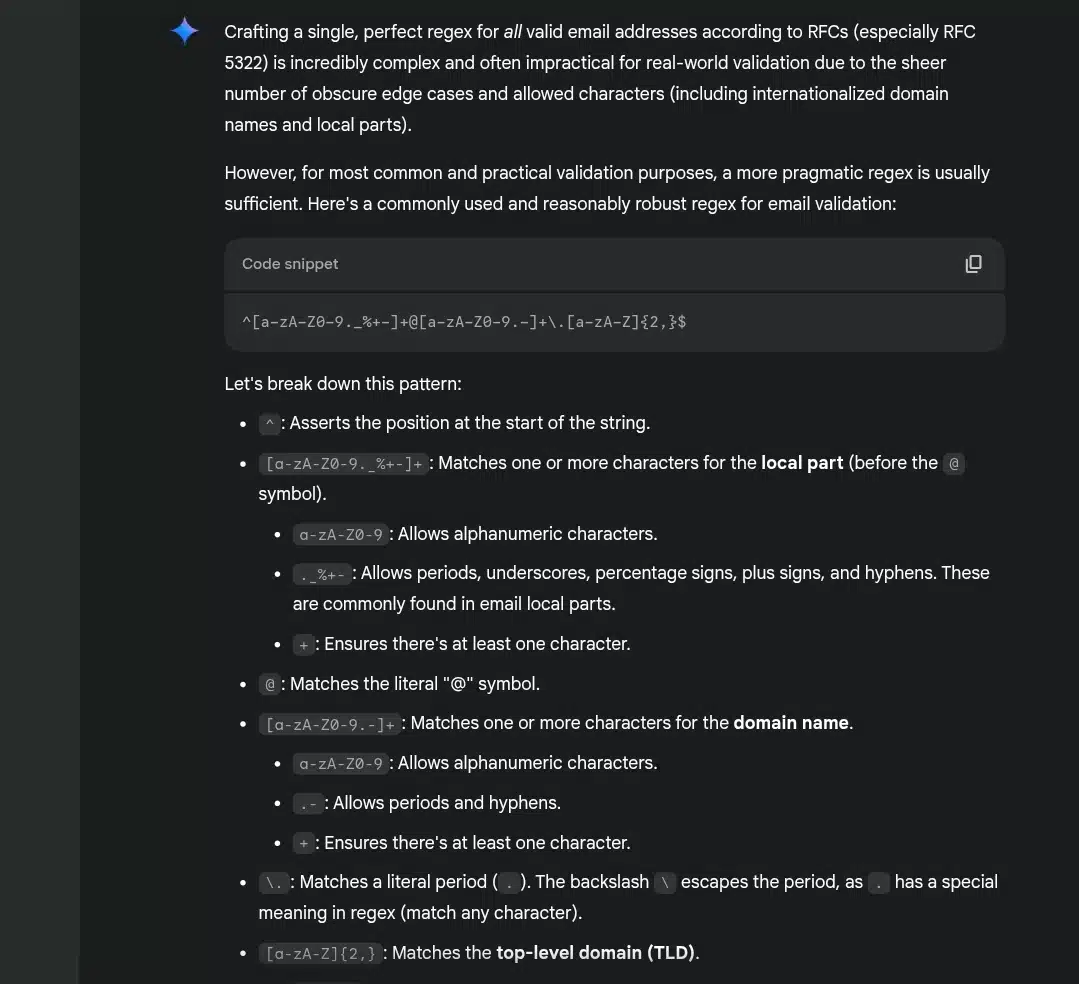

This prompt evaluated how each tool handles email validation with regular expressions, a classic but tricky challenge.

Gemini’s Response

Gemini took a realistic and accurate stance from the start, explaining that full RFC 5322 compliance is nearly impossible with regex alone. It gave a balanced, practical regex that covers most everyday use cases. It also:

- Offered a breakdown of the pattern, explaining each part line by line—ideal for learners or code reviewers.

- Called out the limitations clearly: internationalized domains, strict RFC coverage, etc.

- Finished with guidance on when this regex is appropriate and when to rely on stronger validation strategies like confirmation emails.

GitHub Copilot’s Response

Copilot delivered a clean, usable code snippet with a simple regex that works for many real-world cases. Its pattern is slightly less strict than Gemini’s recommendation, but still serviceable. It also lacked the deeper context, caveats, and explanation that Gemini provided.

Bottom line:

- Gemini wins this round for its depth, nuance, and developer guidance.

- Copilot is useful if you just want quick code, but Gemini is stronger for learning and production awareness.
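For a sense of what a practical, non-RFC-complete pattern looks like, here is a small sketch. The regex is a commonly used simplification and not the exact pattern either tool returned; the names EMAIL_PATTERN and looks_like_email are assumptions.

```python
import re

# Illustrative pattern only: local part, "@", domain labels, and a
# top-level domain of at least two letters. It deliberately ignores
# quoted local parts, internationalized domains, and other RFC 5322 cases.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def looks_like_email(value: str) -> bool:
    """Return True if value has the general shape local@domain.tld."""
    return EMAIL_PATTERN.fullmatch(value) is not None


print(looks_like_email("user@example.com"))                   # True
print(looks_like_email("first.last+tag@mail.example.co.uk"))  # True
print(looks_like_email("user@localhost"))                     # False
print(looks_like_email("not-an-email"))                       # False
```

A check like this only filters out obvious typos. Confirming that an address really exists still takes a confirmation email, as Gemini pointed out.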

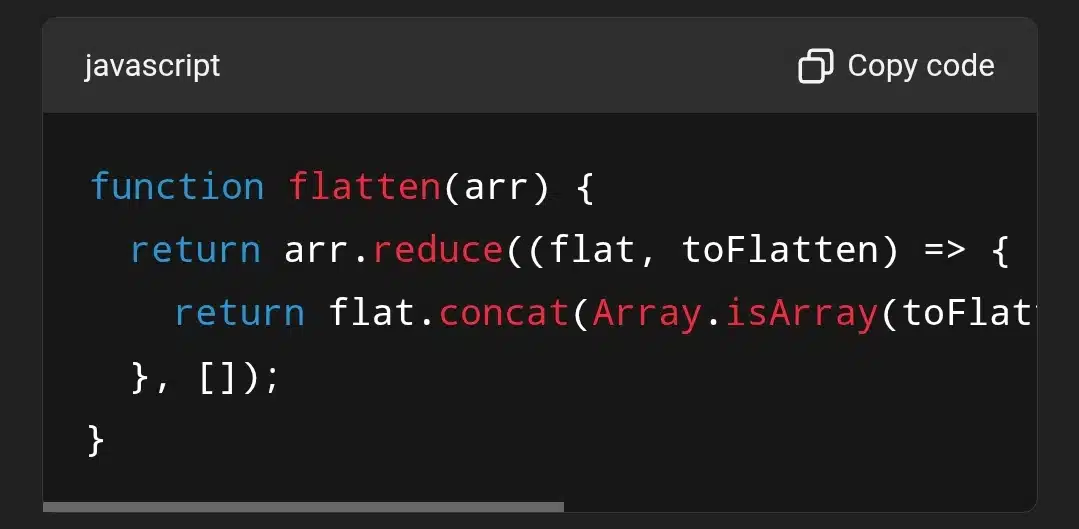

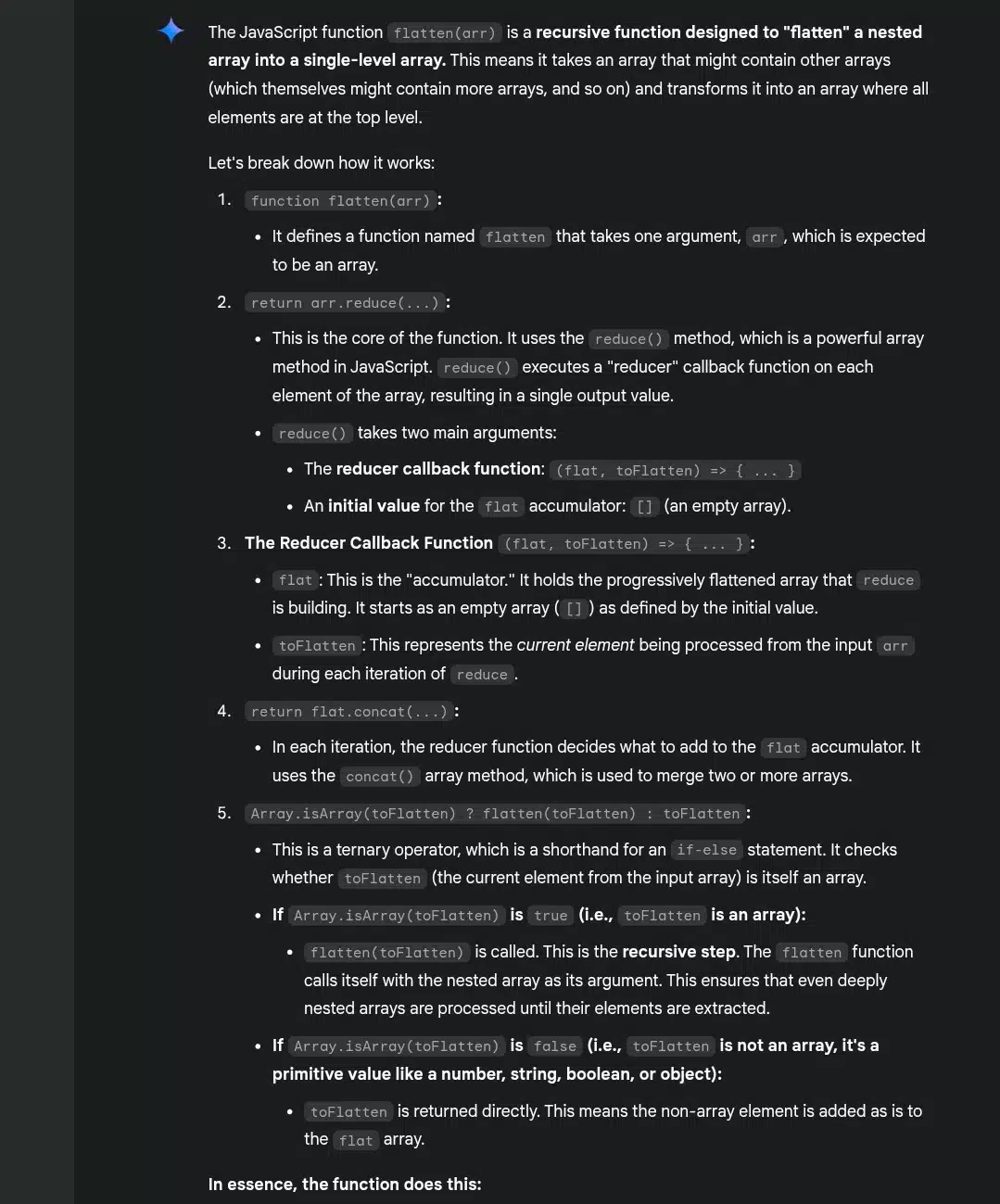

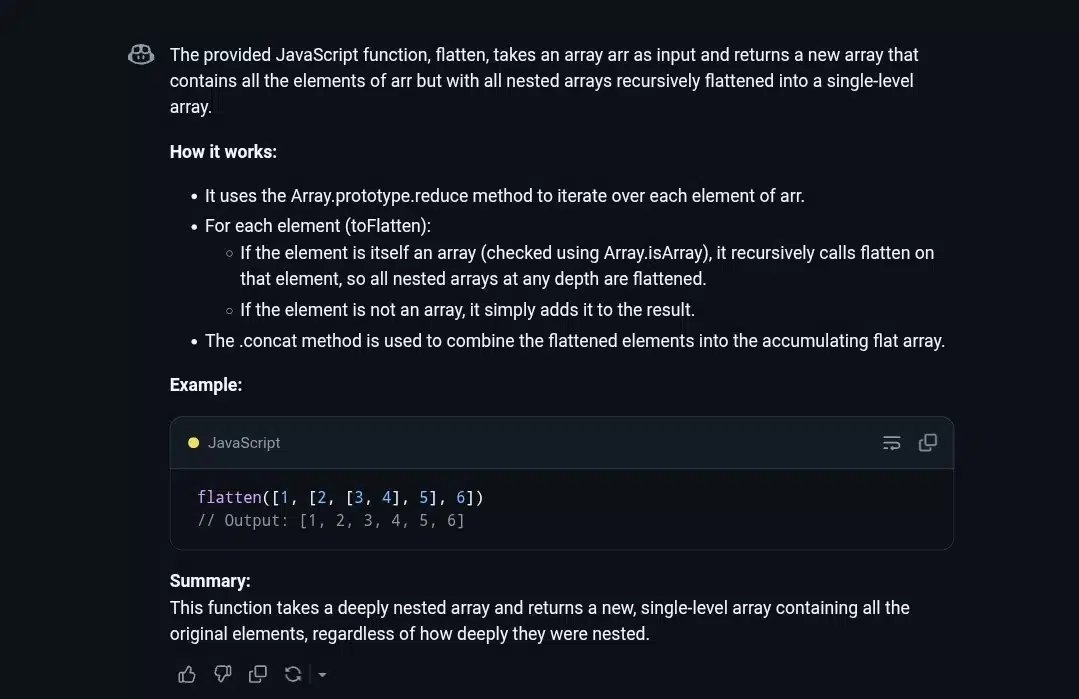

Prompt 9: Explaining what a JavaScript function does

Prompt: “Explain what this JavaScript function does.”

What I’m watching out for:

- Does it recognize this as a recursive array flattener?

- Does it correctly describe reduce, concat, and recursion?

- Does it explain what happens with nested arrays?

- Is the explanation clear to someone with intermediate JS knowledge?

This prompt asked both tools to explain a recursive flatten function in JavaScript, a classic functional programming use case.

Gemini’s Response

- Delivered a step-by-step breakdown of how flatten works.

- Explained each part of the function: the use of reduce, the ternary operator, Array.isArray, recursion, and concat.

- Went into detail about how each element is processed and how the recursion works.

- Included a full example with output, which helped ground the explanation in real-world usage.

- Its tone was educational, targeting someone who may not be very advanced with recursion or functional JS.

GitHub Copilot’s Response

- Gave a concise summary that clearly explained the flow.

- Highlighted the same components: recursion, reduce, and concat, but in fewer words.

- Included a compact example with output.

- While correct and clear, it lacked the granular depth and educational breakdown provided by Gemini.

Bottom line:

- Gemini is the better choice for someone learning JavaScript or recursion, as it teaches.

- Copilot is great if you already know the concepts and just want a quick refresher.

So, for depth and teaching clarity, Gemini wins this round.
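The JavaScript function is only shown in the screenshots, so here is a rough Python analogue of the same reduce-plus-recursion pattern. It is a sketch under that assumption: isinstance stands in for Array.isArray and list concatenation stands in for concat.

```python
from functools import reduce


def flatten(items: list) -> list:
    """Recursively flatten arbitrarily nested lists into one flat list."""
    return reduce(
        # Nested lists are flattened recursively; any other value is wrapped
        # in a one-element list so concatenation works in both branches.
        lambda acc, item: acc + (flatten(item) if isinstance(item, list) else [item]),
        items,
        [],  # start with an empty accumulator
    )


print(flatten([1, [2, [3, [4]], 5]]))  # [1, 2, 3, 4, 5]
```

Each recursive call handles one level of nesting, and reduce stitches the results back together in their original order.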

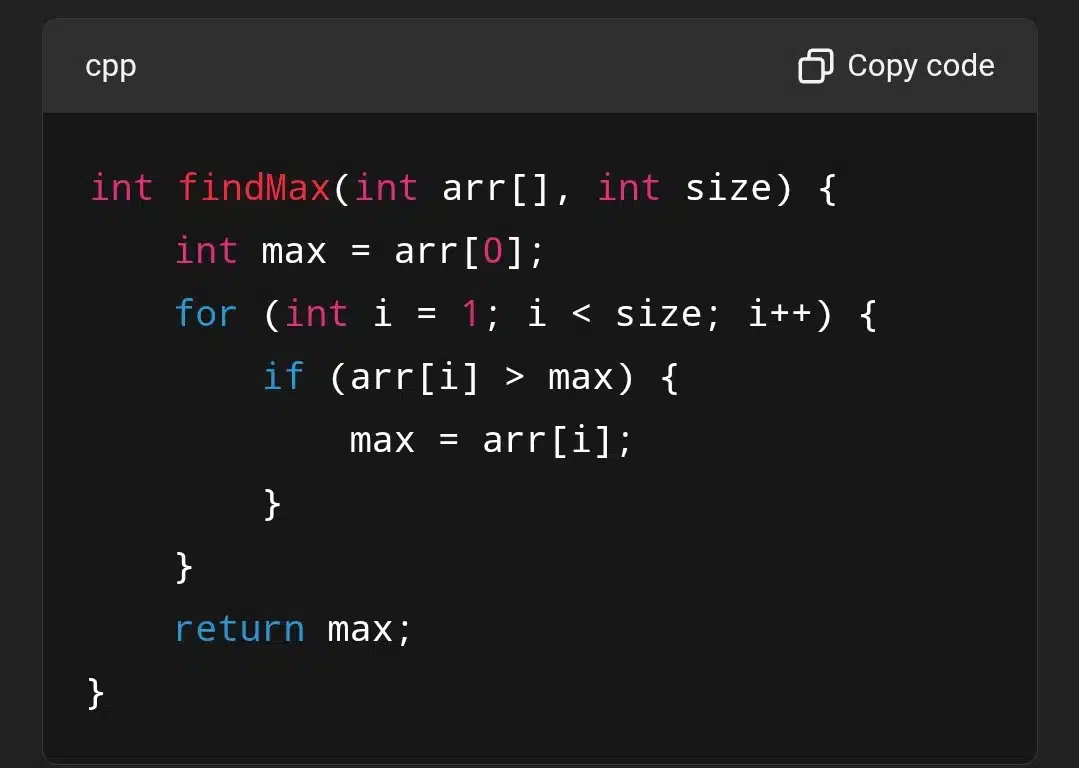

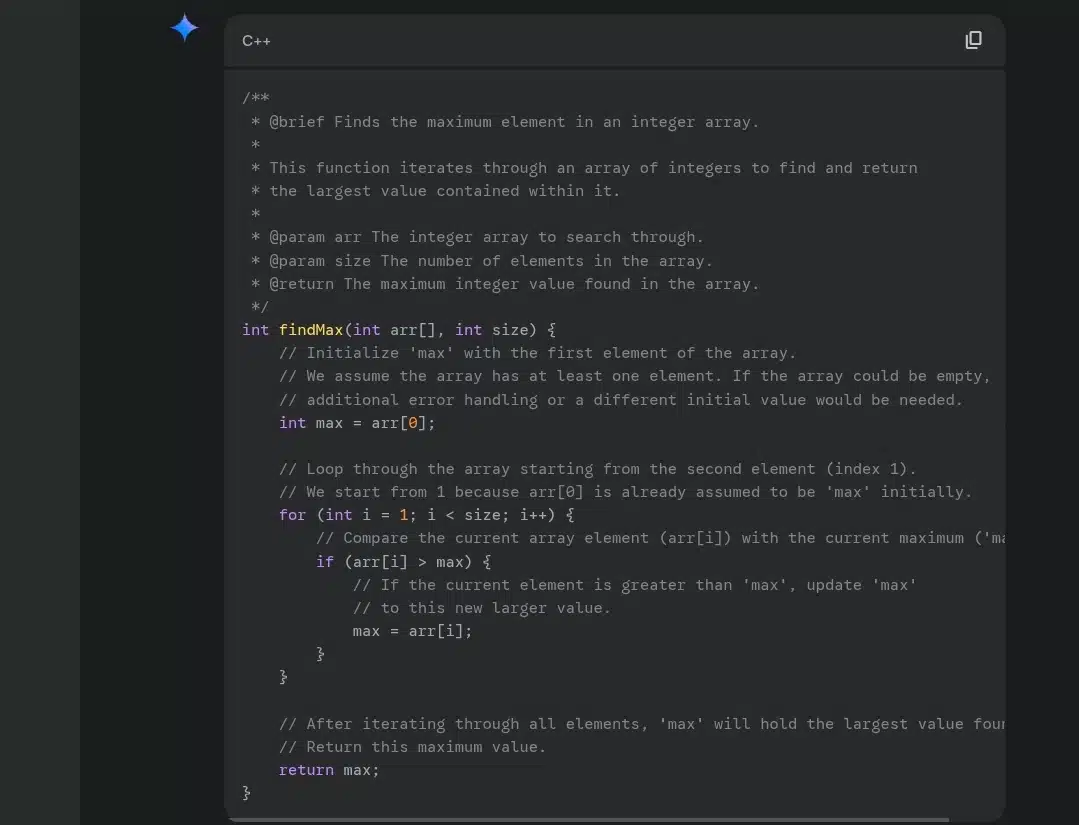

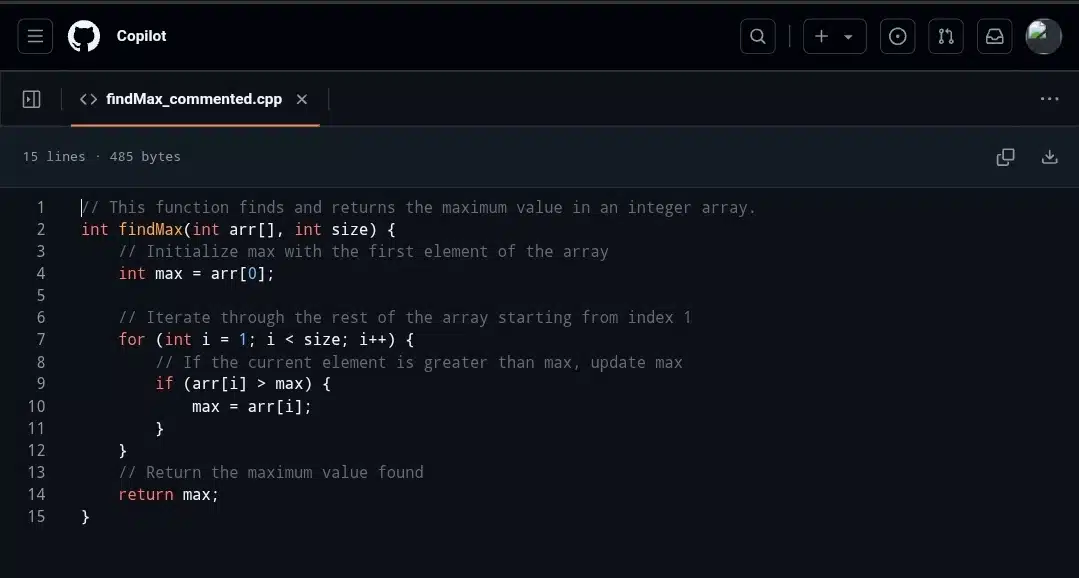

Prompt 10: Commenting on a C++ function

Prompt: “Add comments to explain what this C++ function does.”

What I’m watching out for:

- Are comments accurate and placed near relevant lines?

- Do they explain intent, not just code syntax?

- Is the tone professional and helpful for collaboration?

- Do they avoid being overly obvious (e.g., “this adds 1”)?

Gemini’s Response

- Offers detailed comments and documentation using Doxygen-style formatting.

- Breaks down the logic in a step-by-step, educational way.

- Includes assumptions (e.g., the array is not empty).

- Explains why things are done (e.g., why iteration starts at index 1).

- Ideal for students, learners, or documentation purposes.

GitHub Copilot’s Response

- Presents the core logic with basic comments.

- Uses clear, brief inline explanations.

- The code is clean and readable, but with less contextual explanation.

- Great for someone who just needs working code with basic guidance.

Verdict:

- Gemini is more thorough and better for teaching or documentation.

- Copilot is quicker and cleaner, perfect for experienced developers who don’t need the extra details.

For this task, if clarity and teaching are the goals, Gemini wins again.

Gemini vs GitHub Copilot: Which is better?

After reviewing all 10 prompts, it’s clear that Gemini and GitHub Copilot serve different types of users. Both are capable, but they shine in different ways depending on who’s using them and what they need.

Gemini’s strengths lie in clarity and teaching. Its responses are usually longer, but they explain each part of the code in detail. Gemini often breaks down logic, offers context, and highlights assumptions. This makes it a great choice for:

- Beginners trying to understand how the code works

- Writers creating educational content or documentation

- Users who want not just the code, but the why behind it

For example, when explaining a recursive flatten function or a C++ loop, Gemini doesn’t just show the syntax—it walks through the logic, points out when recursion happens, and mentions potential edge cases.

GitHub Copilot, on the other hand, is concise and more technical. It assumes the user knows how to read code and focuses on delivering quick, correct implementations with minimal explanation. This style works better for:

- Intermediate to advanced developers

- Users looking to copy and use code immediately

- Scenarios where speed and precision matter more than deep explanation

Its tone often feels like one developer talking to another. It’s great when you don’t need a walkthrough.

So who wins? It depends on your goal:

- For learning and documentation, Gemini is the better fit.

- For fast, ready-to-use code, GitHub Copilot is more effective.

Neither is objectively better; they’re just designed with different users in mind.

Wrapping up

In the end, choosing between Gemini and GitHub Copilot isn’t about which is better overall, but which is better for you.

If you’re new to coding, teaching others, or just want to understand code more deeply, Gemini’s detailed, beginner-friendly style will guide you through each step. But if you’re confident in your skills and just need clean, working code fast, GitHub Copilot delivers exactly that with minimal friction.

Both tools have their place. Think of Gemini as the helpful teacher, and Copilot as the efficient coding partner. Use the one that matches your workflow, or combine both to get the best of clarity and speed.