Key takeaways

- As content generated by artificial intelligence becomes more believable, it is important to learn how to detect it.

- You can do this with detection tools or simply with a keen sense of observation.

- But as AI gets better, detecting the faults in the content it generates is bound to get harder.

AI development is accelerating every year. Since the launch of ChatGPT in 2022, many companies have focused on creating similar technologies to stay ahead of the competition.

The result of this competition is AI-generated content similar to or just as good as what a human would create. From written content to images and videos, companies like OpenAI are pushing the boundaries of what is possible with AI.

When the company unveiled Sora, a text-to-video model, in February 2024, it left people stunned. However, as good as AI has become, there are still ways to detect AI-generated content.

Ways to detect AI-generated content

In this article, we will look at four content formats (text, audio, images, and video) and tell you how to detect if they’re AI-generated.

Some tools can help with this, but they aren’t always accurate, so you have to pair them with a keen sense of observation.

Detecting texts written by AI

In 2023, I went head-to-head with GPT-4. We both wrote an article on the same topic, and some of my colleagues could not tell which article was written by me.

This shows that AI writes well and, just like my colleagues, you might need a little help telling human-written content from AI-written content.

Here are three ways you can recognise AI-written content.

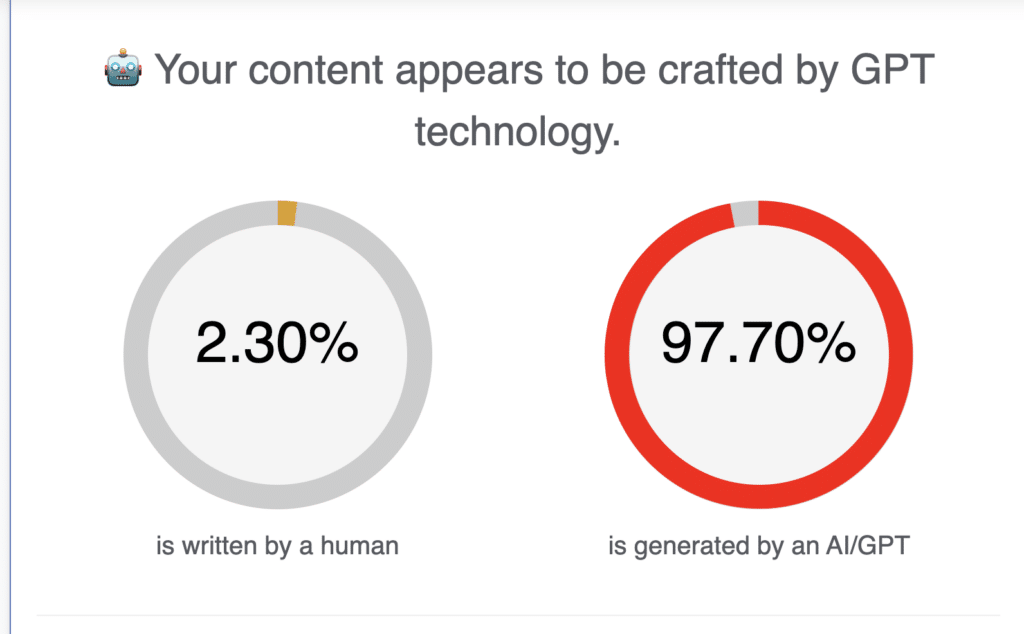

Use AI detection tools

Tools make it easier to know if an article was generated by AI. Some of these tools include ZeroGPT, Winston AI, and GLTR.

Each tool has its own unique features, but they all work on the same concept: analysing text to estimate the likelihood that it was generated by AI.

Word prediction is how AI writes text. The AI models are trained on large datasets of content such as articles and books and they generate text by predicting the next word or phrase in a sequence of words, based on the content they have been trained on.
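To make the idea of next-word prediction concrete, here is a minimal sketch in Python. It is a toy, not how ChatGPT or any detection tool actually works: it assumes a tiny made-up corpus and only looks one word back, and the names `train_bigrams`, `predict_next`, and `predictability` are invented for illustration. The last function hints at the detection idea, which is that text whose words keep matching a model's top guess looks more machine-like.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for every word, which words follow it in the training text."""
    words = corpus.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def predictability(following, text):
    """Share of words in `text` that equal the model's top prediction."""
    words = text.lower().split()
    pairs = list(zip(words, words[1:]))
    if not pairs:
        return 0.0
    hits = sum(1 for prev, nxt in pairs if predict_next(following, prev) == nxt)
    return hits / len(pairs)


# Hypothetical training text; real models learn from billions of words.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigrams(corpus)

print(predict_next(model, "the"))           # most common word after "the"
print(predictability(model, "on the cat"))  # every word matches the top guess
print(predictability(model, "the sofa"))    # a less expected word choice
```

Real language models predict from far more context than one word, so treat this only as an illustration of the principle.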

It is important to note, however, that some of these tools aren’t always accurate.

Look out for repetition

If you see an article where a keyword is repeated a lot, there’s a good chance it was written by AI. It is not clear why AI likes keyword stuffing, but it is one of the issues AI innovators haven’t figured out yet.

For example, I asked ChatGPT to explain why some countries experience snowfall and others do not. Out of the 156 words in its answer, it mentioned snow 10 times.

To be fair, human writers also do keyword stuffing, so it might be a little hard to tell if the piece of content you’re reading was generated by AI or written by an exhausted SEO writer.
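Ten mentions in 156 words works out to roughly 6.4% of the text. If you want to run the same check on any passage, the sketch below counts how often a keyword appears. The function name `keyword_density` and the sample sentence are made up for illustration, and the function counts any word that starts with the keyword (so "snowfall" counts towards "snow"), which is an assumption about what should count as a mention.

```python
import re


def keyword_density(text, keyword):
    """Return (matches, total_words, share) for words starting with `keyword`."""
    # Split into lowercase words, keeping straight and curly apostrophes inside words.
    words = re.findall(r"[a-z'’]+", text.lower())
    matches = sum(1 for word in words if word.startswith(keyword.lower()))
    share = matches / len(words) if words else 0.0
    return matches, len(words), share


# Hypothetical sample text, not the actual ChatGPT answer.
sample = "Snow falls where it is cold. Snowfall needs moisture, and snow needs freezing air."
matches, total, share = keyword_density(sample, "snow")
print(f"{matches} of {total} words ({share:.1%})")
```

A high share is only a hint, not proof, since human writers repeat keywords too.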

Check for a personal touch

AI-generated content generally lacks the personal touch that human content has. For example, earlier in this article I shared a personal experience: I competed with AI to write an article and told you how it went.

AI isn’t capable of having personal experiences or opinions, at least for now. It typically generates content from facts, usually devoid of emotion and belief.

However, considering the pace of AI innovation, things might change and these models could learn how to cook up believable experiences and emotions.

Detecting audio created by AI

AI has also improved when it comes to generating content in audio format. There have been reports of fraudsters using it to improve their scams.

Researchers at McAfee found that a person’s voice can be cloned successfully from just a three-second recording.

According to a global study by the same researchers, 77% of AI-related scams involved voice cloning.

So that you do not fall victim, here’s how you can tell the voice of AI from that of a human.

Listen for breath

Humans expel air when they speak. If you’re on the phone with someone and you can’t hear them breathing, there’s a good chance you’re speaking with an AI. Other tips are to ask questions completely unrelated to the topic the person is calling about, or to hang up and call the person back.

Odd speech patterns

Awkward pauses and a robotic flow of words are other signs of AI-generated speech. You can also listen to how slang and non-English names are pronounced.

Detecting AI-generated images

From OpenAI’s DALL-E to Midjourney, text-to-image models are creating very life-like images.

When an AI-generated image of the Pope wearing a white puffy jacket went viral, many people did not know the image was fake until news came out that it was generated using Midjourney.

Here’s how you can tell such images are fake.

Overly sharp images

AI-generated images tend to be very glossy and sharp. If it’s a close-up image of a person, the skin usually has an unnatural smoothness to it.

Abnormal or extra fingers

AI is getting better at generating fingers, but it is not yet perfect. In pictures where the subject’s fingers are visible, there is a good chance there will be too many or too few of them.

Inspect the backgrounds

AI tends to pay a lot of attention to the primary subject in a picture, which leaves the background with some mistakes. In some cases, the background is blurred to hide these mistakes.

Misspelt text

While AI is good at spelling when it writes articles, it is a different story when it comes to images. Text in AI-generated images is often distorted, misspelt, or even unreadable.

How to detect AI-generated videos

OpenAI has set the bar high for AI-generated videos with Sora. If I hadn’t been told those videos were generated by AI, I would never have known. But after listening to AI professionals scrutinise the videos, I learned what gives them away as AI-generated.

Check the C2PA metadata

To limit the spread of misinformation with Sora, OpenAI said all generated videos will include something called C2PA metadata. To check for it, go to the Content Credentials website and upload the video; the site will show any content credentials attached to it.

However, content credentials like the C2PA metadata can be removed. They are often stripped automatically when the content is posted to a social media website.
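Because this metadata travels inside the file itself, you can get a rough hint of whether it is still there before uploading anything. The sketch below is a crude heuristic, not a verifier: it assumes that an embedded C2PA manifest leaves the label bytes `c2pa` somewhere in the file, and it simply scans for them. The function name `has_c2pa_marker` is invented for illustration. It cannot tell you whether the credentials are valid or who signed them, and unrelated files may contain the same bytes by chance.

```python
def has_c2pa_marker(path, marker=b"c2pa", chunk_size=1 << 20):
    """Return True if the raw bytes of the file contain `marker`.

    This only hints that content credentials may be embedded; it does
    not parse or validate them.
    """
    overlap = len(marker) - 1
    tail = b""
    with open(path, "rb") as handle:
        while True:
            chunk = handle.read(chunk_size)
            if not chunk:
                return False
            # Prepend the end of the previous chunk so a marker that
            # straddles two chunks is still found.
            window = tail + chunk
            if marker in window:
                return True
            tail = window[-overlap:] if overlap else b""
```

If this returns `False` for a video that should carry credentials, the metadata has probably been stripped somewhere along the way. For a real check, the Content Credentials website remains the place to go.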

Look out for multiple limbs

While videos created by Sora look life-like, they still have some abnormalities such as multiple limbs.

The image below is a screenshot from a Sora video where a cat is waking its owner and the cat’s paw was duplicated.

According to Stephen Messer, co-founder of the AI sales company Collective[i], Sora is good but it hasn’t quite grasped the physics of the real world yet.

Abnormal movements

Taking a closer look at another Sora video, I discovered that some of the movements are abnormal. The woman in the screenshot below is trying to wave but her palms are closed. In another instance, cars moving on the street suddenly disappear or horses melt into the ground.

However, when it comes to animated videos, Sora makes it very hard to find faults because animated characters are expected to do unrealistic things.

While knowing the difference between real and AI-generated content is important to avoid misinformation, policies need to be created to address the dark side of AI innovation.

That is why Olumide Okubadejo, an AI Research Scientist and Head of Product at Sabi, said the government should start thinking about policies now and not later.