A few days ago, the Federal Government of Nigeria launched a project aptly called “N-Power”, which seeks to “Empower Nigerian Youths for Prosperity.” While this is a laudable initiative, the main channel for collecting applicants’ information, the website, wasn’t managed properly. The situation is reminiscent of the launch of Obama’s healthcare.gov: aside from taking an insane amount of time to load, the N-Power website crashes under pressure.


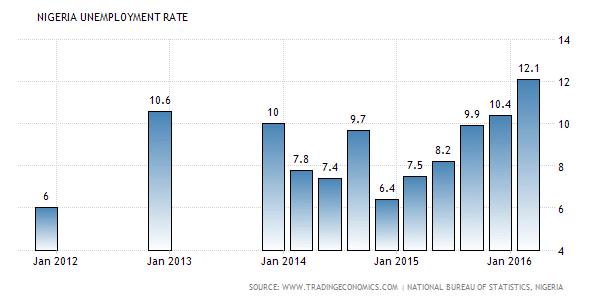

For context, the unemployment rate in Nigeria stood at a staggering 12.1% as of March 2016, up from 10.5% in the fourth quarter of 2015. For a country of approximately 180M people, these numbers give you a fair idea of how much load this website has to deal with. A record 400,000 registrations were completed in the first 36 hours after its unveiling to the general public and, according to a statement issued by the SSA to the Vice President on Media and Publicity, the website has received over 35 million visits thus far. Impressive.

While the number of successful registrations looks impressive, it pales in comparison with the nation’s unemployment numbers. The fluctuating uptime of the website is affecting registrations negatively, a problem that, if not checked soon enough, will render the project dead on arrival.

I admire the government’s effort and intention to help solve the unemployment problem, but good intentions aren’t always good enough; you have to do more. Some prospective registrants have taken to Twitter to vent their frustration at the inaccessibility of the website. The whole purpose of this exercise is defeated if people can’t use it.

it seems the FGN’s @Galaxybackbone does not have the know-how of getting a high traffic site to work https://t.co/HtTZotuDz4 @channelstv

— Day.0 (@dedayoa) June 15, 2016

https://twitter.com/ProgressivesG/status/743075692276002817

@bukolasaraki pls sir help us, https://t.co/YJQOIHCP4w is not responding, we need your help sir, please sir help us.

— Owoseni Samuel (@OwoseniSamuel) June 15, 2016

https://twitter.com/umaraliyumusa/status/742899255933816833

Below are a few thoughts of mine, from a technical and product perspective, on how the website could have been better managed.

A website of this nature is guaranteed to have hundreds of thousands of visitors, so proper load and stress testing should be part of its development life cycle. There are lots of tools that can help with this: Apache Benchmark, Locust and Siege, just to mention a few. On top of that, the average response time of this website is about 70 seconds. This is unacceptable.

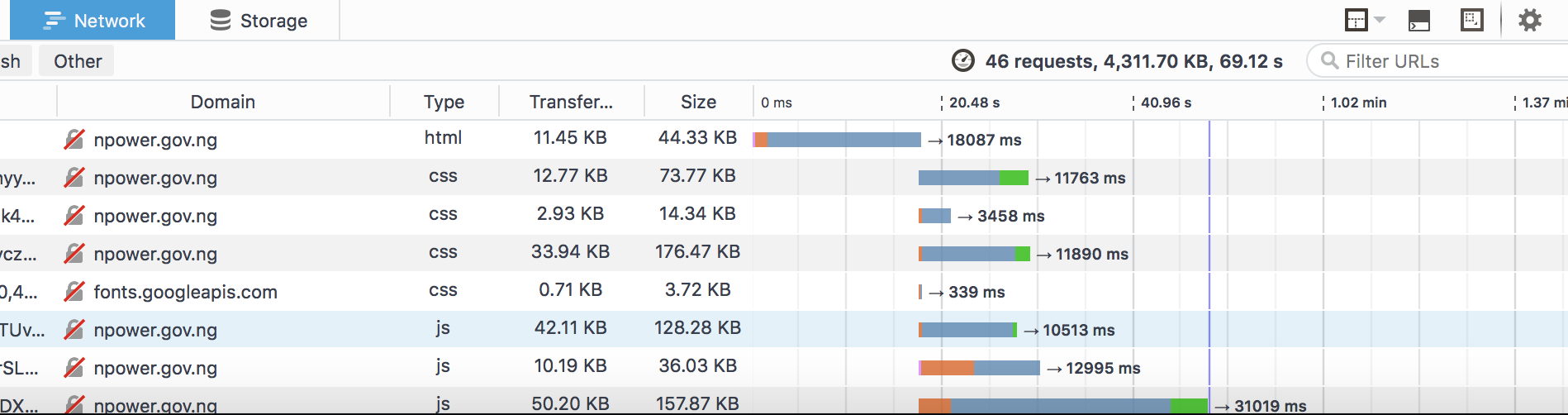
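To give a feel for what a load test actually measures, here is a minimal sketch using only Python’s standard library. It is not how Locust, Siege or Apache Benchmark work internally, and the names `run_load_test` and `fake_request` are hypothetical ones I made up for illustration. `fake_request` just sleeps to simulate server work; it does not touch any real website.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import median


def run_load_test(request_fn, total_requests=100, concurrency=10):
    """Call request_fn total_requests times from `concurrency` worker threads.

    request_fn is any zero-argument callable that performs one request and
    raises an exception on failure. Returns a summary dictionary.
    """
    def timed_call(_):
        start = time.perf_counter()
        try:
            request_fn()
            ok = True
        except Exception:
            ok = False
        return ok, time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(total_requests)))

    latencies = sorted(elapsed for _, elapsed in results)
    failures = sum(1 for ok, _ in results if not ok)
    # Approximate 95th percentile: most requests finished within this time.
    p95_index = min(len(latencies) - 1, int(0.95 * len(latencies)))
    return {
        "requests": total_requests,
        "failures": failures,
        "median_s": median(latencies),
        "p95_s": latencies[p95_index],
        "max_s": latencies[-1],
    }


def fake_request():
    """Hypothetical stand-in for an HTTP call (assumption, not a real endpoint)."""
    time.sleep(0.01)


if __name__ == "__main__":
    print(run_load_test(fake_request, total_requests=50, concurrency=5))
```

In practice you would replace `fake_request` with a function that fetches a page from a staging copy of the site, then raise `concurrency` step by step until the failure count or the 95th percentile latency becomes unacceptable.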

Static content (JavaScript, CSS and images) could have been better managed. Combining the stylesheets into a single file, and the JavaScript into another, would improve the response time by reducing the number of round trips. The many tiny images on the website could have been combined into a sprite; there is no point in loading the stakeholders’ logos individually. This would further reduce the round trips and create a better experience. Besides minifying the static content, serving it through a CDN (content delivery network) is something that should really be considered. As it stands, it takes a total of 46 round trips and 5MB of content to get the entire website to display.

Network request of the N-Power website
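The idea of combining stylesheets into one file can be sketched in a few lines of Python. This is only an illustration under my own assumptions: `bundle_files` is a hypothetical helper, the file names are made up, and the sketch concatenates files without minifying them (a real build would add a minifier). Naming the bundle after a hash of its content lets a CDN or browser cache it for a long time, since the name changes whenever the content does.

```python
import hashlib
import tempfile
from pathlib import Path


def bundle_files(sources, out_dir, prefix, extension, separator="\n"):
    """Concatenate text assets into one file named after its content hash.

    Use separator=";\n" for JavaScript so statements from one file do not
    run into the next. Returns the path of the bundle that was written.
    """
    combined = separator.join(
        Path(src).read_text(encoding="utf-8") for src in sources
    )
    digest = hashlib.sha256(combined.encode("utf-8")).hexdigest()[:12]
    out_path = Path(out_dir) / f"{prefix}.{digest}.{extension}"
    out_path.parent.mkdir(parents=True, exist_ok=True)
    out_path.write_text(combined, encoding="utf-8")
    return out_path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Two made-up stylesheets standing in for the site's real ones.
        layout = Path(tmp) / "layout.css"
        theme = Path(tmp) / "theme.css"
        layout.write_text("body { margin: 0; }", encoding="utf-8")
        theme.write_text("h1 { color: green; }", encoding="utf-8")

        bundle = bundle_files([layout, theme], Path(tmp) / "dist", "site", "css")
        # Two requests become one: the page links only to the bundle.
        print(bundle.name)
```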


High availability is non-negotiable for a website of this nature; the failure of a single node shouldn’t take down the entire website. A load balancer (HAProxy, Amazon’s ELB) could have been used here, with all the web nodes sitting behind it. This would help spread the load across multiple machines, leading to a more resilient website.
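To make the load balancing idea concrete, here is a toy round-robin balancer in Python. It is a sketch of the general technique, not of how HAProxy or ELB are implemented, and the class name `RoundRobinBalancer` and node names like `web-1` are hypothetical. The point is simply that requests rotate across nodes, and a node marked as down is skipped instead of taking the site with it.

```python
import itertools


class RoundRobinBalancer:
    """Rotate requests across web nodes, skipping any marked unhealthy."""

    def __init__(self, nodes):
        if not nodes:
            raise ValueError("at least one node is required")
        self._nodes = list(nodes)
        self._healthy = set(self._nodes)
        self._cycle = itertools.cycle(self._nodes)

    def mark_down(self, node):
        """Record that a health check failed for this node."""
        self._healthy.discard(node)

    def mark_up(self, node):
        """Record that a known node has recovered."""
        if node in self._nodes:
            self._healthy.add(node)

    def next_node(self):
        """Return the next healthy node in rotation."""
        if not self._healthy:
            raise RuntimeError("no healthy nodes available")
        # One full pass over the rotation is enough to find a healthy node.
        for _ in range(len(self._nodes)):
            node = next(self._cycle)
            if node in self._healthy:
                return node
        raise RuntimeError("no healthy nodes available")


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["web-1", "web-2", "web-3"])
    print([balancer.next_node() for _ in range(3)])
    balancer.mark_down("web-2")  # simulate one node failing
    print([balancer.next_node() for _ in range(4)])
```

A real load balancer would run the health checks itself, on a timer, and call the equivalent of `mark_down` and `mark_up` automatically.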

Depending on the architecture, putting the web server and the database on the same machine isn’t a wise decision; nothing creates a single point of failure quite like this setup. Separating the web node(s) from the database is almost always advisable. And always replicate the master database. Always.

Frequently accessed pages should be cached; depending on the content type, a tool like Varnish is highly recommended. Expensive queries should be cached as well, and Redis and Memcached are great candidates for this task. And don’t forget to index the database tables. I can’t overemphasise the upside of this.
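Caching an expensive query usually follows the same pattern regardless of the tool: look in the cache first, and only hit the database on a miss. The sketch below shows that pattern with an in-memory dictionary and a time-to-live. It is an assumption-laden stand-in for Redis or Memcached, not their API; `TTLCache`, `get_or_compute` and `count_registrations` are hypothetical names.

```python
import time


class TTLCache:
    """In-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._store = {}     # key -> (expires_at, value)

    def get_or_compute(self, key, compute):
        """Return the cached value for key, calling compute() only on a miss."""
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]
        value = compute()
        self._store[key] = (now + self._ttl, value)
        return value


if __name__ == "__main__":
    calls = {"count": 0}

    def count_registrations():
        """Hypothetical expensive query; here it only counts its own calls."""
        calls["count"] += 1
        time.sleep(0.05)  # pretend the database is slow
        return 400_000

    cache = TTLCache(ttl_seconds=60)
    for _ in range(1000):
        cache.get_or_compute("registration_count", count_registrations)
    print(calls["count"])  # the slow query ran once for 1000 page views
```

The trade-off is staleness: with a 60 second TTL, a visitor may see a figure that is up to a minute old, which is usually fine for a public counter and not fine for an applicant’s own submission status.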

Monitor and measure everything; high CPU usage should trigger an alert to the responsible parties. New Relic is an excellent server monitoring tool, and for an open source equivalent, Nagios comes to mind. The rule of the game here is measure and monitor.
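The alerting rule itself is simple enough to sketch. The example below is my own illustration, not how New Relic or Nagios work internally: `SustainedThresholdAlert` is a hypothetical class, and the 90% threshold and three-sample window are assumptions. It fires only when every sample in the window is above the threshold, so a single momentary spike does not page anyone.

```python
from collections import deque


class SustainedThresholdAlert:
    """Notify once when a metric stays above a threshold for a full window."""

    def __init__(self, threshold, window, notify):
        self._threshold = threshold
        self._samples = deque(maxlen=window)  # keeps only the latest samples
        self._notify = notify
        self._firing = False

    def record(self, value):
        """Add one sample and notify if the breach has just become sustained."""
        self._samples.append(value)
        window_full = len(self._samples) == self._samples.maxlen
        breached = window_full and all(s > self._threshold for s in self._samples)
        if breached and not self._firing:
            self._firing = True
            self._notify(f"metric above {self._threshold} for {len(self._samples)} samples")
        elif not breached:
            # Recovery re-arms the alert so the next incident notifies again.
            self._firing = False


if __name__ == "__main__":
    alert = SustainedThresholdAlert(threshold=90, window=3, notify=print)
    # Made-up CPU percentages; a real agent would sample the server.
    for cpu_percent in [95, 40, 92, 96, 99, 98]:
        alert.record(cpu_percent)
```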

In a world where cyber security is becoming a major issue, login and registration pages must have SSL enabled. This is something I found lacking on the website.

I’d love to hear from you. Want to say hello? Email me

This post first appeared on Celestine’s Medium.